Kathrin Grosse


Papers from this author

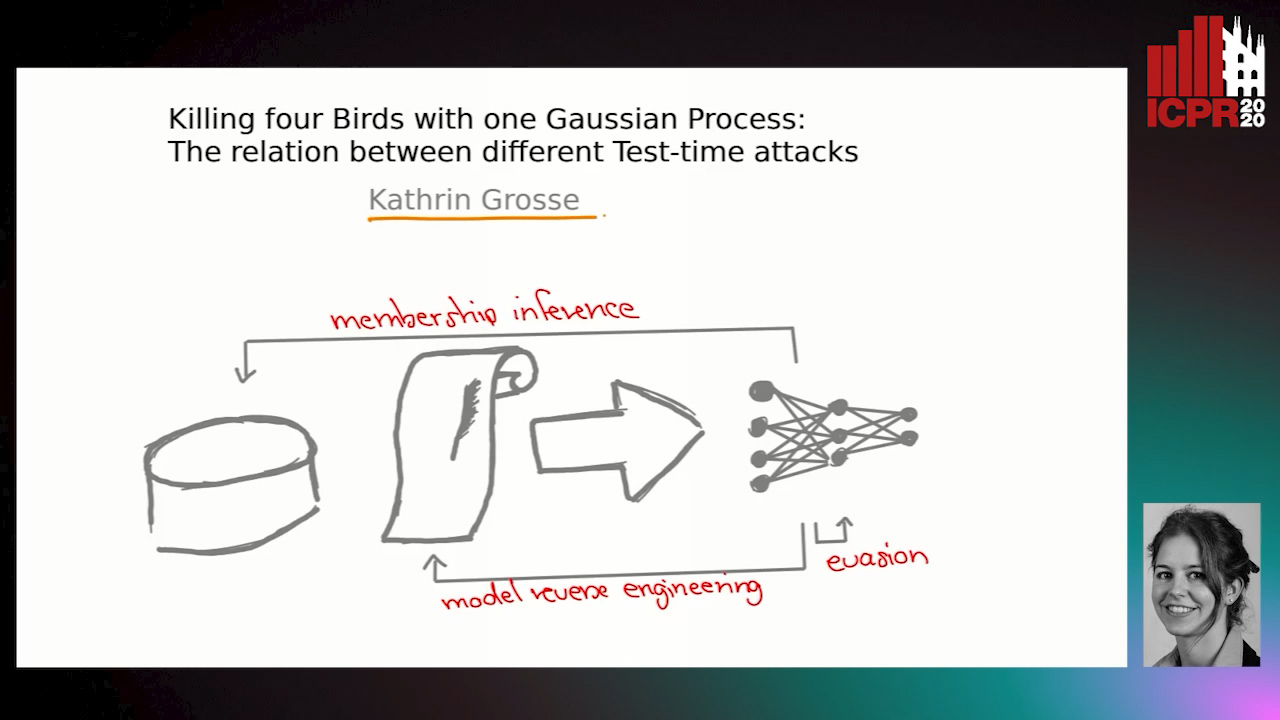

Killing Four Birds with One Gaussian Process: The Relation between Different Test-Time Attacks

Kathrin Grosse, Michael Thomas Smith, Michael Backes

Auto-TLDR; Security of Gaussian Process Classifiers against Attack Algorithms


In machine learning (ML) security, attacks like evasion, model stealing or membership inference are generally studied individually. Previous work has also shown a relationship between some attacks and the decision function curvature of the targeted model. Consequently, we study an ML model that allows direct control over the decision surface curvature: Gaussian Process classifiers (GPCs). For evasion, we find that changing a GPC's curvature to be robust against one attack algorithm boils down to enabling a different norm or attack algorithm to succeed. This is backed up by our formal analysis showing that static security guarantees are opposed to learning. Concerning intellectual property, we show formally that lazy learning does not necessarily leak all information when applied. In practice, a seemingly secure curvature can often be found. For example, we are able to secure a GPC against empirical membership inference by proper configuration. In this configuration, however, the GPC's hyper-parameters are leaked, i.e., model reverse engineering succeeds. We conclude that attacks on classification should not be studied in isolation, but in relation to each other.