Jia Huei Tan

Paper download is intended for registered attendees only, and is subject to the IEEE Copyright Policy. Any other use is strongly forbidden.

Papers from this author

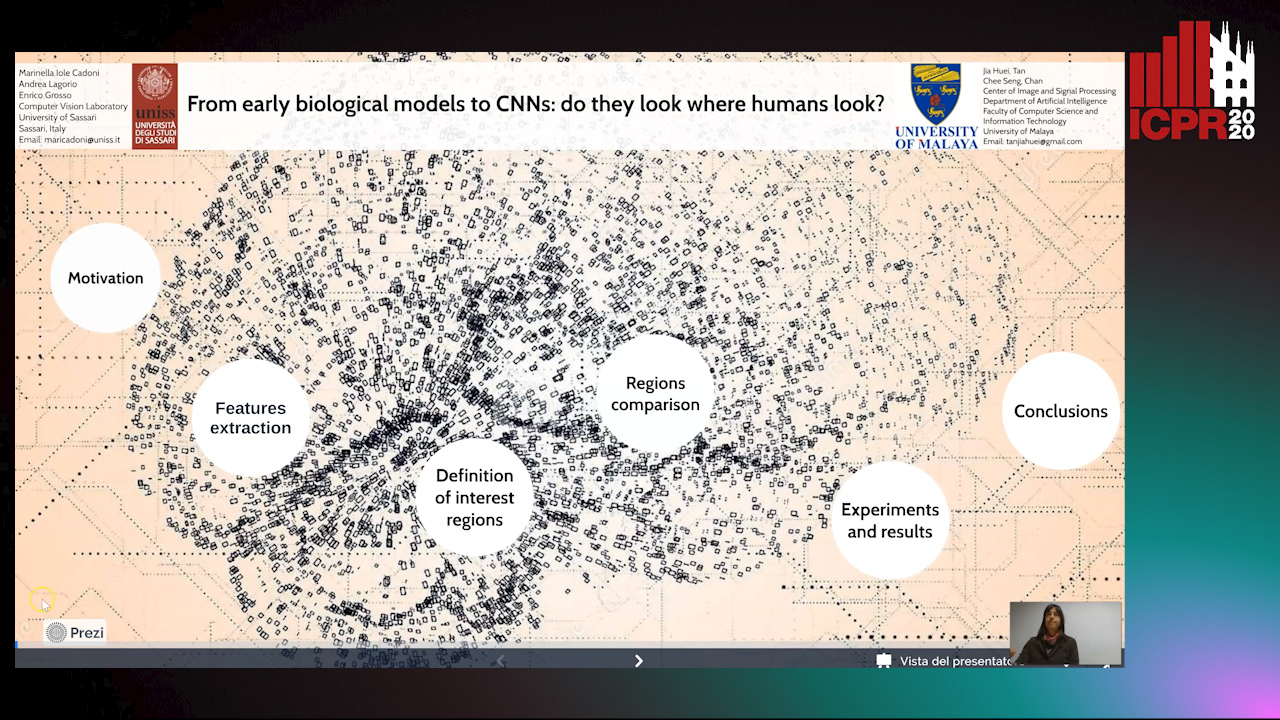

From Early Biological Models to CNNs: Do They Look Where Humans Look?

Marinella Iole Cadoni, Andrea Lagorio, Enrico Grosso, Jia Huei Tan, Chee Seng Chan

Auto-TLDR; Comparing Neural Networks to Human Fixations for Semantic Learning


Early hierarchical computational visual models, as well as recent deep neural networks, have been inspired by the functioning of the primate visual cortex. Although much effort has been made to dissect neural networks and visualize the features learned by individual units, the scope of these visualizations has been limited to categorizing the features by semantic level. Given the human ability to select regions of a scene with a high semantic level, two questions naturally arise: can neural networks match this ability, and is similarity to human attention correlated with network performance? To address these questions, we propose a pipeline that selects sets of feature points that maximally activate individual network units and compares them to human fixations. We extract features from a variety of neural networks, from early hierarchical models such as HMAX up to recent deep convolutional neural networks such as DenseNet, and compare them to human fixations. Experiments on the ETD database show that human fixations correlate with CNN features from deep layers significantly better than with random sets of points, whereas they do not with features extracted from the first layers of CNNs or with HMAX features, which appear to have a low semantic level compared with the features that respond to the automatically learned filters of CNNs. It also turns out that a CNN's similarity to human fixations correlates with its classification performance.
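The comparison described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not state which similarity measure is used, so the mean distance to the nearest fixation is an assumed stand-in, and the function names `top_activation_points`, `mean_nearest_distance`, and `random_baseline` are hypothetical. The sketch takes the strongest activations of one unit's 2D feature map, maps them to image coordinates, and scores them against human fixations alongside a random-point baseline.

```python
import numpy as np

def top_activation_points(feature_map, k, image_shape):
    """Image coordinates (row, col) of the k strongest activations of a 2D feature map."""
    fh, fw = feature_map.shape
    # Indices of the k largest values in the flattened map.
    flat_idx = np.argsort(feature_map, axis=None)[-k:]
    rows, cols = np.unravel_index(flat_idx, (fh, fw))
    # Map feature-map cell centers back to image coordinates (assumes a uniform stride).
    scale_r = image_shape[0] / fh
    scale_c = image_shape[1] / fw
    return np.stack([(rows + 0.5) * scale_r, (cols + 0.5) * scale_c], axis=1)

def mean_nearest_distance(points, fixations):
    """Assumed similarity measure: mean distance from each point to its nearest fixation."""
    diffs = points[:, None, :] - fixations[None, :, :]
    dists = np.linalg.norm(diffs, axis=2)
    return dists.min(axis=1).mean()

def random_baseline(n_points, image_shape, fixations, n_trials, rng):
    """Scores of uniformly random point sets, for comparison with the feature points."""
    scores = np.empty(n_trials)
    for t in range(n_trials):
        pts = rng.uniform([0, 0], image_shape, size=(n_points, 2))
        scores[t] = mean_nearest_distance(pts, fixations)
    return scores
```

Under this assumed measure, a layer whose feature points score a clearly lower mean distance than the random baseline would be the one whose features lie closer to where humans look.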