Mohammad Adiban

Paper download is intended for registered attendees only and is subject to the IEEE Copyright Policy. Any other use is strictly forbidden.

Papers from this author

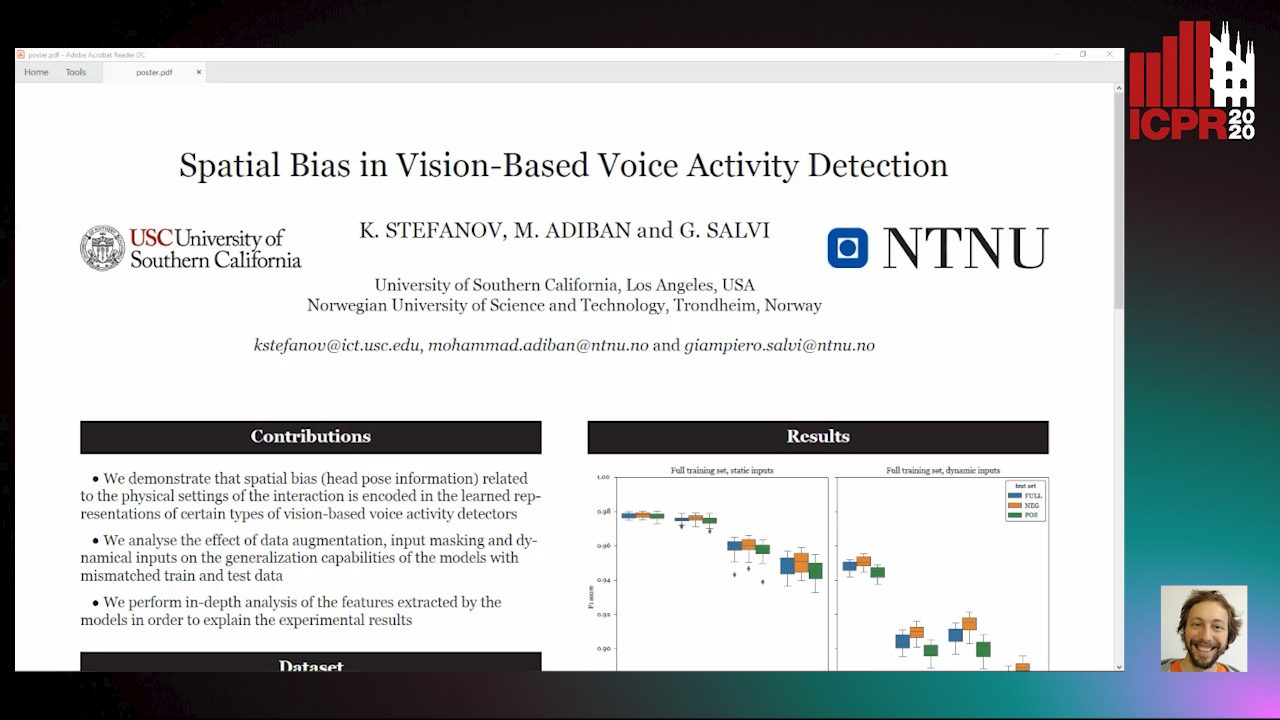

Spatial Bias in Vision-Based Voice Activity Detection

Kalin Stefanov, Mohammad Adiban, Giampiero Salvi

Auto-TLDR; Spatial Bias in Vision-based Voice Activity Detection in Multiparty Human-Human Interactions

We present models for automatic vision-based voice activity detection (VAD) in multiparty human-human interactions that are intended to complement acoustic VAD methods. We provide evidence that vision-based VAD models of this type are susceptible to spatial bias in the datasets. The physical setting of the interaction, usually constant throughout data acquisition, determines the distribution of the participants' head poses. Our results show that when the head pose distributions differ significantly between the training and test sets, the performance of the models drops significantly. This suggests that previously reported results on datasets with a fixed physical configuration may overestimate the generalization capabilities of models of this type. We also propose a number of possible remedies for the spatial bias, including data augmentation, input masking and dynamic features, and provide an in-depth analysis of the visual cues used by our models.
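The abstract names three remedies without giving details, so the following is only a minimal sketch of what each could look like on grayscale frames stored as nested lists. Every function name and design choice here is an assumption made for illustration (horizontal mirroring as the augmentation, a rectangular mask, and consecutive-frame differences as the dynamic features); none of it is taken from the paper.

```python
# Illustrative sketch only: names and choices are assumptions,
# not the authors' implementation.

def flip_horizontal(frame):
    """Data augmentation (assumed form): mirror a frame left-to-right."""
    return [row[::-1] for row in frame]


def mask_region(frame, top, bottom, left, right, fill=0):
    """Input masking (assumed form): overwrite rows [top, bottom) and
    columns [left, right) with a constant fill value."""
    return [
        [fill if top <= r < bottom and left <= c < right else value
         for c, value in enumerate(row)]
        for r, row in enumerate(frame)
    ]


def frame_differences(frames):
    """Dynamic features (assumed form): per-pixel difference between each
    pair of consecutive frames, so static appearance cancels out."""
    return [
        [[b - a for a, b in zip(row_prev, row_next)]
         for row_prev, row_next in zip(prev, nxt)]
        for prev, nxt in zip(frames, frames[1:])
    ]
```

Under these assumptions, mirroring would vary the apparent head orientation seen during training, masking would hide image regions the model might otherwise rely on, and frame differences would emphasize motion over static pose. Whether any of these matches the paper's actual procedure cannot be determined from the abstract alone.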