Ulrik Beierholm

Paper download is intended for registered attendees only, and is subject to the IEEE Copyright Policy. Any other use is strongly forbidden.

Papers from this author

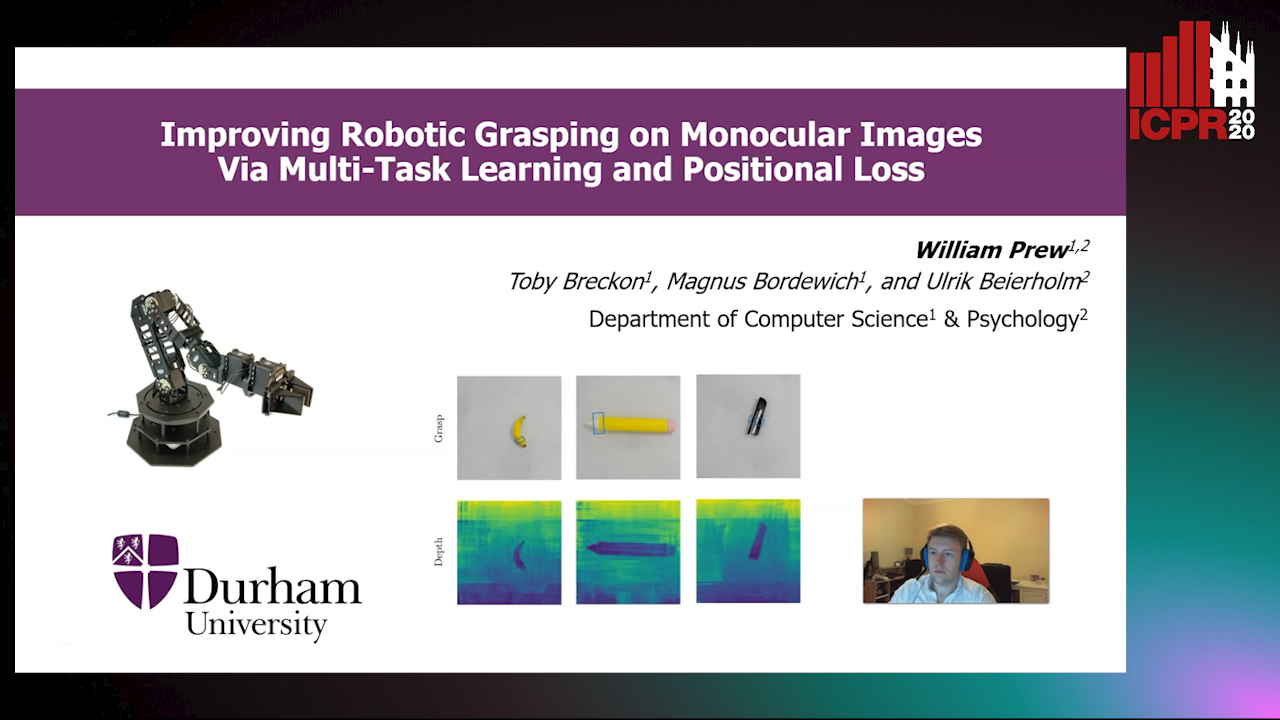

Improving Robotic Grasping on Monocular Images Via Multi-Task Learning and Positional Loss

William Prew, Toby Breckon, Magnus Bordewich, Ulrik Beierholm

Auto-TLDR; Improving grasping performance from monocular colour images in an end-to-end CNN architecture with multi-task learning


In this paper we introduce two methods of improving real-time object grasping performance from monocular colour images in an end-to-end CNN architecture. The first is the addition of an auxiliary task during model training (multi-task learning). Our multi-task CNN model improves grasping performance from a baseline average of 72.04% to 78.14% on the large Jacquard grasping dataset when performing a supplementary depth reconstruction task. The second is introducing a positional loss function that emphasises loss per pixel for secondary parameters (gripper angle and width) only on points of an object where a successful grasp can take place. This increases performance from a baseline average of 72.04% to 78.92% as well as reducing the number of training epochs required. These methods can also be performed in tandem, resulting in a further performance increase to 79.12%, while maintaining sufficient inference speed to enable processing at 50 FPS.
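
The abstract does not give the exact form of either loss, so the following is only a minimal sketch of how the two ideas might be combined. It assumes the network outputs per-pixel maps for grasp quality, gripper angle, gripper width and reconstructed depth, that mean squared error is used throughout, and that "points where a successful grasp can take place" are the pixels with non-zero ground-truth quality. The names `masked_mse`, `combined_loss` and `depth_weight`, and the dictionary keys, are hypothetical and not taken from the paper.

```python
import numpy as np


def mse(pred, target):
    """Plain mean squared error over every pixel."""
    return float(np.mean((pred - target) ** 2))


def masked_mse(pred, target, mask):
    """Mean squared error over the pixels where mask is non-zero.

    Returns 0.0 when the mask selects no pixels, so an image without
    any graspable point contributes nothing for this term.
    """
    mask = mask.astype(pred.dtype)
    n = mask.sum()
    if n == 0:
        return 0.0
    return float((((pred - target) ** 2) * mask).sum() / n)


def combined_loss(pred, target, depth_weight=1.0):
    """Assumed combination of a positional loss and an auxiliary depth task.

    pred and target are dicts of equally shaped 2-D arrays with the
    (hypothetical) keys "quality", "angle", "width" and "depth".
    """
    # Assumption: a pixel is graspable if its ground-truth quality is > 0.
    grasp_mask = target["quality"] > 0

    # Grasp quality is supervised on every pixel.
    quality = mse(pred["quality"], target["quality"])

    # Positional loss: angle and width are only penalised on graspable pixels.
    angle = masked_mse(pred["angle"], target["angle"], grasp_mask)
    width = masked_mse(pred["width"], target["width"], grasp_mask)

    # Multi-task term: auxiliary depth reconstruction, weighted by depth_weight.
    depth = mse(pred["depth"], target["depth"])

    return quality + angle + width + depth_weight * depth
```

Under these assumptions, an angle error on a background pixel leaves the loss unchanged, while the same error on a graspable pixel is averaged only over the graspable pixels rather than over the whole image, which is one way to read "emphasises loss per pixel" in the abstract.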