Audio-Based Near-Duplicate Video Retrieval with Audio Similarity Learning

Pavlos Avgoustinakis, Giorgos Kordopatis-Zilos, Symeon Papadopoulos, Andreas L. Symeonidis, Ioannis Kompatsiaris

Auto-TLDR; AuSiL: Audio Similarity Learning for Near-duplicate Video Retrieval

Similar papers

Attention-Based Deep Metric Learning for Near-Duplicate Video Retrieval

Kuan-Hsun Wang, Chia Chun Cheng, Yi-Ling Chen, Yale Song, Shang-Hong Lai

Auto-TLDR; Attention-based Deep Metric Learning for Near-duplicate Video Retrieval

ESResNet: Environmental Sound Classification Based on Visual Domain Models

Andrey Guzhov, Federico Raue, Jörn Hees, Andreas Dengel

Auto-TLDR; Environmental Sound Classification with Short-Time Fourier Transform Spectrograms

Ballroom Dance Recognition from Audio Recordings

Tomas Pavlin, Jan Cech, Jiri Matas

Auto-TLDR; A CNN-based approach to classify ballroom dances given audio recordings

Exploiting Local Indexing and Deep Feature Confidence Scores for Fast Image-To-Video Search

Savas Ozkan, Gözde Bozdağı Akar

Auto-TLDR; Fast and Robust Image-to-Video Retrieval Using Local and Global Descriptors

Which are the factors affecting the performance of audio surveillance systems?

Antonio Greco, Antonio Roberto, Alessia Saggese, Mario Vento

Auto-TLDR; Sound Event Recognition Using Convolutional Neural Networks and Visual Representations on MIVIA Audio Events

Unsupervised Co-Segmentation for Athlete Movements and Live Commentaries Using Crossmodal Temporal Proximity

Yasunori Ohishi, Yuki Tanaka, Kunio Kashino

Auto-TLDR; A guided attention scheme for audio-visual co-segmentation

DenseRecognition of Spoken Languages

Jaybrata Chakraborty, Bappaditya Chakraborty, Ujjwal Bhattacharya

Auto-TLDR; DenseNet: A Dense Convolutional Network Architecture for Speech Recognition in Indian Languages

AttendAffectNet: Self-Attention Based Networks for Predicting Affective Responses from Movies

Thi Phuong Thao Ha, Bt Balamurali, Herremans Dorien, Roig Gemma

Auto-TLDR; AttendAffectNet: A Self-Attention Based Network for Emotion Prediction from Movies

Video Face Manipulation Detection through Ensemble of CNNs

Nicolo Bonettini, Edoardo Daniele Cannas, Sara Mandelli, Luca Bondi, Paolo Bestagini, Stefano Tubaro

Auto-TLDR; Face Manipulation Detection in Video Sequences Using Convolutional Neural Networks

The Effect of Spectrogram Reconstruction on Automatic Music Transcription: An Alternative Approach to Improve Transcription Accuracy

Kin Wai Cheuk, Yin-Jyun Luo, Emmanouil Benetos, Herremans Dorien

Auto-TLDR; Exploring the effect of spectrogram reconstruction loss on automatic music transcription

Hybrid Network for End-To-End Text-Independent Speaker Identification

Wajdi Ghezaiel, Luc Brun, Olivier Lezoray

Auto-TLDR; Text-Independent Speaker Identification with Scattering Wavelet Network and Convolutional Neural Networks

Rotation Invariant Aerial Image Retrieval with Group Convolutional Metric Learning

Hyunseung Chung, Woo-Jeoung Nam, Seong-Whan Lee

Auto-TLDR; Robust Remote Sensing Image Retrieval Using Group Convolution with Attention Mechanism and Metric Learning

Text Synopsis Generation for Egocentric Videos

Aidean Sharghi, Niels Lobo, Mubarak Shah

Auto-TLDR; Egocentric Video Summarization Using Multi-task Learning for End-to-End Learning

Generalized Local Attention Pooling for Deep Metric Learning

Carlos Roig Mari, David Varas, Issey Masuda, Juan Carlos Riveiro, Elisenda Bou-Balust

Auto-TLDR; Generalized Local Attention Pooling for Deep Metric Learning

RMS-Net: Regression and Masking for Soccer Event Spotting

Matteo Tomei, Lorenzo Baraldi, Simone Calderara, Simone Bronzin, Rita Cucchiara

Auto-TLDR; An Action Spotting Network for Soccer Videos

One-Shot Learning for Acoustic Identification of Bird Species in Non-Stationary Environments

Michelangelo Acconcjaioco, Stavros Ntalampiras

Auto-TLDR; One-shot Learning in the Bioacoustics Domain using Siamese Neural Networks

End-To-End Triplet Loss Based Emotion Embedding System for Speech Emotion Recognition

Puneet Kumar, Sidharth Jain, Balasubramanian Raman, Partha Pratim Roy, Masakazu Iwamura

Auto-TLDR; End-to-End Neural Embedding System for Speech Emotion Recognition

Abstract Slides Poster Similar

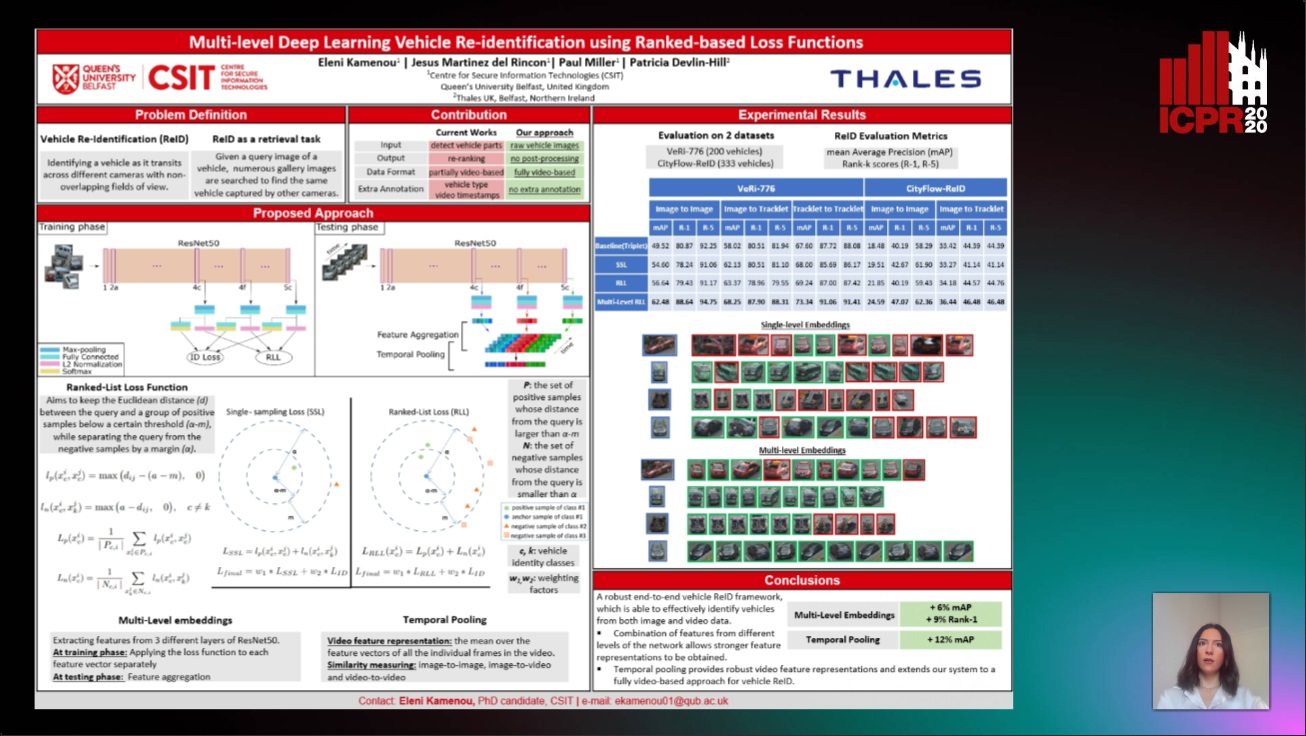

Multi-Level Deep Learning Vehicle Re-Identification Using Ranked-Based Loss Functions

Eleni Kamenou, Jesus Martinez-Del-Rincon, Paul Miller, Patricia Devlin-Hill

Auto-TLDR; Multi-Level Re-identification Network for Vehicle Re-Identification

Supporting Skin Lesion Diagnosis with Content-Based Image Retrieval

Stefano Allegretti, Federico Bolelli, Federico Pollastri, Sabrina Longhitano, Giovanni Pellacani, Costantino Grana

Auto-TLDR; Skin Images Retrieval Using Convolutional Neural Networks for Skin Lesion Classification and Segmentation

Feature Engineering and Stacked Echo State Networks for Musical Onset Detection

Peter Steiner, Azarakhsh Jalalvand, Simon Stone, Peter Birkholz

Auto-TLDR; Echo State Networks for Onset Detection in Music Analysis

Not 3D Re-ID: Simple Single Stream 2D Convolution for Robust Video Re-Identification

Auto-TLDR; ResNet50-IBN for Video-based Person Re-Identification using Single Stream 2D Convolution Network

Hierarchical Multimodal Attention for Deep Video Summarization

Melissa Sanabria, Frederic Precioso, Thomas Menguy

Auto-TLDR; Automatic Summarization of Professional Soccer Matches Using Event-Stream Data and Multi-Instance Learning

Adaptive L2 Regularization in Person Re-Identification

Xingyang Ni, Liang Fang, Heikki Juhani Huttunen

Auto-TLDR; AdaptiveReID: Adaptive L2 Regularization for Person Re-identification

Learning Visual Voice Activity Detection with an Automatically Annotated Dataset

Stéphane Lathuiliere, Pablo Mesejo, Radu Horaud

Auto-TLDR; Deep Visual Voice Activity Detection with Optical Flow

Mood Detection Analyzing Lyrics and Audio Signal Based on Deep Learning Architectures

Konstantinos Pyrovolakis, Paraskevi Tzouveli, Giorgos Stamou

Auto-TLDR; Automated Music Mood Detection using Music Information Retrieval

Learning Neural Textual Representations for Citation Recommendation

Thanh Binh Kieu, Inigo Jauregi Unanue, Son Bao Pham, Xuan-Hieu Phan, M. Piccardi

Auto-TLDR; Sentence-BERT cascaded with Siamese and triplet networks for citation recommendation

Hierarchical Deep Hashing for Fast Large Scale Image Retrieval

Yongfei Zhang, Cheng Peng, Zhang Jingtao, Xianglong Liu, Shiliang Pu, Changhuai Chen

Auto-TLDR; Hierarchical indexed deep hashing for fast large scale image retrieval

Deep Composer: A Hash-Based Duplicative Neural Network for Generating Multi-Instrument Songs

Jacob Galajda, Brandon Royal, Kien Hua

Auto-TLDR; Deep Composer for Intelligence Duplication

On Identification and Retrieval of Near-Duplicate Biological Images: A New Dataset and Protocol

Thomas E. Koker, Sai Spandana Chintapalli, San Wang, Blake A. Talbot, Daniel Wainstock, Marcelo Cicconet, Mary C. Walsh

Auto-TLDR; BINDER: Bio-Image Near-Duplicate Examples Repository for Image Identification and Retrieval

Audio-Visual Speech Recognition Using a Two-Step Feature Fusion Strategy

Auto-TLDR; A Two-Step Feature Fusion Network for Speech Recognition

Automated Whiteboard Lecture Video Summarization by Content Region Detection and Representation

Bhargava Urala Kota, Alexander Stone, Kenny Davila, Srirangaraj Setlur, Venu Govindaraju

Auto-TLDR; A Framework for Summarizing Whiteboard Lecture Videos Using Feature Representations of Handwritten Content Regions

Influence of Event Duration on Automatic Wheeze Classification

Bruno M Rocha, Diogo Pessoa, Alda Marques, Paulo Carvalho, Rui Pedro Paiva

Auto-TLDR; Experimental Design of the Non-wheeze Class for Wheeze Classification

Multi-Scale Keypoint Matching

Auto-TLDR; Multi-Scale Keypoint Matching Using Multi-Scale Information

Progressive Learning Algorithm for Efficient Person Re-Identification

Zhen Li, Hanyang Shao, Liang Niu, Nian Xue

Auto-TLDR; Progressive Learning Algorithm for Large-Scale Person Re-Identification

Leveraging Quadratic Spherical Mutual Information Hashing for Fast Image Retrieval

Nikolaos Passalis, Anastasios Tefas

Auto-TLDR; Quadratic Mutual Information for Large-Scale Hashing and Information Retrieval

Multi-Scale 2D Representation Learning for Weakly-Supervised Moment Retrieval

Ding Li, Rui Wu, Zhizhong Zhang, Yongqiang Tang, Wensheng Zhang

Auto-TLDR; Multi-scale 2D Representation Learning for Weakly Supervised Video Moment Retrieval

Adversarially Training for Audio Classifiers

Raymel Alfonso Sallo, Mohammad Esmaeilpour, Patrick Cardinal

Auto-TLDR; Adversarially Training for Robust Neural Networks against Adversarial Attacks

Enriching Video Captions with Contextual Text

Philipp Rimle, Pelin Dogan, Markus Gross

Auto-TLDR; Contextualized Video Captioning Using Contextual Text

Improving Mix-And-Separate Training in Audio-Visual Sound Source Separation with an Object Prior

Quan Nguyen, Simone Frintrop, Timo Gerkmann, Mikko Lauri, Julius Richter

Auto-TLDR; Object-Prior: Learning the 1-to-1 correspondence between visual and audio signals by audio-visual sound source methods

Comparison of Deep Learning and Hand Crafted Features for Mining Simulation Data

Theodoros Georgiou, Sebastian Schmitt, Thomas Baeck, Nan Pu, Wei Chen, Michael Lew

Auto-TLDR; Automated Data Analysis of Flow Fields in Computational Fluid Dynamics Simulations

Relevance Detection in Cataract Surgery Videos by Spatio-Temporal Action Localization

Negin Ghamsarian, Mario Taschwer, Doris Putzgruber, Stephanie Sarny, Klaus Schoeffmann

Auto-TLDR; Relevance-based retrieval in cataract surgery videos

Aggregating Object Features Based on Attention Weights for Fine-Grained Image Retrieval

Hongli Lin, Yongqi Song, Zixuan Zeng, Weisheng Wang

Auto-TLDR; DSAW: Unsupervised Dual-selection for Fine-Grained Image Retrieval

Equation Attention Relationship Network (EARN) : A Geometric Deep Metric Framework for Learning Similar Math Expression Embedding

Saleem Ahmed, Kenny Davila, Srirangaraj Setlur, Venu Govindaraju

Auto-TLDR; Representational Learning for Similarity Based Retrieval of Mathematical Expressions

Top-DB-Net: Top DropBlock for Activation Enhancement in Person Re-Identification

Auto-TLDR; Top-DB-Net for Person Re-Identification using Top DropBlock

RWF-2000: An Open Large Scale Video Database for Violence Detection

Ming Cheng, Kunjing Cai, Ming Li

Auto-TLDR; Flow Gated Network for Violence Detection in Surveillance Cameras

Unsupervised Sound Source Localization From Audio-Image Pairs Using Input Gradient Map

Tomohiro Tanaka, Takahiro Shinozaki

Auto-TLDR; Unsupervised Sound Localization Using Gradient Method

Context Matters: Self-Attention for Sign Language Recognition

Fares Ben Slimane, Mohamed Bouguessa

Auto-TLDR; Attentional Network for Continuous Sign Language Recognition

More Correlations Better Performance: Fully Associative Networks for Multi-Label Image Classification

Auto-TLDR; Fully Associative Network for Fully Exploiting Correlation Information in Multi-Label Classification
