Hybrid Network for End-To-End Text-Independent Speaker Identification

Wajdi Ghezaiel, Luc Brun, Olivier Lezoray

Auto-TLDR; Text-Independent Speaker Identification with Scattering Wavelet Network and Convolutional Neural Networks

Similar papers

DenseRecognition of Spoken Languages

Jaybrata Chakraborty, Bappaditya Chakraborty, Ujjwal Bhattacharya

Auto-TLDR; DenseNet: A Dense Convolutional Network Architecture for Speech Recognition in Indian Languages

The Application of Capsule Neural Network Based CNN for Speech Emotion Recognition

Auto-TLDR; CapCNN: A Capsule Neural Network for Speech Emotion Recognition

End-To-End Triplet Loss Based Emotion Embedding System for Speech Emotion Recognition

Puneet Kumar, Sidharth Jain, Balasubramanian Raman, Partha Pratim Roy, Masakazu Iwamura

Auto-TLDR; End-to-End Neural Embedding System for Speech Emotion Recognition

ESResNet: Environmental Sound Classification Based on Visual Domain Models

Andrey Guzhov, Federico Raue, Jörn Hees, Andreas Dengel

Auto-TLDR; Environmental Sound Classification with Short-Time Fourier Transform Spectrograms

Which are the factors affecting the performance of audio surveillance systems?

Antonio Greco, Antonio Roberto, Alessia Saggese, Mario Vento

Auto-TLDR; Sound Event Recognition Using Convolutional Neural Networks and Visual Representations on MIVIA Audio Events

Toward Text-Independent Cross-Lingual Speaker Recognition Using English-Mandarin-Taiwanese Dataset

Auto-TLDR; Cross-lingual Speech for Biometric Recognition

Ballroom Dance Recognition from Audio Recordings

Tomas Pavlin, Jan Cech, Jiri Matas

Auto-TLDR; A CNN-based approach to classify ballroom dances given audio recordings

Detection of Calls from Smart Speaker Devices

Vinay Maddali, David Looney, Kailash Patil

Auto-TLDR; Distinguishing Between Smart Speaker and Cell Devices Using Only the Audio Using a Feature Set

Digit Recognition Applied to Reconstructed Audio Signals Using Deep Learning

Anastasia-Sotiria Toufa, Constantine Kotropoulos

Auto-TLDR; Compressed Sensing for Digit Recognition in Audio Reconstruction

Audio-Visual Speech Recognition Using a Two-Step Feature Fusion Strategy

Auto-TLDR; A Two-Step Feature Fusion Network for Speech Recognition

The Effect of Spectrogram Reconstruction on Automatic Music Transcription: An Alternative Approach to Improve Transcription Accuracy

Kin Wai Cheuk, Yin-Jyun Luo, Emmanouil Benetos, Dorien Herremans

Auto-TLDR; Exploring the effect of spectrogram reconstruction loss on automatic music transcription

Audio-Video Detection of the Active Speaker in Meetings

Francisco Madrigal, Frederic Lerasle, Lionel Pibre, Isabelle Ferrané

Auto-TLDR; Active Speaker Detection with Visual and Contextual Information from Meeting Context

Audio-Based Near-Duplicate Video Retrieval with Audio Similarity Learning

Pavlos Avgoustinakis, Giorgos Kordopatis-Zilos, Symeon Papadopoulos, Andreas L. Symeonidis, Ioannis Kompatsiaris

Auto-TLDR; AuSiL: Audio Similarity Learning for Near-duplicate Video Retrieval

Mood Detection Analyzing Lyrics and Audio Signal Based on Deep Learning Architectures

Konstantinos Pyrovolakis, Paraskevi Tzouveli, Giorgos Stamou

Auto-TLDR; Automated Music Mood Detection using Music Information Retrieval

One-Shot Learning for Acoustic Identification of Bird Species in Non-Stationary Environments

Michelangelo Acconcjaioco, Stavros Ntalampiras

Auto-TLDR; One-shot Learning in the Bioacoustics Domain using Siamese Neural Networks

Feature Engineering and Stacked Echo State Networks for Musical Onset Detection

Peter Steiner, Azarakhsh Jalalvand, Simon Stone, Peter Birkholz

Auto-TLDR; Echo State Networks for Onset Detection in Music Analysis

Trainable Spectrally Initializable Matrix Transformations in Convolutional Neural Networks

Michele Alberti, Angela Botros, Schuetz Narayan, Rolf Ingold, Marcus Liwicki, Mathias Seuret

Auto-TLDR; Trainable and Spectrally Initializable Matrix Transformations for Neural Networks

Improving Gravitational Wave Detection with 2D Convolutional Neural Networks

Siyu Fan, Yisen Wang, Yuan Luo, Alexander Michael Schmitt, Shenghua Yu

Auto-TLDR; Two-dimensional Convolutional Neural Networks for Gravitational Wave Detection from Time Series with Background Noise

Exploring Spatial-Temporal Representations for fNIRS-based Intimacy Detection via an Attention-enhanced Cascade Convolutional Recurrent Neural Network

Chao Li, Qian Zhang, Ziping Zhao

Auto-TLDR; Intimate Relationship Prediction by Attention-enhanced Cascade Convolutional Recurrent Neural Network Using Functional Near-Infrared Spectroscopy

Improving Mix-And-Separate Training in Audio-Visual Sound Source Separation with an Object Prior

Quan Nguyen, Simone Frintrop, Timo Gerkmann, Mikko Lauri, Julius Richter

Auto-TLDR; Object-Prior: Learning the 1-to-1 correspondence between visual and audio signals by audio-visual sound source methods

AttendAffectNet: Self-Attention Based Networks for Predicting Affective Responses from Movies

Thi Phuong Thao Ha, Bt Balamurali, Dorien Herremans, Gemma Roig

Auto-TLDR; AttendAffectNet: A Self-Attention Based Network for Emotion Prediction from Movies

Three-Dimensional Lip Motion Network for Text-Independent Speaker Recognition

Jianrong Wang, Tong Wu, Shanyu Wang, Mei Yu, Qiang Fang, Ju Zhang, Li Liu

Auto-TLDR; Lip Motion Network for Text-Independent and Text-Dependent Speaker Recognition

Influence of Event Duration on Automatic Wheeze Classification

Bruno M Rocha, Diogo Pessoa, Alda Marques, Paulo Carvalho, Rui Pedro Paiva

Auto-TLDR; Experimental Design of the Non-wheeze Class for Wheeze Classification

EasiECG: A Novel Inter-Patient Arrhythmia Classification Method Using ECG Waves

Chuanqi Han, Ruoran Huang, Fang Yu, Xi Huang, Li Cui

Auto-TLDR; EasiECG: Attention-based Convolution Factorization Machines for Arrhythmia Classification

Exploring Seismocardiogram Biometrics with Wavelet Transform

Po-Ya Hsu, Po-Han Hsu, Hsin-Li Liu

Auto-TLDR; Seismocardiogram Biometric Matching Using Wavelet Transform and Deep Learning Models

Learning Visual Voice Activity Detection with an Automatically Annotated Dataset

Stéphane Lathuiliere, Pablo Mesejo, Radu Horaud

Auto-TLDR; Deep Visual Voice Activity Detection with Optical Flow

Dynamically Mitigating Data Discrepancy with Balanced Focal Loss for Replay Attack Detection

Yongqiang Dou, Haocheng Yang, Maolin Yang, Yanyan Xu, Dengfeng Ke

Auto-TLDR; Anti-Spoofing with Balanced Focal Loss Function and Combination Features

Spatial Bias in Vision-Based Voice Activity Detection

Kalin Stefanov, Mohammad Adiban, Giampiero Salvi

Auto-TLDR; Spatial Bias in Vision-based Voice Activity Detection in Multiparty Human-Human Interactions

Mutual Alignment between Audiovisual Features for End-To-End Audiovisual Speech Recognition

Hong Liu, Yawei Wang, Bing Yang

Auto-TLDR; Mutual Iterative Attention for Audio Visual Speech Recognition

Cross-Lingual Text Image Recognition Via Multi-Task Sequence to Sequence Learning

Zhuo Chen, Fei Yin, Xu-Yao Zhang, Qing Yang, Cheng-Lin Liu

Auto-TLDR; Cross-Lingual Text Image Recognition with Multi-task Learning

ResMax: Detecting Voice Spoofing Attacks with Residual Network and Max Feature Map

Il-Youp Kwak, Sungsu Kwag, Junhee Lee, Jun Ho Huh, Choong-Hoon Lee, Youngbae Jeon, Jeonghwan Hwang, Ji Won Yoon

Auto-TLDR; ASVspoof 2019: A Lightweight Automatic Speaker Verification Spoofing and Countermeasures System

Space-Time Domain Tensor Neural Networks: An Application on Human Pose Classification

Konstantinos Makantasis, Athanasios Voulodimos, Anastasios Doulamis, Nikolaos Doulamis, Nikolaos Bakalos

Auto-TLDR; Tensor-Based Neural Network for Spatiotemporal Pose Classification using Three-Dimensional Skeleton Data

Graph Convolutional Neural Networks for Power Line Outage Identification

Auto-TLDR; Graph Convolutional Networks for Power Line Outage Identification

Adversarially Training for Audio Classifiers

Raymel Alfonso Sallo, Mohammad Esmaeilpour, Patrick Cardinal

Auto-TLDR; Adversarially Training for Robust Neural Networks against Adversarial Attacks

Generalization Comparison of Deep Neural Networks Via Output Sensitivity

Mahsa Forouzesh, Farnood Salehi, Patrick Thiran

Auto-TLDR; Generalization of Deep Neural Networks using Sensitivity

Radar Image Reconstruction from Raw ADC Data Using Parametric Variational Autoencoder with Domain Adaptation

Michael Stephan, Thomas Stadelmayer, Avik Santra, Georg Fischer, Robert Weigel, Fabian Lurz

Auto-TLDR; Parametric Variational Autoencoder-based Human Target Detection and Localization for Frequency Modulated Continuous Wave Radar

Person Recognition with HGR Maximal Correlation on Multimodal Data

Yihua Liang, Fei Ma, Yang Li, Shao-Lun Huang

Auto-TLDR; A correlation-based multimodal person recognition framework that learns discriminative embeddings of persons by joint learning visual features and audio features

Electroencephalography Signal Processing Based on Textural Features for Monitoring the Driver’s State by a Brain-Computer Interface

Giulia Orrù, Marco Micheletto, Fabio Terranova, Gian Luca Marcialis

Auto-TLDR; One-dimensional Local Binary Pattern Algorithm for Estimating Driver Vigilance in a Brain-Computer Interface System

Automatic Annotation of Corpora for Emotion Recognition through Facial Expressions Analysis

Alex Mircoli, Claudia Diamantini, Domenico Potena, Emanuele Storti

Auto-TLDR; Automatic annotation of video subtitles on the basis of facial expressions using machine learning algorithms

Robust Audio-Visual Speech Recognition Based on Hybrid Fusion

Hong Liu, Wenhao Li, Bing Yang

Auto-TLDR; Hybrid Fusion Based AVSR with Residual Networks and Bidirectional Gated Recurrent Unit for Robust Speech Recognition in Noise Conditions

Abstract Slides Poster Similar

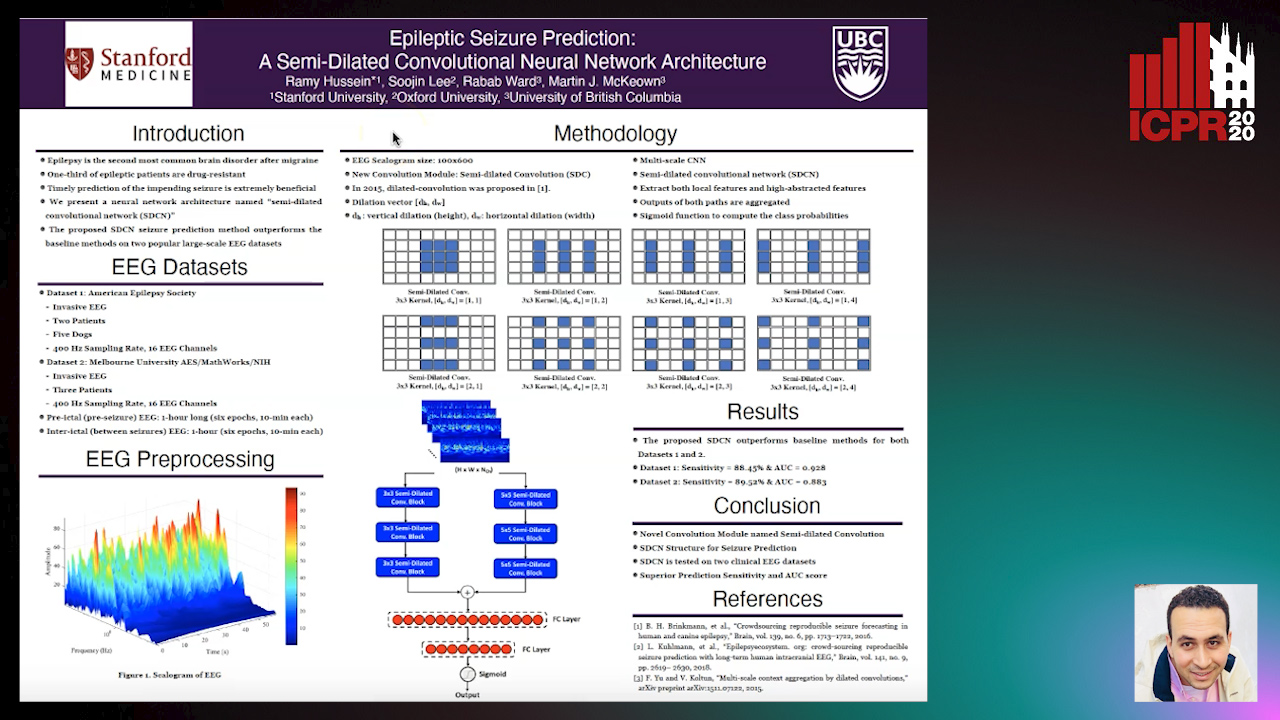

Epileptic Seizure Prediction: A Semi-Dilated Convolutional Neural Network Architecture

Ramy Hussein, Rabab K. Ward, Soojin Lee, Martin Mckeown

Auto-TLDR; Semi-Dilated Convolutional Network for Seizure Prediction using EEG Scalograms

Kernel-based Graph Convolutional Networks

Auto-TLDR; Spatial Graph Convolutional Networks in Reproducing Kernel Hilbert Space

Wireless Localisation in WiFi Using Novel Deep Architectures

Peizheng Li, Han Cui, Aftab Khan, Usman Raza, Robert Piechocki, Angela Doufexi, Tim Farnham

Auto-TLDR; Deep Neural Network for Localisation of WiFi Devices in Indoor Environments

Recognizing Bengali Word Images - A Zero-Shot Learning Perspective

Sukalpa Chanda, Daniël Arjen Willem Haitink, Prashant Kumar Prasad, Jochem Baas, Umapada Pal, Lambert Schomaker

Auto-TLDR; Zero-Shot Learning for Word Recognition in Bengali Script

S2I-Bird: Sound-To-Image Generation of Bird Species Using Generative Adversarial Networks

Joo Yong Shim, Joongheon Kim, Jong-Kook Kim

Auto-TLDR; Generating bird images from sound using conditional generative adversarial networks

Unsupervised Co-Segmentation for Athlete Movements and Live Commentaries Using Crossmodal Temporal Proximity

Yasunori Ohishi, Yuki Tanaka, Kunio Kashino

Auto-TLDR; A guided attention scheme for audio-visual co-segmentation

Merged 1D-2D Deep Convolutional Neural Networks for Nerve Detection in Ultrasound Images

Mohammad Alkhatib, Adel Hafiane, Pierre Vieyres

Auto-TLDR; A Deep Neural Network to Detect the Median Nerve in Ultrasound-Guided Regional Anesthesia

EEG-Based Cognitive State Assessment Using Deep Ensemble Model and Filter Bank Common Spatial Pattern

Debashis Das Chakladar, Shubhashis Dey, Partha Pratim Roy, Masakazu Iwamura

Auto-TLDR; A Deep Ensemble Model for EEG-Based Cognitive State Assessment
