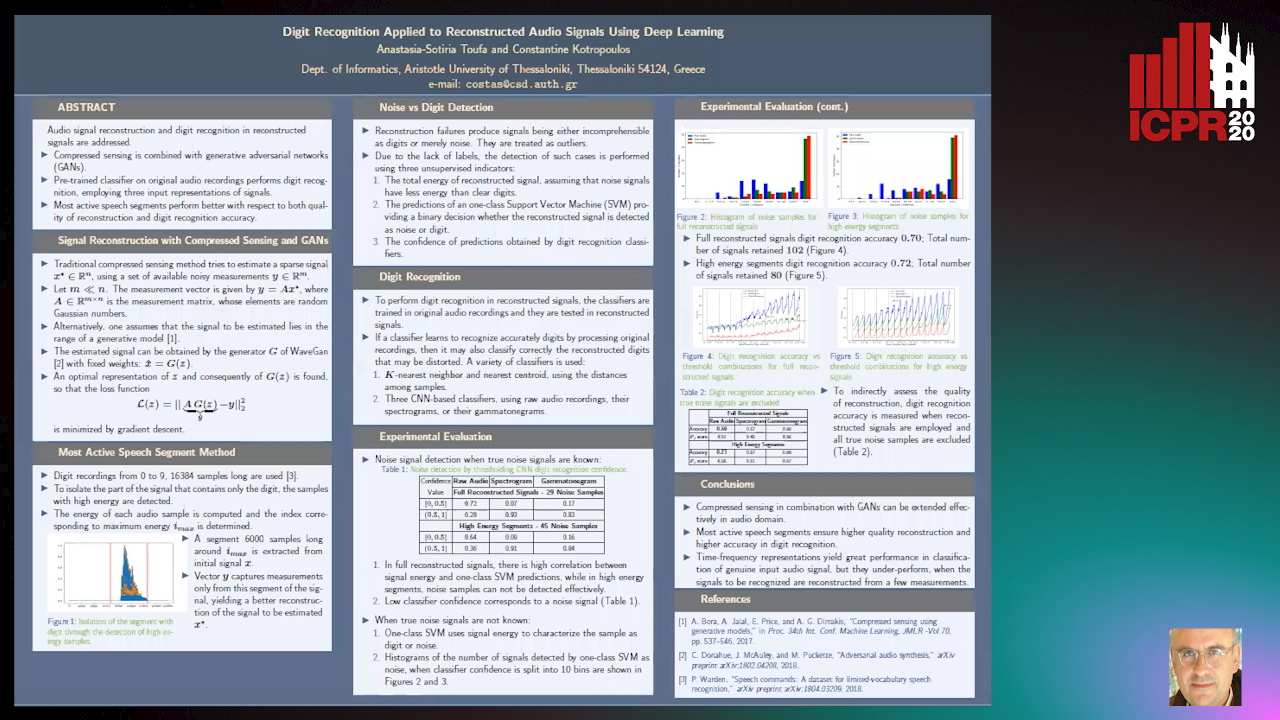

Digit Recognition Applied to Reconstructed Audio Signals Using Deep Learning

Anastasia-Sotiria Toufa,

Constantine Kotropoulos

Auto-TLDR; Compressed Sensing for Digit Recognition in Audio Reconstruction

Similar papers

Hybrid Network for End-To-End Text-Independent Speaker Identification

Wajdi Ghezaiel, Luc Brun, Olivier Lezoray

Auto-TLDR; Text-Independent Speaker Identification with Scattering Wavelet Network and Convolutional Neural Networks

Ballroom Dance Recognition from Audio Recordings

Tomas Pavlin, Jan Cech, Jiri Matas

Auto-TLDR; A CNN-based approach to classify ballroom dances given audio recordings

DenseRecognition of Spoken Languages

Jaybrata Chakraborty, Bappaditya Chakraborty, Ujjwal Bhattacharya

Auto-TLDR; DenseNet: A Dense Convolutional Network Architecture for Speech Recognition in Indian Languages

The Effect of Spectrogram Reconstruction on Automatic Music Transcription: An Alternative Approach to Improve Transcription Accuracy

Kin Wai Cheuk, Yin-Jyun Luo, Emmanouil Benetos, Herremans Dorien

Auto-TLDR; Exploring the effect of spectrogram reconstruction loss on automatic music transcription

The Application of Capsule Neural Network Based CNN for Speech Emotion Recognition

Auto-TLDR; CapCNN: A Capsule Neural Network for Speech Emotion Recognition

Which are the factors affecting the performance of audio surveillance systems?

Antonio Greco, Antonio Roberto, Alessia Saggese, Mario Vento

Auto-TLDR; Sound Event Recognition Using Convolutional Neural Networks and Visual Representations on MIVIA Audio Events

A Joint Representation Learning and Feature Modeling Approach for One-Class Recognition

Pramuditha Perera, Vishal Patel

Auto-TLDR; Combining Generative Features and One-Class Classification for Effective One-class Recognition

Audio-Video Detection of the Active Speaker in Meetings

Francisco Madrigal, Frederic Lerasle, Lionel Pibre, Isabelle Ferrané

Auto-TLDR; Active Speaker Detection with Visual and Contextual Information from Meeting Context

S2I-Bird: Sound-To-Image Generation of Bird Species Using Generative Adversarial Networks

Joo Yong Shim, Joongheon Kim, Jong-Kook Kim

Auto-TLDR; Generating bird images from sound using conditional generative adversarial networks

Phase Retrieval Using Conditional Generative Adversarial Networks

Tobias Uelwer, Alexander Oberstraß, Stefan Harmeling

Auto-TLDR; Conditional Generative Adversarial Networks for Phase Retrieval

Adversarially Training for Audio Classifiers

Raymel Alfonso Sallo, Mohammad Esmaeilpour, Patrick Cardinal

Auto-TLDR; Adversarially Training for Robust Neural Networks against Adversarial Attacks

ESResNet: Environmental Sound Classification Based on Visual Domain Models

Andrey Guzhov, Federico Raue, Jörn Hees, Andreas Dengel

Auto-TLDR; Environmental Sound Classification with Short-Time Fourier Transform Spectrograms

End-To-End Triplet Loss Based Emotion Embedding System for Speech Emotion Recognition

Puneet Kumar, Sidharth Jain, Balasubramanian Raman, Partha Pratim Roy, Masakazu Iwamura

Auto-TLDR; End-to-End Neural Embedding System for Speech Emotion Recognition

Leveraging Synthetic Subject Invariant EEG Signals for Zero Calibration BCI

Nik Khadijah Nik Aznan, Amir Atapour-Abarghouei, Stephen Bonner, Jason Connolly, Toby Breckon

Auto-TLDR; SIS-GAN: Subject Invariant SSVEP Generative Adversarial Network for Brain-Computer Interface

Electroencephalography Signal Processing Based on Textural Features for Monitoring the Driver’s State by a Brain-Computer Interface

Giulia Orrù, Marco Micheletto, Fabio Terranova, Gian Luca Marcialis

Auto-TLDR; One-dimensional Local Binary Pattern Algorithm for Estimating Driver Vigilance in a Brain-Computer Interface System

Space-Time Domain Tensor Neural Networks: An Application on Human Pose Classification

Konstantinos Makantasis, Athanasios Voulodimos, Anastasios Doulamis, Nikolaos Doulamis, Nikolaos Bakalos

Auto-TLDR; Tensor-Based Neural Network for Spatiotemporal Pose Classification using Three-Dimensional Skeleton Data

Influence of Event Duration on Automatic Wheeze Classification

Bruno M Rocha, Diogo Pessoa, Alda Marques, Paulo Carvalho, Rui Pedro Paiva

Auto-TLDR; Experimental Design of the Non-wheeze Class for Wheeze Classification

Detection of Calls from Smart Speaker Devices

Vinay Maddali, David Looney, Kailash Patil

Auto-TLDR; Distinguishing Between Smart Speaker and Cell Devices Using Only the Audio Using a Feature Set

AttendAffectNet: Self-Attention Based Networks for Predicting Affective Responses from Movies

Thi Phuong Thao Ha, Bt Balamurali, Herremans Dorien, Roig Gemma

Auto-TLDR; AttendAffectNet: A Self-Attention Based Network for Emotion Prediction from Movies

Uncertainty-Aware Data Augmentation for Food Recognition

Eduardo Aguilar, Bhalaji Nagarajan, Rupali Khatun, Marc Bolaños, Petia Radeva

Auto-TLDR; Data Augmentation for Food Recognition Using Epistemic Uncertainty

Adversarial Encoder-Multi-Task-Decoder for Multi-Stage Processes

Andre Mendes, Julian Togelius, Leandro Dos Santos Coelho

Auto-TLDR; Multi-Task Learning and Semi-Supervised Learning for Multi-Stage Processes

Signal Generation Using 1d Deep Convolutional Generative Adversarial Networks for Fault Diagnosis of Electrical Machines

Russell Sabir, Daniele Rosato, Sven Hartmann, Clemens Gühmann

Auto-TLDR; Large Dataset Generation from Faulty AC Machines using Deep Convolutional GAN

Separation of Aleatoric and Epistemic Uncertainty in Deterministic Deep Neural Networks

Denis Huseljic, Bernhard Sick, Marek Herde, Daniel Kottke

Auto-TLDR; AE-DNN: Modeling Uncertainty in Deep Neural Networks

Radar Image Reconstruction from Raw ADC Data Using Parametric Variational Autoencoder with Domain Adaptation

Michael Stephan, Thomas Stadelmayer, Avik Santra, Georg Fischer, Robert Weigel, Fabian Lurz

Auto-TLDR; Parametric Variational Autoencoder-based Human Target Detection and Localization for Frequency Modulated Continuous Wave Radar

Audio-Based Near-Duplicate Video Retrieval with Audio Similarity Learning

Pavlos Avgoustinakis, Giorgos Kordopatis-Zilos, Symeon Papadopoulos, Andreas L. Symeonidis, Ioannis Kompatsiaris

Auto-TLDR; AuSiL: Audio Similarity Learning for Near-duplicate Video Retrieval

One-Shot Learning for Acoustic Identification of Bird Species in Non-Stationary Environments

Michelangelo Acconcjaioco, Stavros Ntalampiras

Auto-TLDR; One-shot Learning in the Bioacoustics Domain using Siamese Neural Networks

Are Multiple Cross-Correlation Identities Better Than Just Two? Improving the Estimate of Time Differences-Of-Arrivals from Blind Audio Signals

Danilo Greco, Jacopo Cavazza, Alessio Del Bue

Auto-TLDR; Improving Blind Channel Identification Using Cross-Correlation Identity for Time Differences-of-Arrivals Estimation

Toward Text-Independent Cross-Lingual Speaker Recognition Using English-Mandarin-Taiwanese Dataset

Auto-TLDR; Cross-lingual Speech for Biometric Recognition

Combining GANs and AutoEncoders for Efficient Anomaly Detection

Fabio Carrara, Giuseppe Amato, Luca Brombin, Fabrizio Falchi, Claudio Gennaro

Auto-TLDR; CBIGAN: Anomaly Detection in Images with Consistency Constrained BiGAN

Spatial Bias in Vision-Based Voice Activity Detection

Kalin Stefanov, Mohammad Adiban, Giampiero Salvi

Auto-TLDR; Spatial Bias in Vision-based Voice Activity Detection in Multiparty Human-Human Interactions

Anticipating Activity from Multimodal Signals

Tiziana Rotondo, Giovanni Maria Farinella, Davide Giacalone, Sebastiano Mauro Strano, Valeria Tomaselli, Sebastiano Battiato

Auto-TLDR; Exploiting Multimodal Signal Embedding Space for Multi-Action Prediction

Data Augmentation Via Mixed Class Interpolation Using Cycle-Consistent Generative Adversarial Networks Applied to Cross-Domain Imagery

Hiroshi Sasaki, Chris G. Willcocks, Toby Breckon

Auto-TLDR; C2GMA: A Generative Domain Transfer Model for Non-visible Domain Classification

Improving Gravitational Wave Detection with 2D Convolutional Neural Networks

Siyu Fan, Yisen Wang, Yuan Luo, Alexander Michael Schmitt, Shenghua Yu

Auto-TLDR; Two-dimensional Convolutional Neural Networks for Gravitational Wave Detection from Time Series with Background Noise

Improving Mix-And-Separate Training in Audio-Visual Sound Source Separation with an Object Prior

Quan Nguyen, Simone Frintrop, Timo Gerkmann, Mikko Lauri, Julius Richter

Auto-TLDR; Object-Prior: Learning the 1-to-1 correspondence between visual and audio signals by audio-visual sound source methods

Automatic Annotation of Corpora for Emotion Recognition through Facial Expressions Analysis

Alex Mircoli, Claudia Diamantini, Domenico Potena, Emanuele Storti

Auto-TLDR; Automatic annotation of video subtitles on the basis of facial expressions using machine learning algorithms

Generative Deep-Neural-Network Mixture Modeling with Semi-Supervised MinMax+EM Learning

Auto-TLDR; Semi-supervised Deep Neural Networks for Generative Mixture Modeling and Clustering

Single-Modal Incremental Terrain Clustering from Self-Supervised Audio-Visual Feature Learning

Reina Ishikawa, Ryo Hachiuma, Akiyoshi Kurobe, Hideo Saito

Auto-TLDR; Multi-modal Variational Autoencoder for Terrain Type Clustering

Deep Learning on Active Sonar Data Using Bayesian Optimization for Hyperparameter Tuning

Henrik Berg, Karl Thomas Hjelmervik

Auto-TLDR; Bayesian Optimization for Sonar Operations in Littoral Environments

Fully Convolutional Neural Networks for Raw Eye Tracking Data Segmentation, Generation, and Reconstruction

Wolfgang Fuhl, Yao Rong, Enkelejda Kasneci

Auto-TLDR; Semantic Segmentation of Eye Tracking Data with Fully Convolutional Neural Networks

Feature Engineering and Stacked Echo State Networks for Musical Onset Detection

Peter Steiner, Azarakhsh Jalalvand, Simon Stone, Peter Birkholz

Auto-TLDR; Echo State Networks for Onset Detection in Music Analysis

Computational Data Analysis for First Quantization Estimation on JPEG Double Compressed Images

Sebastiano Battiato, Oliver Giudice, Francesco Guarnera, Giovanni Puglisi

Auto-TLDR; Exploiting Discrete Cosine Transform Coefficients for Multimedia Forensics

On the Use of Benford's Law to Detect GAN-Generated Images

Nicolo Bonettini, Paolo Bestagini, Simone Milani, Stefano Tubaro

Auto-TLDR; Using Benford's Law to Detect GAN-generated Images from Natural Images

On the Evaluation of Generative Adversarial Networks by Discriminative Models

Amirsina Torfi, Mohammadreza Beyki, Edward Alan Fox

Auto-TLDR; Domain-agnostic GAN Evaluation with Siamese Neural Networks

CardioGAN: An Attention-Based Generative Adversarial Network for Generation of Electrocardiograms

Subhrajyoti Dasgupta, Sudip Das, Ujjwal Bhattacharya

Auto-TLDR; CardioGAN: Generative Adversarial Network for Synthetic Electrocardiogram Signals

Learning Visual Voice Activity Detection with an Automatically Annotated Dataset

Stéphane Lathuiliere, Pablo Mesejo, Radu Horaud

Auto-TLDR; Deep Visual Voice Activity Detection with Optical Flow

Estimation of Clinical Tremor Using Spatio-Temporal Adversarial AutoEncoder

Li Zhang, Vidya Koesmahargyo, Isaac Galatzer-Levy

Auto-TLDR; ST-AAE: Spatio-temporal Adversarial Autoencoder for Clinical Assessment of Hand Tremor Frequency and Severity

How to Define a Rejection Class Based on Model Learning?

Sarah Laroui, Xavier Descombes, Aurelia Vernay, Florent Villiers, Francois Villalba, Eric Debreuve

Auto-TLDR; An innovative learning strategy for supervised classification that is able, by design, to reject a sample as not belonging to any of the known classes

Video Analytics Gait Trend Measurement for Fall Prevention and Health Monitoring

Lawrence O'Gorman, Xinyi Liu, Md Imran Sarker, Mariofanna Milanova

Auto-TLDR; Towards Health Monitoring of Gait with Deep Learning
