A Joint Representation Learning and Feature Modeling Approach for One-Class Recognition

Pramuditha Perera,

Vishal Patel

Auto-TLDR; Combining Generative Features and One-Class Classification for Effective One-class Recognition

Similar papers

Discriminative Multi-Level Reconstruction under Compact Latent Space for One-Class Novelty Detection

Jaewoo Park, Yoon Gyo Jung, Andrew Teoh

Auto-TLDR; Discriminative Compact AE for One-Class novelty detection and Adversarial Example Detection

Evaluation of Anomaly Detection Algorithms for the Real-World Applications

Marija Ivanovska, Domen Tabernik, Danijel Skocaj, Janez Pers

Auto-TLDR; Evaluating Anomaly Detection Algorithms for Practical Applications

Abstract Slides Poster Similar

AVAE: Adversarial Variational Auto Encoder

Antoine Plumerault, Hervé Le Borgne, Celine Hudelot

Auto-TLDR; Combining VAE and GAN for Realistic Image Generation

Abstract Slides Poster Similar

Modeling the Distribution of Normal Data in Pre-Trained Deep Features for Anomaly Detection

Oliver Rippel, Patrick Mertens, Dorit Merhof

Auto-TLDR; Deep Feature Representations for Anomaly Detection in Images

Abstract Slides Poster Similar

Combining GANs and AutoEncoders for Efficient Anomaly Detection

Fabio Carrara, Giuseppe Amato, Luca Brombin, Fabrizio Falchi, Claudio Gennaro

Auto-TLDR; CBIGAN: Anomaly Detection in Images with Consistency Constrained BiGAN

Abstract Slides Poster Similar

Generative Latent Implicit Conditional Optimization When Learning from Small Sample

Auto-TLDR; GLICO: Generative Latent Implicit Conditional Optimization for Small Sample Learning

Abstract Slides Poster Similar

Improved anomaly detection by training an autoencoder with skip connections on images corrupted with Stain-shaped noise

Anne-Sophie Collin, Christophe De Vleeschouwer

Auto-TLDR; Autoencoder with Skip Connections for Anomaly Detection

Abstract Slides Poster Similar

Video Anomaly Detection by Estimating Likelihood of Representations

Auto-TLDR; Video Anomaly Detection in the latent feature space using a deep probabilistic model

Abstract Slides Poster Similar

Variational Deep Embedding Clustering by Augmented Mutual Information Maximization

Qiang Ji, Yanfeng Sun, Yongli Hu, Baocai Yin

Auto-TLDR; Clustering by Augmented Mutual Information maximization for Deep Embedding

Abstract Slides Poster Similar

Pretraining Image Encoders without Reconstruction Via Feature Prediction Loss

Gustav Grund Pihlgren, Fredrik Sandin, Marcus Liwicki

Auto-TLDR; Feature Prediction Loss for Autoencoder-based Pretraining of Image Encoders

GAN-Based Gaussian Mixture Model Responsibility Learning

Wanming Huang, Yi Da Xu, Shuai Jiang, Xuan Liang, Ian Oppermann

Auto-TLDR; Posterior Consistency Module for Gaussian Mixture Model

Abstract Slides Poster Similar

Variational Capsule Encoder

Harish Raviprakash, Syed Anwar, Ulas Bagci

Auto-TLDR; Bayesian Capsule Networks for Representation Learning in latent space

Abstract Slides Poster Similar

IDA-GAN: A Novel Imbalanced Data Augmentation GAN

Auto-TLDR; IDA-GAN: Generative Adversarial Networks for Imbalanced Data Augmentation

Abstract Slides Poster Similar

Image Representation Learning by Transformation Regression

Xifeng Guo, Jiyuan Liu, Sihang Zhou, En Zhu, Shihao Dong

Auto-TLDR; Self-supervised Image Representation Learning using Continuous Parameter Prediction

Abstract Slides Poster Similar

Mutual Information Based Method for Unsupervised Disentanglement of Video Representation

Aditya Sreekar P, Ujjwal Tiwari, Anoop Namboodiri

Auto-TLDR; MIPAE: Mutual Information Predictive Auto-Encoder for Video Prediction

Abstract Slides Poster Similar

Reducing the Variance of Variational Estimates of Mutual Information by Limiting the Critic's Hypothesis Space to RKHS

Aditya Sreekar P, Ujjwal Tiwari, Anoop Namboodiri

Auto-TLDR; Mutual Information Estimation from Variational Lower Bounds Using a Critic's Hypothesis Space

Semantics-Guided Representation Learning with Applications to Visual Synthesis

Jia-Wei Yan, Ci-Siang Lin, Fu-En Yang, Yu-Jhe Li, Yu-Chiang Frank Wang

Auto-TLDR; Learning Interpretable and Interpolatable Latent Representations for Visual Synthesis

Abstract Slides Poster Similar

On-Manifold Adversarial Data Augmentation Improves Uncertainty Calibration

Kanil Patel, William Beluch, Dan Zhang, Michael Pfeiffer, Bin Yang

Auto-TLDR; On-Manifold Adversarial Data Augmentation for Uncertainty Estimation

Separation of Aleatoric and Epistemic Uncertainty in Deterministic Deep Neural Networks

Denis Huseljic, Bernhard Sick, Marek Herde, Daniel Kottke

Auto-TLDR; AE-DNN: Modeling Uncertainty in Deep Neural Networks

Abstract Slides Poster Similar

Variational Inference with Latent Space Quantization for Adversarial Resilience

Vinay Kyatham, Deepak Mishra, Prathosh A.P.

Auto-TLDR; A Generalized Defense Mechanism for Adversarial Attacks on Data Manifolds

Abstract Slides Poster Similar

Disentangled Representation Learning for Controllable Image Synthesis: An Information-Theoretic Perspective

Shichang Tang, Xu Zhou, Xuming He, Yi Ma

Auto-TLDR; Controllable Image Synthesis in Deep Generative Models using Variational Auto-Encoder

Abstract Slides Poster Similar

Phase Retrieval Using Conditional Generative Adversarial Networks

Tobias Uelwer, Alexander Oberstraß, Stefan Harmeling

Auto-TLDR; Conditional Generative Adversarial Networks for Phase Retrieval

Abstract Slides Poster Similar

Beyond Cross-Entropy: Learning Highly Separable Feature Distributions for Robust and Accurate Classification

Arslan Ali, Andrea Migliorati, Tiziano Bianchi, Enrico Magli

Auto-TLDR; Gaussian class-conditional simplex loss for adversarial robust multiclass classifiers

Abstract Slides Poster Similar

Adaptive Image Compression Using GAN Based Semantic-Perceptual Residual Compensation

Ruojing Wang, Zitang Sun, Sei-Ichiro Kamata, Weili Chen

Auto-TLDR; Adaptive Image Compression using GAN based Semantic-Perceptual Residual Compensation

Abstract Slides Poster Similar

Boundary Optimised Samples Training for Detecting Out-Of-Distribution Images

Luca Marson, Vladimir Li, Atsuto Maki

Auto-TLDR; Boundary Optimised Samples for Out-of-Distribution Input Detection in Deep Convolutional Networks

Abstract Slides Poster Similar

Interpolation in Auto Encoders with Bridge Processes

Carl Ringqvist, Henrik Hult, Judith Butepage, Hedvig Kjellstrom

Auto-TLDR; Stochastic interpolations from auto encoders trained on flattened sequences

Abstract Slides Poster Similar

GAP: Quantifying the Generative Adversarial Set and Class Feature Applicability of Deep Neural Networks

Edward Collier, Supratik Mukhopadhyay

Auto-TLDR; Approximating Adversarial Learning in Deep Neural Networks Using Set and Class Adversaries

Abstract Slides Poster Similar

Multi-Modal Deep Clustering: Unsupervised Partitioning of Images

Auto-TLDR; Multi-Modal Deep Clustering for Unlabeled Images

Abstract Slides Poster Similar

Generative Deep-Neural-Network Mixture Modeling with Semi-Supervised MinMax+EM Learning

Auto-TLDR; Semi-supervised Deep Neural Networks for Generative Mixture Modeling and Clustering

Abstract Slides Poster Similar

Automatic Detection of Stationary Waves in the Venus’ Atmosphere Using Deep Generative Models

Minori Narita, Daiki Kimura, Takeshi Imamura

Auto-TLDR; Anomaly Detection of Large Bow-shaped Structures on the Venus Clouds using Variational Auto-encoder and Attention Maps

Abstract Slides Poster Similar

Auto Encoding Explanatory Examples with Stochastic Paths

Cesar Ali Ojeda Marin, Ramses J. Sanchez, Kostadin Cvejoski, Bogdan Georgiev

Auto-TLDR; Semantic Stochastic Path: Explaining a Classifier's Decision Making Process using latent codes

Abstract Slides Poster Similar

Local Clustering with Mean Teacher for Semi-Supervised Learning

Zexi Chen, Benjamin Dutton, Bharathkumar Ramachandra, Tianfu Wu, Ranga Raju Vatsavai

Auto-TLDR; Local Clustering for Semi-supervised Learning

Attack-Agnostic Adversarial Detection on Medical Data Using Explainable Machine Learning

Matthew Watson, Noura Al Moubayed

Auto-TLDR; Explainability-based Detection of Adversarial Samples on EHR and Chest X-Ray Data

Abstract Slides Poster Similar

Enlarging Discriminative Power by Adding an Extra Class in Unsupervised Domain Adaptation

Hai Tran, Sumyeong Ahn, Taeyoung Lee, Yung Yi

Auto-TLDR; Unsupervised Domain Adaptation using Artificial Classes

Abstract Slides Poster Similar

Parallel Network to Learn Novelty from the Known

Shuaiyuan Du, Chaoyi Hong, Zhiyu Pan, Chen Feng, Zhiguo Cao

Auto-TLDR; Trainable Parallel Network for Pseudo-Novel Detection

Abstract Slides Poster Similar

Adversarial Encoder-Multi-Task-Decoder for Multi-Stage Processes

Andre Mendes, Julian Togelius, Leandro Dos Santos Coelho

Auto-TLDR; Multi-Task Learning and Semi-Supervised Learning for Multi-Stage Processes

On the Evaluation of Generative Adversarial Networks by Discriminative Models

Amirsina Torfi, Mohammadreza Beyki, Edward Alan Fox

Auto-TLDR; Domain-agnostic GAN Evaluation with Siamese Neural Networks

Abstract Slides Poster Similar

Semi-Supervised Class Incremental Learning

Alexis Lechat, Stéphane Herbin, Frederic Jurie

Auto-TLDR; incremental class learning with non-annotated batches

Abstract Slides Poster Similar

Adaptive Noise Injection for Training Stochastic Student Networks from Deterministic Teachers

Yi Xiang Marcus Tan, Yuval Elovici, Alexander Binder

Auto-TLDR; Adaptive Stochastic Networks for Adversarial Attacks

Learning Interpretable Representation for 3D Point Clouds

Feng-Guang Su, Ci-Siang Lin, Yu-Chiang Frank Wang

Auto-TLDR; Disentangling Body-type and Pose Information from 3D Point Clouds Using Adversarial Learning

Abstract Slides Poster Similar

Augmentation of Small Training Data Using GANs for Enhancing the Performance of Image Classification

Auto-TLDR; Generative Adversarial Network for Image Training Data Augmentation

Abstract Slides Poster Similar

NeuralFP: Out-Of-Distribution Detection Using Fingerprints of Neural Networks

Wei-Han Lee, Steve Millman, Nirmit Desai, Mudhakar Srivatsa, Changchang Liu

Auto-TLDR; NeuralFP: Detecting Out-of-Distribution Records Using Neural Network Models

Abstract Slides Poster Similar

High Resolution Face Age Editing

Xu Yao, Gilles Puy, Alasdair Newson, Yann Gousseau, Pierre Hellier

Auto-TLDR; An Encoder-Decoder Architecture for Face Age editing on High Resolution Images

Abstract Slides Poster Similar

Unsupervised Detection of Pulmonary Opacities for Computer-Aided Diagnosis of COVID-19 on CT Images

Rui Xu, Xiao Cao, Yufeng Wang, Yen-Wei Chen, Xinchen Ye, Lin Lin, Wenchao Zhu, Chao Chen, Fangyi Xu, Yong Zhou, Hongjie Hu, Shoji Kido, Noriyuki Tomiyama

Auto-TLDR; A computer-aided diagnosis of COVID-19 from CT images using unsupervised pulmonary opacity detection

Abstract Slides Poster Similar

Directed Variational Cross-encoder Network for Few-Shot Multi-image Co-segmentation

Sayan Banerjee, Divakar Bhat S, Subhasis Chaudhuri, Rajbabu Velmurugan

Auto-TLDR; Directed Variational Inference Cross Encoder for Class Agnostic Co-Segmentation of Multiple Images

Abstract Slides Poster Similar

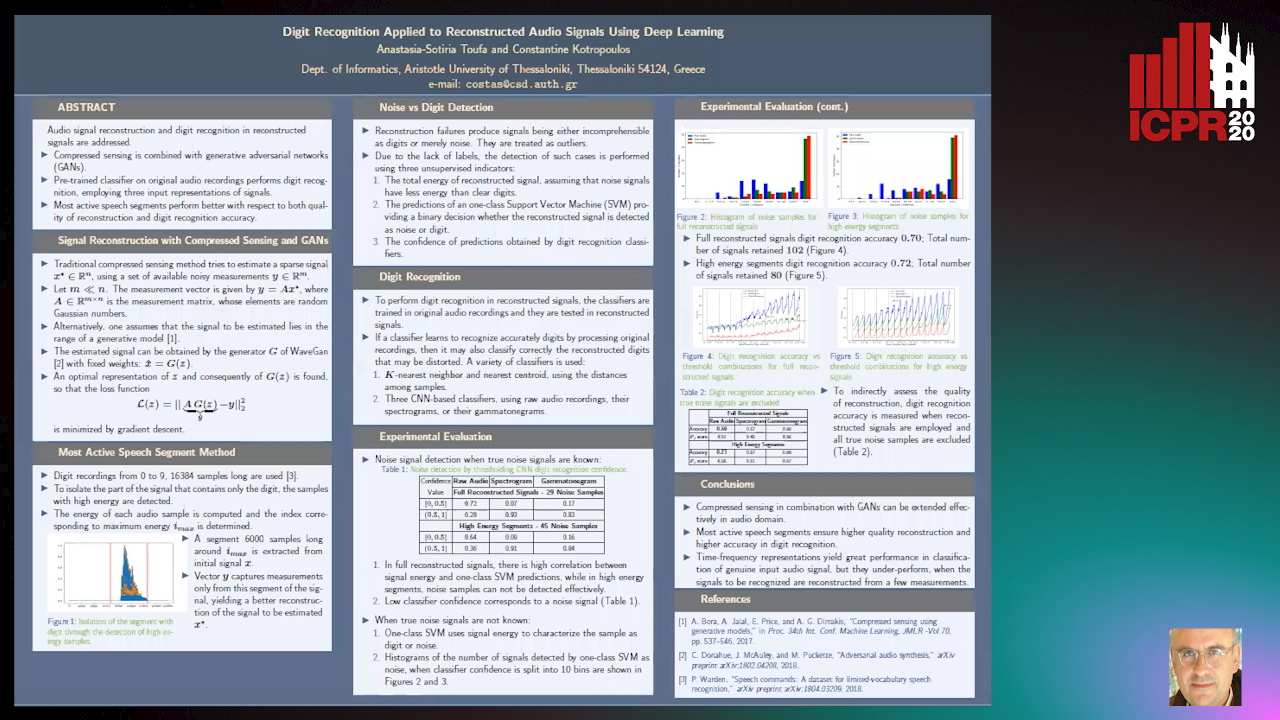

Digit Recognition Applied to Reconstructed Audio Signals Using Deep Learning

Anastasia-Sotiria Toufa, Constantine Kotropoulos

Auto-TLDR; Compressed Sensing for Digit Recognition in Audio Reconstruction

Learning Embeddings for Image Clustering: An Empirical Study of Triplet Loss Approaches

Kalun Ho, Janis Keuper, Franz-Josef Pfreundt, Margret Keuper

Auto-TLDR; Clustering Objectives for K-means and Correlation Clustering Using Triplet Loss

Abstract Slides Poster Similar

Data Augmentation Via Mixed Class Interpolation Using Cycle-Consistent Generative Adversarial Networks Applied to Cross-Domain Imagery

Hiroshi Sasaki, Chris G. Willcocks, Toby Breckon

Auto-TLDR; C2GMA: A Generative Domain Transfer Model for Non-visible Domain Classification

Abstract Slides Poster Similar