Semantics-Guided Representation Learning with Applications to Visual Synthesis

Jia-Wei Yan,

Ci-Siang Lin,

Fu-En Yang,

Yu-Jhe Li,

Yu-Chiang Frank Wang

Auto-TLDR; Learning Interpretable and Interpolatable Latent Representations for Visual Synthesis

Similar papers

Learning Interpretable Representation for 3D Point Clouds

Feng-Guang Su, Ci-Siang Lin, Yu-Chiang Frank Wang

Auto-TLDR; Disentangling Body-type and Pose Information from 3D Point Clouds Using Adversarial Learning

Abstract Slides Poster Similar

Disentangled Representation Learning for Controllable Image Synthesis: An Information-Theoretic Perspective

Shichang Tang, Xu Zhou, Xuming He, Yi Ma

Auto-TLDR; Controllable Image Synthesis in Deep Generative Models using Variational Auto-Encoder

Abstract Slides Poster Similar

Disentangle, Assemble, and Synthesize: Unsupervised Learning to Disentangle Appearance and Location

Hiroaki Aizawa, Hirokatsu Kataoka, Yutaka Satoh, Kunihito Kato

Auto-TLDR; Generative Adversarial Networks with Structural Constraint for controllability of latent space

Abstract Slides Poster Similar

Dual-MTGAN: Stochastic and Deterministic Motion Transfer for Image-To-Video Synthesis

Fu-En Yang, Jing-Cheng Chang, Yuan-Hao Lee, Yu-Chiang Frank Wang

Auto-TLDR; Dual Motion Transfer GAN for Convolutional Neural Networks

Abstract Slides Poster Similar

Variational Deep Embedding Clustering by Augmented Mutual Information Maximization

Qiang Ji, Yanfeng Sun, Yongli Hu, Baocai Yin

Auto-TLDR; Clustering by Augmented Mutual Information maximization for Deep Embedding

Abstract Slides Poster Similar

Image Representation Learning by Transformation Regression

Xifeng Guo, Jiyuan Liu, Sihang Zhou, En Zhu, Shihao Dong

Auto-TLDR; Self-supervised Image Representation Learning using Continuous Parameter Prediction

Abstract Slides Poster Similar

A Joint Representation Learning and Feature Modeling Approach for One-Class Recognition

Pramuditha Perera, Vishal Patel

Auto-TLDR; Combining Generative Features and One-Class Classification for Effective One-class Recognition

Abstract Slides Poster Similar

AVAE: Adversarial Variational Auto Encoder

Antoine Plumerault, Hervé Le Borgne, Celine Hudelot

Auto-TLDR; Combining VAE and GAN for Realistic Image Generation

Abstract Slides Poster Similar

Learning Low-Shot Generative Networks for Cross-Domain Data

Hsuan-Kai Kao, Cheng-Che Lee, Wei-Chen Chiu

Auto-TLDR; Learning Generators for Cross-Domain Data under Low-Shot Learning

Abstract Slides Poster Similar

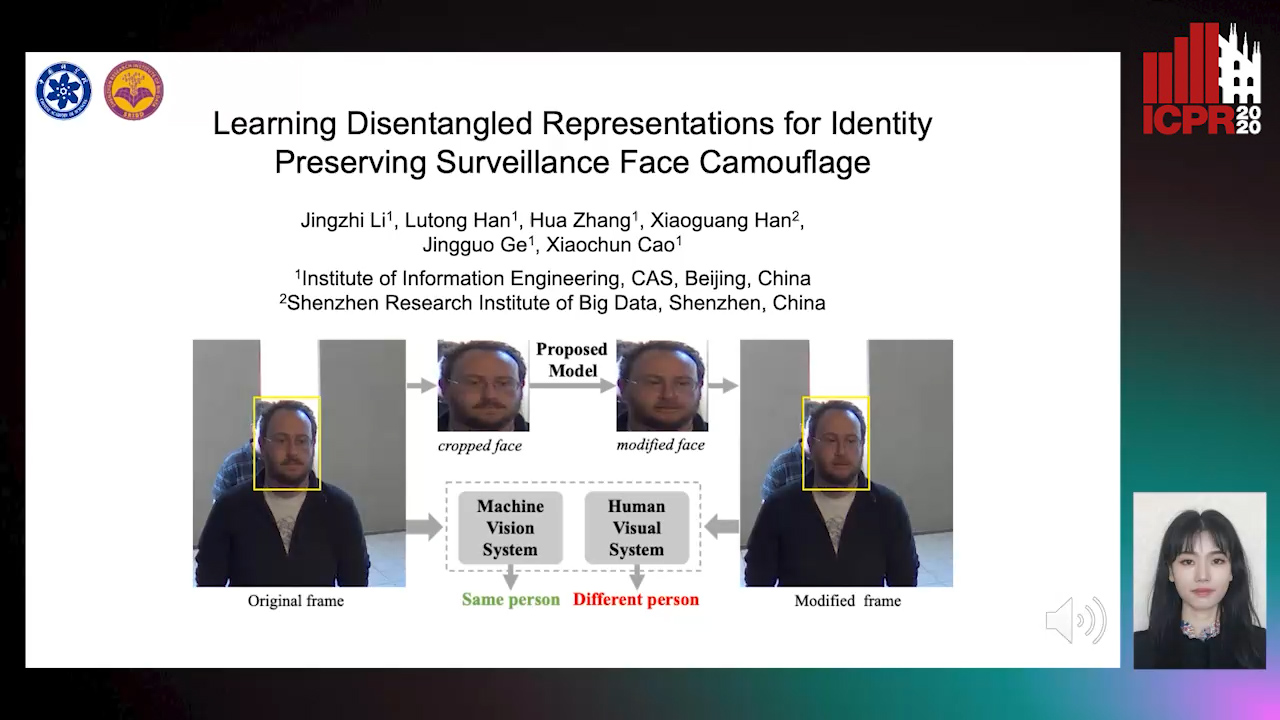

Learning Disentangled Representations for Identity Preserving Surveillance Face Camouflage

Jingzhi Li, Lutong Han, Hua Zhang, Xiaoguang Han, Jingguo Ge, Xiaochu Cao

Auto-TLDR; Individual Face Privacy under Surveillance Scenario with Multi-task Loss Function

Variational Capsule Encoder

Harish Raviprakash, Syed Anwar, Ulas Bagci

Auto-TLDR; Bayesian Capsule Networks for Representation Learning in latent space

Abstract Slides Poster Similar

High Resolution Face Age Editing

Xu Yao, Gilles Puy, Alasdair Newson, Yann Gousseau, Pierre Hellier

Auto-TLDR; An Encoder-Decoder Architecture for Face Age editing on High Resolution Images

Abstract Slides Poster Similar

Interpolation in Auto Encoders with Bridge Processes

Carl Ringqvist, Henrik Hult, Judith Butepage, Hedvig Kjellstrom

Auto-TLDR; Stochastic interpolations from auto encoders trained on flattened sequences

Abstract Slides Poster Similar

Interpreting the Latent Space of GANs Via Correlation Analysis for Controllable Concept Manipulation

Ziqiang Li, Rentuo Tao, Hongjing Niu, Bin Li

Auto-TLDR; Exploring latent space of GANs by analyzing correlation between latent variables and semantic contents in generated images

Abstract Slides Poster Similar

Domain Generalized Person Re-Identification Via Cross-Domain Episodic Learning

Ci-Siang Lin, Yuan Chia Cheng, Yu-Chiang Frank Wang

Auto-TLDR; Domain-Invariant Person Re-identification with Episodic Learning

Abstract Slides Poster Similar

Reducing the Variance of Variational Estimates of Mutual Information by Limiting the Critic's Hypothesis Space to RKHS

Aditya Sreekar P, Ujjwal Tiwari, Anoop Namboodiri

Auto-TLDR; Mutual Information Estimation from Variational Lower Bounds Using a Critic's Hypothesis Space

Mutual Information Based Method for Unsupervised Disentanglement of Video Representation

Aditya Sreekar P, Ujjwal Tiwari, Anoop Namboodiri

Auto-TLDR; MIPAE: Mutual Information Predictive Auto-Encoder for Video Prediction

Abstract Slides Poster Similar

Unsupervised Disentangling of Viewpoint and Residues Variations by Substituting Representations for Robust Face Recognition

Minsu Kim, Joanna Hong, Junho Kim, Hong Joo Lee, Yong Man Ro

Auto-TLDR; Unsupervised Disentangling of Identity, viewpoint, and Residue Representations for Robust Face Recognition

Abstract Slides Poster Similar

A Self-Supervised GAN for Unsupervised Few-Shot Object Recognition

Auto-TLDR; Self-supervised Few-Shot Object Recognition with a Triplet GAN

Abstract Slides Poster Similar

Discriminative Multi-Level Reconstruction under Compact Latent Space for One-Class Novelty Detection

Jaewoo Park, Yoon Gyo Jung, Andrew Teoh

Auto-TLDR; Discriminative Compact AE for One-Class novelty detection and Adversarial Example Detection

Generative Latent Implicit Conditional Optimization When Learning from Small Sample

Auto-TLDR; GLICO: Generative Latent Implicit Conditional Optimization for Small Sample Learning

Abstract Slides Poster Similar

Combining GANs and AutoEncoders for Efficient Anomaly Detection

Fabio Carrara, Giuseppe Amato, Luca Brombin, Fabrizio Falchi, Claudio Gennaro

Auto-TLDR; CBIGAN: Anomaly Detection in Images with Consistency Constrained BiGAN

Abstract Slides Poster Similar

IDA-GAN: A Novel Imbalanced Data Augmentation GAN

Auto-TLDR; IDA-GAN: Generative Adversarial Networks for Imbalanced Data Augmentation

Abstract Slides Poster Similar

Unsupervised Face Manipulation Via Hallucination

Keerthy Kusumam, Enrique Sanchez, Georgios Tzimiropoulos

Auto-TLDR; Unpaired Face Image Manipulation using Autoencoders

Abstract Slides Poster Similar

SATGAN: Augmenting Age Biased Dataset for Cross-Age Face Recognition

Wenshuang Liu, Wenting Chen, Yuanlue Zhu, Linlin Shen

Auto-TLDR; SATGAN: Stable Age Translation GAN for Cross-Age Face Recognition

Abstract Slides Poster Similar

Learning Embeddings for Image Clustering: An Empirical Study of Triplet Loss Approaches

Kalun Ho, Janis Keuper, Franz-Josef Pfreundt, Margret Keuper

Auto-TLDR; Clustering Objectives for K-means and Correlation Clustering Using Triplet Loss

Abstract Slides Poster Similar

Unsupervised Contrastive Photo-To-Caricature Translation Based on Auto-Distortion

Yuhe Ding, Xin Ma, Mandi Luo, Aihua Zheng, Ran He

Auto-TLDR; Unsupervised contrastive photo-to-caricature translation with style loss

Abstract Slides Poster Similar

Local Facial Attribute Transfer through Inpainting

Ricard Durall, Franz-Josef Pfreundt, Janis Keuper

Auto-TLDR; Attribute Transfer Inpainting Generative Adversarial Network

Abstract Slides Poster Similar

Pose Variation Adaptation for Person Re-Identification

Lei Zhang, Na Jiang, Qishuai Diao, Yue Xu, Zhong Zhou, Wei Wu

Auto-TLDR; Pose Transfer Generative Adversarial Network for Person Re-identification

Abstract Slides Poster Similar

On the Evaluation of Generative Adversarial Networks by Discriminative Models

Amirsina Torfi, Mohammadreza Beyki, Edward Alan Fox

Auto-TLDR; Domain-agnostic GAN Evaluation with Siamese Neural Networks

Abstract Slides Poster Similar

GAN-Based Gaussian Mixture Model Responsibility Learning

Wanming Huang, Yi Da Xu, Shuai Jiang, Xuan Liang, Ian Oppermann

Auto-TLDR; Posterior Consistency Module for Gaussian Mixture Model

Abstract Slides Poster Similar

Exemplar Guided Cross-Spectral Face Hallucination Via Mutual Information Disentanglement

Haoxue Wu, Huaibo Huang, Aijing Yu, Jie Cao, Zhen Lei, Ran He

Auto-TLDR; Exemplar Guided Cross-Spectral Face Hallucination with Structural Representation Learning

Abstract Slides Poster Similar

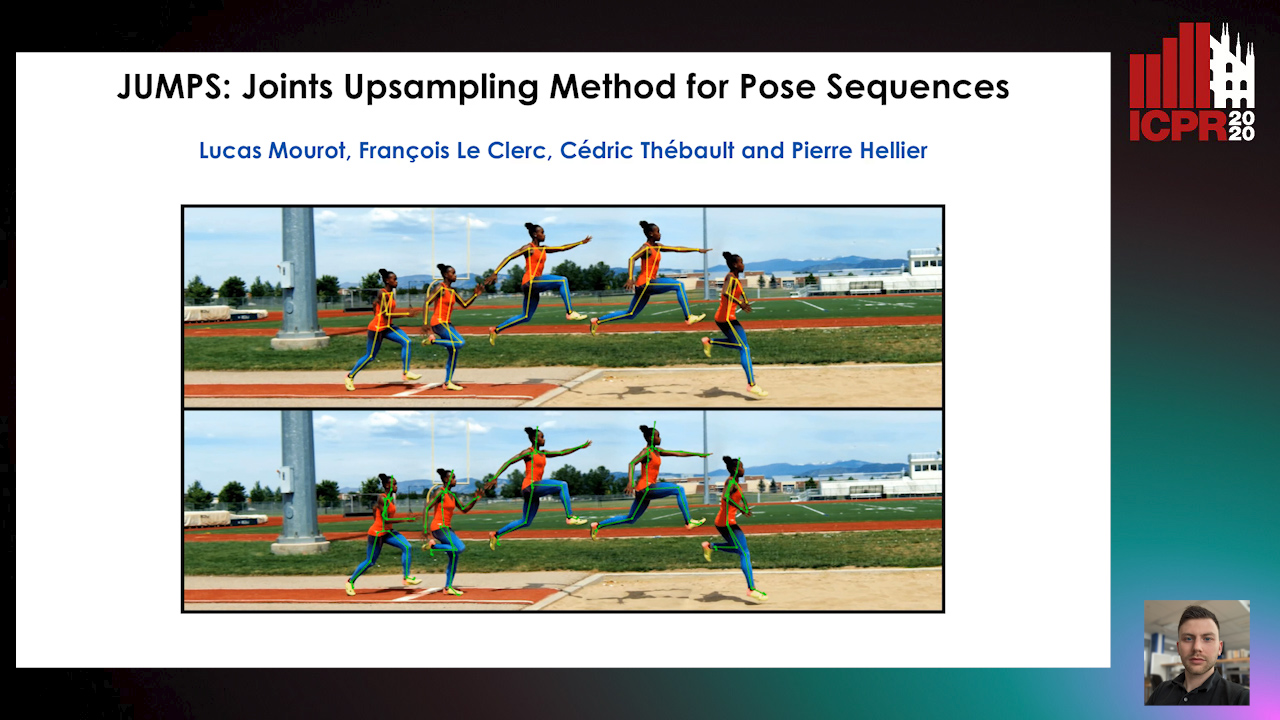

JUMPS: Joints Upsampling Method for Pose Sequences

Lucas Mourot, Francois Le Clerc, Cédric Thébault, Pierre Hellier

Auto-TLDR; JUMPS: Increasing the Number of Joints in 2D Pose Estimation and Recovering Occluded or Missing Joints

Abstract Slides Poster Similar

Adversarially Constrained Interpolation for Unsupervised Domain Adaptation

Mohamed Azzam, Aurele Tohokantche Gnanha, Hau-San Wong, Si Wu

Auto-TLDR; Unsupervised Domain Adaptation with Domain Mixup Strategy

Abstract Slides Poster Similar

Multi-Modal Deep Clustering: Unsupervised Partitioning of Images

Auto-TLDR; Multi-Modal Deep Clustering for Unlabeled Images

Abstract Slides Poster Similar

Free-Form Image Inpainting Via Contrastive Attention Network

Xin Ma, Xiaoqiang Zhou, Huaibo Huang, Zhenhua Chai, Xiaolin Wei, Ran He

Auto-TLDR; Self-supervised Siamese inference for image inpainting

DAIL: Dataset-Aware and Invariant Learning for Face Recognition

Gaoang Wang, Chen Lin, Tianqiang Liu, Mingwei He, Jiebo Luo

Auto-TLDR; DAIL: Dataset-Aware and Invariant Learning for Face Recognition

Abstract Slides Poster Similar

Few-Shot Font Generation with Deep Metric Learning

Haruka Aoki, Koki Tsubota, Hikaru Ikuta, Kiyoharu Aizawa

Auto-TLDR; Deep Metric Learning for Japanese Typographic Font Synthesis

Abstract Slides Poster Similar

Future Urban Scenes Generation through Vehicles Synthesis

Alessandro Simoni, Luca Bergamini, Andrea Palazzi, Simone Calderara, Rita Cucchiara

Auto-TLDR; Predicting the Future of an Urban Scene with a Novel View Synthesis Paradigm

Abstract Slides Poster Similar

Local Clustering with Mean Teacher for Semi-Supervised Learning

Zexi Chen, Benjamin Dutton, Bharathkumar Ramachandra, Tianfu Wu, Ranga Raju Vatsavai

Auto-TLDR; Local Clustering for Semi-supervised Learning

Generative Deep-Neural-Network Mixture Modeling with Semi-Supervised MinMax+EM Learning

Auto-TLDR; Semi-supervised Deep Neural Networks for Generative Mixture Modeling and Clustering

Abstract Slides Poster Similar

A Quantitative Evaluation Framework of Video De-Identification Methods

Sathya Bursic, Alessandro D'Amelio, Marco Granato, Giuliano Grossi, Raffaella Lanzarotti

Auto-TLDR; Face de-identification using photo-reality and facial expressions

Abstract Slides Poster Similar

Cam-Softmax for Discriminative Deep Feature Learning

Tamas Suveges, Stephen James Mckenna

Auto-TLDR; Cam-Softmax: A Generalisation of Activations and Softmax for Deep Feature Spaces

Abstract Slides Poster Similar

Phase Retrieval Using Conditional Generative Adversarial Networks

Tobias Uelwer, Alexander Oberstraß, Stefan Harmeling

Auto-TLDR; Conditional Generative Adversarial Networks for Phase Retrieval

Abstract Slides Poster Similar

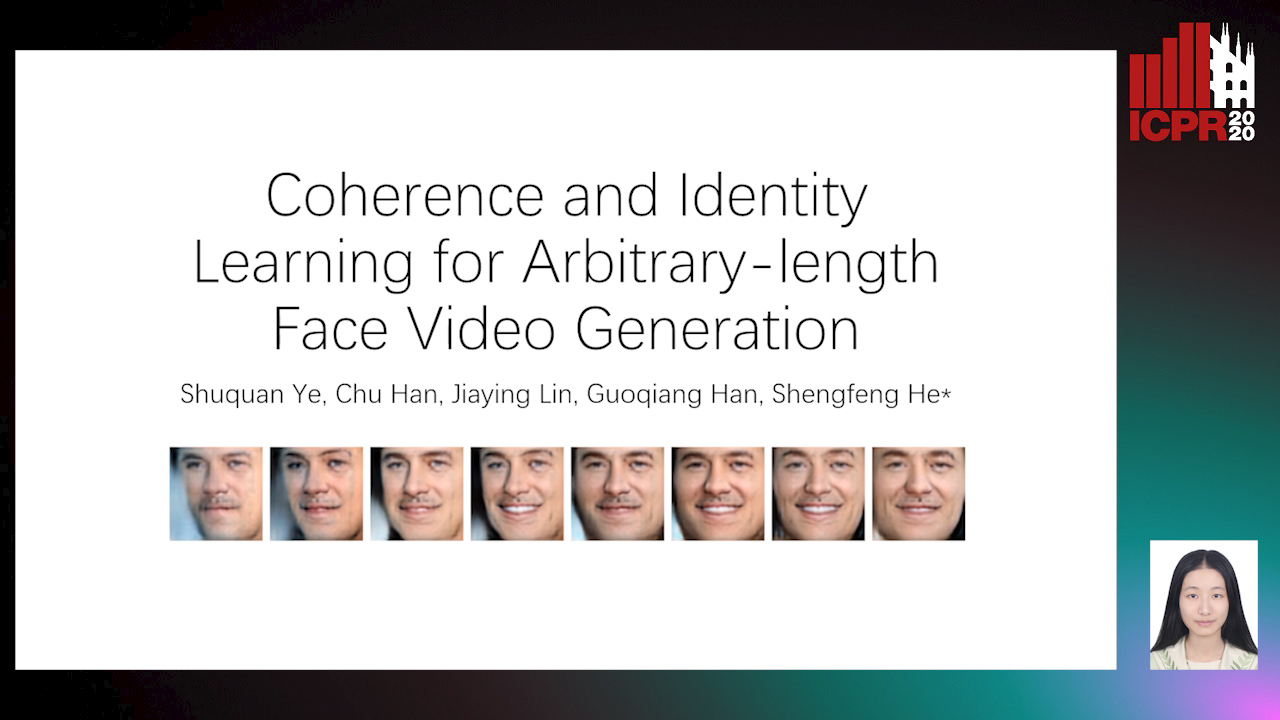

Coherence and Identity Learning for Arbitrary-Length Face Video Generation

Shuquan Ye, Chu Han, Jiaying Lin, Guoqiang Han, Shengfeng He

Auto-TLDR; Face Video Synthesis Using Identity-Aware GAN and Face Coherence Network

Abstract Slides Poster Similar

Galaxy Image Translation with Semi-Supervised Noise-Reconstructed Generative Adversarial Networks

Qiufan Lin, Dominique Fouchez, Jérôme Pasquet

Auto-TLDR; Semi-supervised Image Translation with Generative Adversarial Networks Using Paired and Unpaired Images

Abstract Slides Poster Similar

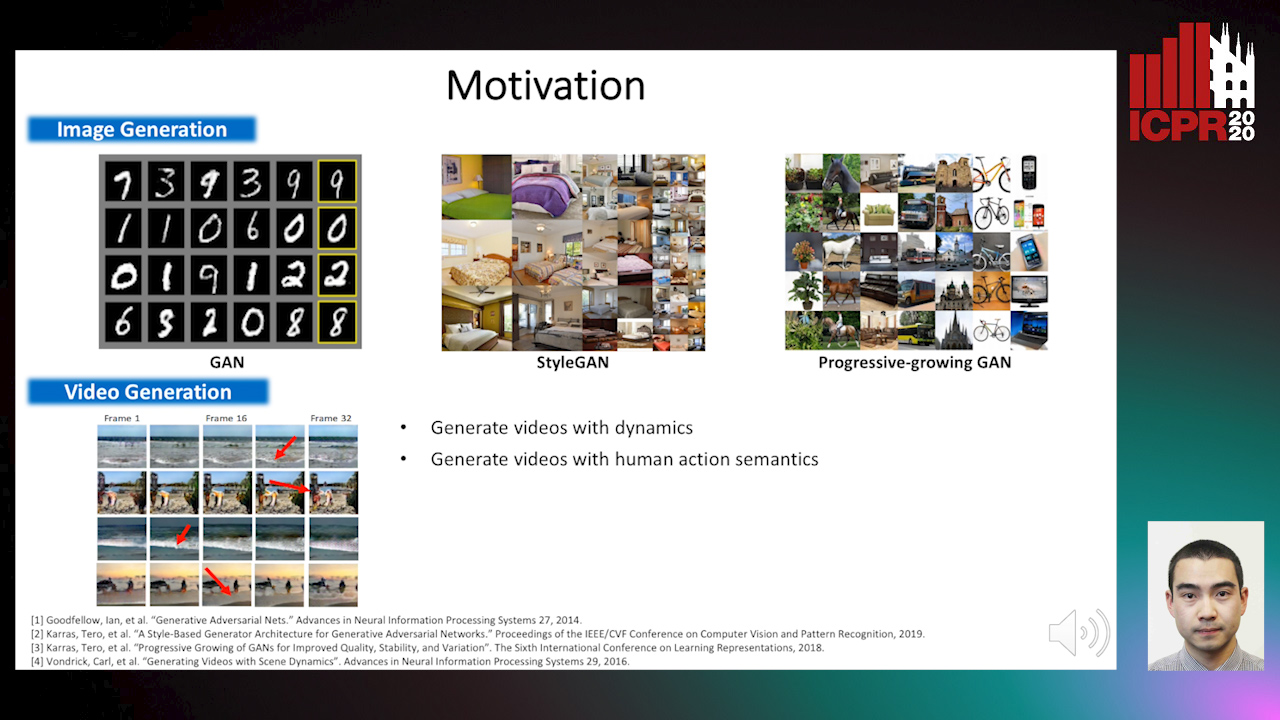

The Role of Cycle Consistency for Generating Better Human Action Videos from a Single Frame

Auto-TLDR; Generating Videos with Human Action Semantics using Cycle Constraints

Abstract Slides Poster Similar

Pose-Robust Face Recognition by Deep Meta Capsule Network-Based Equivariant Embedding

Fangyu Wu, Jeremy Simon Smith, Wenjin Lu, Bailing Zhang

Auto-TLDR; Deep Meta Capsule Network-based Equivariant Embedding Model for Pose-Robust Face Recognition