Local Facial Attribute Transfer through Inpainting

Ricard Durall,

Franz-Josef Pfreundt,

Janis Keuper

Auto-TLDR; Attribute Transfer Inpainting Generative Adversarial Network

Similar papers

High Resolution Face Age Editing

Xu Yao, Gilles Puy, Alasdair Newson, Yann Gousseau, Pierre Hellier

Auto-TLDR; An Encoder-Decoder Architecture for Face Age editing on High Resolution Images

Mask-Based Style-Controlled Image Synthesis Using a Mask Style Encoder

Jaehyeong Cho, Wataru Shimoda, Keiji Yanai

Auto-TLDR; Style-controlled Image Synthesis from Semantic Segmentation masks using GANs

Pixel-based Facial Expression Synthesis

Auto-TLDR; pixel-based facial expression synthesis using GANs

Semantic-Guided Inpainting Network for Complex Urban Scenes Manipulation

Pierfrancesco Ardino, Yahui Liu, Elisa Ricci, Bruno Lepri, Marco De Nadai

Auto-TLDR; Semantic-Guided Inpainting of Complex Urban Scene Using Semantic Segmentation and Generation

Image Inpainting with Contrastive Relation Network

Xiaoqiang Zhou, Junjie Li, Zilei Wang, Ran He, Tieniu Tan

Auto-TLDR; Two-Stage Inpainting with Graph-based Relation Network

Galaxy Image Translation with Semi-Supervised Noise-Reconstructed Generative Adversarial Networks

Qiufan Lin, Dominique Fouchez, Jérôme Pasquet

Auto-TLDR; Semi-supervised Image Translation with Generative Adversarial Networks Using Paired and Unpaired Images

Free-Form Image Inpainting Via Contrastive Attention Network

Xin Ma, Xiaoqiang Zhou, Huaibo Huang, Zhenhua Chai, Xiaolin Wei, Ran He

Auto-TLDR; Self-supervised Siamese inference for image inpainting

Continuous Learning of Face Attribute Synthesis

Ning Xin, Shaohui Xu, Fangzhe Nan, Xiaoli Dong, Weijun Li, Yuanzhou Yao

Auto-TLDR; Continuous Learning for Face Attribute Synthesis

Identity-Preserved Face Beauty Transformation with Conditional Generative Adversarial Networks

Auto-TLDR; Identity-preserved face beauty transformation using conditional GANs

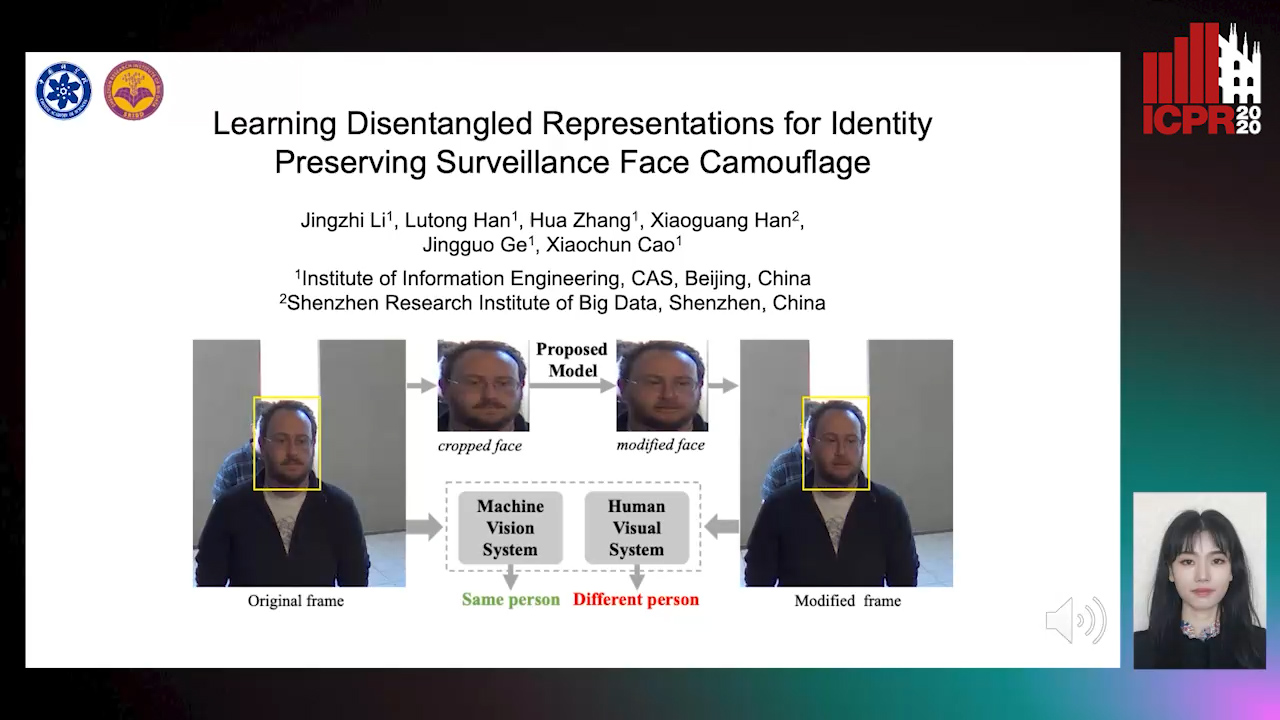

Learning Disentangled Representations for Identity Preserving Surveillance Face Camouflage

Jingzhi Li, Lutong Han, Hua Zhang, Xiaoguang Han, Jingguo Ge, Xiaochu Cao

Auto-TLDR; Individual Face Privacy under Surveillance Scenario with Multi-task Loss Function

GarmentGAN: Photo-Realistic Adversarial Fashion Transfer

Amir Hossein Raffiee, Michael Sollami

Auto-TLDR; GarmentGAN: A Generative Adversarial Network for Image-Based Garment Transfer

Cycle-Consistent Adversarial Networks and Fast Adaptive Bi-Dimensional Empirical Mode Decomposition for Style Transfer

Elissavet Batziou, Petros Alvanitopoulos, Konstantinos Ioannidis, Ioannis Patras, Stefanos Vrochidis, Ioannis Kompatsiaris

Auto-TLDR; FABEMD: Fast and Adaptive Bidimensional Empirical Mode Decomposition for Style Transfer on Images

Multi-Domain Image-To-Image Translation with Adaptive Inference Graph

The Phuc Nguyen, Stéphane Lathuiliere, Elisa Ricci

Auto-TLDR; Adaptive Graph Structure for Multi-Domain Image-to-Image Translation

Attributes Aware Face Generation with Generative Adversarial Networks

Zheng Yuan, Jie Zhang, Shiguang Shan, Xilin Chen

Auto-TLDR; AFGAN: A Generative Adversarial Network for Attributes Aware Face Image Generation

Detail Fusion GAN: High-Quality Translation for Unpaired Images with GAN-Based Data Augmentation

Ling Li, Yaochen Li, Chuan Wu, Hang Dong, Peilin Jiang, Fei Wang

Auto-TLDR; Data Augmentation with GAN-based Generative Adversarial Network

UCCTGAN: Unsupervised Clothing Color Transformation Generative Adversarial Network

Shuming Sun, Xiaoqiang Li, Jide Li

Auto-TLDR; An Unsupervised Clothing Color Transformation Generative Adversarial Network

Disentangled Representation Learning for Controllable Image Synthesis: An Information-Theoretic Perspective

Shichang Tang, Xu Zhou, Xuming He, Yi Ma

Auto-TLDR; Controllable Image Synthesis in Deep Generative Models using Variational Auto-Encoder

SECI-GAN: Semantic and Edge Completion for Dynamic Objects Removal

Francesco Pinto, Andrea Romanoni, Matteo Matteucci, Phil Torr

Auto-TLDR; SECI-GAN: Semantic and Edge Conditioned Inpainting Generative Adversarial Network

SATGAN: Augmenting Age Biased Dataset for Cross-Age Face Recognition

Wenshuang Liu, Wenting Chen, Yuanlue Zhu, Linlin Shen

Auto-TLDR; SATGAN: Stable Age Translation GAN for Cross-Age Face Recognition

A GAN-Based Blind Inpainting Method for Masonry Wall Images

Yahya Ibrahim, Balázs Nagy, Csaba Benedek

Auto-TLDR; An End-to-End Blind Inpainting Algorithm for Masonry Wall Images

AVAE: Adversarial Variational Auto Encoder

Antoine Plumerault, Hervé Le Borgne, Celine Hudelot

Auto-TLDR; Combining VAE and GAN for Realistic Image Generation

Unsupervised Face Manipulation Via Hallucination

Keerthy Kusumam, Enrique Sanchez, Georgios Tzimiropoulos

Auto-TLDR; Unpaired Face Image Manipulation using Autoencoders

Cascade Attention Guided Residue Learning GAN for Cross-Modal Translation

Bin Duan, Wei Wang, Hao Tang, Hugo Latapie, Yan Yan

Auto-TLDR; Cascade Attention-Guided Residue GAN for Cross-modal Audio-Visual Learning

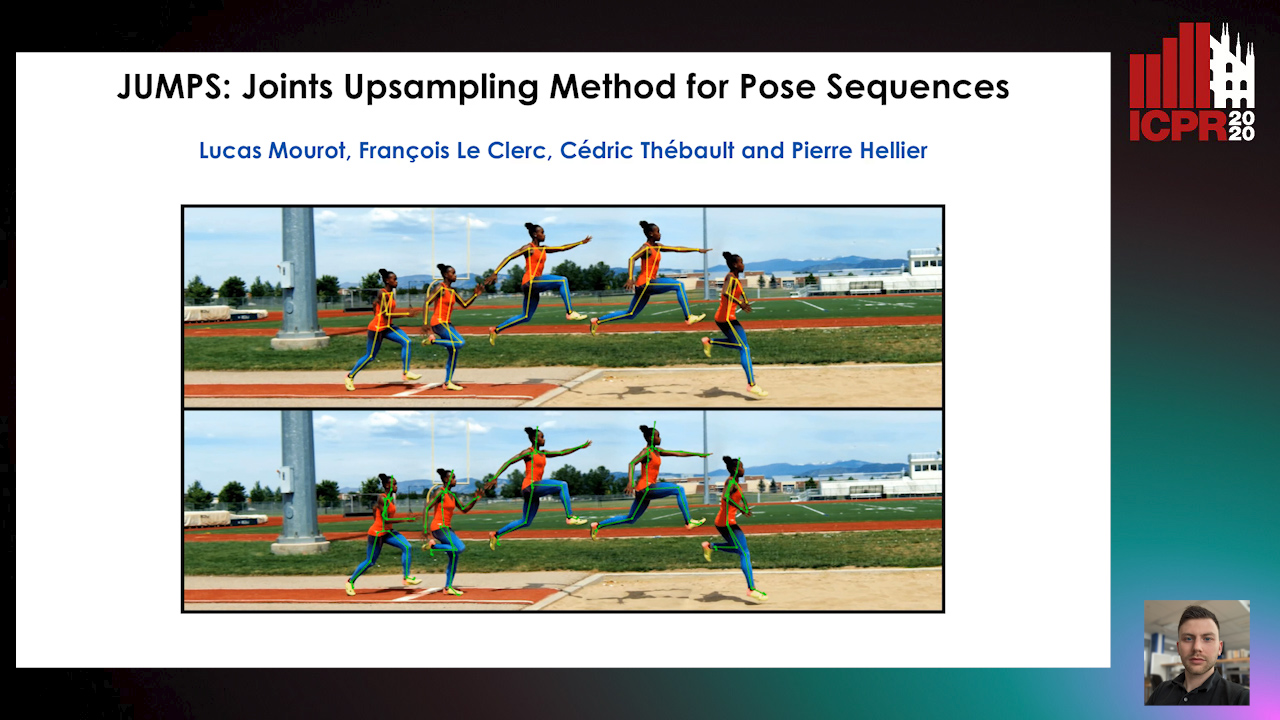

JUMPS: Joints Upsampling Method for Pose Sequences

Lucas Mourot, Francois Le Clerc, Cédric Thébault, Pierre Hellier

Auto-TLDR; JUMPS: Increasing the Number of Joints in 2D Pose Estimation and Recovering Occluded or Missing Joints

Stylized-Colorization for Line Arts

Tzu-Ting Fang, Minh Duc Vo, Akihiro Sugimoto, Shang-Hong Lai

Auto-TLDR; Stylized-colorization using GAN-based End-to-End Model for Anime

Dual-MTGAN: Stochastic and Deterministic Motion Transfer for Image-To-Video Synthesis

Fu-En Yang, Jing-Cheng Chang, Yuan-Hao Lee, Yu-Chiang Frank Wang

Auto-TLDR; Dual Motion Transfer GAN for Convolutional Neural Networks

Multi-Laplacian GAN with Edge Enhancement for Face Super Resolution

Auto-TLDR; Face Image Super-Resolution with Enhanced Edge Information

Local-Global Interactive Network for Face Age Transformation

Jie Song, Ping Wei, Huan Li, Yongchi Zhang, Nanning Zheng

Auto-TLDR; A Novel Local-Global Interaction Framework for Long-span Face Age Transformation

Augmented Cyclic Consistency Regularization for Unpaired Image-To-Image Translation

Takehiko Ohkawa, Naoto Inoue, Hirokatsu Kataoka, Nakamasa Inoue

Auto-TLDR; Augmented Cyclic Consistency Regularization for Unpaired Image-to-Image Translation

An Unsupervised Approach towards Varying Human Skin Tone Using Generative Adversarial Networks

Debapriya Roy, Diganta Mukherjee, Bhabatosh Chanda

Auto-TLDR; Unsupervised Skin Tone Change Using Augmented Reality Based Models

Learning Low-Shot Generative Networks for Cross-Domain Data

Hsuan-Kai Kao, Cheng-Che Lee, Wei-Chen Chiu

Auto-TLDR; Learning Generators for Cross-Domain Data under Low-Shot Learning

Learning Image Inpainting from Incomplete Images using Self-Supervision

Sriram Yenamandra, Rohit Kumar Jena, Ansh Khurana, Suyash Awate

Auto-TLDR; Unsupervised Deep Neural Network for Semantic Image Inpainting

Attention2AngioGAN: Synthesizing Fluorescein Angiography from Retinal Fundus Images Using Generative Adversarial Networks

Sharif Amit Kamran, Khondker Fariha Hossain, Alireza Tavakkoli, Stewart Lee Zuckerbrod

Auto-TLDR; Fluorescein Angiography from Fundus Images using Attention-based Generative Networks

Phase Retrieval Using Conditional Generative Adversarial Networks

Tobias Uelwer, Alexander Oberstraß, Stefan Harmeling

Auto-TLDR; Conditional Generative Adversarial Networks for Phase Retrieval

Controllable Face Aging

Auto-TLDR; A controllable face aging method via attribute disentanglement generative adversarial network

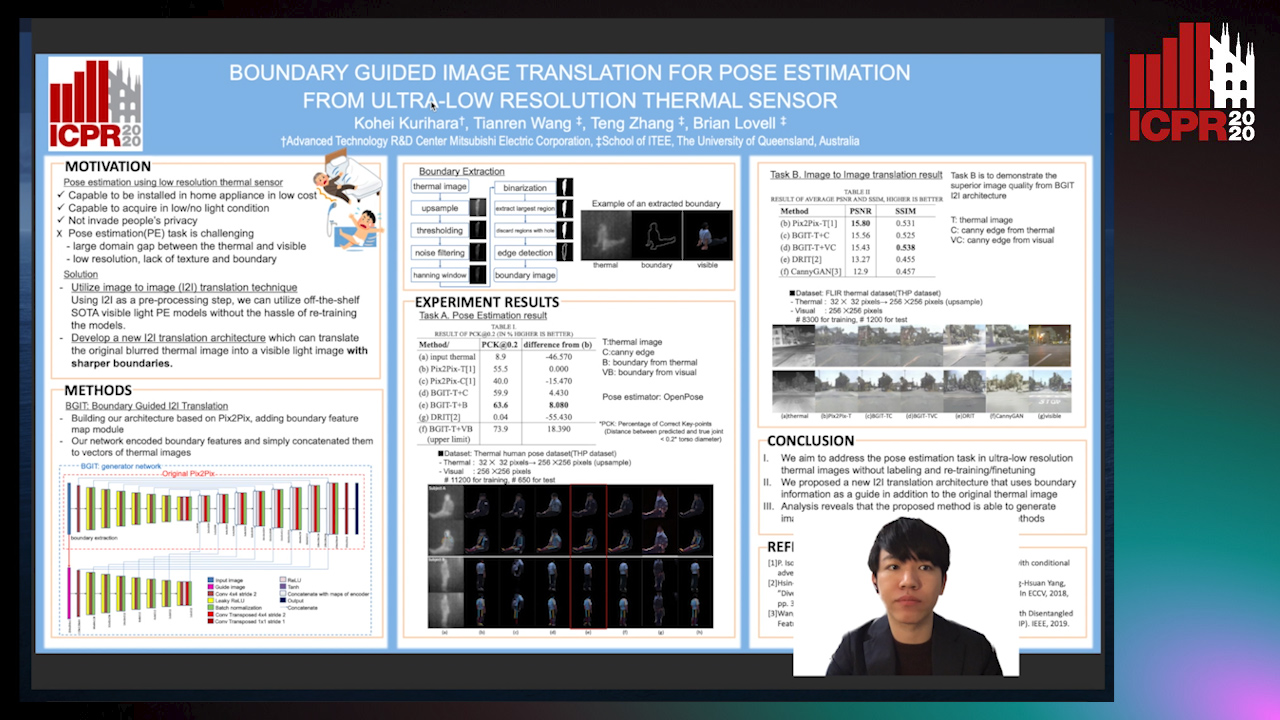

Boundary Guided Image Translation for Pose Estimation from Ultra-Low Resolution Thermal Sensor

Kohei Kurihara, Tianren Wang, Teng Zhang, Brian Carrington Lovell

Auto-TLDR; Pose Estimation on Low-Resolution Thermal Images Using Image-to-Image Translation Architecture

Future Urban Scenes Generation through Vehicles Synthesis

Alessandro Simoni, Luca Bergamini, Andrea Palazzi, Simone Calderara, Rita Cucchiara

Auto-TLDR; Predicting the Future of an Urban Scene with a Novel View Synthesis Paradigm

Unsupervised Contrastive Photo-To-Caricature Translation Based on Auto-Distortion

Yuhe Ding, Xin Ma, Mandi Luo, Aihua Zheng, Ran He

Auto-TLDR; Unsupervised contrastive photo-to-caricature translation with style loss

Age Gap Reducer-GAN for Recognizing Age-Separated Faces

Daksha Yadav, Naman Kohli, Mayank Vatsa, Richa Singh, Afzel Noore

Auto-TLDR; Generative Adversarial Network for Age-separated Face Recognition

Disentangle, Assemble, and Synthesize: Unsupervised Learning to Disentangle Appearance and Location

Hiroaki Aizawa, Hirokatsu Kataoka, Yutaka Satoh, Kunihito Kato

Auto-TLDR; Generative Adversarial Networks with Structural Constraint for controllability of latent space

GAP: Quantifying the Generative Adversarial Set and Class Feature Applicability of Deep Neural Networks

Edward Collier, Supratik Mukhopadhyay

Auto-TLDR; Approximating Adversarial Learning in Deep Neural Networks Using Set and Class Adversaries

Efficient Shadow Detection and Removal Using Synthetic Data with Domain Adaptation

Rui Guo, Babajide Ayinde, Hao Sun

Auto-TLDR; Shadow Detection and Removal with Domain Adaptation and Synthetic Image Database

SIDGAN: Single Image Dehazing without Paired Supervision

Pan Wei, Xin Wang, Lei Wang, Ji Xiang, Zihan Wang

Auto-TLDR; DehazeGAN: An End-to-End Generative Adversarial Network for Image Dehazing

Robust Pedestrian Detection in Thermal Imagery Using Synthesized Images

My Kieu, Lorenzo Berlincioni, Leonardo Galteri, Marco Bertini, Andrew Bagdanov, Alberto Del Bimbo

Auto-TLDR; Improving Pedestrian Detection in the thermal domain using Generative Adversarial Network

Data Augmentation Via Mixed Class Interpolation Using Cycle-Consistent Generative Adversarial Networks Applied to Cross-Domain Imagery

Hiroshi Sasaki, Chris G. Willcocks, Toby Breckon

Auto-TLDR; C2GMA: A Generative Domain Transfer Model for Non-visible Domain Classification

Combining GANs and AutoEncoders for Efficient Anomaly Detection

Fabio Carrara, Giuseppe Amato, Luca Brombin, Fabrizio Falchi, Claudio Gennaro

Auto-TLDR; CBIGAN: Anomaly Detection in Images with Consistency Constrained BiGAN

Unsupervised Multi-Task Domain Adaptation

Auto-TLDR; Unsupervised Domain Adaptation with Multi-task Learning for Image Recognition

Towards Artifacts-Free Image Defogging

Gabriele Graffieti, Davide Maltoni

Auto-TLDR; CurL-Defog: Learning Based Defogging with CycleGAN and HArD