SECI-GAN: Semantic and Edge Completion for Dynamic Objects Removal

Francesco Pinto,

Andrea Romanoni,

Matteo Matteucci,

Phil Torr

Auto-TLDR; SECI-GAN: Semantic and Edge Conditioned Inpainting Generative Adversarial Network

Similar papers

Semantic-Guided Inpainting Network for Complex Urban Scenes Manipulation

Pierfrancesco Ardino, Yahui Liu, Elisa Ricci, Bruno Lepri, Marco De Nadai

Auto-TLDR; Semantic-Guided Inpainting of Complex Urban Scene Using Semantic Segmentation and Generation

Free-Form Image Inpainting Via Contrastive Attention Network

Xin Ma, Xiaoqiang Zhou, Huaibo Huang, Zhenhua Chai, Xiaolin Wei, Ran He

Auto-TLDR; Self-supervised Siamese inference for image inpainting

Image Inpainting with Contrastive Relation Network

Xiaoqiang Zhou, Junjie Li, Zilei Wang, Ran He, Tieniu Tan

Auto-TLDR; Two-Stage Inpainting with Graph-based Relation Network

A GAN-Based Blind Inpainting Method for Masonry Wall Images

Yahya Ibrahim, Balázs Nagy, Csaba Benedek

Auto-TLDR; An End-to-End Blind Inpainting Algorithm for Masonry Wall Images

Local Facial Attribute Transfer through Inpainting

Ricard Durall, Franz-Josef Pfreundt, Janis Keuper

Auto-TLDR; Attribute Transfer Inpainting Generative Adversarial Network

Future Urban Scenes Generation through Vehicles Synthesis

Alessandro Simoni, Luca Bergamini, Andrea Palazzi, Simone Calderara, Rita Cucchiara

Auto-TLDR; Predicting the Future of an Urban Scene with a Novel View Synthesis Paradigm

Mask-Based Style-Controlled Image Synthesis Using a Mask Style Encoder

Jaehyeong Cho, Wataru Shimoda, Keiji Yanai

Auto-TLDR; Style-controlled Image Synthesis from Semantic Segmentation masks using GANs

Global-Local Attention Network for Semantic Segmentation in Aerial Images

Minglong Li, Lianlei Shan, Weiqiang Wang

Auto-TLDR; GLANet: Global-Local Attention Network for Semantic Segmentation

A NoGAN Approach for Image and Video Restoration and Compression Artifact Removal

Filippo Mameli, Marco Bertini, Leonardo Galteri, Alberto Del Bimbo

Auto-TLDR; Deep Neural Network for Image and Video Compression Artifact Removal and Restoration

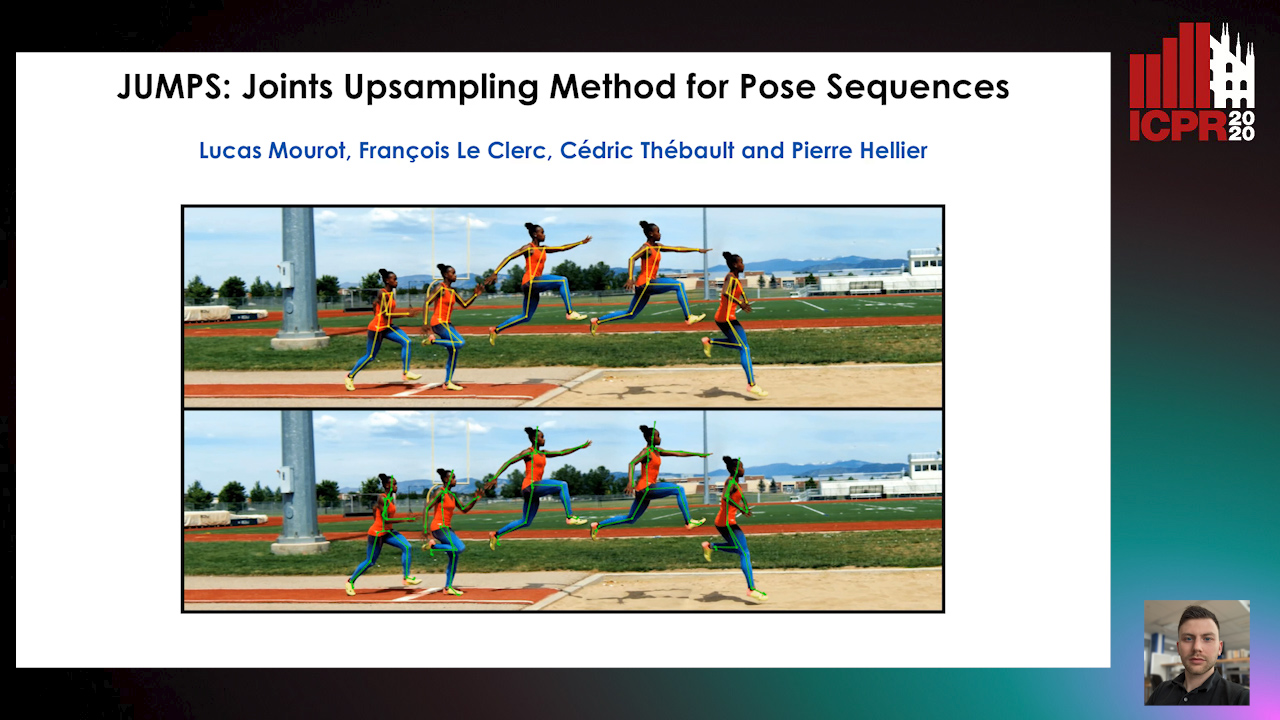

JUMPS: Joints Upsampling Method for Pose Sequences

Lucas Mourot, Francois Le Clerc, Cédric Thébault, Pierre Hellier

Auto-TLDR; JUMPS: Increasing the Number of Joints in 2D Pose Estimation and Recovering Occluded or Missing Joints

Towards Artifacts-Free Image Defogging

Gabriele Graffieti, Davide Maltoni

Auto-TLDR; CurL-Defog: Learning Based Defogging with CycleGAN and HArD

Learning Image Inpainting from Incomplete Images using Self-Supervision

Sriram Yenamandra, Rohit Kumar Jena, Ansh Khurana, Suyash Awate

Auto-TLDR; Unsupervised Deep Neural Network for Semantic Image Inpainting

Boosting High-Level Vision with Joint Compression Artifacts Reduction and Super-Resolution

Xiaoyu Xiang, Qian Lin, Jan Allebach

Auto-TLDR; A Context-Aware Joint CAR and SR Neural Network for High-Resolution Text Recognition and Face Detection

SIDGAN: Single Image Dehazing without Paired Supervision

Pan Wei, Xin Wang, Lei Wang, Ji Xiang, Zihan Wang

Auto-TLDR; DehazeGAN: An End-to-End Generative Adversarial Network for Image Dehazing

Thermal Image Enhancement Using Generative Adversarial Network for Pedestrian Detection

Mohamed Amine Marnissi, Hajer Fradi, Anis Sahbani, Najoua Essoukri Ben Amara

Auto-TLDR; Improving Visual Quality of Infrared Images for Pedestrian Detection Using Generative Adversarial Network

Hybrid Approach for 3D Head Reconstruction: Using Neural Networks and Visual Geometry

Oussema Bouafif, Bogdan Khomutenko, Mohammed Daoudi

Auto-TLDR; Recovering 3D Head Geometry from a Single Image using Deep Learning and Geometric Techniques

Explorable Tone Mapping Operators

Su Chien-Chuan, Yu-Lun Liu, Hung Jin Lin, Ren Wang, Chia-Ping Chen, Yu-Lin Chang, Soo-Chang Pei

Auto-TLDR; Learning-based multimodal tone-mapping from HDR images

Multi-Laplacian GAN with Edge Enhancement for Face Super Resolution

Auto-TLDR; Face Image Super-Resolution with Enhanced Edge Information

UCCTGAN: Unsupervised Clothing Color Transformation Generative Adversarial Network

Shuming Sun, Xiaoqiang Li, Jide Li

Auto-TLDR; An Unsupervised Clothing Color Transformation Generative Adversarial Network

Machine-Learned Regularization and Polygonization of Building Segmentation Masks

Stefano Zorzi, Ksenia Bittner, Friedrich Fraundorfer

Auto-TLDR; Automatic Regularization and Polygonization of Building Segmentation masks using Generative Adversarial Network

Adaptive Image Compression Using GAN Based Semantic-Perceptual Residual Compensation

Ruojing Wang, Zitang Sun, Sei-Ichiro Kamata, Weili Chen

Auto-TLDR; Adaptive Image Compression using GAN based Semantic-Perceptual Residual Compensation

EdgeNet: Semantic Scene Completion from a Single RGB-D Image

Aloisio Dourado, Teofilo De Campos, Adrian Hilton, Hansung Kim

Auto-TLDR; Semantic Scene Completion using 3D Depth and RGB Information

Towards Efficient 3D Point Cloud Scene Completion Via Novel Depth View Synthesis

Haiyan Wang, Liang Yang, Xuejian Rong, Ying-Li Tian

Auto-TLDR; 3D Point Cloud Completion with Depth View Synthesis

Few-Shot Font Generation with Deep Metric Learning

Haruka Aoki, Koki Tsubota, Hikaru Ikuta, Kiyoharu Aizawa

Auto-TLDR; Deep Metric Learning for Japanese Typographic Font Synthesis

Exploring Severe Occlusion: Multi-Person 3D Pose Estimation with Gated Convolution

Renshu Gu, Gaoang Wang, Jenq-Neng Hwang

Auto-TLDR; 3D Human Pose Estimation for Multi-Human Videos with Occlusion

Boundary-Aware Graph Convolution for Semantic Segmentation

Hanzhe Hu, Jinshi Cui, Hongbin Zha

Auto-TLDR; Boundary-Aware Graph Convolution for Semantic Segmentation

Edge-Aware Monocular Dense Depth Estimation with Morphology

Zhi Li, Xiaoyang Zhu, Haitao Yu, Qi Zhang, Yongshi Jiang

Auto-TLDR; Spatio-Temporally Smooth Dense Depth Maps Using Only a CPU

Dynamic Guided Network for Monocular Depth Estimation

Xiaoxia Xing, Yinghao Cai, Yiping Yang, Dayong Wen

Auto-TLDR; DGNet: Dynamic Guidance Upsampling for Self-attention-Decoding for Monocular Depth Estimation

FatNet: A Feature-Attentive Network for 3D Point Cloud Processing

Chaitanya Kaul, Nick Pears, Suresh Manandhar

Auto-TLDR; Feature-Attentive Neural Networks for Point Cloud Classification and Segmentation

Attention2AngioGAN: Synthesizing Fluorescein Angiography from Retinal Fundus Images Using Generative Adversarial Networks

Sharif Amit Kamran, Khondker Fariha Hossain, Alireza Tavakkoli, Stewart Lee Zuckerbrod

Auto-TLDR; Fluorescein Angiography from Fundus Images using Attention-based Generative Networks

GarmentGAN: Photo-Realistic Adversarial Fashion Transfer

Amir Hossein Raffiee, Michael Sollami

Auto-TLDR; GarmentGAN: A Generative Adversarial Network for Image-Based Garment Transfer

Deep Realistic Novel View Generation for City-Scale Aerial Images

Koundinya Nouduri, Ke Gao, Joshua Fraser, Shizeng Yao, Hadi Aliakbarpour, Filiz Bunyak, Kannappan Palaniappan

Auto-TLDR; End-to-End 3D Voxel Renderer for Multi-View Stereo Data Generation and Evaluation

Novel View Synthesis from a 6-DoF Pose by Two-Stage Networks

Xiang Guo, Bo Li, Yuchao Dai, Tongxin Zhang, Hui Deng

Auto-TLDR; Novel View Synthesis from a 6-DoF Pose Using Generative Adversarial Network

GSTO: Gated Scale-Transfer Operation for Multi-Scale Feature Learning in Semantic Segmentation

Zhuoying Wang, Yongtao Wang, Zhi Tang, Yangyan Li, Ying Chen, Haibin Ling, Weisi Lin

Auto-TLDR; Gated Scale-Transfer Operation for Semantic Segmentation

Improved anomaly detection by training an autoencoder with skip connections on images corrupted with Stain-shaped noise

Anne-Sophie Collin, Christophe De Vleeschouwer

Auto-TLDR; Autoencoder with Skip Connections for Anomaly Detection

Detail Fusion GAN: High-Quality Translation for Unpaired Images with GAN-Based Data Augmentation

Ling Li, Yaochen Li, Chuan Wu, Hang Dong, Peilin Jiang, Fei Wang

Auto-TLDR; Data Augmentation with GAN-based Generative Adversarial Network

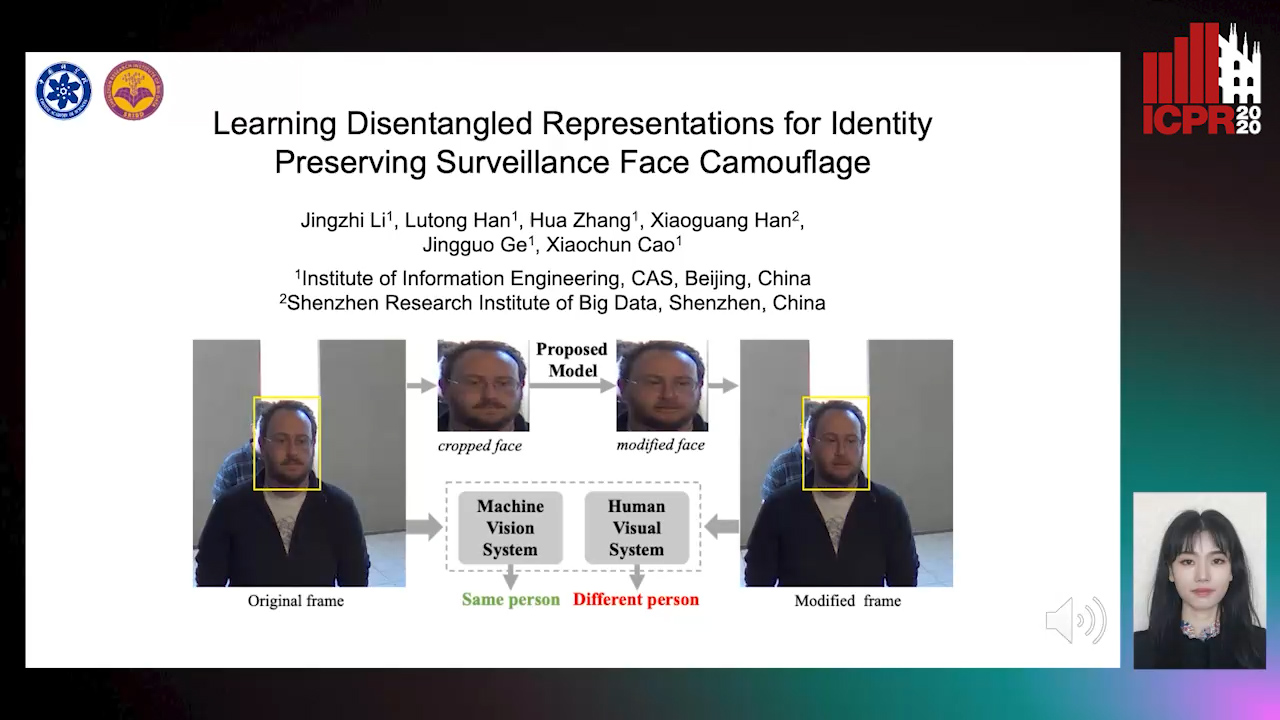

Learning Disentangled Representations for Identity Preserving Surveillance Face Camouflage

Jingzhi Li, Lutong Han, Hua Zhang, Xiaoguang Han, Jingguo Ge, Xiaochu Cao

Auto-TLDR; Individual Face Privacy under Surveillance Scenario with Multi-task Loss Function

3D Semantic Labeling of Photogrammetry Meshes Based on Active Learning

Mengqi Rong, Shuhan Shen, Zhanyi Hu

Auto-TLDR; 3D Semantic Expression of Urban Scenes Based on Active Learning

Selective Kernel and Motion-Emphasized Loss Based Attention-Guided Network for HDR Imaging of Dynamic Scenes

Yipeng Deng, Qin Liu, Takeshi Ikenaga

Auto-TLDR; SK-AHDRNet: A Deep Network with attention module and motion-emphasized loss function to produce ghost-free HDR images

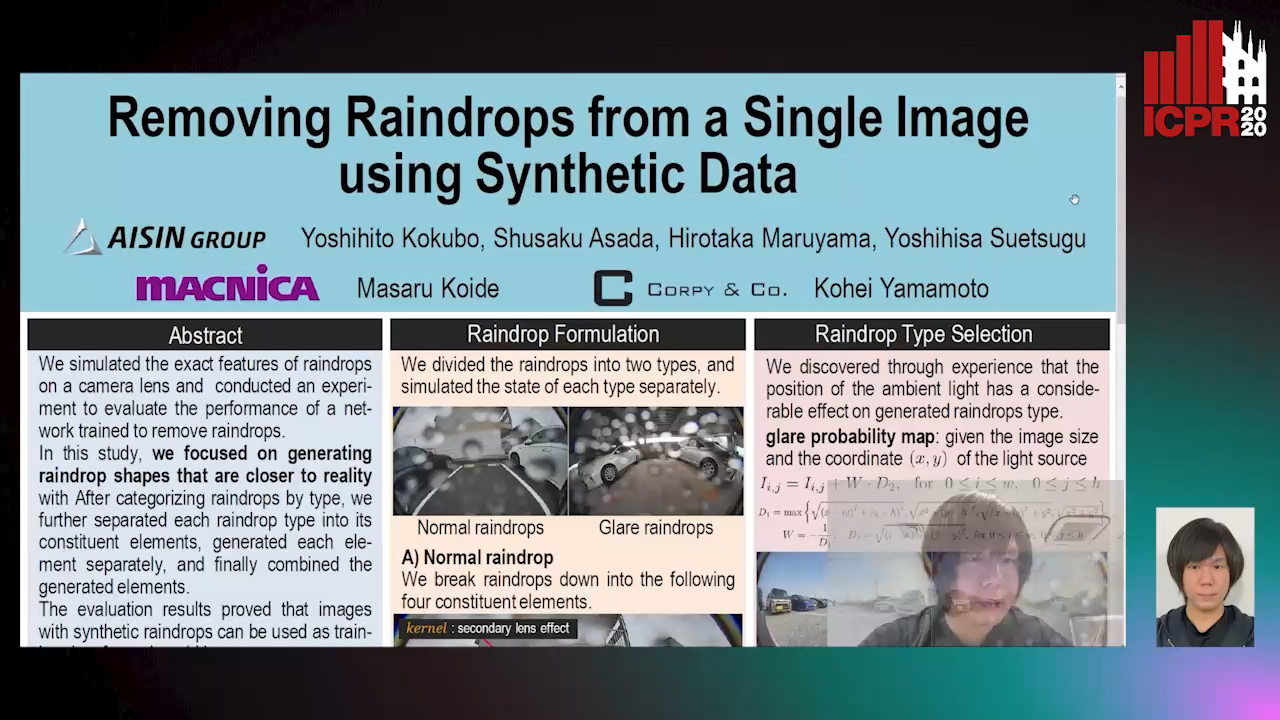

Removing Raindrops from a Single Image Using Synthetic Data

Yoshihito Kokubo, Shusaku Asada, Hirotaka Maruyama, Masaru Koide, Kohei Yamamoto, Yoshihisa Suetsugu

Auto-TLDR; Raindrop Removal Using Synthetic Raindrop Data

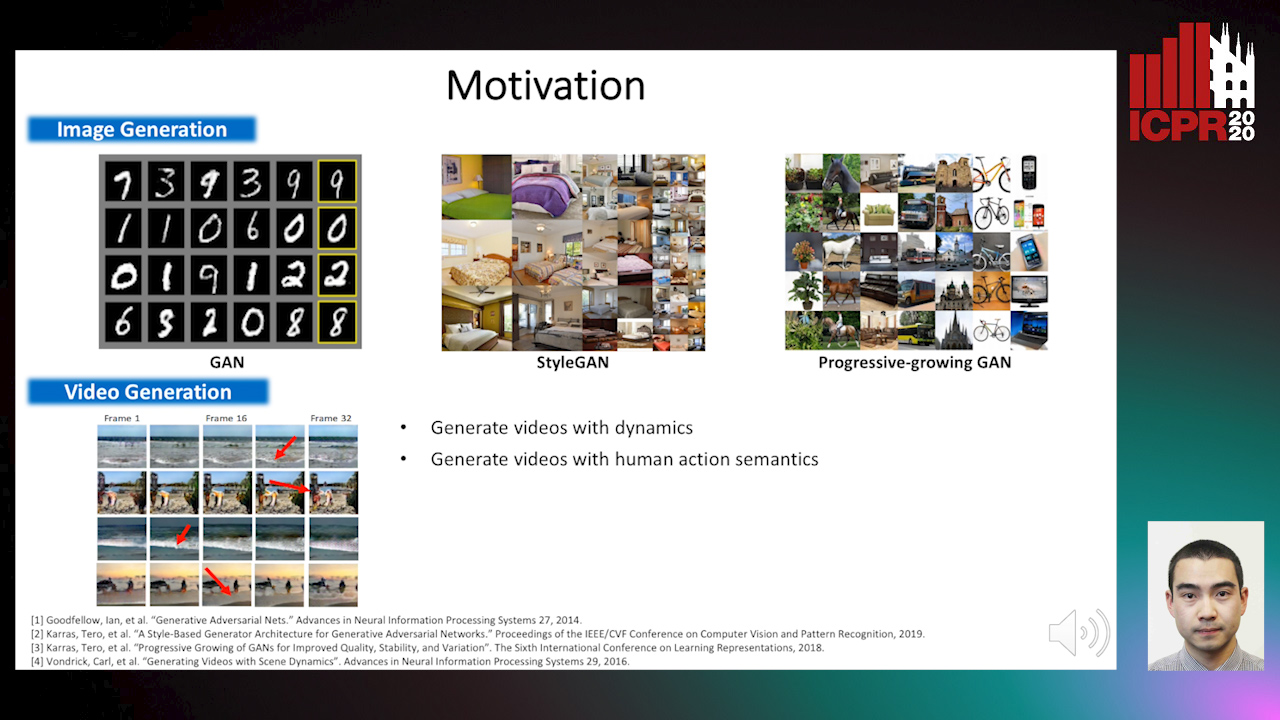

The Role of Cycle Consistency for Generating Better Human Action Videos from a Single Frame

Auto-TLDR; Generating Videos with Human Action Semantics using Cycle Constraints

Cycle-Consistent Adversarial Networks and Fast Adaptive Bi-Dimensional Empirical Mode Decomposition for Style Transfer

Elissavet Batziou, Petros Alvanitopoulos, Konstantinos Ioannidis, Ioannis Patras, Stefanos Vrochidis, Ioannis Kompatsiaris

Auto-TLDR; FABEMD: Fast and Adaptive Bidimensional Empirical Mode Decomposition for Style Transfer on Images

Enhancing Semantic Segmentation of Aerial Images with Inhibitory Neurons

Ihsan Ullah, Sean Reilly, Michael Madden

Auto-TLDR; Lateral Inhibition in Deep Neural Networks for Object Recognition and Semantic Segmentation

Deep Photo Relighting by Integrating Both 2D and 3D Lighting Information

Takashi Machida, Satoru Nakanishi

Auto-TLDR; DPR: Deep Photorelighting for Image Detection/Classification and Data Augmentation

Dual-MTGAN: Stochastic and Deterministic Motion Transfer for Image-To-Video Synthesis

Fu-En Yang, Jing-Cheng Chang, Yuan-Hao Lee, Yu-Chiang Frank Wang

Auto-TLDR; Dual Motion Transfer GAN for Convolutional Neural Networks

ESResNet: Environmental Sound Classification Based on Visual Domain Models

Andrey Guzhov, Federico Raue, Jörn Hees, Andreas Dengel

Auto-TLDR; Environmental Sound Classification with Short-Time Fourier Transform Spectrograms

Multiple Future Prediction Leveraging Synthetic Trajectories

Lorenzo Berlincioni, Federico Becattini, Lorenzo Seidenari, Alberto Del Bimbo

Auto-TLDR; Synthetic Trajectory Prediction using Markov Chains

Stylized-Colorization for Line Arts

Tzu-Ting Fang, Minh Duc Vo, Akihiro Sugimoto, Shang-Hong Lai

Auto-TLDR; Stylized-colorization using GAN-based End-to-End Model for Anime
