AVAE: Adversarial Variational Auto Encoder

Antoine Plumerault, Hervé Le Borgne, Celine Hudelot

Auto-TLDR; Combining VAE and GAN for Realistic Image Generation

Similar papers

Disentangled Representation Learning for Controllable Image Synthesis: An Information-Theoretic Perspective

Shichang Tang, Xu Zhou, Xuming He, Yi Ma

Auto-TLDR; Controllable Image Synthesis in Deep Generative Models using Variational Auto-Encoder

GAN-Based Gaussian Mixture Model Responsibility Learning

Wanming Huang, Yi Da Xu, Shuai Jiang, Xuan Liang, Ian Oppermann

Auto-TLDR; Posterior Consistency Module for Gaussian Mixture Model

Mutual Information Based Method for Unsupervised Disentanglement of Video Representation

Aditya Sreekar P, Ujjwal Tiwari, Anoop Namboodiri

Auto-TLDR; MIPAE: Mutual Information Predictive Auto-Encoder for Video Prediction

Reducing the Variance of Variational Estimates of Mutual Information by Limiting the Critic's Hypothesis Space to RKHS

Aditya Sreekar P, Ujjwal Tiwari, Anoop Namboodiri

Auto-TLDR; Mutual Information Estimation from Variational Lower Bounds Using a Critic's Hypothesis Space

Local Facial Attribute Transfer through Inpainting

Ricard Durall, Franz-Josef Pfreundt, Janis Keuper

Auto-TLDR; Attribute Transfer Inpainting Generative Adversarial Network

Phase Retrieval Using Conditional Generative Adversarial Networks

Tobias Uelwer, Alexander Oberstraß, Stefan Harmeling

Auto-TLDR; Conditional Generative Adversarial Networks for Phase Retrieval

Combining GANs and AutoEncoders for Efficient Anomaly Detection

Fabio Carrara, Giuseppe Amato, Luca Brombin, Fabrizio Falchi, Claudio Gennaro

Auto-TLDR; CBIGAN: Anomaly Detection in Images with Consistency Constrained BiGAN

A Joint Representation Learning and Feature Modeling Approach for One-Class Recognition

Pramuditha Perera, Vishal Patel

Auto-TLDR; Combining Generative Features and One-Class Classification for Effective One-class Recognition

Learning Low-Shot Generative Networks for Cross-Domain Data

Hsuan-Kai Kao, Cheng-Che Lee, Wei-Chen Chiu

Auto-TLDR; Learning Generators for Cross-Domain Data under Low-Shot Learning

Semantic-Guided Inpainting Network for Complex Urban Scenes Manipulation

Pierfrancesco Ardino, Yahui Liu, Elisa Ricci, Bruno Lepri, Marco De Nadai

Auto-TLDR; Semantic-Guided Inpainting of Complex Urban Scene Using Semantic Segmentation and Generation

IDA-GAN: A Novel Imbalanced Data Augmentation GAN

Auto-TLDR; IDA-GAN: Generative Adversarial Networks for Imbalanced Data Augmentation

Unsupervised Face Manipulation Via Hallucination

Keerthy Kusumam, Enrique Sanchez, Georgios Tzimiropoulos

Auto-TLDR; Unpaired Face Image Manipulation using Autoencoders

Pretraining Image Encoders without Reconstruction Via Feature Prediction Loss

Gustav Grund Pihlgren, Fredrik Sandin, Marcus Liwicki

Auto-TLDR; Feature Prediction Loss for Autoencoder-based Pretraining of Image Encoders

Variational Deep Embedding Clustering by Augmented Mutual Information Maximization

Qiang Ji, Yanfeng Sun, Yongli Hu, Baocai Yin

Auto-TLDR; Clustering by Augmented Mutual Information maximization for Deep Embedding

High Resolution Face Age Editing

Xu Yao, Gilles Puy, Alasdair Newson, Yann Gousseau, Pierre Hellier

Auto-TLDR; An Encoder-Decoder Architecture for Face Age editing on High Resolution Images

Generative Latent Implicit Conditional Optimization When Learning from Small Sample

Auto-TLDR; GLICO: Generative Latent Implicit Conditional Optimization for Small Sample Learning

Galaxy Image Translation with Semi-Supervised Noise-Reconstructed Generative Adversarial Networks

Qiufan Lin, Dominique Fouchez, Jérôme Pasquet

Auto-TLDR; Semi-supervised Image Translation with Generative Adversarial Networks Using Paired and Unpaired Images

Ω-GAN: Object Manifold Embedding GAN for Image Generation by Disentangling Parameters into Pose and Shape Manifolds

Yasutomo Kawanishi, Daisuke Deguchi, Ichiro Ide, Hiroshi Murase

Auto-TLDR; Object Manifold Embedding GAN with Parametric Sampling and Object Identity Loss

Multi-Domain Image-To-Image Translation with Adaptive Inference Graph

The Phuc Nguyen, Stéphane Lathuiliere, Elisa Ricci

Auto-TLDR; Adaptive Graph Structure for Multi-Domain Image-to-Image Translation

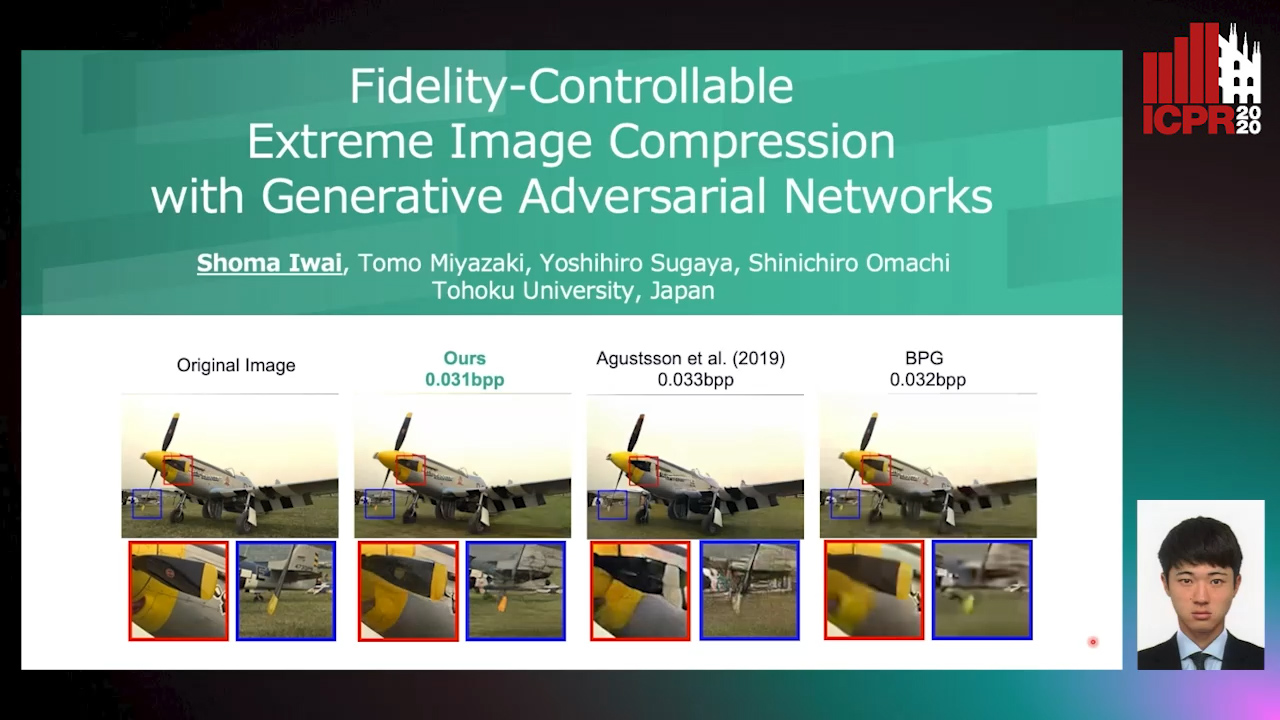

Fidelity-Controllable Extreme Image Compression with Generative Adversarial Networks

Shoma Iwai, Tomo Miyazaki, Yoshihiro Sugaya, Shinichiro Omachi

Auto-TLDR; GAN-based Image Compression at Low Bitrates

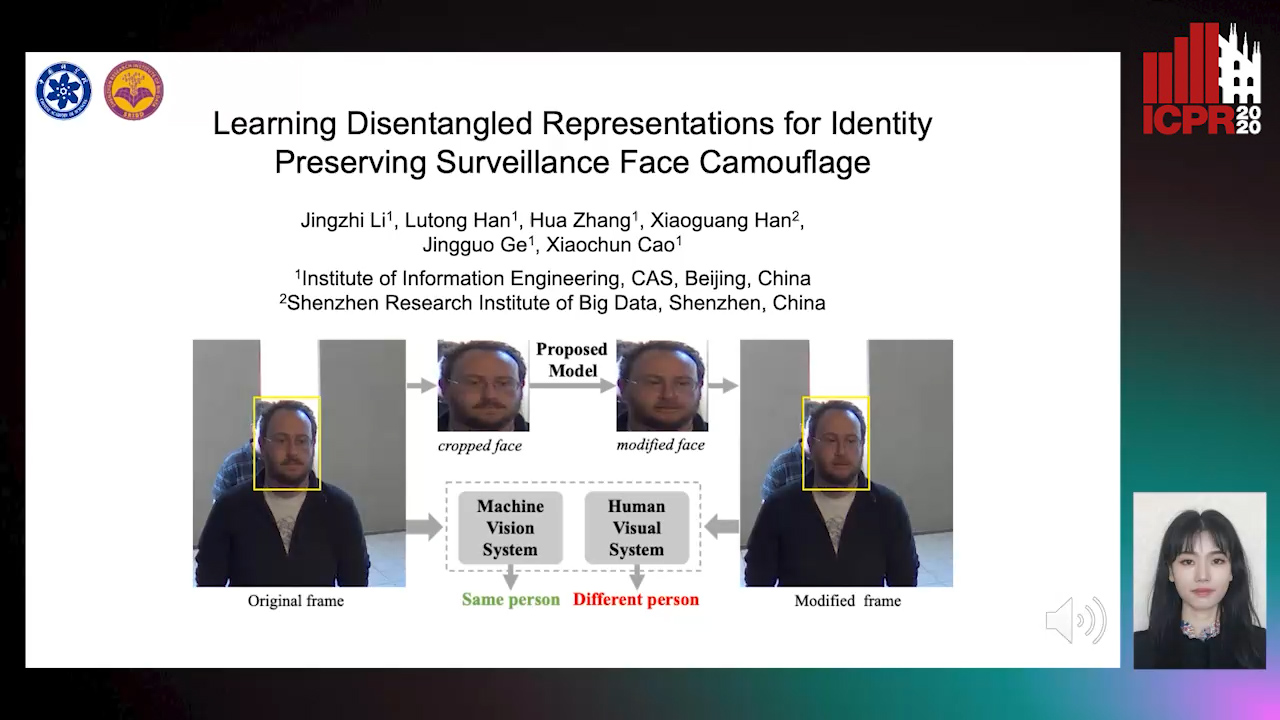

Learning Disentangled Representations for Identity Preserving Surveillance Face Camouflage

Jingzhi Li, Lutong Han, Hua Zhang, Xiaoguang Han, Jingguo Ge, Xiaochu Cao

Auto-TLDR; Individual Face Privacy under Surveillance Scenario with Multi-task Loss Function

On the Evaluation of Generative Adversarial Networks by Discriminative Models

Amirsina Torfi, Mohammadreza Beyki, Edward Alan Fox

Auto-TLDR; Domain-agnostic GAN Evaluation with Siamese Neural Networks

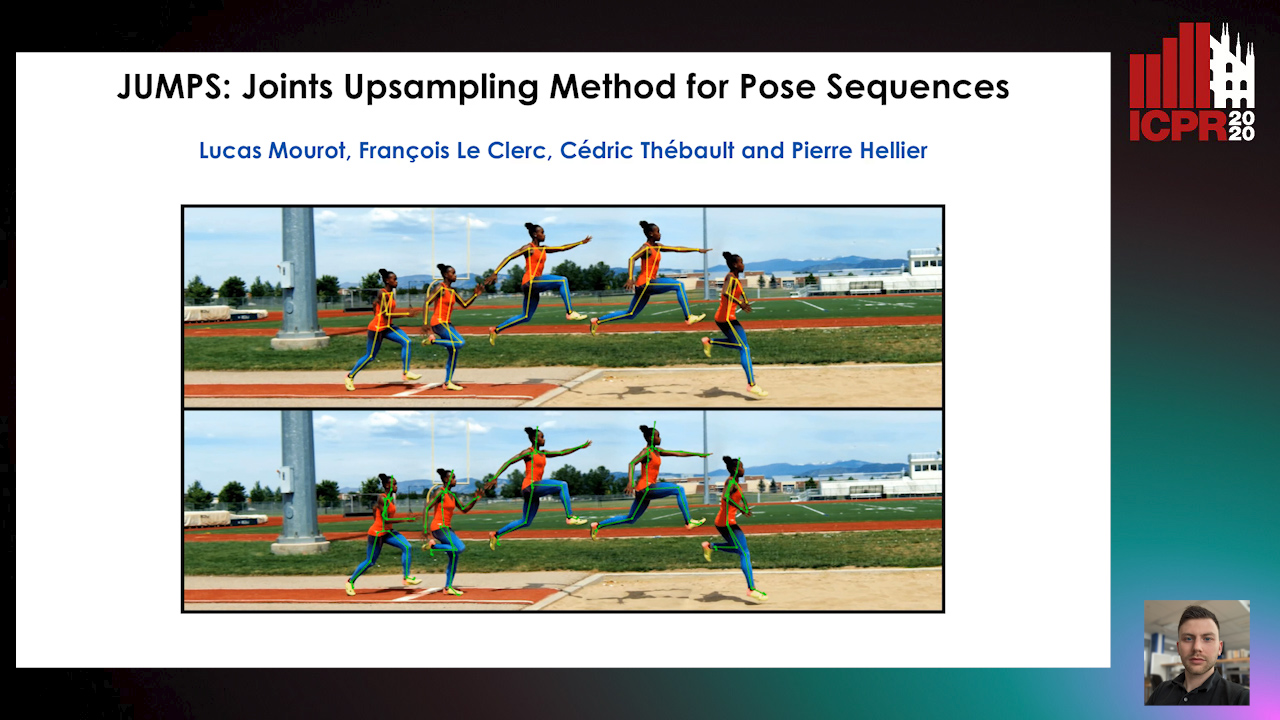

JUMPS: Joints Upsampling Method for Pose Sequences

Lucas Mourot, Francois Le Clerc, Cédric Thébault, Pierre Hellier

Auto-TLDR; JUMPS: Increasing the Number of Joints in 2D Pose Estimation and Recovering Occluded or Missing Joints

Separation of Aleatoric and Epistemic Uncertainty in Deterministic Deep Neural Networks

Denis Huseljic, Bernhard Sick, Marek Herde, Daniel Kottke

Auto-TLDR; AE-DNN: Modeling Uncertainty in Deep Neural Networks

Semantics-Guided Representation Learning with Applications to Visual Synthesis

Jia-Wei Yan, Ci-Siang Lin, Fu-En Yang, Yu-Jhe Li, Yu-Chiang Frank Wang

Auto-TLDR; Learning Interpretable and Interpolatable Latent Representations for Visual Synthesis

Variational Inference with Latent Space Quantization for Adversarial Resilience

Vinay Kyatham, Deepak Mishra, Prathosh A.P.

Auto-TLDR; A Generalized Defense Mechanism for Adversarial Attacks on Data Manifolds

Adversarial Knowledge Distillation for a Compact Generator

Hideki Tsunashima, Shigeo Morishima, Junji Yamato, Qiu Chen, Hirokatsu Kataoka

Auto-TLDR; Adversarial Knowledge Distillation for Generative Adversarial Nets

Auto Encoding Explanatory Examples with Stochastic Paths

Cesar Ali Ojeda Marin, Ramses J. Sanchez, Kostadin Cvejoski, Bogdan Georgiev

Auto-TLDR; Semantic Stochastic Path: Explaining a Classifier's Decision Making Process using latent codes

Variational Capsule Encoder

Harish Raviprakash, Syed Anwar, Ulas Bagci

Auto-TLDR; Bayesian Capsule Networks for Representation Learning in latent space

Dual-MTGAN: Stochastic and Deterministic Motion Transfer for Image-To-Video Synthesis

Fu-En Yang, Jing-Cheng Chang, Yuan-Hao Lee, Yu-Chiang Frank Wang

Auto-TLDR; Dual Motion Transfer GAN for Convolutional Neural Networks

Image Representation Learning by Transformation Regression

Xifeng Guo, Jiyuan Liu, Sihang Zhou, En Zhu, Shihao Dong

Auto-TLDR; Self-supervised Image Representation Learning using Continuous Parameter Prediction

Generative Deep-Neural-Network Mixture Modeling with Semi-Supervised MinMax+EM Learning

Auto-TLDR; Semi-supervised Deep Neural Networks for Generative Mixture Modeling and Clustering

Generating Private Data Surrogates for Vision Related Tasks

Ryan Webster, Julien Rabin, Loic Simon, Frederic Jurie

Auto-TLDR; Generative Adversarial Networks for Membership Inference Attacks

Mask-Based Style-Controlled Image Synthesis Using a Mask Style Encoder

Jaehyeong Cho, Wataru Shimoda, Keiji Yanai

Auto-TLDR; Style-controlled Image Synthesis from Semantic Segmentation masks using GANs

The Role of Cycle Consistency for Generating Better Human Action Videos from a Single Frame

Auto-TLDR; Generating Videos with Human Action Semantics using Cycle Constraints

Disentangle, Assemble, and Synthesize: Unsupervised Learning to Disentangle Appearance and Location

Hiroaki Aizawa, Hirokatsu Kataoka, Yutaka Satoh, Kunihito Kato

Auto-TLDR; Generative Adversarial Networks with Structural Constraint for controllability of latent space

Explorable Tone Mapping Operators

Su Chien-Chuan, Yu-Lun Liu, Hung Jin Lin, Ren Wang, Chia-Ping Chen, Yu-Lin Chang, Soo-Chang Pei

Auto-TLDR; Learning-based multimodal tone-mapping from HDR images

Interpolation in Auto Encoders with Bridge Processes

Carl Ringqvist, Henrik Hult, Judith Butepage, Hedvig Kjellstrom

Auto-TLDR; Stochastic interpolations from auto encoders trained on flattened sequences

Attributes Aware Face Generation with Generative Adversarial Networks

Zheng Yuan, Jie Zhang, Shiguang Shan, Xilin Chen

Auto-TLDR; AFGAN: A Generative Adversarial Network for Attributes Aware Face Image Generation

Learning Interpretable Representation for 3D Point Clouds

Feng-Guang Su, Ci-Siang Lin, Yu-Chiang Frank Wang

Auto-TLDR; Disentangling Body-type and Pose Information from 3D Point Clouds Using Adversarial Learning

Coherence and Identity Learning for Arbitrary-Length Face Video Generation

Shuquan Ye, Chu Han, Jiaying Lin, Guoqiang Han, Shengfeng He

Auto-TLDR; Face Video Synthesis Using Identity-Aware GAN and Face Coherence Network

Hierarchical Mixtures of Generators for Adversarial Learning

Alper Ahmetoğlu, Ethem Alpaydin

Auto-TLDR; Hierarchical Mixture of Generative Adversarial Networks

A Quantitative Evaluation Framework of Video De-Identification Methods

Sathya Bursic, Alessandro D'Amelio, Marco Granato, Giuliano Grossi, Raffaella Lanzarotti

Auto-TLDR; Face de-identification using photo-reality and facial expressions

Discriminative Multi-Level Reconstruction under Compact Latent Space for One-Class Novelty Detection

Jaewoo Park, Yoon Gyo Jung, Andrew Teoh

Auto-TLDR; Discriminative Compact AE for One-Class novelty detection and Adversarial Example Detection

Improved anomaly detection by training an autoencoder with skip connections on images corrupted with Stain-shaped noise

Anne-Sophie Collin, Christophe De Vleeschouwer

Auto-TLDR; Autoencoder with Skip Connections for Anomaly Detection

Continuous Learning of Face Attribute Synthesis

Ning Xin, Shaohui Xu, Fangzhe Nan, Xiaoli Dong, Weijun Li, Yuanzhou Yao

Auto-TLDR; Continuous Learning for Face Attribute Synthesis

Epitomic Variational Graph Autoencoder

Rayyan Ahmad Khan, Muhammad Umer Anwaar, Martin Kleinsteuber

Auto-TLDR; EVGAE: A Generative Variational Autoencoder for Graph Data

UCCTGAN: Unsupervised Clothing Color Transformation Generative Adversarial Network

Shuming Sun, Xiaoqiang Li, Jide Li

Auto-TLDR; An Unsupervised Clothing Color Transformation Generative Adversarial Network
