Image Representation Learning by Transformation Regression

Xifeng Guo, Jiyuan Liu, Sihang Zhou, En Zhu, Shihao Dong

Auto-TLDR; Self-supervised Image Representation Learning using Continuous Parameter Prediction

Similar papers

Multi-Modal Deep Clustering: Unsupervised Partitioning of Images

Auto-TLDR; Multi-Modal Deep Clustering for Unlabeled Images

Progressive Cluster Purification for Unsupervised Feature Learning

Yifei Zhang, Chang Liu, Yu Zhou, Wei Wang, Weiping Wang, Qixiang Ye

Auto-TLDR; Progressive Cluster Purification for Unsupervised Feature Learning

Variational Deep Embedding Clustering by Augmented Mutual Information Maximization

Qiang Ji, Yanfeng Sun, Yongli Hu, Baocai Yin

Auto-TLDR; Clustering by Augmented Mutual Information maximization for Deep Embedding

Generative Latent Implicit Conditional Optimization When Learning from Small Sample

Auto-TLDR; GLICO: Generative Latent Implicit Conditional Optimization for Small Sample Learning

Variational Capsule Encoder

Harish Raviprakash, Syed Anwar, Ulas Bagci

Auto-TLDR; Bayesian Capsule Networks for Representation Learning in latent space

Contextual Classification Using Self-Supervised Auxiliary Models for Deep Neural Networks

Sebastian Palacio, Philipp Engler, Jörn Hees, Andreas Dengel

Auto-TLDR; Self-Supervised Autogenous Learning for Deep Neural Networks

A Joint Representation Learning and Feature Modeling Approach for One-Class Recognition

Pramuditha Perera, Vishal Patel

Auto-TLDR; Combining Generative Features and One-Class Classification for Effective One-class Recognition

Semantics-Guided Representation Learning with Applications to Visual Synthesis

Jia-Wei Yan, Ci-Siang Lin, Fu-En Yang, Yu-Jhe Li, Yu-Chiang Frank Wang

Auto-TLDR; Learning Interpretable and Interpolatable Latent Representations for Visual Synthesis

Self-Supervised Domain Adaptation with Consistency Training

Liang Xiao, Jiaolong Xu, Dawei Zhao, Zhiyu Wang, Li Wang, Yiming Nie, Bin Dai

Auto-TLDR; Unsupervised Domain Adaptation for Image Classification

Semi-Supervised Class Incremental Learning

Alexis Lechat, Stéphane Herbin, Frederic Jurie

Auto-TLDR; Incremental Class Learning with Non-Annotated Batches

The Color Out of Space: Learning Self-Supervised Representations for Earth Observation Imagery

Stefano Vincenzi, Angelo Porrello, Pietro Buzzega, Marco Cipriano, Pietro Fronte, Roberto Cuccu, Carla Ippoliti, Annamaria Conte, Simone Calderara

Auto-TLDR; Satellite Image Representation Learning for Remote Sensing

IDA-GAN: A Novel Imbalanced Data Augmentation GAN

Auto-TLDR; IDA-GAN: Generative Adversarial Networks for Imbalanced Data Augmentation

Norm Loss: An Efficient yet Effective Regularization Method for Deep Neural Networks

Theodoros Georgiou, Sebastian Schmitt, Thomas Baeck, Wei Chen, Michael Lew

Auto-TLDR; Weight Soft-Regularization with Oblique Manifold for Convolutional Neural Network Training

A Self-Supervised GAN for Unsupervised Few-Shot Object Recognition

Auto-TLDR; Self-supervised Few-Shot Object Recognition with a Triplet GAN

Pretraining Image Encoders without Reconstruction Via Feature Prediction Loss

Gustav Grund Pihlgren, Fredrik Sandin, Marcus Liwicki

Auto-TLDR; Feature Prediction Loss for Autoencoder-based Pretraining of Image Encoders

Feature-Aware Unsupervised Learning with Joint Variational Attention and Automatic Clustering

Wang Ru, Lin Li, Peipei Wang, Liu Peiyu

Auto-TLDR; Deep Variational Attention Encoder-Decoder for Clustering

Local Clustering with Mean Teacher for Semi-Supervised Learning

Zexi Chen, Benjamin Dutton, Bharathkumar Ramachandra, Tianfu Wu, Ranga Raju Vatsavai

Auto-TLDR; Local Clustering for Semi-supervised Learning

A Close Look at Deep Learning with Small Data

Auto-TLDR; Low-Complex Neural Networks for Small Data Conditions

Quaternion Capsule Networks

Barış Özcan, Furkan Kınlı, Mustafa Furkan Kirac

Auto-TLDR; Quaternion Capsule Networks for Object Recognition

Learning from Web Data: Improving Crowd Counting Via Semi-Supervised Learning

Auto-TLDR; Semi-supervised Crowd Counting Baseline for Deep Neural Networks

Learning to Rank for Active Learning: A Listwise Approach

Minghan Li, Xialei Liu, Joost Van De Weijer, Bogdan Raducanu

Auto-TLDR; Learning Loss for Active Learning

MaxDropout: Deep Neural Network Regularization Based on Maximum Output Values

Claudio Filipi Gonçalves Santos, Danilo Colombo, Mateus Roder, Joao Paulo Papa

Auto-TLDR; MaxDropout: A Regularizer for Deep Neural Networks

AVAE: Adversarial Variational Auto Encoder

Antoine Plumerault, Hervé Le Borgne, Celine Hudelot

Auto-TLDR; Combining VAE and GAN for Realistic Image Generation

WeightAlign: Normalizing Activations by Weight Alignment

Xiangwei Shi, Yunqiang Li, Xin Liu, Jan Van Gemert

Auto-TLDR; WeightAlign: Normalization of Activations without Sample Statistics

Ancient Document Layout Analysis: Autoencoders Meet Sparse Coding

Homa Davoudi, Marco Fiorucci, Arianna Traviglia

Auto-TLDR; Unsupervised Representation Learning for Document Layout Analysis

Combining GANs and AutoEncoders for Efficient Anomaly Detection

Fabio Carrara, Giuseppe Amato, Luca Brombin, Fabrizio Falchi, Claudio Gennaro

Auto-TLDR; CBIGAN: Anomaly Detection in Images with Consistency Constrained BiGAN

Joint Supervised and Self-Supervised Learning for 3D Real World Challenges

Antonio Alliegro, Davide Boscaini, Tatiana Tommasi

Auto-TLDR; Self-supervision for 3D Shape Classification and Segmentation in Point Clouds

Enlarging Discriminative Power by Adding an Extra Class in Unsupervised Domain Adaptation

Hai Tran, Sumyeong Ahn, Taeyoung Lee, Yung Yi

Auto-TLDR; Unsupervised Domain Adaptation using Artificial Classes

Towards Robust Learning with Different Label Noise Distributions

Diego Ortego, Eric Arazo, Paul Albert, Noel E O'Connor, Kevin Mcguinness

Auto-TLDR; Distribution Robust Pseudo-Labeling with Semi-supervised Learning

Local Facial Attribute Transfer through Inpainting

Ricard Durall, Franz-Josef Pfreundt, Janis Keuper

Auto-TLDR; Attribute Transfer Inpainting Generative Adversarial Network

Phase Retrieval Using Conditional Generative Adversarial Networks

Tobias Uelwer, Alexander Oberstraß, Stefan Harmeling

Auto-TLDR; Conditional Generative Adversarial Networks for Phase Retrieval

Self-Supervised Learning for Astronomical Image Classification

Ana Martinazzo, Mateus Espadoto, Nina S. T. Hirata

Auto-TLDR; Unlabeled Astronomical Images for Deep Neural Network Pre-training

Learning Interpretable Representation for 3D Point Clouds

Feng-Guang Su, Ci-Siang Lin, Yu-Chiang Frank Wang

Auto-TLDR; Disentangling Body-type and Pose Information from 3D Point Clouds Using Adversarial Learning

Shape Consistent 2D Keypoint Estimation under Domain Shift

Levi Vasconcelos, Massimiliano Mancini, Davide Boscaini, Barbara Caputo, Elisa Ricci

Auto-TLDR; Deep Adaptation for Keypoint Prediction under Domain Shift

Feature-Dependent Cross-Connections in Multi-Path Neural Networks

Dumindu Tissera, Kasun Vithanage, Rukshan Wijesinghe, Kumara Kahatapitiya, Subha Fernando, Ranga Rodrigo

Auto-TLDR; Multi-path Networks for Adaptive Feature Extraction

Pose-Robust Face Recognition by Deep Meta Capsule Network-Based Equivariant Embedding

Fangyu Wu, Jeremy Simon Smith, Wenjin Lu, Bailing Zhang

Auto-TLDR; Deep Meta Capsule Network-based Equivariant Embedding Model for Pose-Robust Face Recognition

Complementing Representation Deficiency in Few-Shot Image Classification: A Meta-Learning Approach

Xian Zhong, Cheng Gu, Wenxin Huang, Lin Li, Shuqin Chen, Chia-Wen Lin

Auto-TLDR; Meta-learning with Complementary Representations Network for Few-Shot Learning

Adaptive Image Compression Using GAN Based Semantic-Perceptual Residual Compensation

Ruojing Wang, Zitang Sun, Sei-Ichiro Kamata, Weili Chen

Auto-TLDR; Adaptive Image Compression using GAN based Semantic-Perceptual Residual Compensation

Graph-Based Interpolation of Feature Vectors for Accurate Few-Shot Classification

Yuqing Hu, Vincent Gripon, Stéphane Pateux

Auto-TLDR; Transductive Learning for Few-Shot Classification using Graph Neural Networks

Rethinking Deep Active Learning: Using Unlabeled Data at Model Training

Oriane Siméoni, Mateusz Budnik, Yannis Avrithis, Guillaume Gravier

Auto-TLDR; Unlabeled Data for Active Learning

Neuron-Based Network Pruning Based on Majority Voting

Ali Alqahtani, Xianghua Xie, Ehab Essa, Mark W. Jones

Auto-TLDR; Large-Scale Neural Network Pruning using Majority Voting

Understanding When Spatial Transformer Networks Do Not Support Invariance, and What to Do about It

Lukas Finnveden, Ylva Jansson, Tony Lindeberg

Auto-TLDR; Spatial Transformer Networks are unable to support invariance when transforming CNN feature maps

Beyond Cross-Entropy: Learning Highly Separable Feature Distributions for Robust and Accurate Classification

Arslan Ali, Andrea Migliorati, Tiziano Bianchi, Enrico Magli

Auto-TLDR; Gaussian class-conditional simplex loss for adversarial robust multiclass classifiers

Augmented Bi-Path Network for Few-Shot Learning

Baoming Yan, Chen Zhou, Bo Zhao, Kan Guo, Yang Jiang, Xiaobo Li, Zhang Ming, Yizhou Wang

Auto-TLDR; Augmented Bi-path Network for Few-shot Learning

Revisiting ImprovedGAN with Metric Learning for Semi-Supervised Learning

Jaewoo Park, Yoon Gyo Jung, Andrew Teoh

Auto-TLDR; Improving ImprovedGAN with Metric Learning for Semi-supervised Learning

Abstract Slides Poster Similar

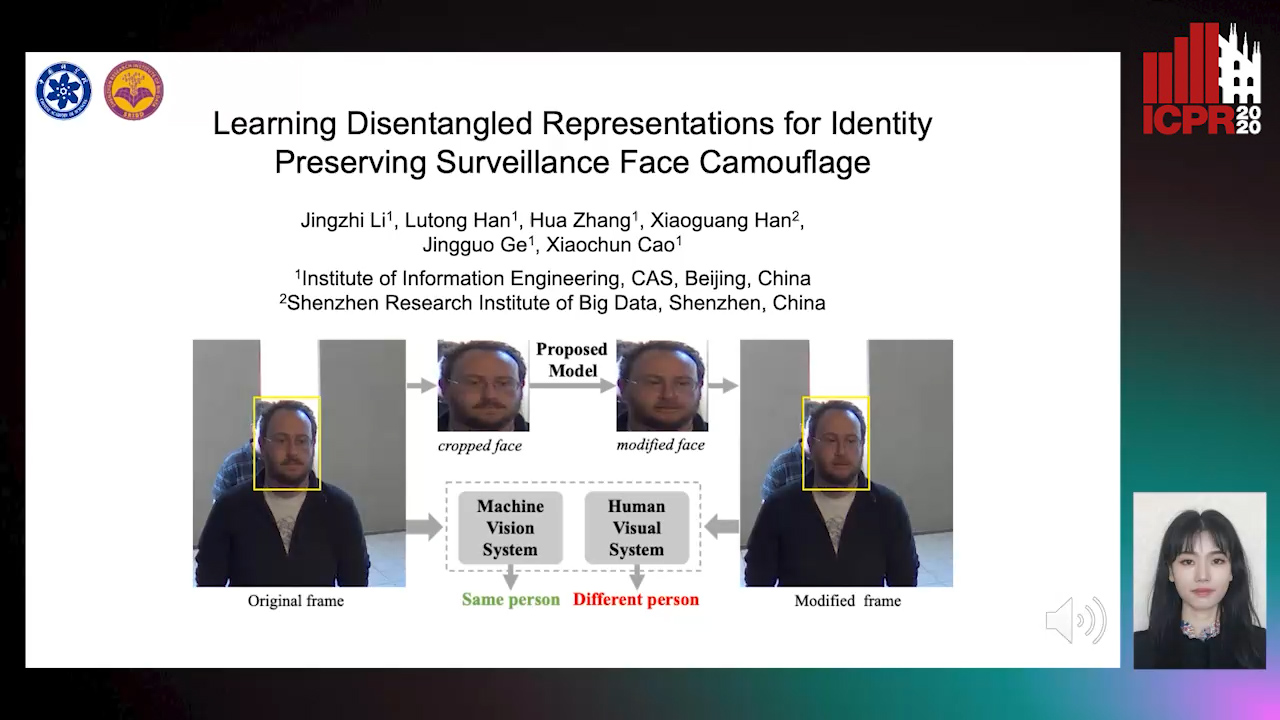

Learning Disentangled Representations for Identity Preserving Surveillance Face Camouflage

Jingzhi Li, Lutong Han, Hua Zhang, Xiaoguang Han, Jingguo Ge, Xiaochu Cao

Auto-TLDR; Individual Face Privacy under Surveillance Scenario with Multi-task Loss Function

Improving Batch Normalization with Skewness Reduction for Deep Neural Networks

Pak Lun Kevin Ding, Martin Sarah, Baoxin Li

Auto-TLDR; Batch Normalization with Skewness Reduction

Augmentation of Small Training Data Using GANs for Enhancing the Performance of Image Classification

Auto-TLDR; Generative Adversarial Network for Image Training Data Augmentation
