Local-Global Interactive Network for Face Age Transformation

Jie Song, Ping Wei, Huan Li, Yongchi Zhang, Nanning Zheng

Auto-TLDR; A Novel Local-Global Interaction Framework for Long-span Face Age Transformation

Similar papers

Controllable Face Aging

Auto-TLDR; A controllable face aging method via attribute disentanglement generative adversarial network

High Resolution Face Age Editing

Xu Yao, Gilles Puy, Alasdair Newson, Yann Gousseau, Pierre Hellier

Auto-TLDR; An Encoder-Decoder Architecture for Face Age editing on High Resolution Images

Age Gap Reducer-GAN for Recognizing Age-Separated Faces

Daksha Yadav, Naman Kohli, Mayank Vatsa, Richa Singh, Afzel Noore

Auto-TLDR; Generative Adversarial Network for Age-separated Face Recognition

Identity-Preserved Face Beauty Transformation with Conditional Generative Adversarial Networks

Auto-TLDR; Identity-preserved face beauty transformation using conditional GANs

SATGAN: Augmenting Age Biased Dataset for Cross-Age Face Recognition

Wenshuang Liu, Wenting Chen, Yuanlue Zhu, Linlin Shen

Auto-TLDR; SATGAN: Stable Age Translation GAN for Cross-Age Face Recognition

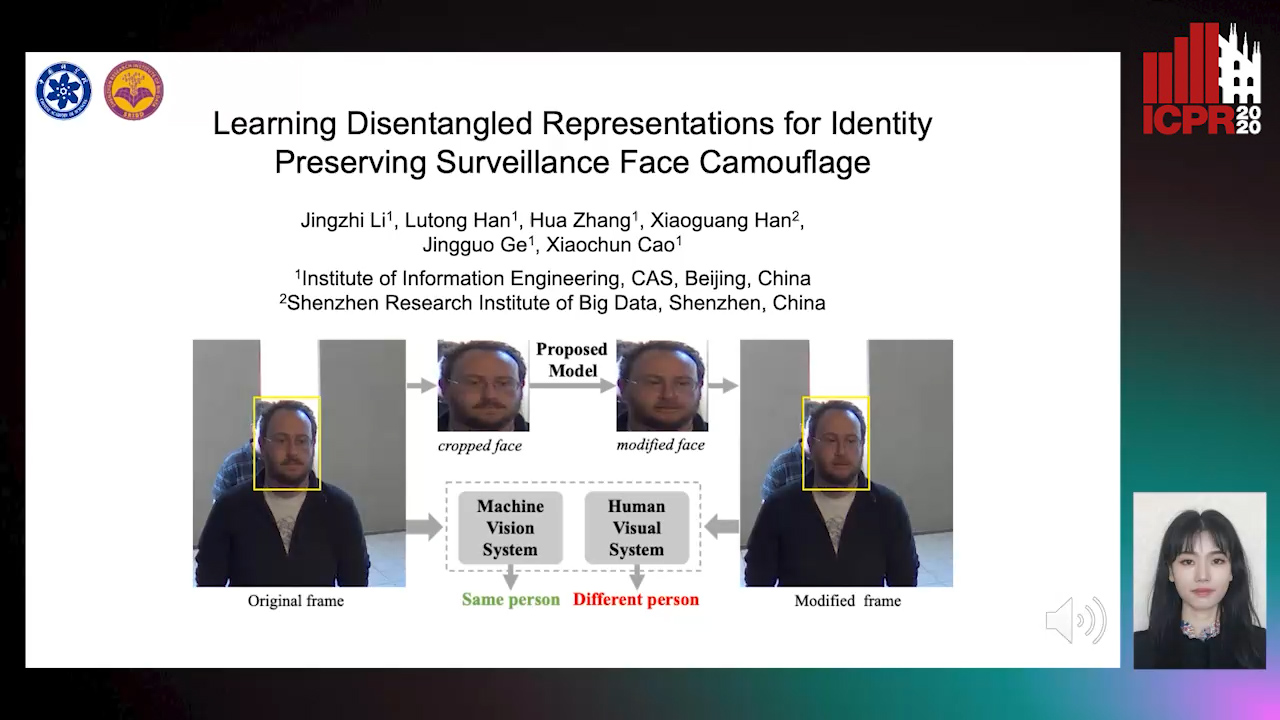

Learning Disentangled Representations for Identity Preserving Surveillance Face Camouflage

Jingzhi Li, Lutong Han, Hua Zhang, Xiaoguang Han, Jingguo Ge, Xiaochu Cao

Auto-TLDR; Individual Face Privacy under Surveillance Scenario with Multi-task Loss Function

Attributes Aware Face Generation with Generative Adversarial Networks

Zheng Yuan, Jie Zhang, Shiguang Shan, Xilin Chen

Auto-TLDR; AFGAN: A Generative Adversarial Network for Attributes Aware Face Image Generation

Local Facial Attribute Transfer through Inpainting

Ricard Durall, Franz-Josef Pfreundt, Janis Keuper

Auto-TLDR; Attribute Transfer Inpainting Generative Adversarial Network

Multi-Laplacian GAN with Edge Enhancement for Face Super Resolution

Auto-TLDR; Face Image Super-Resolution with Enhanced Edge Information

Pixel-based Facial Expression Synthesis

Auto-TLDR; pixel-based facial expression synthesis using GANs

Attention2AngioGAN: Synthesizing Fluorescein Angiography from Retinal Fundus Images Using Generative Adversarial Networks

Sharif Amit Kamran, Khondker Fariha Hossain, Alireza Tavakkoli, Stewart Lee Zuckerbrod

Auto-TLDR; Fluorescein Angiography from Fundus Images using Attention-based Generative Networks

Identifying Missing Children: Face Age-Progression Via Deep Feature Aging

Debayan Deb, Divyansh Aggarwal, Anil Jain

Auto-TLDR; Aging Face Features for Missing Children Identification

Contrastive Data Learning for Facial Pose and Illumination Normalization

Auto-TLDR; Pose and Illumination Normalization with Contrast Data Learning for Face Recognition

Unsupervised Face Manipulation Via Hallucination

Keerthy Kusumam, Enrique Sanchez, Georgios Tzimiropoulos

Auto-TLDR; Unpaired Face Image Manipulation using Autoencoders

Continuous Learning of Face Attribute Synthesis

Ning Xin, Shaohui Xu, Fangzhe Nan, Xiaoli Dong, Weijun Li, Yuanzhou Yao

Auto-TLDR; Continuous Learning for Face Attribute Synthesis

Coherence and Identity Learning for Arbitrary-Length Face Video Generation

Shuquan Ye, Chu Han, Jiaying Lin, Guoqiang Han, Shengfeng He

Auto-TLDR; Face Video Synthesis Using Identity-Aware GAN and Face Coherence Network

Exemplar Guided Cross-Spectral Face Hallucination Via Mutual Information Disentanglement

Haoxue Wu, Huaibo Huang, Aijing Yu, Jie Cao, Zhen Lei, Ran He

Auto-TLDR; Exemplar Guided Cross-Spectral Face Hallucination with Structural Representation Learning

Free-Form Image Inpainting Via Contrastive Attention Network

Xin Ma, Xiaoqiang Zhou, Huaibo Huang, Zhenhua Chai, Xiaolin Wei, Ran He

Auto-TLDR; Self-supervised Siamese inference for image inpainting

Face Super-Resolution Network with Incremental Enhancement of Facial Parsing Information

Shuang Liu, Chengyi Xiong, Zhirong Gao

Auto-TLDR; Learning-based Face Super-Resolution with Incremental Boosting Facial Parsing Information

Mask-Based Style-Controlled Image Synthesis Using a Mask Style Encoder

Jaehyeong Cho, Wataru Shimoda, Keiji Yanai

Auto-TLDR; Style-controlled Image Synthesis from Semantic Segmentation masks using GANs

Cycle-Consistent Adversarial Networks and Fast Adaptive Bi-Dimensional Empirical Mode Decomposition for Style Transfer

Elissavet Batziou, Petros Alvanitopoulos, Konstantinos Ioannidis, Ioannis Patras, Stefanos Vrochidis, Ioannis Kompatsiaris

Auto-TLDR; FABEMD: Fast and Adaptive Bidimensional Empirical Mode Decomposition for Style Transfer on Images

UCCTGAN: Unsupervised Clothing Color Transformation Generative Adversarial Network

Shuming Sun, Xiaoqiang Li, Jide Li

Auto-TLDR; An Unsupervised Clothing Color Transformation Generative Adversarial Network

Talking Face Generation Via Learning Semantic and Temporal Synchronous Landmarks

Aihua Zheng, Feixia Zhu, Hao Zhu, Mandi Luo, Ran He

Auto-TLDR; A semantic and temporal synchronous landmark learning method for talking face generation

Learning Semantic Representations Via Joint 3D Face Reconstruction and Facial Attribute Estimation

Zichun Weng, Youjun Xiang, Xianfeng Li, Juntao Liang, Wanliang Huo, Yuli Fu

Auto-TLDR; Joint Framework for 3D Face Reconstruction with Facial Attribute Estimation

An Unsupervised Approach towards Varying Human Skin Tone Using Generative Adversarial Networks

Debapriya Roy, Diganta Mukherjee, Bhabatosh Chanda

Auto-TLDR; Unsupervised Skin Tone Change Using Augmented Reality Based Models

Super-Resolution Guided Pore Detection for Fingerprint Recognition

Syeda Nyma Ferdous, Ali Dabouei, Jeremy Dawson, Nasser M. Nasrabadi

Auto-TLDR; Super-Resolution Generative Adversarial Network for Fingerprint Recognition Using Pore Features

Generating Private Data Surrogates for Vision Related Tasks

Ryan Webster, Julien Rabin, Loic Simon, Frederic Jurie

Auto-TLDR; Generative Adversarial Networks for Membership Inference Attacks

Cascade Attention Guided Residue Learning GAN for Cross-Modal Translation

Bin Duan, Wei Wang, Hao Tang, Hugo Latapie, Yan Yan

Auto-TLDR; Cascade Attention-Guided Residue GAN for Cross-modal Audio-Visual Learning

Pose Variation Adaptation for Person Re-Identification

Lei Zhang, Na Jiang, Qishuai Diao, Yue Xu, Zhong Zhou, Wei Wu

Auto-TLDR; Pose Transfer Generative Adversarial Network for Person Re-identification

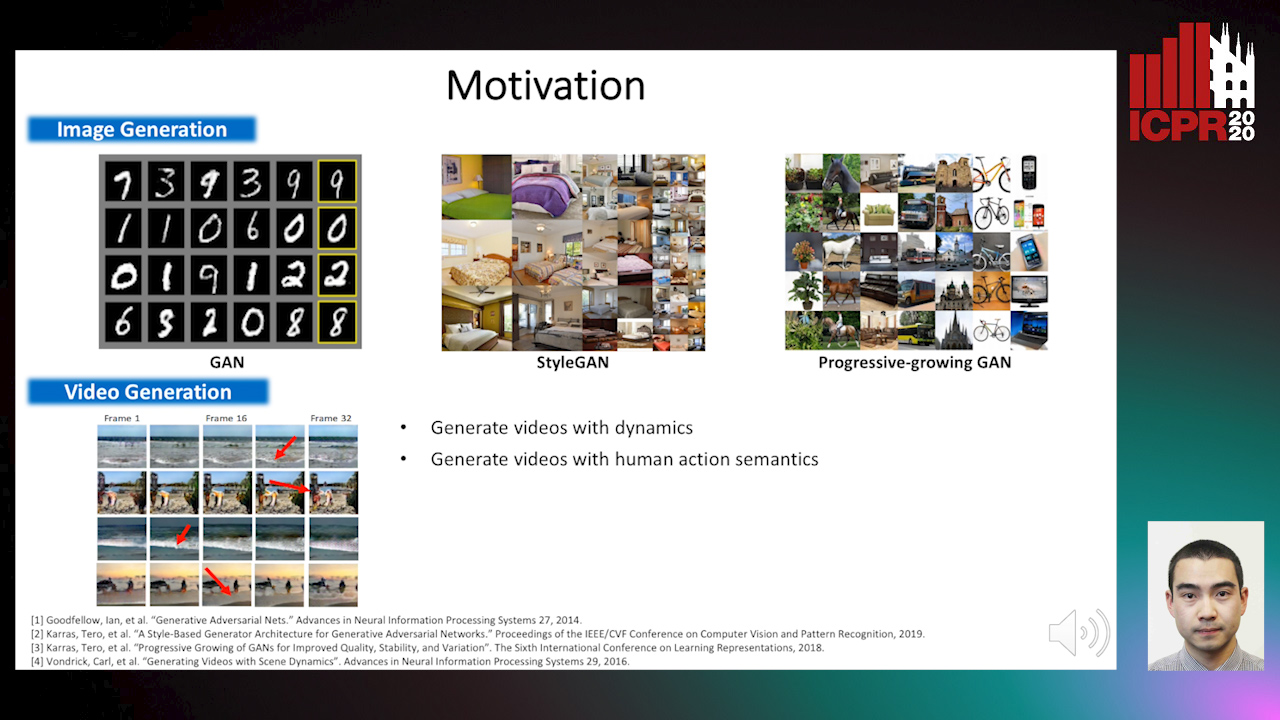

The Role of Cycle Consistency for Generating Better Human Action Videos from a Single Frame

Auto-TLDR; Generating Videos with Human Action Semantics using Cycle Constraints

Detail Fusion GAN: High-Quality Translation for Unpaired Images with GAN-Based Data Augmentation

Ling Li, Yaochen Li, Chuan Wu, Hang Dong, Peilin Jiang, Fei Wang

Auto-TLDR; Data Augmentation with GAN-based Generative Adversarial Network

Makeup Style Transfer on Low-Quality Images with Weighted Multi-Scale Attention

Daniel Organisciak, Edmond S. L. Ho, Hubert P. H. Shum

Auto-TLDR; Facial Makeup Style Transfer for Low-Resolution Images Using Multi-Scale Spatial Attention

Semi-Supervised Generative Adversarial Networks with a Pair of Complementary Generators for Retinopathy Screening

Yingpeng Xie, Qiwei Wan, Hai Xie, En-Leng Tan, Yanwu Xu, Baiying Lei

Auto-TLDR; Generative Adversarial Networks for Retinopathy Diagnosis via Fundus Images

DFH-GAN: A Deep Face Hashing with Generative Adversarial Network

Bo Xiao, Lanxiang Zhou, Yifei Wang, Qiangfang Xu

Auto-TLDR; Deep Face Hashing with GAN for Face Image Retrieval

Image Inpainting with Contrastive Relation Network

Xiaoqiang Zhou, Junjie Li, Zilei Wang, Ran He, Tieniu Tan

Auto-TLDR; Two-Stage Inpainting with Graph-based Relation Network

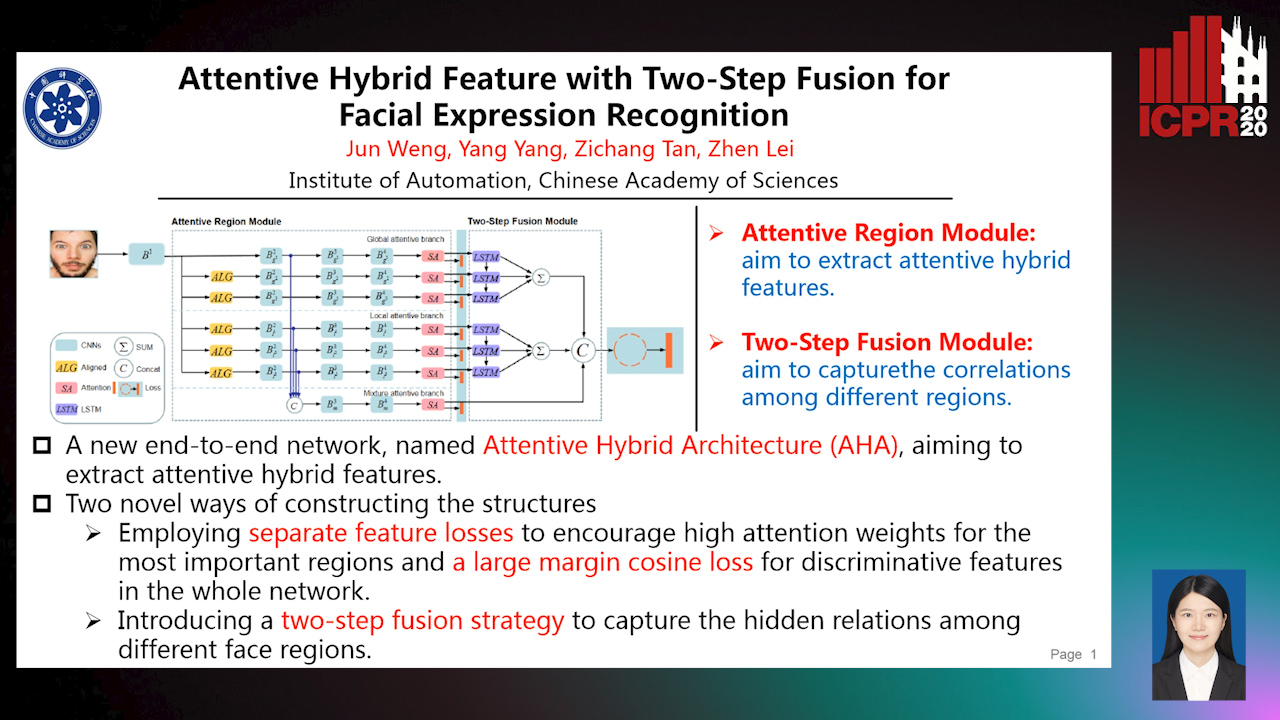

Attentive Hybrid Feature Based a Two-Step Fusion for Facial Expression Recognition

Jun Weng, Yang Yang, Zichang Tan, Zhen Lei

Auto-TLDR; Attentive Hybrid Architecture for Facial Expression Recognition

Small Object Detection by Generative and Discriminative Learning

Yi Gu, Jie Li, Chentao Wu, Weijia Jia, Jianping Chen

Auto-TLDR; Generative and Discriminative Learning for Small Object Detection

Thermal Image Enhancement Using Generative Adversarial Network for Pedestrian Detection

Mohamed Amine Marnissi, Hajer Fradi, Anis Sahbani, Najoua Essoukri Ben Amara

Auto-TLDR; Improving Visual Quality of Infrared Images for Pedestrian Detection Using Generative Adversarial Network

Deep Multi-Task Learning for Facial Expression Recognition and Synthesis Based on Selective Feature Sharing

Rui Zhao, Tianshan Liu, Jun Xiao, P. K. Daniel Lun, Kin-Man Lam

Auto-TLDR; Multi-task Learning for Facial Expression Recognition and Synthesis

Face Anti-Spoofing Using Spatial Pyramid Pooling

Lei Shi, Zhuo Zhou, Zhenhua Guo

Auto-TLDR; Spatial Pyramid Pooling for Face Anti-Spoofing

Explorable Tone Mapping Operators

Chien-Chuan Su, Yu-Lun Liu, Hung Jin Lin, Ren Wang, Chia-Ping Chen, Yu-Lin Chang, Soo-Chang Pei

Auto-TLDR; Learning-based multimodal tone-mapping from HDR images

Position-Aware and Symmetry Enhanced GAN for Radial Distortion Correction

Yongjie Shi, Xin Tong, Jingsi Wen, He Zhao, Xianghua Ying, Jinshi Hongbin Zha

Auto-TLDR; Generative Adversarial Network for Radial Distorted Image Correction

GarmentGAN: Photo-Realistic Adversarial Fashion Transfer

Amir Hossein Raffiee, Michael Sollami

Auto-TLDR; GarmentGAN: A Generative Adversarial Network for Image-Based Garment Transfer

MBD-GAN: Model-Based Image Deblurring with a Generative Adversarial Network

Auto-TLDR; Model-Based Deblurring GAN for Inverse Imaging

GAP: Quantifying the Generative Adversarial Set and Class Feature Applicability of Deep Neural Networks

Edward Collier, Supratik Mukhopadhyay

Auto-TLDR; Approximating Adversarial Learning in Deep Neural Networks Using Set and Class Adversaries

Adaptive Image Compression Using GAN Based Semantic-Perceptual Residual Compensation

Ruojing Wang, Zitang Sun, Sei-Ichiro Kamata, Weili Chen

Auto-TLDR; Adaptive Image Compression using GAN based Semantic-Perceptual Residual Compensation

Galaxy Image Translation with Semi-Supervised Noise-Reconstructed Generative Adversarial Networks

Qiufan Lin, Dominique Fouchez, Jérôme Pasquet

Auto-TLDR; Semi-supervised Image Translation with Generative Adversarial Networks Using Paired and Unpaired Images

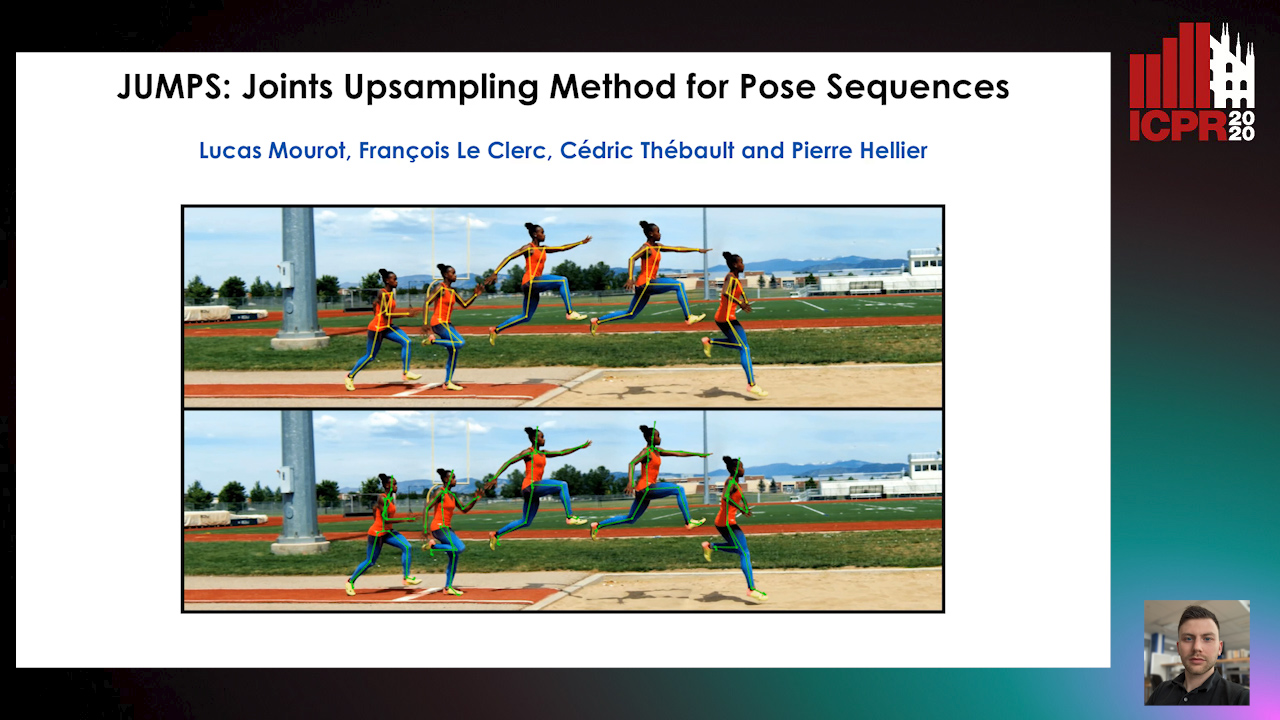

JUMPS: Joints Upsampling Method for Pose Sequences

Lucas Mourot, Francois Le Clerc, Cédric Thébault, Pierre Hellier

Auto-TLDR; JUMPS: Increasing the Number of Joints in 2D Pose Estimation and Recovering Occluded or Missing Joints
