GAP: Quantifying the Generative Adversarial Set and Class Feature Applicability of Deep Neural Networks

Edward Collier, Supratik Mukhopadhyay

Auto-TLDR; Approximating Adversarial Learning in Deep Neural Networks Using Set and Class Adversaries

Similar papers

Hierarchical Mixtures of Generators for Adversarial Learning

Alper Ahmetoğlu, Ethem Alpaydin

Auto-TLDR; Hierarchical Mixture of Generative Adversarial Networks

Enlarging Discriminative Power by Adding an Extra Class in Unsupervised Domain Adaptation

Hai Tran, Sumyeong Ahn, Taeyoung Lee, Yung Yi

Auto-TLDR; Unsupervised Domain Adaptation using Artificial Classes

Local Facial Attribute Transfer through Inpainting

Ricard Durall, Franz-Josef Pfreundt, Janis Keuper

Auto-TLDR; Attribute Transfer Inpainting Generative Adversarial Network

Data Augmentation Via Mixed Class Interpolation Using Cycle-Consistent Generative Adversarial Networks Applied to Cross-Domain Imagery

Hiroshi Sasaki, Chris G. Willcocks, Toby Breckon

Auto-TLDR; C2GMA: A Generative Domain Transfer Model for Non-visible Domain Classification

On the Evaluation of Generative Adversarial Networks by Discriminative Models

Amirsina Torfi, Mohammadreza Beyki, Edward Alan Fox

Auto-TLDR; Domain-agnostic GAN Evaluation with Siamese Neural Networks

A Simple Domain Shifting Network for Generating Low Quality Images

Guruprasad Hegde, Avinash Nittur Ramesh, Kanchana Vaishnavi Gandikota, Michael Möller, Roman Obermaisser

Auto-TLDR; Robotic Image Classification Using Quality degrading networks

Learning Low-Shot Generative Networks for Cross-Domain Data

Hsuan-Kai Kao, Cheng-Che Lee, Wei-Chen Chiu

Auto-TLDR; Learning Generators for Cross-Domain Data under Low-Shot Learning

Unsupervised Multi-Task Domain Adaptation

Auto-TLDR; Unsupervised Domain Adaptation with Multi-task Learning for Image Recognition

Unsupervised Domain Adaptation with Multiple Domain Discriminators and Adaptive Self-Training

Teo Spadotto, Marco Toldo, Umberto Michieli, Pietro Zanuttigh

Auto-TLDR; Unsupervised Domain Adaptation for Semantic Segmentation of Urban Scenes

Semi-Supervised Generative Adversarial Networks with a Pair of Complementary Generators for Retinopathy Screening

Yingpeng Xie, Qiwei Wan, Hai Xie, En-Leng Tan, Yanwu Xu, Baiying Lei

Auto-TLDR; Generative Adversarial Networks for Retinopathy Diagnosis via Fundus Images

Mask-Based Style-Controlled Image Synthesis Using a Mask Style Encoder

Jaehyeong Cho, Wataru Shimoda, Keiji Yanai

Auto-TLDR; Style-controlled Image Synthesis from Semantic Segmentation masks using GANs

Shape Consistent 2D Keypoint Estimation under Domain Shift

Levi Vasconcelos, Massimiliano Mancini, Davide Boscaini, Barbara Caputo, Elisa Ricci

Auto-TLDR; Deep Adaptation for Keypoint Prediction under Domain Shift

Class Conditional Alignment for Partial Domain Adaptation

Mohsen Kheirandishfard, Fariba Zohrizadeh, Farhad Kamangar

Auto-TLDR; Multi-class Adversarial Adaptation for Partial Domain Adaptation

Efficient Shadow Detection and Removal Using Synthetic Data with Domain Adaptation

Rui Guo, Babajide Ayinde, Hao Sun

Auto-TLDR; Shadow Detection and Removal with Domain Adaptation and Synthetic Image Database

Signal Generation Using 1d Deep Convolutional Generative Adversarial Networks for Fault Diagnosis of Electrical Machines

Russell Sabir, Daniele Rosato, Sven Hartmann, Clemens Gühmann

Auto-TLDR; Large Dataset Generation from Faulty AC Machines using Deep Convolutional GAN

Galaxy Image Translation with Semi-Supervised Noise-Reconstructed Generative Adversarial Networks

Qiufan Lin, Dominique Fouchez, Jérôme Pasquet

Auto-TLDR; Semi-supervised Image Translation with Generative Adversarial Networks Using Paired and Unpaired Images

Quantifying the Use of Domain Randomization

Mohammad Ani, Hector Basevi, Ales Leonardis

Auto-TLDR; Evaluating Domain Randomization for Synthetic Image Generation by directly measuring the difference between realistic and synthetic data distributions

Attention2AngioGAN: Synthesizing Fluorescein Angiography from Retinal Fundus Images Using Generative Adversarial Networks

Sharif Amit Kamran, Khondker Fariha Hossain, Alireza Tavakkoli, Stewart Lee Zuckerbrod

Auto-TLDR; Fluorescein Angiography from Fundus Images using Attention-based Generative Networks

A Joint Representation Learning and Feature Modeling Approach for One-Class Recognition

Pramuditha Perera, Vishal Patel

Auto-TLDR; Combining Generative Features and One-Class Classification for Effective One-class Recognition

Cross-Domain Semantic Segmentation of Urban Scenes Via Multi-Level Feature Alignment

Bin Zhang, Shengjie Zhao, Rongqing Zhang

Auto-TLDR; Cross-Domain Semantic Segmentation Using Generative Adversarial Networks

Supervised Domain Adaptation Using Graph Embedding

Lukas Hedegaard, Omar Ali Sheikh-Omar, Alexandros Iosifidis

Auto-TLDR; Domain Adaptation from the Perspective of Multi-view Graph Embedding and Dimensionality Reduction

Detail Fusion GAN: High-Quality Translation for Unpaired Images with GAN-Based Data Augmentation

Ling Li, Yaochen Li, Chuan Wu, Hang Dong, Peilin Jiang, Fei Wang

Auto-TLDR; Data Augmentation with GAN-based Generative Adversarial Network

High Resolution Face Age Editing

Xu Yao, Gilles Puy, Alasdair Newson, Yann Gousseau, Pierre Hellier

Auto-TLDR; An Encoder-Decoder Architecture for Face Age editing on High Resolution Images

Few-Shot Font Generation with Deep Metric Learning

Haruka Aoki, Koki Tsubota, Hikaru Ikuta, Kiyoharu Aizawa

Auto-TLDR; Deep Metric Learning for Japanese Typographic Font Synthesis

IDA-GAN: A Novel Imbalanced Data Augmentation GAN

Auto-TLDR; IDA-GAN: Generative Adversarial Networks for Imbalanced Data Augmentation

Leveraging Synthetic Subject Invariant EEG Signals for Zero Calibration BCI

Nik Khadijah Nik Aznan, Amir Atapour-Abarghouei, Stephen Bonner, Jason Connolly, Toby Breckon

Auto-TLDR; SIS-GAN: Subject Invariant SSVEP Generative Adversarial Network for Brain-Computer Interface

DAPC: Domain Adaptation People Counting Via Style-Level Transfer Learning and Scene-Aware Estimation

Na Jiang, Xingsen Wen, Zhiping Shi

Auto-TLDR; Domain Adaptation People counting via Style-Level Transfer Learning and Scene-Aware Estimation

UCCTGAN: Unsupervised Clothing Color Transformation Generative Adversarial Network

Shuming Sun, Xiaoqiang Li, Jide Li

Auto-TLDR; An Unsupervised Clothing Color Transformation Generative Adversarial Network

Adversarial Knowledge Distillation for a Compact Generator

Hideki Tsunashima, Shigeo Morishima, Junji Yamato, Qiu Chen, Hirokatsu Kataoka

Auto-TLDR; Adversarial Knowledge Distillation for Generative Adversarial Nets

Adversarially Constrained Interpolation for Unsupervised Domain Adaptation

Mohamed Azzam, Aurele Tohokantche Gnanha, Hau-San Wong, Si Wu

Auto-TLDR; Unsupervised Domain Adaptation with Domain Mixup Strategy

Phase Retrieval Using Conditional Generative Adversarial Networks

Tobias Uelwer, Alexander Oberstraß, Stefan Harmeling

Auto-TLDR; Conditional Generative Adversarial Networks for Phase Retrieval

A Unified Framework for Distance-Aware Domain Adaptation

Fei Wang, Youdong Ding, Huan Liang, Yuzhen Gao, Wenqi Che

Auto-TLDR; Distance-Aware Domain Adaptation

Dual-MTGAN: Stochastic and Deterministic Motion Transfer for Image-To-Video Synthesis

Fu-En Yang, Jing-Cheng Chang, Yuan-Hao Lee, Yu-Chiang Frank Wang

Auto-TLDR; Dual Motion Transfer GAN for Convolutional Neural Networks

GarmentGAN: Photo-Realistic Adversarial Fashion Transfer

Amir Hossein Raffiee, Michael Sollami

Auto-TLDR; GarmentGAN: A Generative Adversarial Network for Image-Based Garment Transfer

Disentangle, Assemble, and Synthesize: Unsupervised Learning to Disentangle Appearance and Location

Hiroaki Aizawa, Hirokatsu Kataoka, Yutaka Satoh, Kunihito Kato

Auto-TLDR; Generative Adversarial Networks with Structural Constraint for controllability of latent space

Abstract Slides Poster Similar

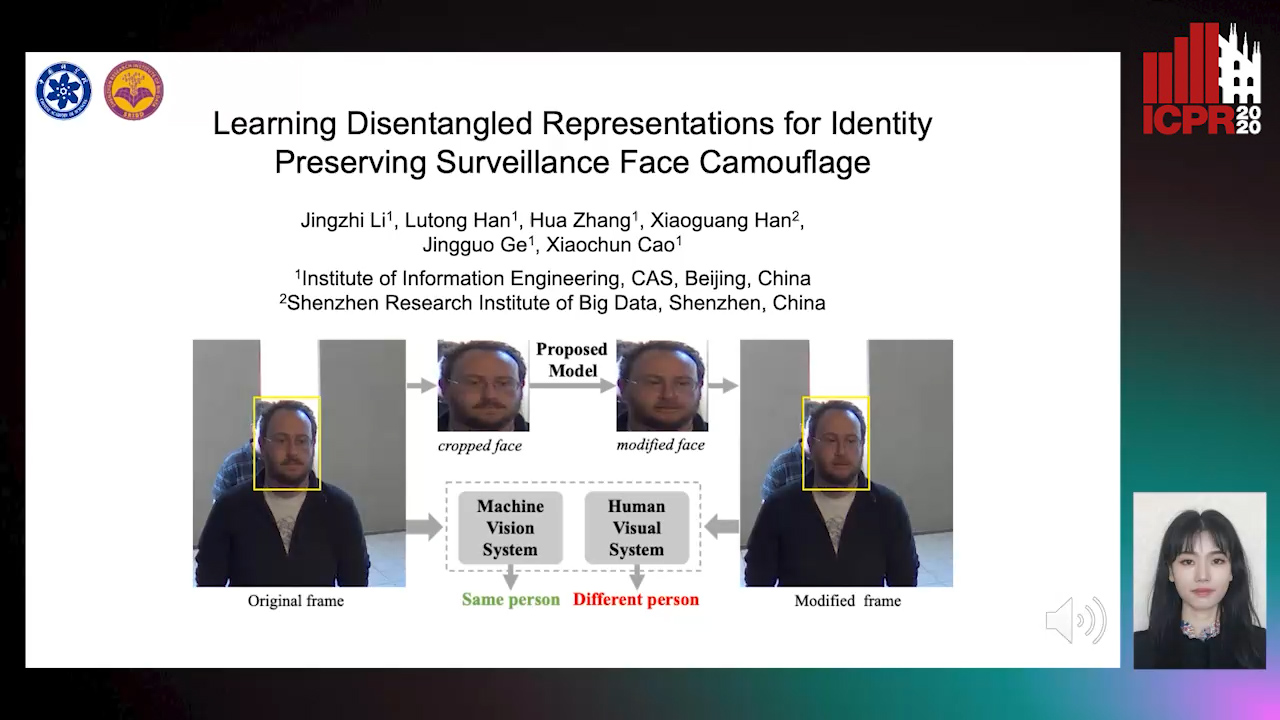

Learning Disentangled Representations for Identity Preserving Surveillance Face Camouflage

Jingzhi Li, Lutong Han, Hua Zhang, Xiaoguang Han, Jingguo Ge, Xiaochu Cao

Auto-TLDR; Individual Face Privacy under Surveillance Scenario with Multi-task Loss Function

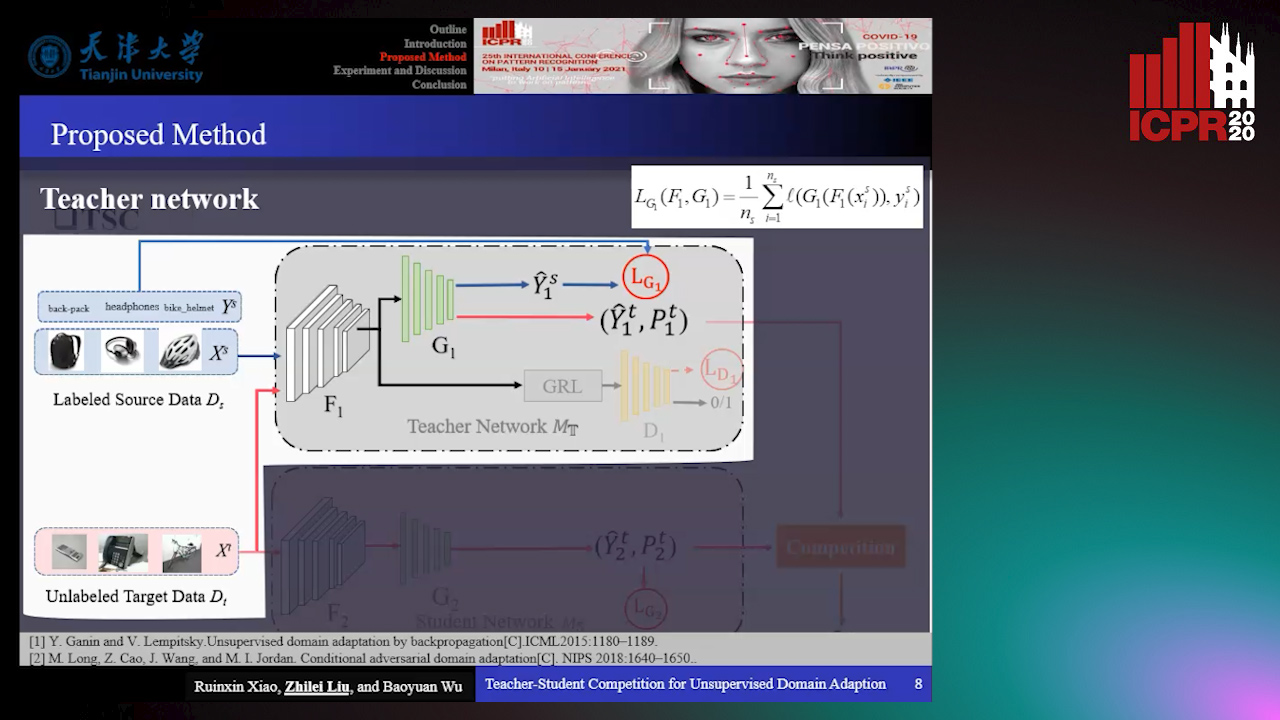

Teacher-Student Competition for Unsupervised Domain Adaptation

Ruixin Xiao, Zhilei Liu, Baoyuan Wu

Auto-TLDR; Unsupervised Domain Adaptation with Teacher-Student Competition

Foreground-Focused Domain Adaption for Object Detection

Auto-TLDR; Unsupervised Domain Adaptation for Unsupervised Object Detection

Cascade Attention Guided Residue Learning GAN for Cross-Modal Translation

Bin Duan, Wei Wang, Hao Tang, Hugo Latapie, Yan Yan

Auto-TLDR; Cascade Attention-Guided Residue GAN for Cross-modal Audio-Visual Learning

Augmented Cyclic Consistency Regularization for Unpaired Image-To-Image Translation

Takehiko Ohkawa, Naoto Inoue, Hirokatsu Kataoka, Nakamasa Inoue

Auto-TLDR; Augmented Cyclic Consistency Regularization for Unpaired Image-to-Image Translation

Generating Private Data Surrogates for Vision Related Tasks

Ryan Webster, Julien Rabin, Loic Simon, Frederic Jurie

Auto-TLDR; Generative Adversarial Networks for Membership Inference Attacks

Generative Latent Implicit Conditional Optimization When Learning from Small Sample

Auto-TLDR; GLICO: Generative Latent Implicit Conditional Optimization for Small Sample Learning

Interpreting the Latent Space of GANs Via Correlation Analysis for Controllable Concept Manipulation

Ziqiang Li, Rentuo Tao, Hongjing Niu, Bin Li

Auto-TLDR; Exploring latent space of GANs by analyzing correlation between latent variables and semantic contents in generated images

Spatial-Aware GAN for Unsupervised Person Re-Identification

Fangneng Zhan, Changgong Zhang

Auto-TLDR; Unsupervised Domain Adaptation for Person Re-Identification

Identity-Preserved Face Beauty Transformation with Conditional Generative Adversarial Networks

Auto-TLDR; Identity-preserved face beauty transformation using conditional GANs

Open Set Domain Recognition Via Attention-Based GCN and Semantic Matching Optimization

Xinxing He, Yuan Yuan, Zhiyu Jiang

Auto-TLDR; Attention-based GCN and Semantic Matching Optimization for Open Set Domain Recognition

Abstract Slides Poster Similar

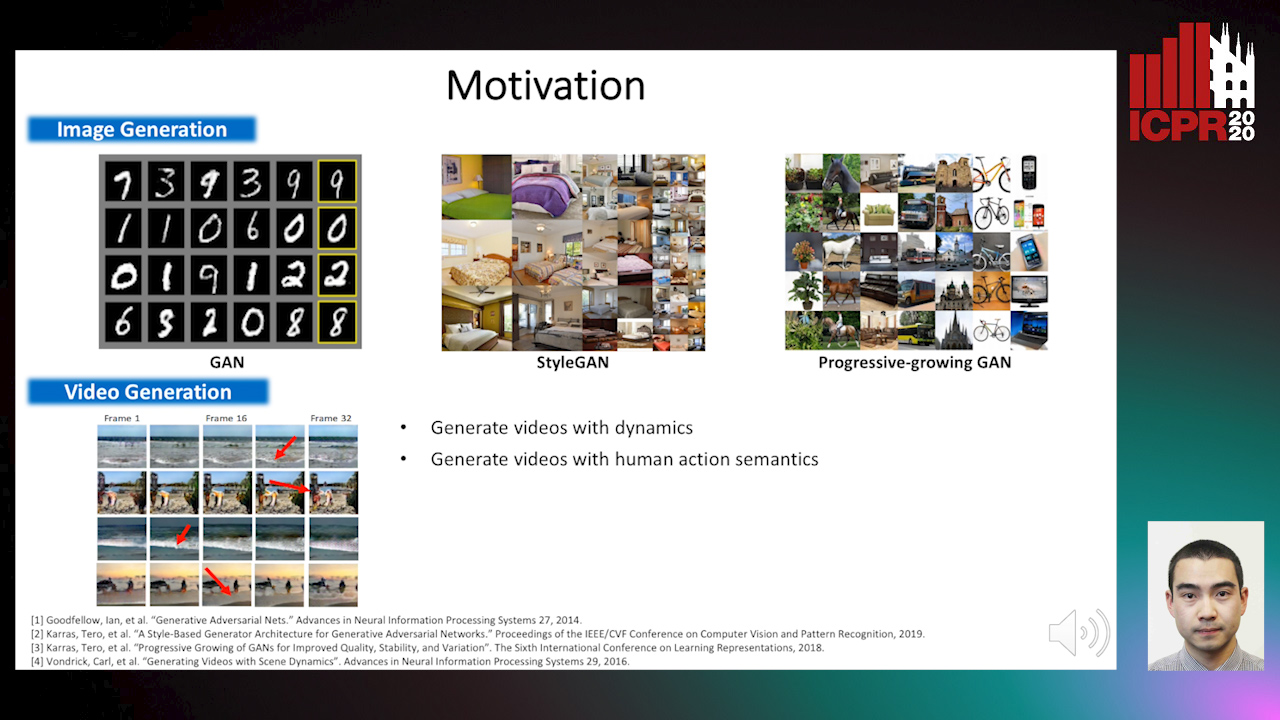

The Role of Cycle Consistency for Generating Better Human Action Videos from a Single Frame

Auto-TLDR; Generating Videos with Human Action Semantics using Cycle Constraints

Revisiting ImprovedGAN with Metric Learning for Semi-Supervised Learning

Jaewoo Park, Yoon Gyo Jung, Andrew Teoh

Auto-TLDR; Improving ImprovedGAN with Metric Learning for Semi-supervised Learning
