CardioGAN: An Attention-Based Generative Adversarial Network for Generation of Electrocardiograms

Subhrajyoti Dasgupta,

Sudip Das,

Ujjwal Bhattacharya

Auto-TLDR; CardioGAN: Generative Adversarial Network for Synthetic Electrocardiogram Signals

Similar papers

End-To-End Multi-Task Learning of Missing Value Imputation and Forecasting in Time-Series Data

Jinhee Kim, Taesung Kim, Jang-Ho Choi, Jaegul Choo

Auto-TLDR; Time-Series Prediction with Denoising and Imputation of Missing Data

Signal Generation Using 1d Deep Convolutional Generative Adversarial Networks for Fault Diagnosis of Electrical Machines

Russell Sabir, Daniele Rosato, Sven Hartmann, Clemens Gühmann

Auto-TLDR; Large Dataset Generation from Faulty AC Machines using Deep Convolutional GAN

EasiECG: A Novel Inter-Patient Arrhythmia Classification Method Using ECG Waves

Chuanqi Han, Ruoran Huang, Fang Yu, Xi Huang, Li Cui

Auto-TLDR; EasiECG: Attention-based Convolution Factorization Machines for Arrhythmia Classification

Abstract Slides Poster Similar

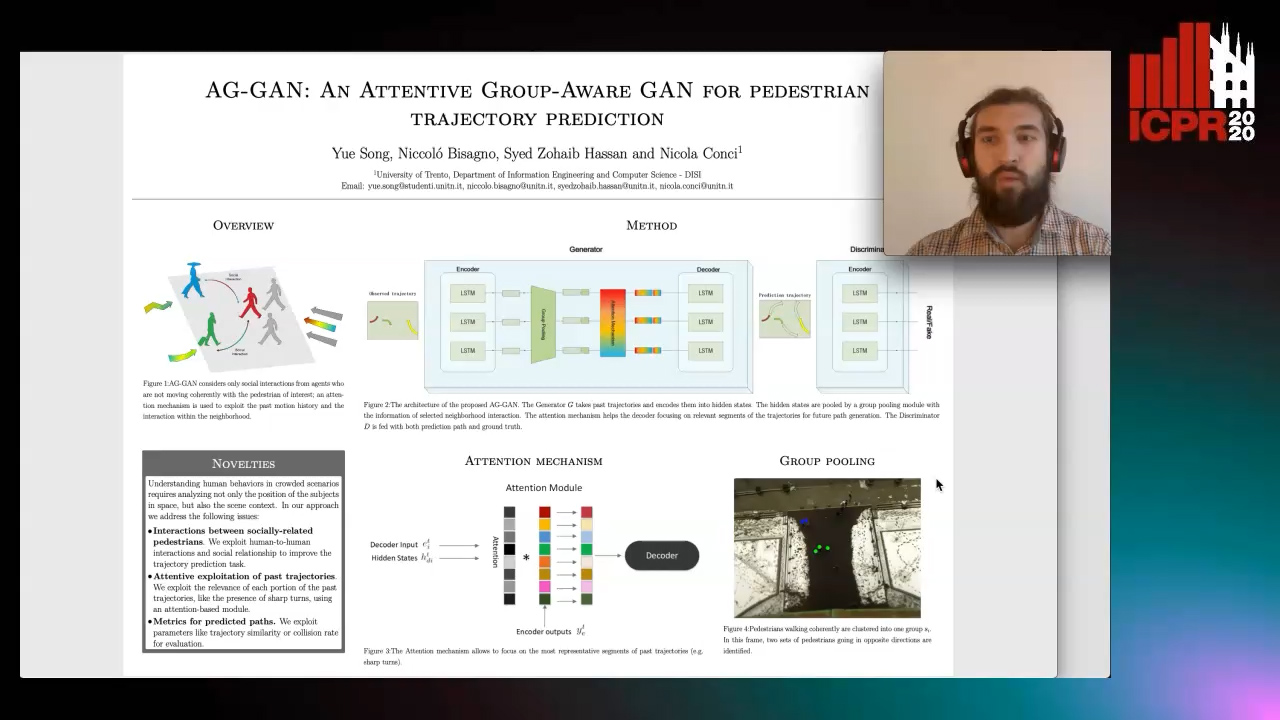

AG-GAN: An Attentive Group-Aware GAN for Pedestrian Trajectory Prediction

Yue Song, Niccolò Bisagno, Syed Zohaib Hassan, Nicola Conci

Auto-TLDR; An attentive group-aware GAN for motion prediction in crowded scenarios

Local Facial Attribute Transfer through Inpainting

Ricard Durall, Franz-Josef Pfreundt, Janis Keuper

Auto-TLDR; Attribute Transfer Inpainting Generative Adversarial Network

On the Evaluation of Generative Adversarial Networks by Discriminative Models

Amirsina Torfi, Mohammadreza Beyki, Edward Alan Fox

Auto-TLDR; Domain-agnostic GAN Evaluation with Siamese Neural Networks

Multi-Scale and Attention Based ResNet for Heartbeat Classification

Haojie Zhang, Gongping Yang, Yuwen Huang, Feng Yuan, Yilong Yin

Auto-TLDR; A Multi-Scale and Attention based ResNet for ECG heartbeat classification in intra-patient and inter-patient paradigms

Exploring Spatial-Temporal Representations for fNIRS-based Intimacy Detection via an Attention-enhanced Cascade Convolutional Recurrent Neural Network

Chao Li, Qian Zhang, Ziping Zhao

Auto-TLDR; Intimate Relationship Prediction by Attention-enhanced Cascade Convolutional Recurrent Neural Network Using Functional Near-Infrared Spectroscopy

SAGE: Sequential Attribute Generator for Analyzing Glioblastomas Using Limited Dataset

Padmaja Jonnalagedda, Brent Weinberg, Jason Allen, Taejin Min, Shiv Bhanu, Bir Bhanu

Auto-TLDR; SAGE: Generative Adversarial Networks for Imaging Biomarker Detection and Prediction

Disentangle, Assemble, and Synthesize: Unsupervised Learning to Disentangle Appearance and Location

Hiroaki Aizawa, Hirokatsu Kataoka, Yutaka Satoh, Kunihito Kato

Auto-TLDR; Generative Adversarial Networks with Structural Constraint for controllability of latent space

Time Series Data Augmentation for Neural Networks by Time Warping with a Discriminative Teacher

Brian Kenji Iwana, Seiichi Uchida

Auto-TLDR; Guided Warping for Time Series Data Augmentation

Leveraging Synthetic Subject Invariant EEG Signals for Zero Calibration BCI

Nik Khadijah Nik Aznan, Amir Atapour-Abarghouei, Stephen Bonner, Jason Connolly, Toby Breckon

Auto-TLDR; SIS-GAN: Subject Invariant SSVEP Generative Adversarial Network for Brain-Computer Interface

DAG-Net: Double Attentive Graph Neural Network for Trajectory Forecasting

Alessio Monti, Alessia Bertugli, Simone Calderara, Rita Cucchiara

Auto-TLDR; Recurrent Generative Model for Multi-modal Human Motion Behaviour in Urban Environments

Combining GANs and AutoEncoders for Efficient Anomaly Detection

Fabio Carrara, Giuseppe Amato, Luca Brombin, Fabrizio Falchi, Claudio Gennaro

Auto-TLDR; CBIGAN: Anomaly Detection in Images with Consistency Constrained BiGAN

Switching Dynamical Systems with Deep Neural Networks

Cesar Ali Ojeda Marin, Kostadin Cvejoski, Bogdan Georgiev, Ramses J. Sanchez

Auto-TLDR; Variational RNN for Switching Dynamics

Semi-Supervised Generative Adversarial Networks with a Pair of Complementary Generators for Retinopathy Screening

Yingpeng Xie, Qiwei Wan, Hai Xie, En-Leng Tan, Yanwu Xu, Baiying Lei

Auto-TLDR; Generative Adversarial Networks for Retinopathy Diagnosis via Fundus Images

Trajectory-User Link with Attention Recurrent Networks

Tao Sun, Yongjun Xu, Fei Wang, Lin Wu, 塘文 钱, Zezhi Shao

Auto-TLDR; TULAR: Trajectory-User Link with Attention Recurrent Neural Networks

AVAE: Adversarial Variational Auto Encoder

Antoine Plumerault, Hervé Le Borgne, Celine Hudelot

Auto-TLDR; Combining VAE and GAN for Realistic Image Generation

Abstract Slides Poster Similar

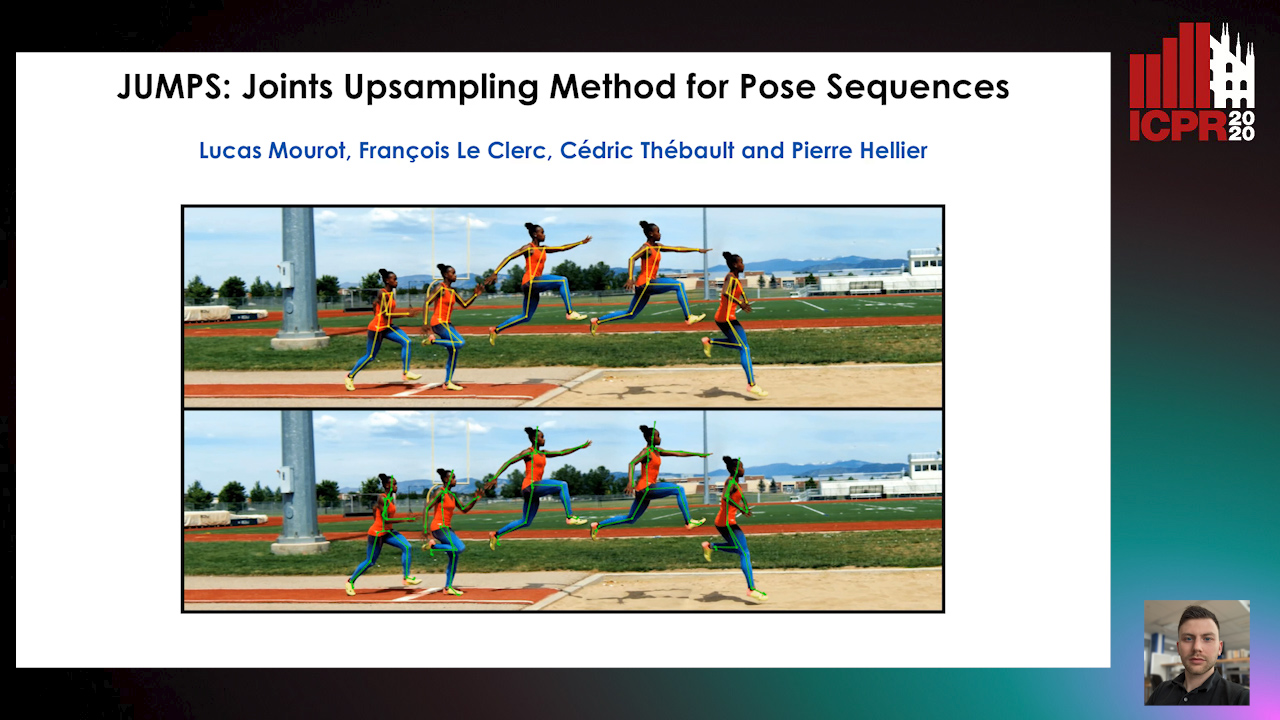

JUMPS: Joints Upsampling Method for Pose Sequences

Lucas Mourot, Francois Le Clerc, Cédric Thébault, Pierre Hellier

Auto-TLDR; JUMPS: Increasing the Number of Joints in 2D Pose Estimation and Recovering Occluded or Missing Joints

Estimation of Clinical Tremor Using Spatio-Temporal Adversarial AutoEncoder

Li Zhang, Vidya Koesmahargyo, Isaac Galatzer-Levy

Auto-TLDR; ST-AAE: Spatio-temporal Adversarial Autoencoder for Clinical Assessment of Hand Tremor Frequency and Severity

Learning to Take Directions One Step at a Time

Qiyang Hu, Adrian Wälchli, Tiziano Portenier, Matthias Zwicker, Paolo Favaro

Auto-TLDR; Generating a Sequence of Motion Strokes from a Single Image

Deep Learning Based Sepsis Intervention: The Modelling and Prediction of Severe Sepsis Onset

Auto-TLDR; Predicting Sepsis onset by up to six hours prior using a boosted cascading training methodology and adjustable margin hinge loss function

DeepBEV: A Conditional Adversarial Network for Bird’s Eye View Generation

Auto-TLDR; A Generative Adversarial Network for Semantic Object Representation in Autonomous Vehicles

Abstract Slides Poster Similar

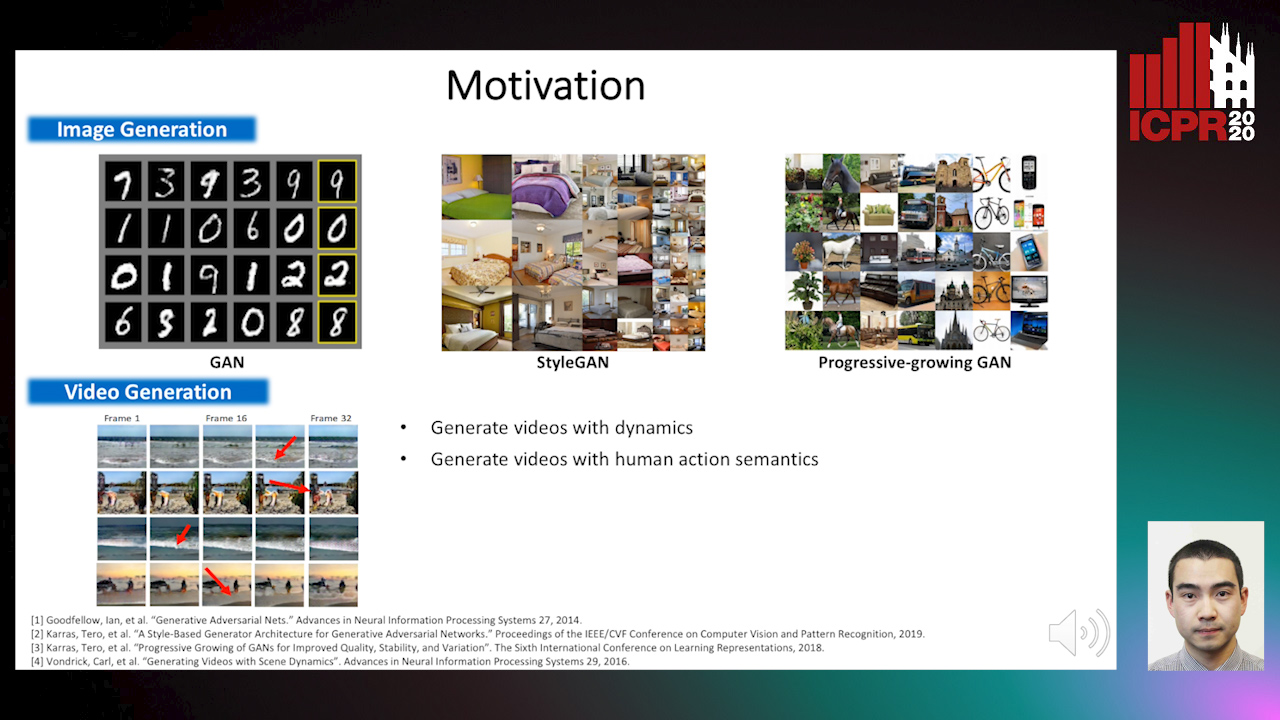

The Role of Cycle Consistency for Generating Better Human Action Videos from a Single Frame

Auto-TLDR; Generating Videos with Human Action Semantics using Cycle Constraints

S2I-Bird: Sound-To-Image Generation of Bird Species Using Generative Adversarial Networks

Joo Yong Shim, Joongheon Kim, Jong-Kook Kim

Auto-TLDR; Generating bird images from sound using conditional generative adversarial networks

PrivAttNet: Predicting Privacy Risks in Images Using Visual Attention

Chen Zhang, Thivya Kandappu, Vigneshwaran Subbaraju

Auto-TLDR; PrivAttNet: A Visual Attention Based Approach for Privacy Sensitivity in Images

Dual-MTGAN: Stochastic and Deterministic Motion Transfer for Image-To-Video Synthesis

Fu-En Yang, Jing-Cheng Chang, Yuan-Hao Lee, Yu-Chiang Frank Wang

Auto-TLDR; Dual Motion Transfer GAN for Convolutional Neural Networks

Phase Retrieval Using Conditional Generative Adversarial Networks

Tobias Uelwer, Alexander Oberstraß, Stefan Harmeling

Auto-TLDR; Conditional Generative Adversarial Networks for Phase Retrieval

MedZip: 3D Medical Images Lossless Compressor Using Recurrent Neural Network (LSTM)

Omniah Nagoor, Joss Whittle, Jingjing Deng, Benjamin Mora, Mark W. Jones

Auto-TLDR; Recurrent Neural Network for Lossless Medical Image Compression using Long Short-Term Memory

A Quantitative Evaluation Framework of Video De-Identification Methods

Sathya Bursic, Alessandro D'Amelio, Marco Granato, Giuliano Grossi, Raffaella Lanzarotti

Auto-TLDR; Face de-identification using photo-reality and facial expressions

Global Feature Aggregation for Accident Anticipation

Mishal Fatima, Umar Karim Khan, Chong Min Kyung

Auto-TLDR; Feature Aggregation for Predicting Accidents in Video Sequences

GAN-Based Gaussian Mixture Model Responsibility Learning

Wanming Huang, Yi Da Xu, Shuai Jiang, Xuan Liang, Ian Oppermann

Auto-TLDR; Posterior Consistency Module for Gaussian Mixture Model

Cascade Attention Guided Residue Learning GAN for Cross-Modal Translation

Bin Duan, Wei Wang, Hao Tang, Hugo Latapie, Yan Yan

Auto-TLDR; Cascade Attention-Guided Residue GAN for Cross-modal Audio-Visual Learning

Detail-Revealing Deep Low-Dose CT Reconstruction

Xinchen Ye, Yuyao Xu, Rui Xu, Shoji Kido, Noriyuki Tomiyama

Auto-TLDR; A Dual-branch Aggregation Network for Low-Dose CT Reconstruction

Text Recognition in Real Scenarios with a Few Labeled Samples

Jinghuang Lin, Cheng Zhanzhan, Fan Bai, Yi Niu, Shiliang Pu, Shuigeng Zhou

Auto-TLDR; Few-shot Adversarial Sequence Domain Adaptation for Scene Text Recognition

Attributes Aware Face Generation with Generative Adversarial Networks

Zheng Yuan, Jie Zhang, Shiguang Shan, Xilin Chen

Auto-TLDR; AFGAN: A Generative Adversarial Network for Attributes Aware Face Image Generation

Quantifying the Use of Domain Randomization

Mohammad Ani, Hector Basevi, Ales Leonardis

Auto-TLDR; Evaluating Domain Randomization for Synthetic Image Generation by directly measuring the difference between realistic and synthetic data distributions

GAP: Quantifying the Generative Adversarial Set and Class Feature Applicability of Deep Neural Networks

Edward Collier, Supratik Mukhopadhyay

Auto-TLDR; Approximating Adversarial Learning in Deep Neural Networks Using Set and Class Adversaries

A Low-Complexity R-Peak Detection Algorithm with Adaptive Thresholding for Wearable Devices

Tiago Rodrigues, Hugo Plácido Da Silva, Ana Luisa Nobre Fred, Sirisack Samoutphonh

Auto-TLDR; Real-Time and Low-Complexity R-peak Detection for Single Lead ECG Signals

Age Gap Reducer-GAN for Recognizing Age-Separated Faces

Daksha Yadav, Naman Kohli, Mayank Vatsa, Richa Singh, Afzel Noore

Auto-TLDR; Generative Adversarial Network for Age-separated Face Recognition

Hierarchical Mixtures of Generators for Adversarial Learning

Alper Ahmetoğlu, Ethem Alpaydin

Auto-TLDR; Hierarchical Mixture of Generative Adversarial Networks

Stratified Multi-Task Learning for Robust Spotting of Scene Texts

Kinjal Dasgupta, Sudip Das, Ujjwal Bhattacharya

Auto-TLDR; Feature Representation Block for Multi-task Learning of Scene Text

Enriching Video Captions with Contextual Text

Philipp Rimle, Pelin Dogan, Markus Gross

Auto-TLDR; Contextualized Video Captioning Using Contextual Text

Reducing the Variance of Variational Estimates of Mutual Information by Limiting the Critic's Hypothesis Space to RKHS

Aditya Sreekar P, Ujjwal Tiwari, Anoop Namboodiri

Auto-TLDR; Mutual Information Estimation from Variational Lower Bounds Using a Critic's Hypothesis Space

Mutual Information Based Method for Unsupervised Disentanglement of Video Representation

Aditya Sreekar P, Ujjwal Tiwari, Anoop Namboodiri

Auto-TLDR; MIPAE: Mutual Information Predictive Auto-Encoder for Video Prediction

Data Augmentation Via Mixed Class Interpolation Using Cycle-Consistent Generative Adversarial Networks Applied to Cross-Domain Imagery

Hiroshi Sasaki, Chris G. Willcocks, Toby Breckon

Auto-TLDR; C2GMA: A Generative Domain Transfer Model for Non-visible Domain Classification

A Neural Lip-Sync Framework for Synthesizing Photorealistic Virtual News Anchors

Ruobing Zheng, Zhou Zhu, Bo Song, Ji Changjiang

Auto-TLDR; Lip-sync: Synthesis of a Virtual News Anchor for Low-Delayed Applications

Constructing Geographic and Long-term Temporal Graph for Traffic Forecasting

Yiwen Sun, Yulu Wang, Kun Fu, Zheng Wang, Changshui Zhang, Jieping Ye

Auto-TLDR; GLT-GCRNN: Geographic and Long-term Temporal Graph Convolutional Recurrent Neural Network for Traffic Forecasting
