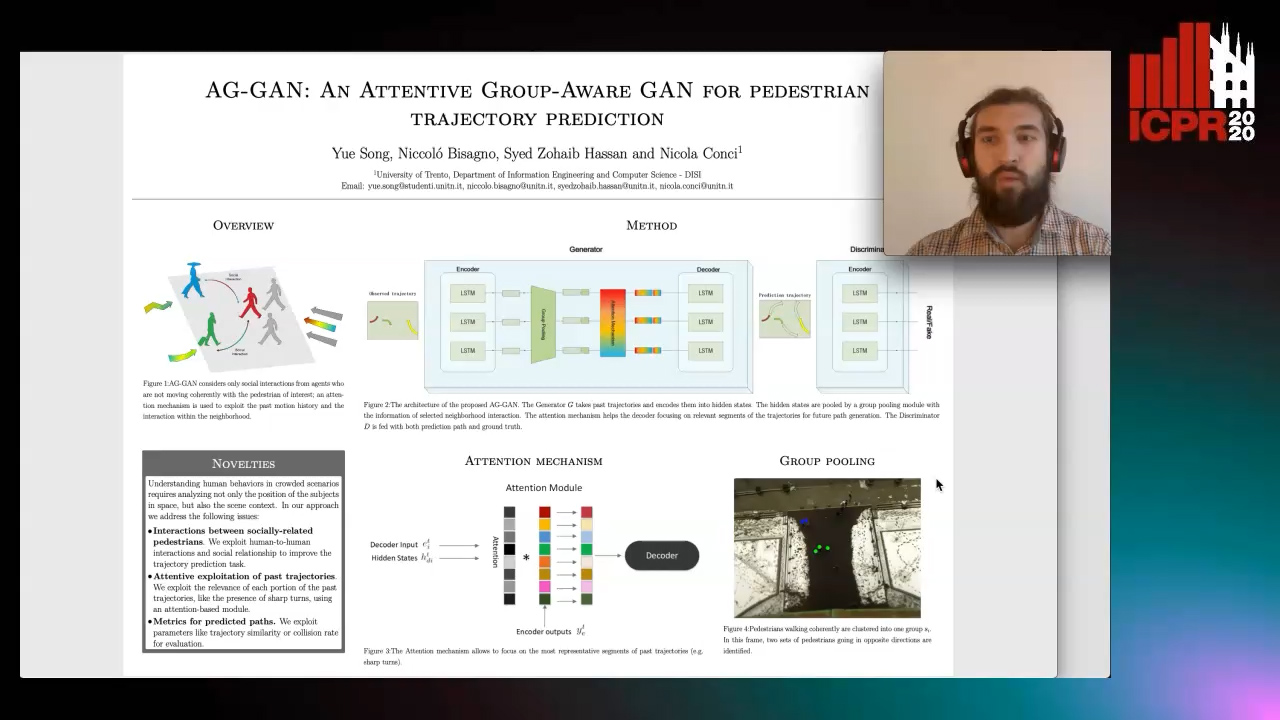

AG-GAN: An Attentive Group-Aware GAN for Pedestrian Trajectory Prediction

Yue Song,

Niccolò Bisagno,

Syed Zohaib Hassan,

Nicola Conci

Auto-TLDR; An attentive group-aware GAN for motion prediction in crowded scenarios

Similar papers

DAG-Net: Double Attentive Graph Neural Network for Trajectory Forecasting

Alessio Monti, Alessia Bertugli, Simone Calderara, Rita Cucchiara

Auto-TLDR; Recurrent Generative Model for Multi-modal Human Motion Behaviour in Urban Environments

Transformer Networks for Trajectory Forecasting

Francesco Giuliari, Hasan Irtiza, Marco Cristani, Fabio Galasso

Auto-TLDR; Transformer Networks for Trajectory Prediction of People Interactions

Multiple Future Prediction Leveraging Synthetic Trajectories

Lorenzo Berlincioni, Federico Becattini, Lorenzo Seidenari, Alberto Del Bimbo

Auto-TLDR; Synthetic Trajectory Prediction using Markov Chains

Trajectory-User Link with Attention Recurrent Networks

Tao Sun, Yongjun Xu, Fei Wang, Lin Wu, Tangwen Qian, Zezhi Shao

Auto-TLDR; TULAR: Trajectory-User Link with Attention Recurrent Neural Networks

What and How? Jointly Forecasting Human Action and Pose

Yanjun Zhu, Yanxia Zhang, Qiong Liu, Andreas Girgensohn

Auto-TLDR; Forecasting Human Actions and Motion Trajectories with Joint Action Classification and Pose Regression

Future Urban Scenes Generation through Vehicles Synthesis

Alessandro Simoni, Luca Bergamini, Andrea Palazzi, Simone Calderara, Rita Cucchiara

Auto-TLDR; Predicting the Future of an Urban Scene with a Novel View Synthesis Paradigm

AerialMPTNet: Multi-Pedestrian Tracking in Aerial Imagery Using Temporal and Graphical Features

Maximilian Kraus, Seyed Majid Azimi, Emec Ercelik, Reza Bahmanyar, Peter Reinartz, Alois Knoll

Auto-TLDR; AerialMPTNet: A novel approach for multi-pedestrian tracking in geo-referenced aerial imagery by fusing appearance features

CardioGAN: An Attention-Based Generative Adversarial Network for Generation of Electrocardiograms

Subhrajyoti Dasgupta, Sudip Das, Ujjwal Bhattacharya

Auto-TLDR; CardioGAN: Generative Adversarial Network for Synthetic Electrocardiogram Signals

Constructing Geographic and Long-term Temporal Graph for Traffic Forecasting

Yiwen Sun, Yulu Wang, Kun Fu, Zheng Wang, Changshui Zhang, Jieping Ye

Auto-TLDR; GLT-GCRNN: Geographic and Long-term Temporal Graph Convolutional Recurrent Neural Network for Traffic Forecasting

Context Visual Information-Based Deliberation Network for Video Captioning

Min Lu, Xueyong Li, Caihua Liu

Auto-TLDR; Context visual information-based deliberation network for video captioning

Switching Dynamical Systems with Deep Neural Networks

Cesar Ali Ojeda Marin, Kostadin Cvejoski, Bogdan Georgiev, Ramses J. Sanchez

Auto-TLDR; Variational RNN for Switching Dynamics

Learning to Take Directions One Step at a Time

Qiyang Hu, Adrian Wälchli, Tiziano Portenier, Matthias Zwicker, Paolo Favaro

Auto-TLDR; Generating a Sequence of Motion Strokes from a Single Image

A Grid-Based Representation for Human Action Recognition

Soufiane Lamghari, Guillaume-Alexandre Bilodeau, Nicolas Saunier

Auto-TLDR; GRAR: Grid-based Representation for Action Recognition in Videos

Towards Practical Compressed Video Action Recognition: A Temporal Enhanced Multi-Stream Network

Bing Li, Longteng Kong, Dongming Zhang, Xiuguo Bao, Di Huang, Yunhong Wang

Auto-TLDR; TEMSN: Temporal Enhanced Multi-Stream Network for Compressed Video Action Recognition

Mutual Information Based Method for Unsupervised Disentanglement of Video Representation

Aditya Sreekar P, Ujjwal Tiwari, Anoop Namboodiri

Auto-TLDR; MIPAE: Mutual Information Predictive Auto-Encoder for Video Prediction

Reducing the Variance of Variational Estimates of Mutual Information by Limiting the Critic's Hypothesis Space to RKHS

Aditya Sreekar P, Ujjwal Tiwari, Anoop Namboodiri

Auto-TLDR; Mutual Information Estimation from Variational Lower Bounds Using a Critic's Hypothesis Space

Global Feature Aggregation for Accident Anticipation

Mishal Fatima, Umar Karim Khan, Chong Min Kyung

Auto-TLDR; Feature Aggregation for Predicting Accidents in Video Sequences

Visual Oriented Encoder: Integrating Multimodal and Multi-Scale Contexts for Video Captioning

Auto-TLDR; Visual Oriented Encoder for Video Captioning

Image Sequence Based Cyclist Action Recognition Using Multi-Stream 3D Convolution

Stefan Zernetsch, Steven Schreck, Viktor Kress, Konrad Doll, Bernhard Sick

Auto-TLDR; 3D-ConvNet: A Multi-stream 3D Convolutional Neural Network for Detecting Cyclists in Real World Traffic Situations

End-To-End Multi-Task Learning of Missing Value Imputation and Forecasting in Time-Series Data

Jinhee Kim, Taesung Kim, Jang-Ho Choi, Jaegul Choo

Auto-TLDR; Time-Series Prediction with Denoising and Imputation of Missing Data

Attentive Visual Semantic Specialized Network for Video Captioning

Jesus Perez-Martin, Benjamin Bustos, Jorge Pérez

Auto-TLDR; Adaptive Visual Semantic Specialized Network for Video Captioning

Translating Adult's Focus of Attention to Elderly's

Onkar Krishna, Go Irie, Takahito Kawanishi, Kunio Kashino, Kiyoharu Aizawa

Auto-TLDR; Elderly Focus of Attention Prediction Using Deep Image-to-Image Translation

Dual-MTGAN: Stochastic and Deterministic Motion Transfer for Image-To-Video Synthesis

Fu-En Yang, Jing-Cheng Chang, Yuan-Hao Lee, Yu-Chiang Frank Wang

Auto-TLDR; Dual Motion Transfer GAN for Convolutional Neural Networks

SDMA: Saliency Driven Mutual Cross Attention for Multi-Variate Time Series

Auto-TLDR; Saliency-Driven Mutual Cross Attention for Intelligent Time Series Analytics

Time Series Data Augmentation for Neural Networks by Time Warping with a Discriminative Teacher

Brian Kenji Iwana, Seiichi Uchida

Auto-TLDR; Guided Warping for Time Series Data Augmentation

PIN: A Novel Parallel Interactive Network for Spoken Language Understanding

Peilin Zhou, Zhiqi Huang, Fenglin Liu, Yuexian Zou

Auto-TLDR; Parallel Interactive Network for Spoken Language Understanding

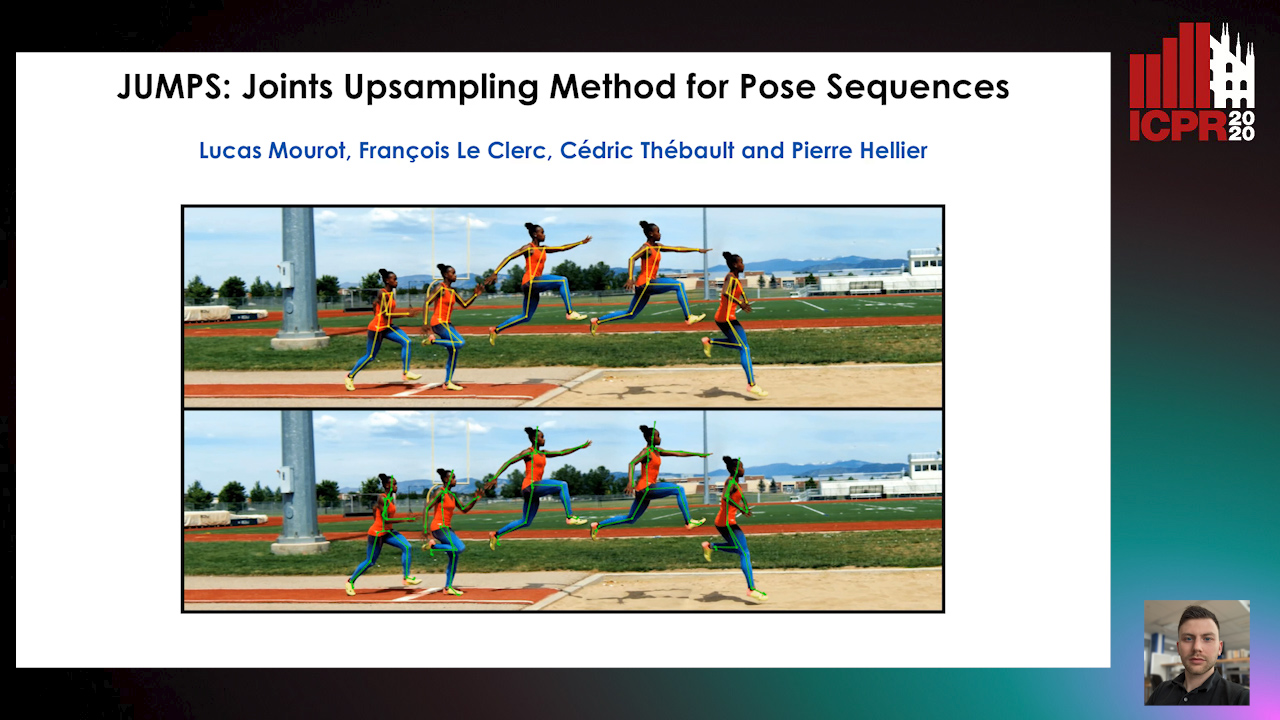

JUMPS: Joints Upsampling Method for Pose Sequences

Lucas Mourot, Francois Le Clerc, Cédric Thébault, Pierre Hellier

Auto-TLDR; JUMPS: Increasing the Number of Joints in 2D Pose Estimation and Recovering Occluded or Missing Joints

Interpretable Structured Learning with Sparse Gated Sequence Encoder for Protein-Protein Interaction Prediction

Kishan K C, Feng Cui, Anne Haake, Rui Li

Auto-TLDR; Predicting Protein-Protein Interactions Using Sequence Representations

Attention-Driven Body Pose Encoding for Human Activity Recognition

Bappaditya Debnath, Swagat Kumar, Marry O'Brien, Ardhendu Behera

Auto-TLDR; Attention-based Body Pose Encoding for Human Activity Recognition

Self-Supervised Joint Encoding of Motion and Appearance for First Person Action Recognition

Mirco Planamente, Andrea Bottino, Barbara Caputo

Auto-TLDR; A Single Stream Architecture for Egocentric Action Recognition from the First-Person Point of View

Shape Consistent 2D Keypoint Estimation under Domain Shift

Levi Vasconcelos, Massimiliano Mancini, Davide Boscaini, Barbara Caputo, Elisa Ricci

Auto-TLDR; Deep Adaptation for Keypoint Prediction under Domain Shift

Let's Play Music: Audio-Driven Performance Video Generation

Hao Zhu, Yi Li, Feixia Zhu, Aihua Zheng, Ran He

Auto-TLDR; APVG: Audio-driven Performance Video Generation Using Structured Temporal UNet

PoseCVAE: Anomalous Human Activity Detection

Yashswi Jain, Ashvini Kumar Sharma, Rajbabu Velmurugan, Biplab Banerjee

Auto-TLDR; PoseCVAE: Anomalous Human Activity Detection Using Generative Modeling

Dual-Mode Iterative Denoiser: Tackling the Weak Label for Anomaly Detection

Auto-TLDR; A Dual-Mode Iterative Denoiser for Crowd Anomaly Detection

Map-Based Temporally Consistent Geolocalization through Learning Motion Trajectories

Auto-TLDR; Exploiting Motion Trajectories for Geolocalization of Objects on a Topological Map Using a Recurrent Neural Network

Self-Supervised Learning of Dynamic Representations for Static Images

Siyang Song, Enrique Sanchez, Linlin Shen, Michel Valstar

Auto-TLDR; Facial Action Unit Intensity Estimation and Affect Estimation from Still Images with Multiple Temporal Scale

Trajectory Representation Learning for Multi-Task NMRDP Planning

Firas Jarboui, Vianney Perchet

Auto-TLDR; Exploring Non Markovian Reward Decision Processes for Reinforcement Learning

Explore and Explain: Self-Supervised Navigation and Recounting

Roberto Bigazzi, Federico Landi, Marcella Cornia, Silvia Cascianelli, Lorenzo Baraldi, Rita Cucchiara

Auto-TLDR; Exploring a Photorealistic Environment for Explanation and Navigation

Hierarchical Multimodal Attention for Deep Video Summarization

Melissa Sanabria, Frederic Precioso, Thomas Menguy

Auto-TLDR; Automatic Summarization of Professional Soccer Matches Using Event-Stream Data and Multi-Instance Learning

Exploring Severe Occlusion: Multi-Person 3D Pose Estimation with Gated Convolution

Renshu Gu, Gaoang Wang, Jenq-Neng Hwang

Auto-TLDR; 3D Human Pose Estimation for Multi-Human Videos with Occlusion

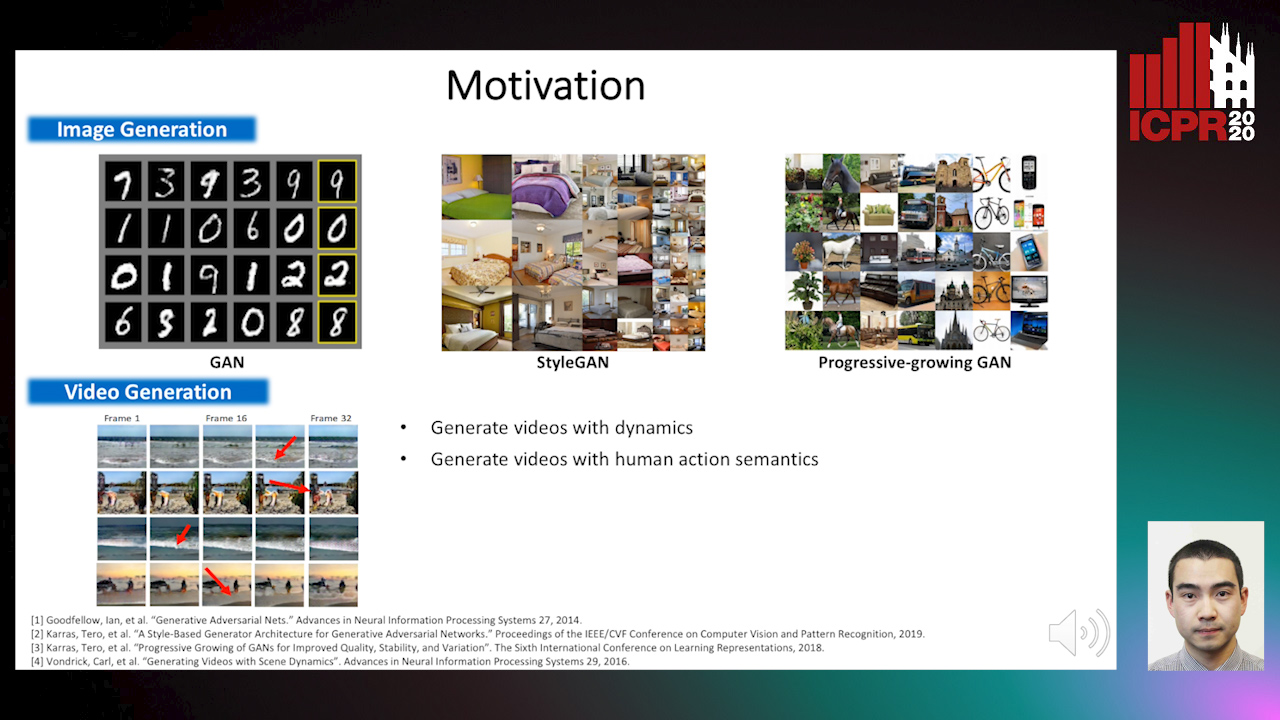

The Role of Cycle Consistency for Generating Better Human Action Videos from a Single Frame

Auto-TLDR; Generating Videos with Human Action Semantics using Cycle Constraints

Text Synopsis Generation for Egocentric Videos

Aidean Sharghi, Niels Lobo, Mubarak Shah

Auto-TLDR; Egocentric Video Summarization Using Multi-task Learning for End-to-End Learning

Machine-Learned Regularization and Polygonization of Building Segmentation Masks

Stefano Zorzi, Ksenia Bittner, Friedrich Fraundorfer

Auto-TLDR; Automatic Regularization and Polygonization of Building Segmentation masks using Generative Adversarial Network

Local Facial Attribute Transfer through Inpainting

Ricard Durall, Franz-Josef Pfreundt, Janis Keuper

Auto-TLDR; Attribute Transfer Inpainting Generative Adversarial Network

Unsupervised 3D Human Pose Estimation in Multi-view-multi-pose Video

Cheng Sun, Diego Thomas, Hiroshi Kawasaki

Auto-TLDR; Unsupervised 3D Human Pose Estimation from 2D Videos Using Generative Adversarial Network

Exploring Spatial-Temporal Representations for fNIRS-based Intimacy Detection via an Attention-enhanced Cascade Convolutional Recurrent Neural Network

Chao Li, Qian Zhang, Ziping Zhao

Auto-TLDR; Intimate Relationship Prediction by Attention-enhanced Cascade Convolutional Recurrent Neural Network Using Functional Near-Infrared Spectroscopy

Semantic Segmentation for Pedestrian Detection from Motion in Temporal Domain

Auto-TLDR; Motion Profile: Recognizing Pedestrians along with their Motion Directions in a Temporal Way

Better Prior Knowledge Improves Human-Pose-Based Extrinsic Camera Calibration

Olivier Moliner, Sangxia Huang, Kalle Åström

Auto-TLDR; Improving Human-pose-based Extrinsic Calibration for Multi-Camera Systems
