Interpretable Structured Learning with Sparse Gated Sequence Encoder for Protein-Protein Interaction Prediction

Kishan K C,

Feng Cui,

Anne Haake,

Rui Li

Auto-TLDR; Predicting Protein-Protein Interactions Using Sequence Representations

Similar papers

Trajectory-User Link with Attention Recurrent Networks

Tao Sun, Yongjun Xu, Fei Wang, Lin Wu, 塘文 钱, Zezhi Shao

Auto-TLDR; TULAR: Trajectory-User Link with Attention Recurrent Neural Networks

Temporal Pattern Detection in Time-Varying Graphical Models

Federico Tomasi, Veronica Tozzo, Annalisa Barla

Auto-TLDR; A dynamical network inference model that leverages kernels to consider general temporal patterns

Region and Relations Based Multi Attention Network for Graph Classification

Manasvi Aggarwal, M. Narasimha Murty

Auto-TLDR; R2POOL: A Graph Pooling Layer for Non-euclidean Structures

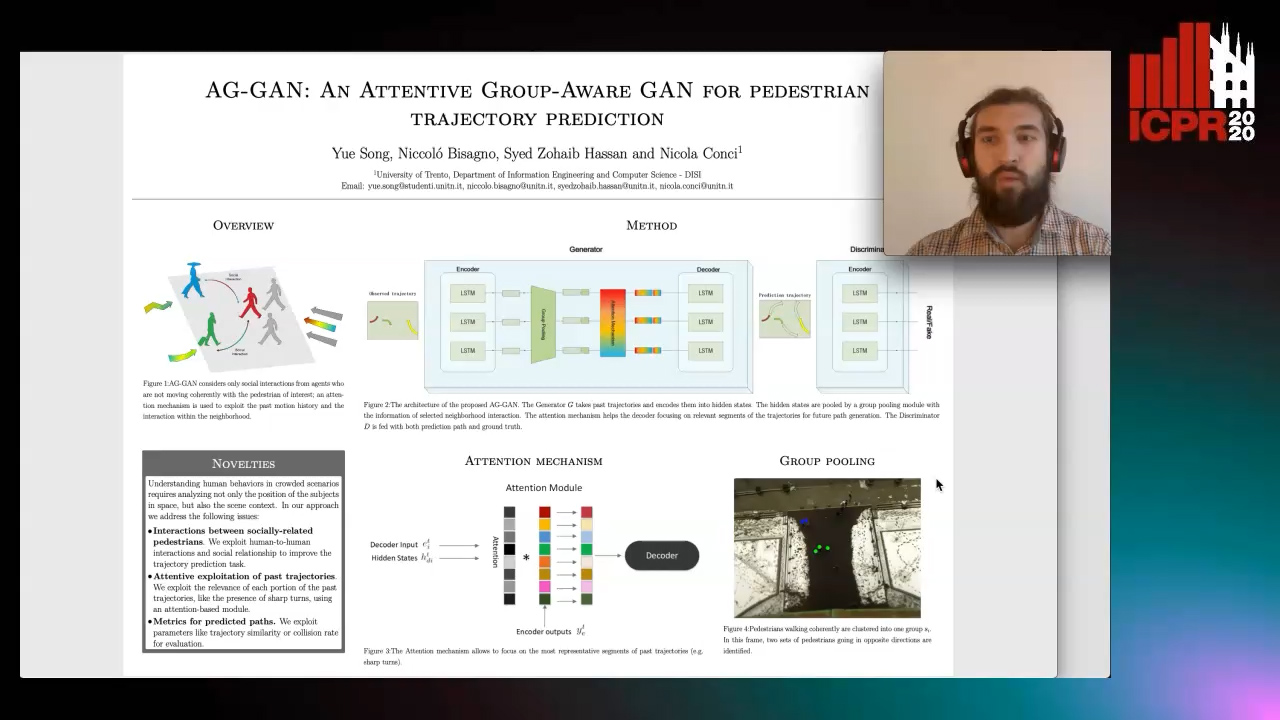

AG-GAN: An Attentive Group-Aware GAN for Pedestrian Trajectory Prediction

Yue Song, Niccolò Bisagno, Syed Zohaib Hassan, Nicola Conci

Auto-TLDR; An attentive group-aware GAN for motion prediction in crowded scenarios

End-To-End Multi-Task Learning of Missing Value Imputation and Forecasting in Time-Series Data

Jinhee Kim, Taesung Kim, Jang-Ho Choi, Jaegul Choo

Auto-TLDR; Time-Series Prediction with Denoising and Imputation of Missing Data

Recursive Convolutional Neural Networks for Epigenomics

Aikaterini Symeonidi, Anguelos Nicolaou, Frank Johannes, Vincent Christlein

Auto-TLDR; Recursive Convolutional Neural Networks for Epigenomic Data Analysis

Aggregating Dependent Gaussian Experts in Local Approximation

Auto-TLDR; A novel approach for aggregating the Gaussian experts by detecting strong violations of conditional independence

Road Network Metric Learning for Estimated Time of Arrival

Yiwen Sun, Kun Fu, Zheng Wang, Changshui Zhang, Jieping Ye

Auto-TLDR; Road Network Metric Learning for Estimated Time of Arrival (RNML-ETA)

Switching Dynamical Systems with Deep Neural Networks

Cesar Ali Ojeda Marin, Kostadin Cvejoski, Bogdan Georgiev, Ramses J. Sanchez

Auto-TLDR; Variational RNN for Switching Dynamics

Exploring Spatial-Temporal Representations for fNIRS-based Intimacy Detection via an Attention-enhanced Cascade Convolutional Recurrent Neural Network

Chao Li, Qian Zhang, Ziping Zhao

Auto-TLDR; Intimate Relationship Prediction by Attention-enhanced Cascade Convolutional Recurrent Neural Network Using Functional Near-Infrared Spectroscopy

PIN: A Novel Parallel Interactive Network for Spoken Language Understanding

Peilin Zhou, Zhiqi Huang, Fenglin Liu, Yuexian Zou

Auto-TLDR; Parallel Interactive Network for Spoken Language Understanding

Total Whitening for Online Signature Verification Based on Deep Representation

Xiaomeng Wu, Akisato Kimura, Kunio Kashino, Seiichi Uchida

Auto-TLDR; Total Whitening for Online Signature Verification

Automatically Mining Relevant Variable Interactions Via Sparse Bayesian Learning

Ryoichiro Yafune, Daisuke Sakuma, Yasuo Tabei, Noritaka Saito, Hiroto Saigo

Auto-TLDR; Sparse Bayes for Interpretable Non-linear Prediction

EasiECG: A Novel Inter-Patient Arrhythmia Classification Method Using ECG Waves

Chuanqi Han, Ruoran Huang, Fang Yu, Xi Huang, Li Cui

Auto-TLDR; EasiECG: Attention-based Convolution Factorization Machines for Arrhythmia Classification

Constructing Geographic and Long-term Temporal Graph for Traffic Forecasting

Yiwen Sun, Yulu Wang, Kun Fu, Zheng Wang, Changshui Zhang, Jieping Ye

Auto-TLDR; GLT-GCRNN: Geographic and Long-term Temporal Graph Convolutional Recurrent Neural Network for Traffic Forecasting

Privacy Attributes-Aware Message Passing Neural Network for Visual Privacy Attributes Classification

Hanbin Hong, Wentao Bao, Yuan Hong, Yu Kong

Auto-TLDR; Privacy Attributes-Aware Message Passing Neural Network for Visual Privacy Attribute Classification

Epitomic Variational Graph Autoencoder

Rayyan Ahmad Khan, Muhammad Umer Anwaar, Martin Kleinsteuber

Auto-TLDR; EVGAE: A Generative Variational Autoencoder for Graph Data

Equation Attention Relationship Network (EARN) : A Geometric Deep Metric Framework for Learning Similar Math Expression Embedding

Saleem Ahmed, Kenny Davila, Srirangaraj Setlur, Venu Govindaraju

Auto-TLDR; Representational Learning for Similarity Based Retrieval of Mathematical Expressions

Multi-Graph Convolutional Network for Relationship-Driven Stock Movement Prediction

Jiexia Ye, Juanjuan Zhao, Kejiang Ye, Cheng-Zhong Xu

Auto-TLDR; Multi-GCGRU: A Deep Learning Framework for Stock Price Prediction with Cross Effect

Cross-Lingual Text Image Recognition Via Multi-Task Sequence to Sequence Learning

Zhuo Chen, Fei Yin, Xu-Yao Zhang, Qing Yang, Cheng-Lin Liu

Auto-TLDR; Cross-Lingual Text Image Recognition with Multi-task Learning

Dimensionality Reduction for Data Visualization and Linear Classification, and the Trade-Off between Robustness and Classification Accuracy

Martin Becker, Jens Lippel, Thomas Zielke

Auto-TLDR; Robustness Assessment of Deep Autoencoder for Data Visualization using Scatter Plots

Temporal Collaborative Filtering with Graph Convolutional Neural Networks

Esther Rodrigo-Bonet, Minh Duc Nguyen, Nikos Deligiannis

Auto-TLDR; Temporal Collaborative Filtering with Graph-Neural-Network-based Neural Networks

Global Context-Based Network with Transformer for Image2latex

Nuo Pang, Chun Yang, Xiaobin Zhu, Jixuan Li, Xu-Cheng Yin

Auto-TLDR; Image2latex with Global Context block and Transformer

CardioGAN: An Attention-Based Generative Adversarial Network for Generation of Electrocardiograms

Subhrajyoti Dasgupta, Sudip Das, Ujjwal Bhattacharya

Auto-TLDR; CardioGAN: Generative Adversarial Network for Synthetic Electrocardiogram Signals

RNN Training along Locally Optimal Trajectories via Frank-Wolfe Algorithm

Yun Yue, Ming Li, Venkatesh Saligrama, Ziming Zhang

Auto-TLDR; Frank-Wolfe Algorithm for Efficient Training of RNNs

Transfer Learning with Graph Neural Networks for Short-Term Highway Traffic Forecasting

Tanwi Mallick, Prasanna Balaprakash, Eric Rask, Jane Macfarlane

Auto-TLDR; Transfer Learning for Highway Traffic Forecasting on Unseen Traffic Networks

Edge-Aware Graph Attention Network for Ratio of Edge-User Estimation in Mobile Networks

Jiehui Deng, Sheng Wan, Xiang Wang, Enmei Tu, Xiaolin Huang, Jie Yang, Chen Gong

Auto-TLDR; EAGAT: Edge-Aware Graph Attention Network for Automatic REU Estimation in Mobile Networks

DAG-Net: Double Attentive Graph Neural Network for Trajectory Forecasting

Alessio Monti, Alessia Bertugli, Simone Calderara, Rita Cucchiara

Auto-TLDR; Recurrent Generative Model for Multi-modal Human Motion Behaviour in Urban Environments

Nonlinear Ranking Loss on Riemannian Potato Embedding

Byung Hyung Kim, Yoonje Suh, Honggu Lee, Sungho Jo

Auto-TLDR; Riemannian Potato for Rank-based Metric Learning

An Efficient Empirical Solver for Localized Multiple Kernel Learning Via DNNs

Auto-TLDR; Localized Multiple Kernel Learning using LMKL-Net

Attentive Visual Semantic Specialized Network for Video Captioning

Jesus Perez-Martin, Benjamin Bustos, Jorge Pérez

Auto-TLDR; Adaptive Visual Semantic Specialized Network for Video Captioning

Learning Sign-Constrained Support Vector Machines

Kenya Tajima, Kouhei Tsuchida, Esmeraldo Ronnie Rey Zara, Naoya Ohta, Tsuyoshi Kato

Auto-TLDR; Constrained Sign Constraints for Learning Linear Support Vector Machine

Attention-Based Deep Metric Learning for Near-Duplicate Video Retrieval

Kuan-Hsun Wang, Chia Chun Cheng, Yi-Ling Chen, Yale Song, Shang-Hong Lai

Auto-TLDR; Attention-based Deep Metric Learning for Near-duplicate Video Retrieval

Feature-Aware Unsupervised Learning with Joint Variational Attention and Automatic Clustering

Wang Ru, Lin Li, Peipei Wang, Liu Peiyu

Auto-TLDR; Deep Variational Attention Encoder-Decoder for Clustering

A General Model for Learning Node and Graph Representations Jointly

Auto-TLDR; Joint Community Detection/Dynamic Routing for Graph Classification

An Intransitivity Model for Matchup and Pairwise Comparison

Yan Gu, Jiuding Duan, Hisashi Kashima

Auto-TLDR; Blade-Chest: A Low-Rank Matrix Approach for Probabilistic Ranking of Players

Malware Detection by Exploiting Deep Learning over Binary Programs

Panpan Qi, Zhaoqi Zhang, Wei Wang, Chang Yao

Auto-TLDR; End-to-End Malware Detection without Feature Engineering

Picture-To-Amount (PITA): Predicting Relative Ingredient Amounts from Food Images

Jiatong Li, Fangda Han, Ricardo Guerrero, Vladimir Pavlovic

Auto-TLDR; PITA: A Deep Learning Architecture for Predicting the Relative Amount of Ingredients from Food Images

Context Matters: Self-Attention for Sign Language Recognition

Fares Ben Slimane, Mohamed Bouguessa

Auto-TLDR; Attentional Network for Continuous Sign Language Recognition

Leveraging Quadratic Spherical Mutual Information Hashing for Fast Image Retrieval

Nikolaos Passalis, Anastasios Tefas

Auto-TLDR; Quadratic Mutual Information for Large-Scale Hashing and Information Retrieval

Assortative-Constrained Stochastic Block Models

Daniel Gribel, Thibaut Vidal, Michel Gendreau

Auto-TLDR; Constrained Stochastic Block Models for Assortative Communities in Neural Networks

Space-Time Domain Tensor Neural Networks: An Application on Human Pose Classification

Konstantinos Makantasis, Athanasios Voulodimos, Anastasios Doulamis, Nikolaos Doulamis, Nikolaos Bakalos

Auto-TLDR; Tensor-Based Neural Network for Spatiotemporal Pose Classification using Three-Dimensional Skeleton Data

Transformer Networks for Trajectory Forecasting

Francesco Giuliari, Hasan Irtiza, Marco Cristani, Fabio Galasso

Auto-TLDR; Transformer Networks for Trajectory Prediction of People Interactions

Global Feature Aggregation for Accident Anticipation

Mishal Fatima, Umar Karim Khan, Chong Min Kyung

Auto-TLDR; Feature Aggregation for Predicting Accidents in Video Sequences

Variational Deep Embedding Clustering by Augmented Mutual Information Maximization

Qiang Ji, Yanfeng Sun, Yongli Hu, Baocai Yin

Auto-TLDR; Clustering by Augmented Mutual Information maximization for Deep Embedding

Dual Path Multi-Modal High-Order Features for Textual Content Based Visual Question Answering

Yanan Li, Yuetan Lin, Hongrui Zhao, Donghui Wang

Auto-TLDR; TextVQA: An End-to-End Visual Question Answering Model for Text-Based VQA

SDMA: Saliency Driven Mutual Cross Attention for Multi-Variate Time Series

Auto-TLDR; Saliency-Driven Mutual Cross Attention for Intelligent Time Series Analytics

More Correlations Better Performance: Fully Associative Networks for Multi-Label Image Classification

Auto-TLDR; Fully Associative Network for Fully Exploiting Correlation Information in Multi-Label Classification
