Attentive Visual Semantic Specialized Network for Video Captioning

Jesus Perez-Martin,

Benjamin Bustos,

Jorge Pérez

Auto-TLDR; Adaptive Visual Semantic Specialized Network for Video Captioning

Similar papers

Context Visual Information-Based Deliberation Network for Video Captioning

Min Lu, Xueyong Li, Caihua Liu

Auto-TLDR; Context visual information-based deliberation network for video captioning

Visual Oriented Encoder: Integrating Multimodal and Multi-Scale Contexts for Video Captioning

Auto-TLDR; Visual Oriented Encoder for Video Captioning

Enriching Video Captions with Contextual Text

Philipp Rimle, Pelin Dogan, Markus Gross

Auto-TLDR; Contextualized Video Captioning Using Contextual Text

Text Synopsis Generation for Egocentric Videos

Aidean Sharghi, Niels Lobo, Mubarak Shah

Auto-TLDR; Egocentric Video Summarization Using Multi-task Learning for End-to-End Learning

PIN: A Novel Parallel Interactive Network for Spoken Language Understanding

Peilin Zhou, Zhiqi Huang, Fenglin Liu, Yuexian Zou

Auto-TLDR; Parallel Interactive Network for Spoken Language Understanding

A Novel Actor Dual-Critic Model for Remote Sensing Image Captioning

Ruchika Chavhan, Biplab Banerjee, Xiao Xiang Zhu, Subhasis Chaudhuri

Auto-TLDR; Actor Dual-Critic Training for Remote Sensing Image Captioning Using Deep Reinforcement Learning

Multi-Scale 2D Representation Learning for Weakly-Supervised Moment Retrieval

Ding Li, Rui Wu, Zhizhong Zhang, Yongqiang Tang, Wensheng Zhang

Auto-TLDR; Multi-scale 2D Representation Learning for Weakly Supervised Video Moment Retrieval

MAGNet: Multi-Region Attention-Assisted Grounding of Natural Language Queries at Phrase Level

Amar Shrestha, Krittaphat Pugdeethosapol, Haowen Fang, Qinru Qiu

Auto-TLDR; MAGNet: A Multi-Region Attention-Aware Grounding Network for Free-form Textual Queries

Dual Path Multi-Modal High-Order Features for Textual Content Based Visual Question Answering

Yanan Li, Yuetan Lin, Hongrui Zhao, Donghui Wang

Auto-TLDR; TextVQA: An End-to-End Visual Question Answering Model for Text-Based VQA

Tackling Contradiction Detection in German Using Machine Translation and End-To-End Recurrent Neural Networks

Maren Pielka, Rafet Sifa, Lars Patrick Hillebrand, David Biesner, Rajkumar Ramamurthy, Anna Ladi, Christian Bauckhage

Auto-TLDR; Contradiction Detection in Natural Language Inference using Recurrent Neural Networks

Transformer Reasoning Network for Image-Text Matching and Retrieval

Nicola Messina, Fabrizio Falchi, Andrea Esuli, Giuseppe Amato

Auto-TLDR; A Transformer Encoder Reasoning Network for Image-Text Matching in Large-Scale Information Retrieval

Context Matters: Self-Attention for Sign Language Recognition

Fares Ben Slimane, Mohamed Bouguessa

Auto-TLDR; Attentional Network for Continuous Sign Language Recognition

Integrating Historical States and Co-Attention Mechanism for Visual Dialog

Tianling Jiang, Yi Ji, Chunping Liu

Auto-TLDR; Integrating Historical States and Co-attention for Visual Dialog

A Novel Attention-Based Aggregation Function to Combine Vision and Language

Matteo Stefanini, Marcella Cornia, Lorenzo Baraldi, Rita Cucchiara

Auto-TLDR; Fully-Attentive Reduction for Vision and Language

Cross-Lingual Text Image Recognition Via Multi-Task Sequence to Sequence Learning

Zhuo Chen, Fei Yin, Xu-Yao Zhang, Qing Yang, Cheng-Lin Liu

Auto-TLDR; Cross-Lingual Text Image Recognition with Multi-task Learning

GCNs-Based Context-Aware Short Text Similarity Model

Auto-TLDR; Context-Aware Graph Convolutional Network for Text Similarity

MA-LSTM: A Multi-Attention Based LSTM for Complex Pattern Extraction

Jingjie Guo, Kelang Tian, Kejiang Ye, Cheng-Zhong Xu

Auto-TLDR; MA-LSTM: Multiple Attention based recurrent neural network for forget gate

Explore and Explain: Self-Supervised Navigation and Recounting

Roberto Bigazzi, Federico Landi, Marcella Cornia, Silvia Cascianelli, Lorenzo Baraldi, Rita Cucchiara

Auto-TLDR; Exploring a Photorealistic Environment for Explanation and Navigation

Multi-Modal Contextual Graph Neural Network for Text Visual Question Answering

Yaoyuan Liang, Xin Wang, Xuguang Duan, Wenwu Zhu

Auto-TLDR; Multi-modal Contextual Graph Neural Network for Text Visual Question Answering

Extracting Action Hierarchies from Action Labels and their Use in Deep Action Recognition

Konstadinos Bacharidis, Antonis Argyros

Auto-TLDR; Exploiting the Information Content of Language Label Associations for Human Action Recognition

Cross-Supervised Joint-Event-Extraction with Heterogeneous Information Networks

Yue Wang, Zhuo Xu, Yao Wan, Lu Bai, Lixin Cui, Qian Zhao, Edwin Hancock, Philip Yu

Auto-TLDR; Joint-Event-extraction from Unstructured corpora using Structural Information Network

Trajectory-User Link with Attention Recurrent Networks

Tao Sun, Yongjun Xu, Fei Wang, Lin Wu, 塘文 钱, Zezhi Shao

Auto-TLDR; TULAR: Trajectory-User Link with Attention Recurrent Neural Networks

Flow-Guided Spatial Attention Tracking for Egocentric Activity Recognition

Auto-TLDR; Flow-Guided Spatial Attention Tracking for Egocentric Activity Recognition

Efficient Sentence Embedding Via Semantic Subspace Analysis

Bin Wang, Fenxiao Chen, Yun Cheng Wang, C.-C. Jay Kuo

Auto-TLDR; S3E: Semantic Subspace Sentence Embedding

PICK: Processing Key Information Extraction from Documents Using Improved Graph Learning-Convolutional Networks

Wenwen Yu, Ning Lu, Xianbiao Qi, Ping Gong, Rong Xiao

Auto-TLDR; PICK: A Graph Learning Framework for Key Information Extraction from Documents

Continuous Sign Language Recognition with Iterative Spatiotemporal Fine-Tuning

Kenessary Koishybay, Medet Mukushev, Anara Sandygulova

Auto-TLDR; A Deep Neural Network for Continuous Sign Language Recognition with Iterative Gloss Recognition

Zero-Shot Text Classification with Semantically Extended Graph Convolutional Network

Tengfei Liu, Yongli Hu, Junbin Gao, Yanfeng Sun, Baocai Yin

Auto-TLDR; Semantically Extended Graph Convolutional Network for Zero-shot Text Classification

Global Feature Aggregation for Accident Anticipation

Mishal Fatima, Umar Karim Khan, Chong Min Kyung

Auto-TLDR; Feature Aggregation for Predicting Accidents in Video Sequences

SAT-Net: Self-Attention and Temporal Fusion for Facial Action Unit Detection

Zhihua Li, Zheng Zhang, Lijun Yin

Auto-TLDR; Temporal Fusion and Self-Attention Network for Facial Action Unit Detection

A CNN-RNN Framework for Image Annotation from Visual Cues and Social Network Metadata

Tobia Tesan, Pasquale Coscia, Lamberto Ballan

Auto-TLDR; Context-Based Image Annotation with Multiple Semantic Embeddings and Recurrent Neural Networks

Reinforcement Learning with Dual Attention Guided Graph Convolution for Relation Extraction

Zhixin Li, Yaru Sun, Suqin Tang, Canlong Zhang, Huifang Ma

Auto-TLDR; Dual Attention Graph Convolutional Network for Relation Extraction

ConvMath : A Convolutional Sequence Network for Mathematical Expression Recognition

Zuoyu Yan, Xiaode Zhang, Liangcai Gao, Ke Yuan, Zhi Tang

Auto-TLDR; Convolutional Sequence Modeling for Mathematical Expressions Recognition

Global Context-Based Network with Transformer for Image2latex

Nuo Pang, Chun Yang, Xiaobin Zhu, Jixuan Li, Xu-Cheng Yin

Auto-TLDR; Image2latex with Global Context block and Transformer

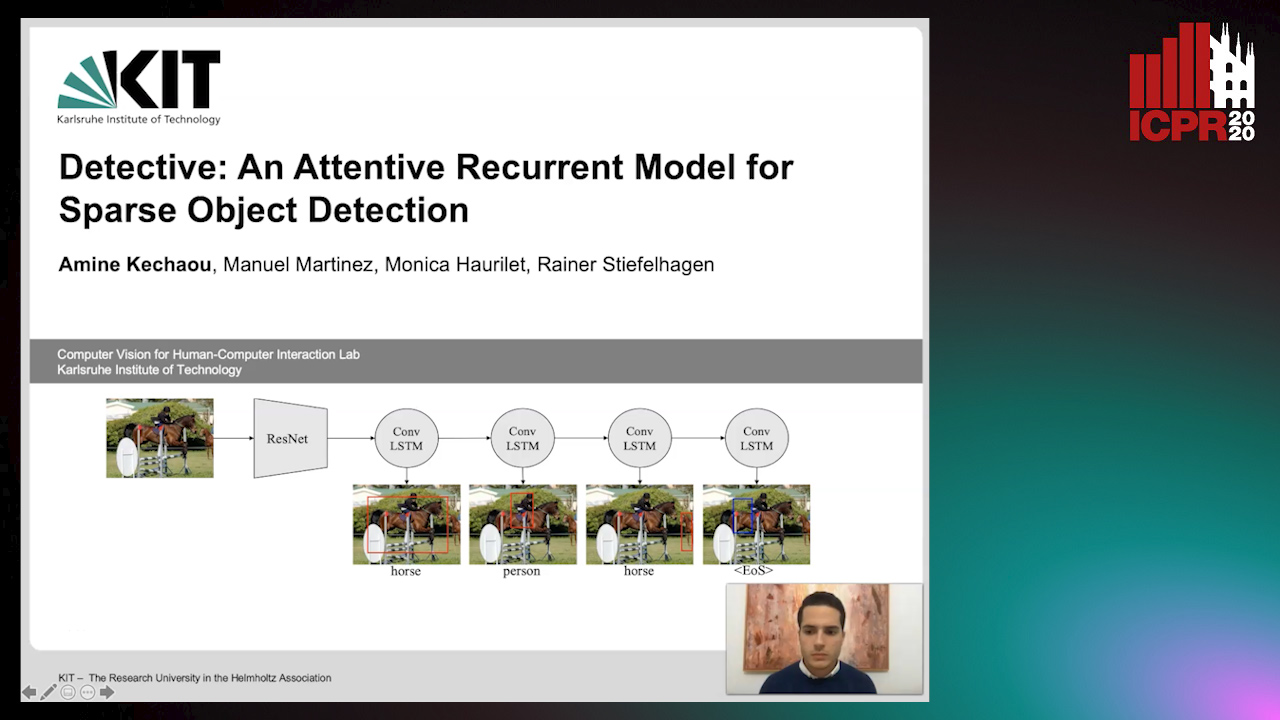

Detective: An Attentive Recurrent Model for Sparse Object Detection

Amine Kechaou, Manuel Martinez, Monica Haurilet, Rainer Stiefelhagen

Auto-TLDR; Detective: An attentive object detector that identifies objects in images in a sequential manner

Developing Motion Code Embedding for Action Recognition in Videos

Maxat Alibayev, David Andrea Paulius, Yu Sun

Auto-TLDR; Motion Embedding via Motion Codes for Action Recognition

Revisiting Sequence-To-Sequence Video Object Segmentation with Multi-Task Loss and Skip-Memory

Fatemeh Azimi, Benjamin Bischke, Sebastian Palacio, Federico Raue, Jörn Hees, Andreas Dengel

Auto-TLDR; Sequence-to-Sequence Learning for Video Object Segmentation

KoreALBERT: Pretraining a Lite BERT Model for Korean Language Understanding

Hyunjae Lee, Jaewoong Yun, Bongkyu Hwang, Seongho Joe, Seungjai Min, Youngjune Gwon

Auto-TLDR; KoreALBERT: A monolingual ALBERT model for Korean language understanding

Evaluation of BERT and ALBERT Sentence Embedding Performance on Downstream NLP Tasks

Hyunjin Choi, Judong Kim, Seongho Joe, Youngjune Gwon

Auto-TLDR; Sentence Embedding Models for BERT and ALBERT: A Comparison and Evaluation

PrivAttNet: Predicting Privacy Risks in Images Using Visual Attention

Chen Zhang, Thivya Kandappu, Vigneshwaran Subbaraju

Auto-TLDR; PrivAttNet: A Visual Attention Based Approach for Privacy Sensitivity in Images

VSR++: Improving Visual Semantic Reasoning for Fine-Grained Image-Text Matching

Hui Yuan, Yan Huang, Dongbo Zhang, Zerui Chen, Wenlong Cheng, Liang Wang

Auto-TLDR; Improving Visual Semantic Reasoning for Fine-Grained Image-Text Matching

Multi-Stage Attention Based Visual Question Answering

Aakansha Mishra, Ashish Anand, Prithwijit Guha

Auto-TLDR; Alternative Bi-directional Attention for Visual Question Answering

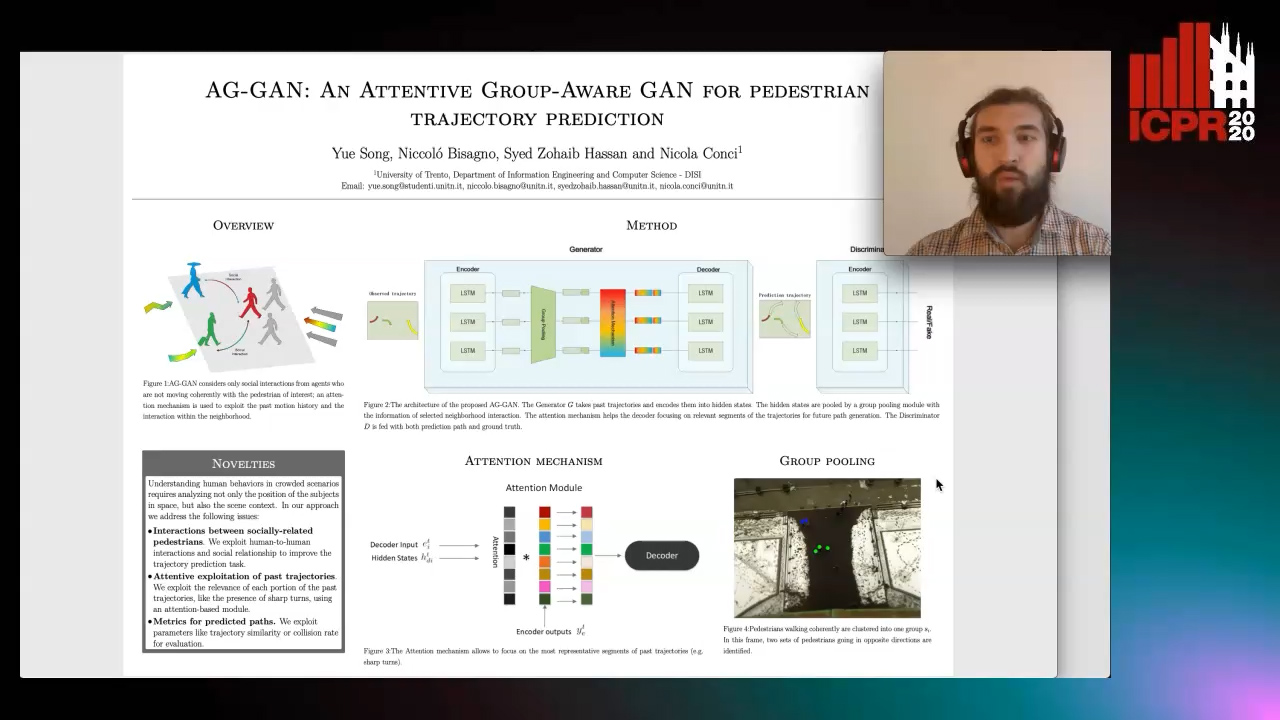

AG-GAN: An Attentive Group-Aware GAN for Pedestrian Trajectory Prediction

Yue Song, Niccolò Bisagno, Syed Zohaib Hassan, Nicola Conci

Auto-TLDR; An attentive group-aware GAN for motion prediction in crowded scenarios

A Grid-Based Representation for Human Action Recognition

Soufiane Lamghari, Guillaume-Alexandre Bilodeau, Nicolas Saunier

Auto-TLDR; GRAR: Grid-based Representation for Action Recognition in Videos

ActionSpotter: Deep Reinforcement Learning Framework for Temporal Action Spotting in Videos

Guillaume Vaudaux-Ruth, Adrien Chan-Hon-Tong, Catherine Achard

Auto-TLDR; ActionSpotter: A Reinforcement Learning Algorithm for Action Spotting in Video

Moto: Enhancing Embedding with Multiple Joint Factors for Chinese Text Classification

Xunzhu Tang, Rujie Zhu, Tiezhu Sun

Auto-TLDR; Moto: Enhancing Embedding with Multiple Joint Factors

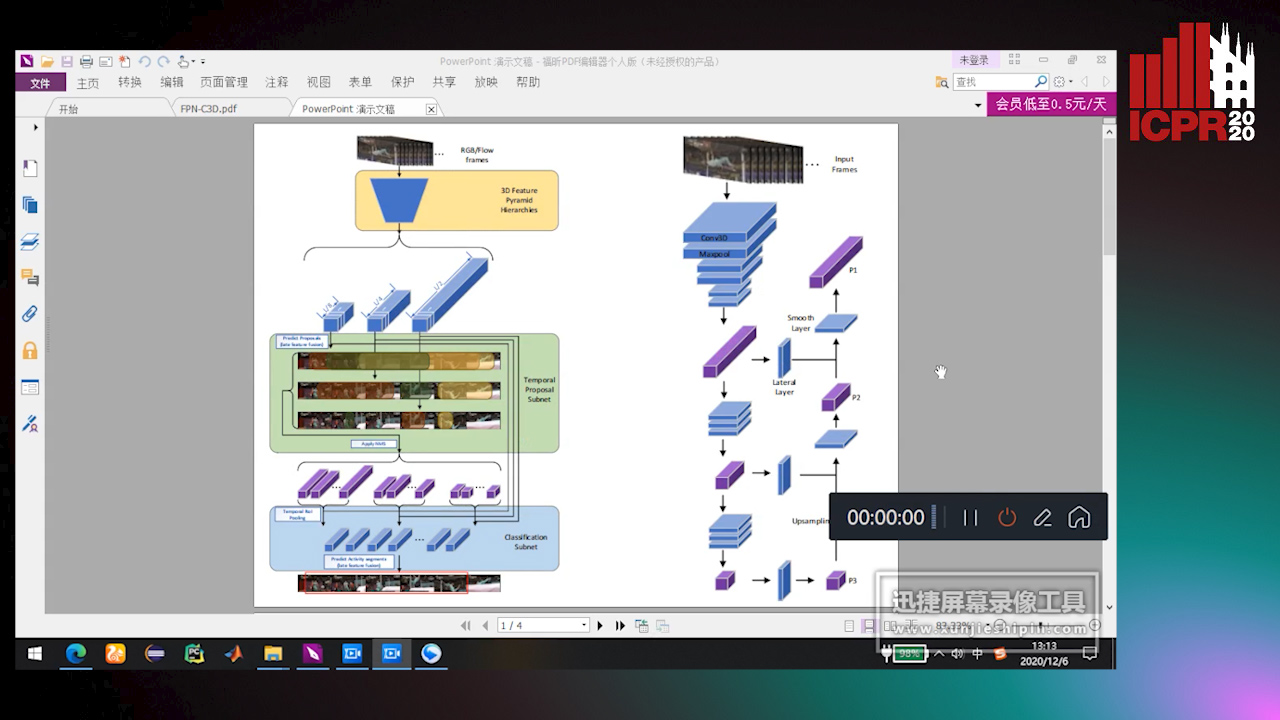

Feature Pyramid Hierarchies for Multi-Scale Temporal Action Detection

Auto-TLDR; Temporal Action Detection using Pyramid Hierarchies and Multi-scale Feature Maps

Learning Neural Textual Representations for Citation Recommendation

Thanh Binh Kieu, Inigo Jauregi Unanue, Son Bao Pham, Xuan-Hieu Phan, M. Piccardi

Auto-TLDR; Sentence-BERT cascaded with Siamese and triplet networks for citation recommendation

Automatic Student Network Search for Knowledge Distillation

Zhexi Zhang, Wei Zhu, Junchi Yan, Peng Gao, Guotong Xie

Auto-TLDR; NAS-KD: Knowledge Distillation for BERT
