VSR++: Improving Visual Semantic Reasoning for Fine-Grained Image-Text Matching

Hui Yuan,

Yan Huang,

Dongbo Zhang,

Zerui Chen,

Wenlong Cheng,

Liang Wang

Auto-TLDR; Improving Visual Semantic Reasoning for Fine-Grained Image-Text Matching

Similar papers

A Novel Attention-Based Aggregation Function to Combine Vision and Language

Matteo Stefanini, Marcella Cornia, Lorenzo Baraldi, Rita Cucchiara

Auto-TLDR; Fully-Attentive Reduction for Vision and Language

Transformer Reasoning Network for Image-Text Matching and Retrieval

Nicola Messina, Fabrizio Falchi, Andrea Esuli, Giuseppe Amato

Auto-TLDR; A Transformer Encoder Reasoning Network for Image-Text Matching in Large-Scale Information Retrieval

Beyond the Deep Metric Learning: Enhance the Cross-Modal Matching with Adversarial Discriminative Domain Regularization

Li Ren, Kai Li, Liqiang Wang, Kien Hua

Auto-TLDR; Adversarial Discriminative Domain Regularization for Efficient Cross-Modal Matching

Dual Path Multi-Modal High-Order Features for Textual Content Based Visual Question Answering

Yanan Li, Yuetan Lin, Hongrui Zhao, Donghui Wang

Auto-TLDR; TextVQA: An End-to-End Visual Question Answering Model for Text-Based VQA

MAGNet: Multi-Region Attention-Assisted Grounding of Natural Language Queries at Phrase Level

Amar Shrestha, Krittaphat Pugdeethosapol, Haowen Fang, Qinru Qiu

Auto-TLDR; MAGNet: A Multi-Region Attention-Aware Grounding Network for Free-form Textual Queries

Integrating Historical States and Co-Attention Mechanism for Visual Dialog

Tianling Jiang, Yi Ji, Chunping Liu

Auto-TLDR; Integrating Historical States and Co-attention for Visual Dialog

Multi-Scale 2D Representation Learning for Weakly-Supervised Moment Retrieval

Ding Li, Rui Wu, Zhizhong Zhang, Yongqiang Tang, Wensheng Zhang

Auto-TLDR; Multi-scale 2D Representation Learning for Weakly Supervised Video Moment Retrieval

Cross-Media Hash Retrieval Using Multi-head Attention Network

Zhixin Li, Feng Ling, Chuansheng Xu, Canlong Zhang, Huifang Ma

Auto-TLDR; Unsupervised Cross-Media Hash Retrieval Using Multi-Head Attention Network

Multi-Modal Contextual Graph Neural Network for Text Visual Question Answering

Yaoyuan Liang, Xin Wang, Xuguang Duan, Wenwu Zhu

Auto-TLDR; Multi-modal Contextual Graph Neural Network for Text Visual Question Answering

More Correlations Better Performance: Fully Associative Networks for Multi-Label Image Classification

Auto-TLDR; Fully Associative Network for Fully Exploiting Correlation Information in Multi-Label Classification

Multi-Stage Attention Based Visual Question Answering

Aakansha Mishra, Ashish Anand, Prithwijit Guha

Auto-TLDR; Alternative Bi-directional Attention for Visual Question Answering

Webly Supervised Image-Text Embedding with Noisy Tag Refinement

Niluthpol Mithun, Ravdeep Pasricha, Evangelos Papalexakis, Amit Roy-Chowdhury

Auto-TLDR; Robust Joint Embedding for Image-Text Retrieval Using Web Images

Answer-Checking in Context: A Multi-Modal Fully Attention Network for Visual Question Answering

Hantao Huang, Tao Han, Wei Han, Deep Yap, Cheng-Ming Chiang

Auto-TLDR; Fully Attention Based Visual Question Answering

Using Scene Graphs for Detecting Visual Relationships

Anurag Tripathi, Siddharth Srivastava, Brejesh Lall, Santanu Chaudhury

Auto-TLDR; Relationship Detection using Context Aligned Scene Graph Embeddings

Object Detection Using Dual Graph Network

Shengjia Chen, Zhixin Li, Feicheng Huang, Canlong Zhang, Huifang Ma

Auto-TLDR; A Graph Convolutional Network for Object Detection with Key Relation Information

Cross-Lingual Text Image Recognition Via Multi-Task Sequence to Sequence Learning

Zhuo Chen, Fei Yin, Xu-Yao Zhang, Qing Yang, Cheng-Lin Liu

Auto-TLDR; Cross-Lingual Text Image Recognition with Multi-task Learning

Question-Agnostic Attention for Visual Question Answering

Moshiur R Farazi, Salman Hameed Khan, Nick Barnes

Auto-TLDR; Question-Agnostic Attention for Visual Question Answering

Label Incorporated Graph Neural Networks for Text Classification

Yuan Xin, Linli Xu, Junliang Guo, Jiquan Li, Xin Sheng, Yuanyuan Zhou

Auto-TLDR; Graph Neural Networks for Semi-supervised Text Classification

Attentive Visual Semantic Specialized Network for Video Captioning

Jesus Perez-Martin, Benjamin Bustos, Jorge Pérez

Auto-TLDR; Adaptive Visual Semantic Specialized Network for Video Captioning

Context Visual Information-Based Deliberation Network for Video Captioning

Min Lu, Xueyong Li, Caihua Liu

Auto-TLDR; Context visual information-based deliberation network for video captioning

Aggregating Object Features Based on Attention Weights for Fine-Grained Image Retrieval

Hongli Lin, Yongqi Song, Zixuan Zeng, Weisheng Wang

Auto-TLDR; DSAW: Unsupervised Dual-selection for Fine-Grained Image Retrieval

Context for Object Detection Via Lightweight Global and Mid-Level Representations

Mesut Erhan Unal, Adriana Kovashka

Auto-TLDR; Context-Based Object Detection with Semantic Similarity

Zero-Shot Text Classification with Semantically Extended Graph Convolutional Network

Tengfei Liu, Yongli Hu, Junbin Gao, Yanfeng Sun, Baocai Yin

Auto-TLDR; Semantically Extended Graph Convolutional Network for Zero-shot Text Classification

Multi-Scale Relational Reasoning with Regional Attention for Visual Question Answering

Auto-TLDR; Question-Guided Relational Reasoning for Visual Question Answering

Reinforcement Learning with Dual Attention Guided Graph Convolution for Relation Extraction

Zhixin Li, Yaru Sun, Suqin Tang, Canlong Zhang, Huifang Ma

Auto-TLDR; Dual Attention Graph Convolutional Network for Relation Extraction

Picture-To-Amount (PITA): Predicting Relative Ingredient Amounts from Food Images

Jiatong Li, Fangda Han, Ricardo Guerrero, Vladimir Pavlovic

Auto-TLDR; PITA: A Deep Learning Architecture for Predicting the Relative Amount of Ingredients from Food Images

PIN: A Novel Parallel Interactive Network for Spoken Language Understanding

Peilin Zhou, Zhiqi Huang, Fenglin Liu, Yuexian Zou

Auto-TLDR; Parallel Interactive Network for Spoken Language Understanding

Adaptive Word Embedding Module for Semantic Reasoning in Large-Scale Detection

Yu Zhang, Xiaoyu Wu, Ruolin Zhu

Auto-TLDR; Adaptive Word Embedding Module for Object Detection

Unsupervised Co-Segmentation for Athlete Movements and Live Commentaries Using Crossmodal Temporal Proximity

Yasunori Ohishi, Yuki Tanaka, Kunio Kashino

Auto-TLDR; A guided attention scheme for audio-visual co-segmentation

GCNs-Based Context-Aware Short Text Similarity Model

Auto-TLDR; Context-Aware Graph Convolutional Network for Text Similarity

MEG: Multi-Evidence GNN for Multimodal Semantic Forensics

Ekraam Sabir, Ayush Jaiswal, Wael Abdalmageed, Prem Natarajan

Auto-TLDR; Scalable Image Repurposing Detection with Graph Neural Network Based Model

Visual Oriented Encoder: Integrating Multimodal and Multi-Scale Contexts for Video Captioning

Auto-TLDR; Visual Oriented Encoder for Video Captioning

Gaussian Constrained Attention Network for Scene Text Recognition

Zhi Qiao, Xugong Qin, Yu Zhou, Fei Yang, Weiping Wang

Auto-TLDR; Gaussian Constrained Attention Network for Scene Text Recognition

PICK: Processing Key Information Extraction from Documents Using Improved Graph Learning-Convolutional Networks

Wenwen Yu, Ning Lu, Xianbiao Qi, Ping Gong, Rong Xiao

Auto-TLDR; PICK: A Graph Learning Framework for Key Information Extraction from Documents

Equation Attention Relationship Network (EARN) : A Geometric Deep Metric Framework for Learning Similar Math Expression Embedding

Saleem Ahmed, Kenny Davila, Srirangaraj Setlur, Venu Govindaraju

Auto-TLDR; Representational Learning for Similarity Based Retrieval of Mathematical Expressions

Attentive Part-Aware Networks for Partial Person Re-Identification

Lijuan Huo, Chunfeng Song, Zhengyi Liu, Zhaoxiang Zhang

Auto-TLDR; Part-Aware Learning for Partial Person Re-identification

JECL: Joint Embedding and Cluster Learning for Image-Text Pairs

Sean Yang, Kuan-Hao Huang, Bill Howe

Auto-TLDR; JECL: Clustering Image-Caption Pairs with Parallel Encoders and Regularized Clusters

Price Suggestion for Online Second-Hand Items

Liang Han, Zhaozheng Yin, Zhurong Xia, Li Guo, Mingqian Tang, Rong Jin

Auto-TLDR; An Intelligent Price Suggestion System for Online Second-hand Items

Global Context-Based Network with Transformer for Image2latex

Nuo Pang, Chun Yang, Xiaobin Zhu, Jixuan Li, Xu-Cheng Yin

Auto-TLDR; Image2latex with Global Context block and Transformer

A Multi-Head Self-Relation Network for Scene Text Recognition

Zhou Junwei, Hongchao Gao, Jiao Dai, Dongqin Liu, Jizhong Han

Auto-TLDR; Multi-head Self-relation Network for Scene Text Recognition

Abstract Slides Poster Similar

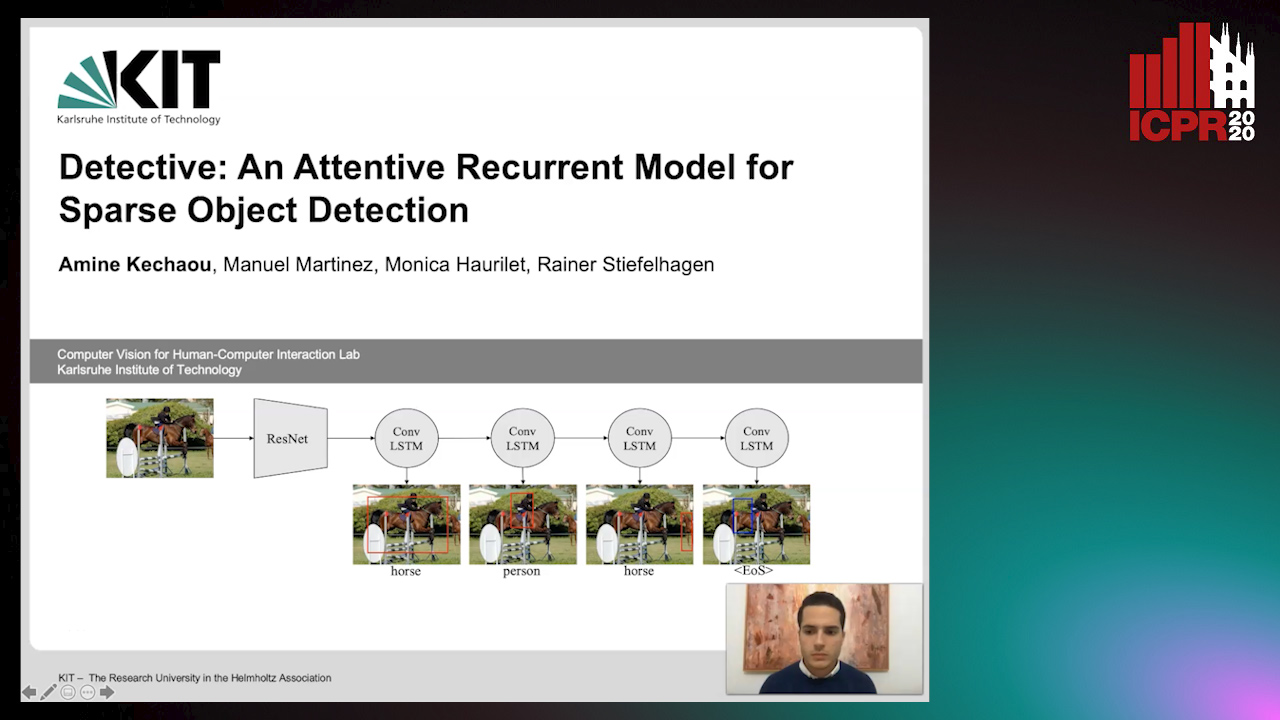

Detective: An Attentive Recurrent Model for Sparse Object Detection

Amine Kechaou, Manuel Martinez, Monica Haurilet, Rainer Stiefelhagen

Auto-TLDR; Detective: An attentive object detector that identifies objects in images in a sequential manner

You Ought to Look Around: Precise, Large Span Action Detection

Ge Pan, Zhang Han, Fan Yu, Yonghong Song, Yuanlin Zhang, Han Yuan

Auto-TLDR; YOLA: Local Feature Extraction for Action Localization with Variable receptive field

MEAN: A Multi-Element Attention Based Network for Scene Text Recognition

Ruijie Yan, Liangrui Peng, Shanyu Xiao, Gang Yao, Jaesik Min

Auto-TLDR; Multi-element Attention Network for Scene Text Recognition

Enriching Video Captions with Contextual Text

Philipp Rimle, Pelin Dogan, Markus Gross

Auto-TLDR; Contextualized Video Captioning Using Contextual Text

VSB^2-Net: Visual-Semantic Bi-Branch Network for Zero-Shot Hashing

Xin Li, Xiangfeng Wang, Bo Jin, Wenjie Zhang, Jun Wang, Hongyuan Zha

Auto-TLDR; VSB^2-Net: inductive zero-shot hashing for image retrieval

Text Recognition in Real Scenarios with a Few Labeled Samples

Jinghuang Lin, Cheng Zhanzhan, Fan Bai, Yi Niu, Shiliang Pu, Shuigeng Zhou

Auto-TLDR; Few-shot Adversarial Sequence Domain Adaptation for Scene Text Recognition

Augmented Bi-Path Network for Few-Shot Learning

Baoming Yan, Chen Zhou, Bo Zhao, Kan Guo, Yang Jiang, Xiaobo Li, Zhang Ming, Yizhou Wang

Auto-TLDR; Augmented Bi-path Network for Few-shot Learning

G-FAN: Graph-Based Feature Aggregation Network for Video Face Recognition

He Zhao, Yongjie Shi, Xin Tong, Jingsi Wen, Xianghua Ying, Jinshi Hongbin Zha

Auto-TLDR; Graph-based Feature Aggregation Network for Video Face Recognition
