Beyond the Deep Metric Learning: Enhance the Cross-Modal Matching with Adversarial Discriminative Domain Regularization

Li Ren, Kai Li, Liqiang Wang, Kien Hua

Auto-TLDR; Adversarial Discriminative Domain Regularization for Efficient Cross-Modal Matching

Similar papers

VSR++: Improving Visual Semantic Reasoning for Fine-Grained Image-Text Matching

Hui Yuan, Yan Huang, Dongbo Zhang, Zerui Chen, Wenlong Cheng, Liang Wang

Auto-TLDR; Improving Visual Semantic Reasoning for Fine-Grained Image-Text Matching

Transformer Reasoning Network for Image-Text Matching and Retrieval

Nicola Messina, Fabrizio Falchi, Andrea Esuli, Giuseppe Amato

Auto-TLDR; A Transformer Encoder Reasoning Network for Image-Text Matching in Large-Scale Information Retrieval

A Novel Attention-Based Aggregation Function to Combine Vision and Language

Matteo Stefanini, Marcella Cornia, Lorenzo Baraldi, Rita Cucchiara

Auto-TLDR; Fully-Attentive Reduction for Vision and Language

Webly Supervised Image-Text Embedding with Noisy Tag Refinement

Niluthpol Mithun, Ravdeep Pasricha, Evangelos Papalexakis, Amit Roy-Chowdhury

Auto-TLDR; Robust Joint Embedding for Image-Text Retrieval Using Web Images

Cross-Media Hash Retrieval Using Multi-head Attention Network

Zhixin Li, Feng Ling, Chuansheng Xu, Canlong Zhang, Huifang Ma

Auto-TLDR; Unsupervised Cross-Media Hash Retrieval Using Multi-Head Attention Network

MAGNet: Multi-Region Attention-Assisted Grounding of Natural Language Queries at Phrase Level

Amar Shrestha, Krittaphat Pugdeethosapol, Haowen Fang, Qinru Qiu

Auto-TLDR; MAGNet: A Multi-Region Attention-Aware Grounding Network for Free-form Textual Queries

Integrating Historical States and Co-Attention Mechanism for Visual Dialog

Tianling Jiang, Yi Ji, Chunping Liu

Auto-TLDR; Integrating Historical States and Co-attention for Visual Dialog

Dual Path Multi-Modal High-Order Features for Textual Content Based Visual Question Answering

Yanan Li, Yuetan Lin, Hongrui Zhao, Donghui Wang

Auto-TLDR; TextVQA: An End-to-End Visual Question Answering Model for Text-Based VQA

RGB-Infrared Person Re-Identification Via Image Modality Conversion

Huangpeng Dai, Qing Xie, Yanchun Ma, Yongjian Liu, Shengwu Xiong

Auto-TLDR; CE2L: A Novel Network for Cross-Modality Re-identification with Feature Alignment

VSB^2-Net: Visual-Semantic Bi-Branch Network for Zero-Shot Hashing

Xin Li, Xiangfeng Wang, Bo Jin, Wenjie Zhang, Jun Wang, Hongyuan Zha

Auto-TLDR; VSB^2-Net: inductive zero-shot hashing for image retrieval

Equation Attention Relationship Network (EARN): A Geometric Deep Metric Framework for Learning Similar Math Expression Embedding

Saleem Ahmed, Kenny Davila, Srirangaraj Setlur, Venu Govindaraju

Auto-TLDR; Representational Learning for Similarity Based Retrieval of Mathematical Expressions

Abstract Slides Poster Similar

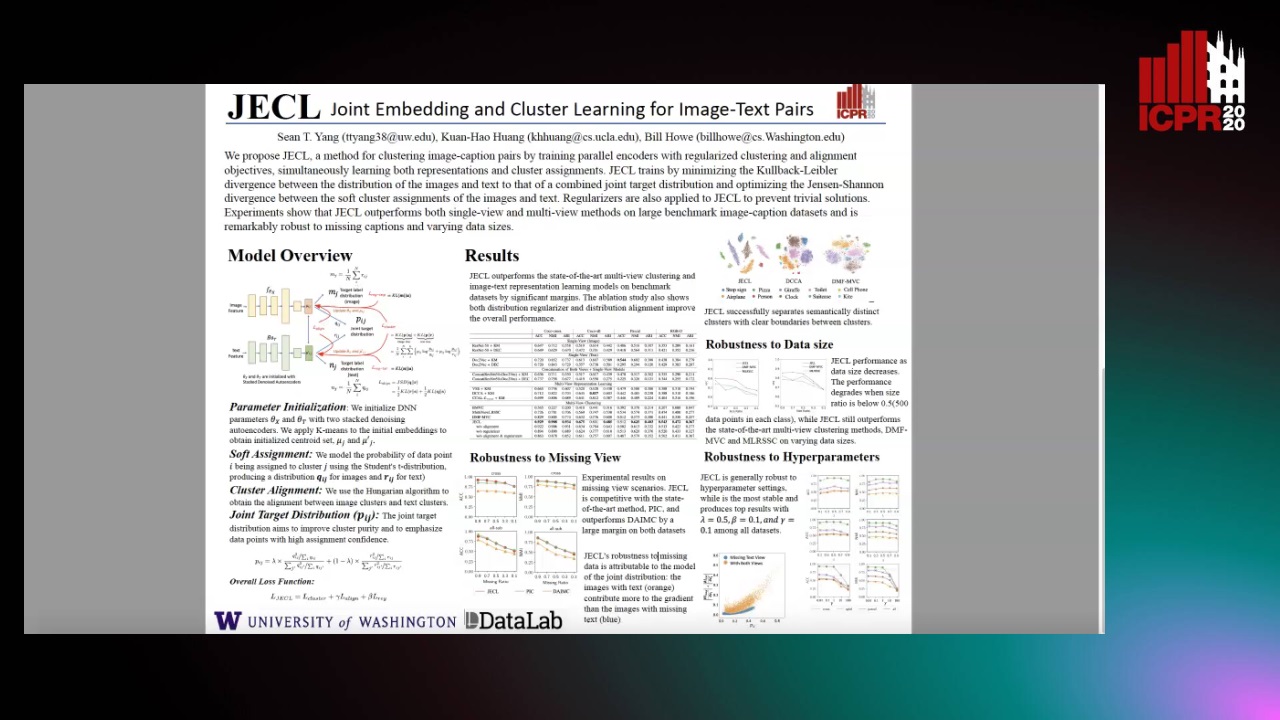

JECL: Joint Embedding and Cluster Learning for Image-Text Pairs

Sean Yang, Kuan-Hao Huang, Bill Howe

Auto-TLDR; JECL: Clustering Image-Caption Pairs with Parallel Encoders and Regularized Clusters

Multi-Stage Attention Based Visual Question Answering

Aakansha Mishra, Ashish Anand, Prithwijit Guha

Auto-TLDR; Alternative Bi-directional Attention for Visual Question Answering

CANU-ReID: A Conditional Adversarial Network for Unsupervised Person Re-IDentification

Guillaume Delorme, Yihong Xu, Stéphane Lathuiliere, Radu Horaud, Xavier Alameda-Pineda

Auto-TLDR; Unsupervised Person Re-Identification with Clustering and Adversarial Learning

Learning Low-Shot Generative Networks for Cross-Domain Data

Hsuan-Kai Kao, Cheng-Che Lee, Wei-Chen Chiu

Auto-TLDR; Learning Generators for Cross-Domain Data under Low-Shot Learning

Shape Consistent 2D Keypoint Estimation under Domain Shift

Levi Vasconcelos, Massimiliano Mancini, Davide Boscaini, Barbara Caputo, Elisa Ricci

Auto-TLDR; Deep Adaptation for Keypoint Prediction under Domain Shift

Picture-To-Amount (PITA): Predicting Relative Ingredient Amounts from Food Images

Jiatong Li, Fangda Han, Ricardo Guerrero, Vladimir Pavlovic

Auto-TLDR; PITA: A Deep Learning Architecture for Predicting the Relative Amount of Ingredients from Food Images

Class Conditional Alignment for Partial Domain Adaptation

Mohsen Kheirandishfard, Fariba Zohrizadeh, Farhad Kamangar

Auto-TLDR; Multi-class Adversarial Adaptation for Partial Domain Adaptation

Cascade Attention Guided Residue Learning GAN for Cross-Modal Translation

Bin Duan, Wei Wang, Hao Tang, Hugo Latapie, Yan Yan

Auto-TLDR; Cascade Attention-Guided Residue GAN for Cross-modal Audio-Visual Learning

Unsupervised Multi-Task Domain Adaptation

Auto-TLDR; Unsupervised Domain Adaptation with Multi-task Learning for Image Recognition

Unsupervised Domain Adaptation with Multiple Domain Discriminators and Adaptive Self-Training

Teo Spadotto, Marco Toldo, Umberto Michieli, Pietro Zanuttigh

Auto-TLDR; Unsupervised Domain Adaptation for Semantic Segmentation of Urban Scenes

Randomized Transferable Machine

Auto-TLDR; Randomized Transferable Machine for Suboptimal Feature-based Transfer Learning

Adaptive L2 Regularization in Person Re-Identification

Xingyang Ni, Liang Fang, Heikki Juhani Huttunen

Auto-TLDR; AdaptiveReID: Adaptive L2 Regularization for Person Re-identification

Foreground-Focused Domain Adaption for Object Detection

Auto-TLDR; Unsupervised Domain Adaptation for Unsupervised Object Detection

Visual Oriented Encoder: Integrating Multimodal and Multi-Scale Contexts for Video Captioning

Auto-TLDR; Visual Oriented Encoder for Video Captioning

Progressive Learning Algorithm for Efficient Person Re-Identification

Zhen Li, Hanyang Shao, Liang Niu, Nian Xue

Auto-TLDR; Progressive Learning Algorithm for Large-Scale Person Re-Identification

Multi-Scale 2D Representation Learning for Weakly-Supervised Moment Retrieval

Ding Li, Rui Wu, Zhizhong Zhang, Yongqiang Tang, Wensheng Zhang

Auto-TLDR; Multi-scale 2D Representation Learning for Weakly Supervised Video Moment Retrieval

Learning Neural Textual Representations for Citation Recommendation

Thanh Binh Kieu, Inigo Jauregi Unanue, Son Bao Pham, Xuan-Hieu Phan, M. Piccardi

Auto-TLDR; Sentence-BERT cascaded with Siamese and triplet networks for citation recommendation

Deep Top-Rank Counter Metric for Person Re-Identification

Chen Chen, Hao Dou, Xiyuan Hu, Silong Peng

Auto-TLDR; Deep Top-Rank Counter Metric for Person Re-identification

Text Recognition in Real Scenarios with a Few Labeled Samples

Jinghuang Lin, Cheng Zhanzhan, Fan Bai, Yi Niu, Shiliang Pu, Shuigeng Zhou

Auto-TLDR; Few-shot Adversarial Sequence Domain Adaptation for Scene Text Recognition

Enlarging Discriminative Power by Adding an Extra Class in Unsupervised Domain Adaptation

Hai Tran, Sumyeong Ahn, Taeyoung Lee, Yung Yi

Auto-TLDR; Unsupervised Domain Adaptation using Artificial Classes

Text Synopsis Generation for Egocentric Videos

Aidean Sharghi, Niels Lobo, Mubarak Shah

Auto-TLDR; Egocentric Video Summarization Using Multi-task Learning for End-to-End Learning

Semantics to Space (S2S): Embedding Semantics into Spatial Space for Zero-Shot Verb-Object Query Inferencing

Auto-TLDR; Semantics-to-Space: Deep Zero-Shot Learning for Verb-Object Interaction with Vectors

P ≈ NP, at Least in Visual Question Answering

Shailza Jolly, Sebastian Palacio, Joachim Folz, Federico Raue, Jörn Hees, Andreas Dengel

Auto-TLDR; Polar vs Non-Polar VQA: A Cross-over Analysis of Feature Spaces for Joint Training

Attention-Based Deep Metric Learning for Near-Duplicate Video Retrieval

Kuan-Hsun Wang, Chia Chun Cheng, Yi-Ling Chen, Yale Song, Shang-Hong Lai

Auto-TLDR; Attention-based Deep Metric Learning for Near-duplicate Video Retrieval

Unsupervised Co-Segmentation for Athlete Movements and Live Commentaries Using Crossmodal Temporal Proximity

Yasunori Ohishi, Yuki Tanaka, Kunio Kashino

Auto-TLDR; A guided attention scheme for audio-visual co-segmentation

Nonlinear Ranking Loss on Riemannian Potato Embedding

Byung Hyung Kim, Yoonje Suh, Honggu Lee, Sungho Jo

Auto-TLDR; Riemannian Potato for Rank-based Metric Learning

Few-Shot Font Generation with Deep Metric Learning

Haruka Aoki, Koki Tsubota, Hikaru Ikuta, Kiyoharu Aizawa

Auto-TLDR; Deep Metric Learning for Japanese Typographic Font Synthesis

Multi-Level Deep Learning Vehicle Re-Identification Using Ranked-Based Loss Functions

Eleni Kamenou, Jesus Martinez-Del-Rincon, Paul Miller, Patricia Devlin-Hill

Auto-TLDR; Multi-Level Re-identification Network for Vehicle Re-Identification

Domain Generalized Person Re-Identification Via Cross-Domain Episodic Learning

Ci-Siang Lin, Yuan Chia Cheng, Yu-Chiang Frank Wang

Auto-TLDR; Domain-Invariant Person Re-identification with Episodic Learning

Multi-Modal Contextual Graph Neural Network for Text Visual Question Answering

Yaoyuan Liang, Xin Wang, Xuguang Duan, Wenwu Zhu

Auto-TLDR; Multi-modal Contextual Graph Neural Network for Text Visual Question Answering

Open Set Domain Recognition Via Attention-Based GCN and Semantic Matching Optimization

Xinxing He, Yuan Yuan, Zhiyu Jiang

Auto-TLDR; Attention-based GCN and Semantic Matching Optimization for Open Set Domain Recognition

Price Suggestion for Online Second-Hand Items

Liang Han, Zhaozheng Yin, Zhurong Xia, Li Guo, Mingqian Tang, Rong Jin

Auto-TLDR; An Intelligent Price Suggestion System for Online Second-hand Items

MEG: Multi-Evidence GNN for Multimodal Semantic Forensics

Ekraam Sabir, Ayush Jaiswal, Wael Abdalmageed, Prem Natarajan

Auto-TLDR; Scalable Image Repurposing Detection with Graph Neural Network Based Model

Supervised Domain Adaptation Using Graph Embedding

Lukas Hedegaard, Omar Ali Sheikh-Omar, Alexandros Iosifidis

Auto-TLDR; Domain Adaptation from the Perspective of Multi-view Graph Embedding and Dimensionality Reduction

Adversarially Constrained Interpolation for Unsupervised Domain Adaptation

Mohamed Azzam, Aurele Tohokantche Gnanha, Hau-San Wong, Si Wu

Auto-TLDR; Unsupervised Domain Adaptation with Domain Mixup Strategy

Attentive Visual Semantic Specialized Network for Video Captioning

Jesus Perez-Martin, Benjamin Bustos, Jorge Pérez

Auto-TLDR; Adaptive Visual Semantic Specialized Network for Video Captioning

Sequential Domain Adaptation through Elastic Weight Consolidation for Sentiment Analysis

Avinash Madasu, Anvesh Rao Vijjini

Auto-TLDR; Sequential Domain Adaptation using Elastic Weight Consolidation for Sentiment Analysis
