Multi-Graph Convolutional Network for Relationship-Driven Stock Movement Prediction

Jiexia Ye,

Juanjuan Zhao,

Kejiang Ye,

Cheng-Zhong Xu

Auto-TLDR; Multi-GCGRU: A Deep Learning Framework for Stock Price Prediction with Cross Effect

Similar papers

Constructing Geographic and Long-term Temporal Graph for Traffic Forecasting

Yiwen Sun, Yulu Wang, Kun Fu, Zheng Wang, Changshui Zhang, Jieping Ye

Auto-TLDR; GLT-GCRNN: Geographic and Long-term Temporal Graph Convolutional Recurrent Neural Network for Traffic Forecasting

Abstract Slides Poster Similar

Geographic-Semantic-Temporal Hypergraph Convolutional Network for Traffic Flow Prediction

Kesu Wang, Jing Chen, Shijie Liao, Jiaxin Hou, Qingyu Xiong

Auto-TLDR; Geographic-semantic-temporal convolutional network for traffic flow prediction

Transfer Learning with Graph Neural Networks for Short-Term Highway Traffic Forecasting

Tanwi Mallick, Prasanna Balaprakash, Eric Rask, Jane Macfarlane

Auto-TLDR; Transfer Learning for Highway Traffic Forecasting on Unseen Traffic Networks

Abstract Slides Poster Similar

Edge-Aware Graph Attention Network for Ratio of Edge-User Estimation in Mobile Networks

Jiehui Deng, Sheng Wan, Xiang Wang, Enmei Tu, Xiaolin Huang, Jie Yang, Chen Gong

Auto-TLDR; EAGAT: Edge-Aware Graph Attention Network for Automatic REU Estimation in Mobile Networks

Abstract Slides Poster Similar

AOAM: Automatic Optimization of Adjacency Matrix for Graph Convolutional Network

Yuhang Zhang, Hongshuai Ren, Jiexia Ye, Xitong Gao, Yang Wang, Kejiang Ye, Cheng-Zhong Xu

Auto-TLDR; Adjacency Matrix for Graph Convolutional Network in Non-Euclidean Space

Abstract Slides Poster Similar

MA-LSTM: A Multi-Attention Based LSTM for Complex Pattern Extraction

Jingjie Guo, Kelang Tian, Kejiang Ye, Cheng-Zhong Xu

Auto-TLDR; MA-LSTM: Multiple Attention based recurrent neural network for forget gate

Abstract Slides Poster Similar

Graph Convolutional Neural Networks for Power Line Outage Identification

Auto-TLDR; Graph Convolutional Networks for Power Line Outage Identification

GCNs-Based Context-Aware Short Text Similarity Model

Auto-TLDR; Context-Aware Graph Convolutional Network for Text Similarity

Abstract Slides Poster Similar

Label Incorporated Graph Neural Networks for Text Classification

Yuan Xin, Linli Xu, Junliang Guo, Jiquan Li, Xin Sheng, Yuanyuan Zhou

Auto-TLDR; Graph Neural Networks for Semi-supervised Text Classification

Abstract Slides Poster Similar

Trajectory-User Link with Attention Recurrent Networks

Tao Sun, Yongjun Xu, Fei Wang, Lin Wu, 塘文 钱, Zezhi Shao

Auto-TLDR; TULAR: Trajectory-User Link with Attention Recurrent Neural Networks

Abstract Slides Poster Similar

A Two-Stream Recurrent Network for Skeleton-Based Human Interaction Recognition

Qianhui Men, Edmond S. L. Ho, Shum Hubert P. H., Howard Leung

Auto-TLDR; Two-Stream Recurrent Neural Network for Human-Human Interaction Recognition

Abstract Slides Poster Similar

Learning Connectivity with Graph Convolutional Networks

Auto-TLDR; Learning Graph Convolutional Networks Using Topological Properties of Graphs

Abstract Slides Poster Similar

More Correlations Better Performance: Fully Associative Networks for Multi-Label Image Classification

Auto-TLDR; Fully Associative Network for Fully Exploiting Correlation Information in Multi-Label Classification

Abstract Slides Poster Similar

Zero-Shot Text Classification with Semantically Extended Graph Convolutional Network

Tengfei Liu, Yongli Hu, Junbin Gao, Yanfeng Sun, Baocai Yin

Auto-TLDR; Semantically Extended Graph Convolutional Network for Zero-shot Text Classification

Abstract Slides Poster Similar

Cross-Supervised Joint-Event-Extraction with Heterogeneous Information Networks

Yue Wang, Zhuo Xu, Yao Wan, Lu Bai, Lixin Cui, Qian Zhao, Edwin Hancock, Philip Yu

Auto-TLDR; Joint-Event-extraction from Unstructured corpora using Structural Information Network

Abstract Slides Poster Similar

PIN: A Novel Parallel Interactive Network for Spoken Language Understanding

Peilin Zhou, Zhiqi Huang, Fenglin Liu, Yuexian Zou

Auto-TLDR; Parallel Interactive Network for Spoken Language Understanding

Abstract Slides Poster Similar

Data Normalization for Bilinear Structures in High-Frequency Financial Time-Series

Dat Thanh Tran, Juho Kanniainen, Moncef Gabbouj, Alexandros Iosifidis

Auto-TLDR; Bilinear Normalization for Financial Time-Series Analysis and Forecasting

Abstract Slides Poster Similar

Reinforcement Learning with Dual Attention Guided Graph Convolution for Relation Extraction

Zhixin Li, Yaru Sun, Suqin Tang, Canlong Zhang, Huifang Ma

Auto-TLDR; Dual Attention Graph Convolutional Network for Relation Extraction

Abstract Slides Poster Similar

Recurrent Graph Convolutional Networks for Skeleton-Based Action Recognition

Guangming Zhu, Lu Yang, Liang Zhang, Peiyi Shen, Juan Song

Auto-TLDR; Recurrent Graph Convolutional Network for Human Action Recognition

Abstract Slides Poster Similar

Kernel-based Graph Convolutional Networks

Auto-TLDR; Spatial Graph Convolutional Networks in Recurrent Kernel Hilbert Space

Abstract Slides Poster Similar

Exploring Spatial-Temporal Representations for fNIRS-based Intimacy Detection via an Attention-enhanced Cascade Convolutional Recurrent Neural Network

Chao Li, Qian Zhang, Ziping Zhao

Auto-TLDR; Intimate Relationship Prediction by Attention-enhanced Cascade Convolutional Recurrent Neural Network Using Functional Near-Infrared Spectroscopy

Abstract Slides Poster Similar

PICK: Processing Key Information Extraction from Documents Using Improved Graph Learning-Convolutional Networks

Wenwen Yu, Ning Lu, Xianbiao Qi, Ping Gong, Rong Xiao

Auto-TLDR; PICK: A Graph Learning Framework for Key Information Extraction from Documents

Abstract Slides Poster Similar

On the Global Self-attention Mechanism for Graph Convolutional Networks

Auto-TLDR; Global Self-Attention Mechanism for Graph Convolutional Networks

Detecting and Adapting to Crisis Pattern with Context Based Deep Reinforcement Learning

Eric Benhamou, David Saltiel Saltiel, Jean-Jacques Ohana Ohana, Jamal Atif Atif

Auto-TLDR; Deep Reinforcement Learning for Financial Crisis Detection and Dis-Investment

Abstract Slides Poster Similar

Channel-Wise Dense Connection Graph Convolutional Network for Skeleton-Based Action Recognition

Michael Lao Banteng, Zhiyong Wu

Auto-TLDR; Two-stream channel-wise dense connection GCN for human action recognition

Abstract Slides Poster Similar

End-To-End Multi-Task Learning of Missing Value Imputation and Forecasting in Time-Series Data

Jinhee Kim, Taesung Kim, Jang-Ho Choi, Jaegul Choo

Auto-TLDR; Time-Series Prediction with Denoising and Imputation of Missing Data

Abstract Slides Poster Similar

Enhanced User Interest and Expertise Modeling for Expert Recommendation

Tongze He, Caili Guo, Yunfei Chu

Auto-TLDR; A Unified Framework for Expert Recommendation in Community Question Answering

Abstract Slides Poster Similar

Emerging Relation Network and Task Embedding for Multi-Task Regression Problems

Auto-TLDR; A Comparative Study of Multi-Task Learning for Non-linear Time Series Problems

Abstract Slides Poster Similar

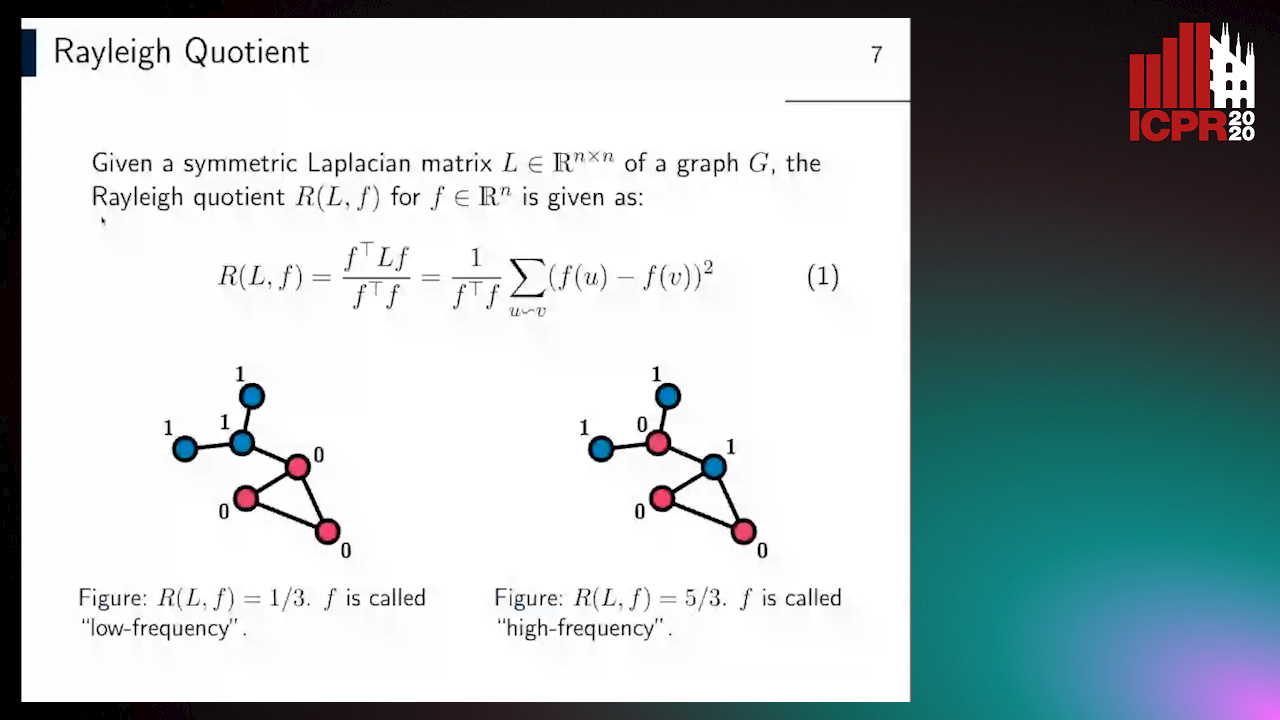

Revisiting Graph Neural Networks: Graph Filtering Perspective

Hoang Nguyen-Thai, Takanori Maehara, Tsuyoshi Murata

Auto-TLDR; Two-Layers Graph Convolutional Network with Graph Filters Neural Network

Abstract Slides Poster Similar

What Nodes Vote To? Graph Classification without Readout Phase

Yuxing Tian, Zheng Liu, Weiding Liu, Zeyu Zhang, Yanwen Qu

Auto-TLDR; node voting based graph classification with convolutional operator

Abstract Slides Poster Similar

Temporal Collaborative Filtering with Graph Convolutional Neural Networks

Esther Rodrigo-Bonet, Minh Duc Nguyen, Nikos Deligiannis

Auto-TLDR; Temporal Collaborative Filtering with Graph-Neural-Network-based Neural Networks

Abstract Slides Poster Similar

Moto: Enhancing Embedding with Multiple Joint Factors for Chinese Text Classification

Xunzhu Tang, Rujie Zhu, Tiezhu Sun

Auto-TLDR; Moto: Enhancing Embedding with Multiple J\textbf{o}int Fac\textBF{to}rs

Abstract Slides Poster Similar

Region and Relations Based Multi Attention Network for Graph Classification

Manasvi Aggarwal, M. Narasimha Murty

Auto-TLDR; R2POOL: A Graph Pooling Layer for Non-euclidean Structures

Abstract Slides Poster Similar

SAT-Net: Self-Attention and Temporal Fusion for Facial Action Unit Detection

Zhihua Li, Zheng Zhang, Lijun Yin

Auto-TLDR; Temporal Fusion and Self-Attention Network for Facial Action Unit Detection

Abstract Slides Poster Similar

A General Model for Learning Node and Graph Representations Jointly

Auto-TLDR; Joint Community Detection/Dynamic Routing for Graph Classification

Abstract Slides Poster Similar

Classification of Intestinal Gland Cell-Graphs Using Graph Neural Networks

Linda Studer, Jannis Wallau, Heather Dawson, Inti Zlobec, Andreas Fischer

Auto-TLDR; Graph Neural Networks for Classification of Dysplastic Gland Glands using Graph Neural Networks

Abstract Slides Poster Similar

Video-Based Facial Expression Recognition Using Graph Convolutional Networks

Daizong Liu, Hongting Zhang, Pan Zhou

Auto-TLDR; Graph Convolutional Network for Video-based Facial Expression Recognition

Abstract Slides Poster Similar

Temporal Attention-Augmented Graph Convolutional Network for Efficient Skeleton-Based Human Action Recognition

Negar Heidari, Alexandros Iosifidis

Auto-TLDR; Temporal Attention Module for Efficient Graph Convolutional Network-based Action Recognition

Abstract Slides Poster Similar

Global Feature Aggregation for Accident Anticipation

Mishal Fatima, Umar Karim Khan, Chong Min Kyung

Auto-TLDR; Feature Aggregation for Predicting Accidents in Video Sequences

Privacy Attributes-Aware Message Passing Neural Network for Visual Privacy Attributes Classification

Hanbin Hong, Wentao Bao, Yuan Hong, Yu Kong

Auto-TLDR; Privacy Attributes-Aware Message Passing Neural Network for Visual Privacy Attribute Classification

Abstract Slides Poster Similar

Visual Oriented Encoder: Integrating Multimodal and Multi-Scale Contexts for Video Captioning

Auto-TLDR; Visual Oriented Encoder for Video Captioning

Abstract Slides Poster Similar

Boundary-Aware Graph Convolution for Semantic Segmentation

Hanzhe Hu, Jinshi Cui, Jinshi Hongbin Zha

Auto-TLDR; Boundary-Aware Graph Convolution for Semantic Segmentation

Abstract Slides Poster Similar

Road Network Metric Learning for Estimated Time of Arrival

Yiwen Sun, Kun Fu, Zheng Wang, Changshui Zhang, Jieping Ye

Auto-TLDR; Road Network Metric Learning for Estimated Time of Arrival (RNML-ETA)

Abstract Slides Poster Similar

MFI: Multi-Range Feature Interchange for Video Action Recognition

Sikai Bai, Qi Wang, Xuelong Li

Auto-TLDR; Multi-range Feature Interchange Network for Action Recognition in Videos

Abstract Slides Poster Similar

Regularized Flexible Activation Function Combinations for Deep Neural Networks

Renlong Jie, Junbin Gao, Andrey Vasnev, Minh-Ngoc Tran

Auto-TLDR; Flexible Activation in Deep Neural Networks using ReLU and ELUs

Abstract Slides Poster Similar

Equation Attention Relationship Network (EARN) : A Geometric Deep Metric Framework for Learning Similar Math Expression Embedding

Saleem Ahmed, Kenny Davila, Srirangaraj Setlur, Venu Govindaraju

Auto-TLDR; Representational Learning for Similarity Based Retrieval of Mathematical Expressions

Abstract Slides Poster Similar

EasiECG: A Novel Inter-Patient Arrhythmia Classification Method Using ECG Waves

Chuanqi Han, Ruoran Huang, Fang Yu, Xi Huang, Li Cui

Auto-TLDR; EasiECG: Attention-based Convolution Factorization Machines for Arrhythmia Classification

Abstract Slides Poster Similar

DAG-Net: Double Attentive Graph Neural Network for Trajectory Forecasting

Alessio Monti, Alessia Bertugli, Simone Calderara, Rita Cucchiara

Auto-TLDR; Recurrent Generative Model for Multi-modal Human Motion Behaviour in Urban Environments

Abstract Slides Poster Similar