Detecting and Adapting to Crisis Pattern with Context Based Deep Reinforcement Learning

Eric Benhamou,

David Saltiel,

Jean-Jacques Ohana,

Jamal Atif

Auto-TLDR; Deep Reinforcement Learning for Financial Crisis Detection and Dis-Investment

Similar papers

Deep Reinforcement Learning on a Budget: 3D Control and Reasoning without a Supercomputer

Edward Beeching, Jilles Steeve Dibangoye, Olivier Simonin, Christian Wolf

Auto-TLDR; Deep Reinforcement Learning in Mobile Robots Using 3D Environment Scenarios

The Effect of Multi-Step Methods on Overestimation in Deep Reinforcement Learning

Lingheng Meng, Rob Gorbet, Dana Kulić

Auto-TLDR; Multi-Step DDPG for Deep Reinforcement Learning

Learning from Learners: Adapting Reinforcement Learning Agents to Be Competitive in a Card Game

Pablo Vinicius Alves De Barros, Ana Tanevska, Alessandra Sciutti

Auto-TLDR; Adaptive Reinforcement Learning for Competitive Card Games

Low Dimensional State Representation Learning with Reward-Shaped Priors

Nicolò Botteghi, Ruben Obbink, Daan Geijs, Mannes Poel, Beril Sirmacek, Christoph Brune, Abeje Mersha, Stefano Stramigioli

Auto-TLDR; Unsupervised Learning for Unsupervised Reinforcement Learning in Robotics

Self-Play or Group Practice: Learning to Play Alternating Markov Game in Multi-Agent System

Chin-Wing Leung, Shuyue Hu, Ho-Fung Leung

Auto-TLDR; Group Practice for Deep Reinforcement Learning

Can Reinforcement Learning Lead to Healthy Life?: Simulation Study Based on User Activity Logs

Masami Takahashi, Masahiro Kohjima, Takeshi Kurashima, Hiroyuki Toda

Auto-TLDR; Reinforcement Learning for Healthy Daily Life

AVD-Net: Attention Value Decomposition Network for Deep Multi-Agent Reinforcement Learning

Zhang Yuanxin, Huimin Ma, Yu Wang

Auto-TLDR; Attention Value Decomposition Network for Cooperative Multi-agent Reinforcement Learning

Meta Learning Via Learned Loss

Sarah Bechtle, Artem Molchanov, Yevgen Chebotar, Edward Thomas Grefenstette, Ludovic Righetti, Gaurav Sukhatme, Franziska Meier

Auto-TLDR; meta-learning for learning parametric loss functions that generalize across different tasks and model architectures

Object-Oriented Map Exploration and Construction Based on Auxiliary Task Aided DRL

Junzhe Xu, Jianhua Zhang, Shengyong Chen, Honghai Liu

Auto-TLDR; Auxiliary Task Aided Deep Reinforcement Learning for Environment Exploration by Autonomous Robots

A Bayesian Approach to Reinforcement Learning of Vision-Based Vehicular Control

Zahra Gharaee, Karl Holmquist, Linbo He, Michael Felsberg

Auto-TLDR; Bayesian Reinforcement Learning for Autonomous Driving

Trajectory Representation Learning for Multi-Task NMRDP Planning

Firas Jarboui, Vianney Perchet

Auto-TLDR; Exploring Non Markovian Reward Decision Processes for Reinforcement Learning

Abstract Slides Poster Similar

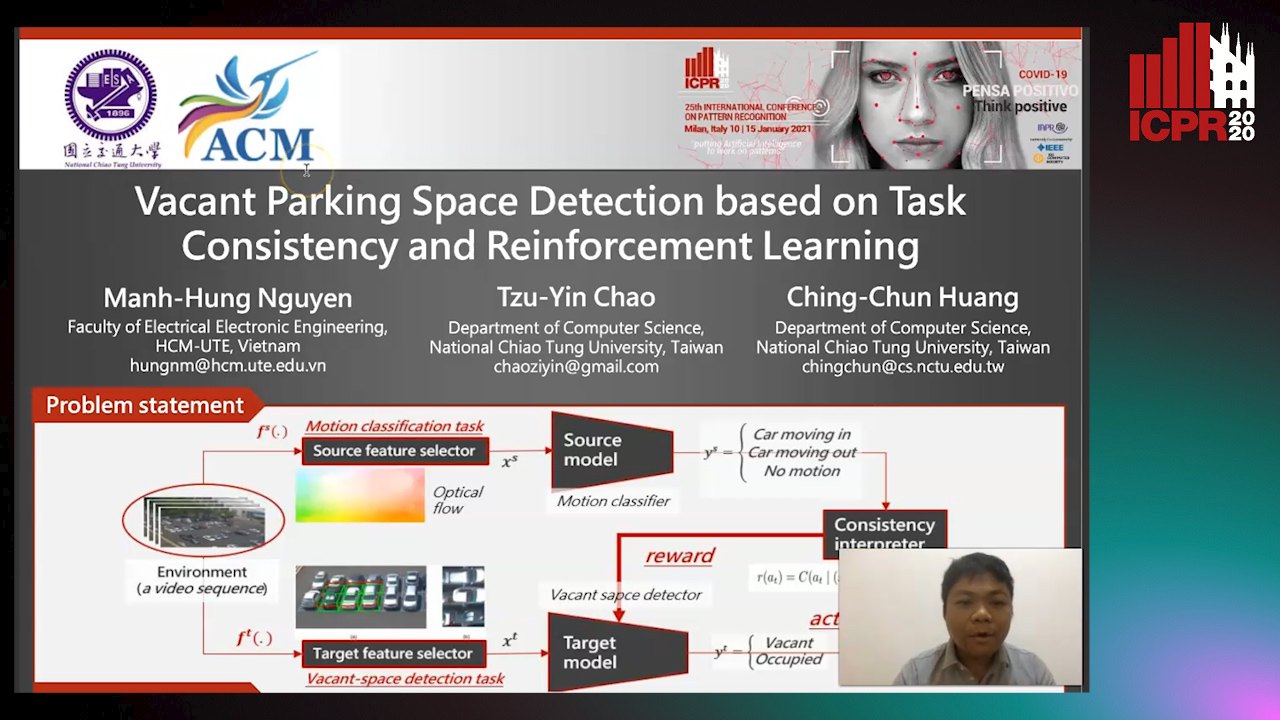

Vacant Parking Space Detection Based on Task Consistency and Reinforcement Learning

Manh Hung Nguyen, Tzu-Yin Chao, Ching-Chun Huang

Auto-TLDR; Vacant Space Detection via Semantic Consistency Learning

Deep Reinforcement Learning for Autonomous Driving by Transferring Visual Features

Hongli Zhou, Guanwen Zhang, Wei Zhou

Auto-TLDR; Deep Reinforcement Learning for Autonomous Driving by Transferring Visual Features

Adaptive Remote Sensing Image Attribute Learning for Active Object Detection

Nuo Xu, Chunlei Huo, Chunhong Pan

Auto-TLDR; Adaptive Image Attribute Learning for Active Object Detection

Explore and Explain: Self-Supervised Navigation and Recounting

Roberto Bigazzi, Federico Landi, Marcella Cornia, Silvia Cascianelli, Lorenzo Baraldi, Rita Cucchiara

Auto-TLDR; Exploring a Photorealistic Environment for Explanation and Navigation

Multi-Graph Convolutional Network for Relationship-Driven Stock Movement Prediction

Jiexia Ye, Juanjuan Zhao, Kejiang Ye, Cheng-Zhong Xu

Auto-TLDR; Multi-GCGRU: A Deep Learning Framework for Stock Price Prediction with Cross Effect

On Embodied Visual Navigation in Real Environments through Habitat

Marco Rosano, Antonino Furnari, Luigi Gulino, Giovanni Maria Farinella

Auto-TLDR; Learning Navigation Policies on Real World Observations using Real World Images and Sensor and Actuation Noise

AOAM: Automatic Optimization of Adjacency Matrix for Graph Convolutional Network

Yuhang Zhang, Hongshuai Ren, Jiexia Ye, Xitong Gao, Yang Wang, Kejiang Ye, Cheng-Zhong Xu

Auto-TLDR; Adjacency Matrix for Graph Convolutional Network in Non-Euclidean Space

A Novel Actor Dual-Critic Model for Remote Sensing Image Captioning

Ruchika Chavhan, Biplab Banerjee, Xiao Xiang Zhu, Subhasis Chaudhuri

Auto-TLDR; Actor Dual-Critic Training for Remote Sensing Image Captioning Using Deep Reinforcement Learning

Visual Object Tracking in Drone Images with Deep Reinforcement Learning

Auto-TLDR; A Deep Reinforcement Learning based Single Object Tracker for Drone Applications

RLST: A Reinforcement Learning Approach to Scene Text Detection Refinement

Xuan Peng, Zheng Huang, Kai Chen, Jie Guo, Weidong Qiu

Auto-TLDR; Saccadic Eye Movements and Peripheral Vision for Scene Text Detection using Reinforcement Learning

ActionSpotter: Deep Reinforcement Learning Framework for Temporal Action Spotting in Videos

Guillaume Vaudaux-Ruth, Adrien Chan-Hon-Tong, Catherine Achard

Auto-TLDR; ActionSpotter: A Reinforcement Learning Algorithm for Action Spotting in Video

DAG-Net: Double Attentive Graph Neural Network for Trajectory Forecasting

Alessio Monti, Alessia Bertugli, Simone Calderara, Rita Cucchiara

Auto-TLDR; Recurrent Generative Model for Multi-modal Human Motion Behaviour in Urban Environments

Improving Visual Question Answering Using Active Perception on Static Images

Theodoros Bozinis, Nikolaos Passalis, Anastasios Tefas

Auto-TLDR; Fine-Grained Visual Question Answering with Reinforcement Learning-based Active Perception

Seasonal Inhomogeneous Nonconsecutive Arrival Process Search and Evaluation

Kimberly Holmgren, Paul Gibby, Joseph Zipkin

Auto-TLDR; SINAPSE: Fitting a Sparse Time Series Model to Seasonal Data

Data Normalization for Bilinear Structures in High-Frequency Financial Time-Series

Dat Thanh Tran, Juho Kanniainen, Moncef Gabbouj, Alexandros Iosifidis

Auto-TLDR; Bilinear Normalization for Financial Time-Series Analysis and Forecasting

Deep Next-Best-View Planner for Cross-Season Visual Route Classification

Auto-TLDR; Active Visual Place Recognition using Deep Convolutional Neural Network

Dealing with Scarce Labelled Data: Semi-Supervised Deep Learning with Mix Match for Covid-19 Detection Using Chest X-Ray Images

Saúl Calderón Ramirez, Raghvendra Giri, Shengxiang Yang, Armaghan Moemeni, Mario Umaña, David Elizondo, Jordina Torrents-Barrena, Miguel A. Molina-Cabello

Auto-TLDR; Semi-supervised Deep Learning for Covid-19 Detection using Chest X-rays

Improving Gravitational Wave Detection with 2D Convolutional Neural Networks

Siyu Fan, Yisen Wang, Yuan Luo, Alexander Michael Schmitt, Shenghua Yu

Auto-TLDR; Two-dimensional Convolutional Neural Networks for Gravitational Wave Detection from Time Series with Background Noise

Regularized Flexible Activation Function Combinations for Deep Neural Networks

Renlong Jie, Junbin Gao, Andrey Vasnev, Minh-Ngoc Tran

Auto-TLDR; Flexible Activation in Deep Neural Networks using ReLU and ELUs

Recurrent Deep Attention Network for Person Re-Identification

Changhao Wang, Jun Zhou, Xianfei Duan, Guanwen Zhang, Wei Zhou

Auto-TLDR; Recurrent Deep Attention Network for Person Re-identification

E-DNAS: Differentiable Neural Architecture Search for Embedded Systems

Javier García López, Antonio Agudo, Francesc Moreno-Noguer

Auto-TLDR; E-DNAS: Differentiable Architecture Search for Light-Weight Networks for Image Classification

Switching Dynamical Systems with Deep Neural Networks

Cesar Ali Ojeda Marin, Kostadin Cvejoski, Bogdan Georgiev, Ramses J. Sanchez

Auto-TLDR; Variational RNN for Switching Dynamics

Transfer Learning with Graph Neural Networks for Short-Term Highway Traffic Forecasting

Tanwi Mallick, Prasanna Balaprakash, Eric Rask, Jane Macfarlane

Auto-TLDR; Transfer Learning for Highway Traffic Forecasting on Unseen Traffic Networks

ILS-SUMM: Iterated Local Search for Unsupervised Video Summarization

Yair Shemer, Daniel Rotman, Nahum Shimkin

Auto-TLDR; ILS-SUMM: Iterated Local Search for Video Summarization

MA-LSTM: A Multi-Attention Based LSTM for Complex Pattern Extraction

Jingjie Guo, Kelang Tian, Kejiang Ye, Cheng-Zhong Xu

Auto-TLDR; MA-LSTM: Multiple Attention based recurrent neural network for forget gate

Learning with Delayed Feedback

Pranavan Theivendiram, Terence Sim

Auto-TLDR; Unsupervised Machine Learning with Delayed Feedback

Dual-Memory Model for Incremental Learning: The Handwriting Recognition Use Case

Mélanie Piot, Bérangère Bourdoulous, Aurelia Deshayes, Lionel Prevost

Auto-TLDR; A dual memory model for handwriting recognition

Trainable Spectrally Initializable Matrix Transformations in Convolutional Neural Networks

Michele Alberti, Angela Botros, Schuetz Narayan, Rolf Ingold, Marcus Liwicki, Mathias Seuret

Auto-TLDR; Trainable and Spectrally Initializable Matrix Transformations for Neural Networks

Emerging Relation Network and Task Embedding for Multi-Task Regression Problems

Auto-TLDR; A Comparative Study of Multi-Task Learning for Non-linear Time Series Problems

Learning Stable Deep Predictive Coding Networks with Weight Norm Supervision

Auto-TLDR; Stability of Predictive Coding Network with Weight Norm Supervision

Algorithm Recommendation for Data Streams

Jáder Martins Camboim De Sá, Andre Luis Debiaso Rossi, Gustavo Enrique De Almeida Prado Alves Batista, Luís Paulo Faina Garcia

Auto-TLDR; Meta-Learning for Algorithm Selection in Time-Changing Data Streams

Real-Time End-To-End Lane ID Estimation Using Recurrent Networks

Ibrahim Halfaoui, Fahd Bouzaraa, Onay Urfalioglu

Auto-TLDR; Real-Time, Vision-Only Lane Identification Using Monocular Camera

Generalization Comparison of Deep Neural Networks Via Output Sensitivity

Mahsa Forouzesh, Farnood Salehi, Patrick Thiran

Auto-TLDR; Generalization of Deep Neural Networks using Sensitivity

Quantifying Model Uncertainty in Inverse Problems Via Bayesian Deep Gradient Descent

Riccardo Barbano, Chen Zhang, Simon Arridge, Bangti Jin

Auto-TLDR; Bayesian Neural Networks for Inverse Reconstruction via Bayesian Knowledge-Aided Computation

RNN Training along Locally Optimal Trajectories via Frank-Wolfe Algorithm

Yun Yue, Ming Li, Venkatesh Saligrama, Ziming Zhang

Auto-TLDR; Frank-Wolfe Algorithm for Efficient Training of RNNs

Abstract Slides Poster Similar

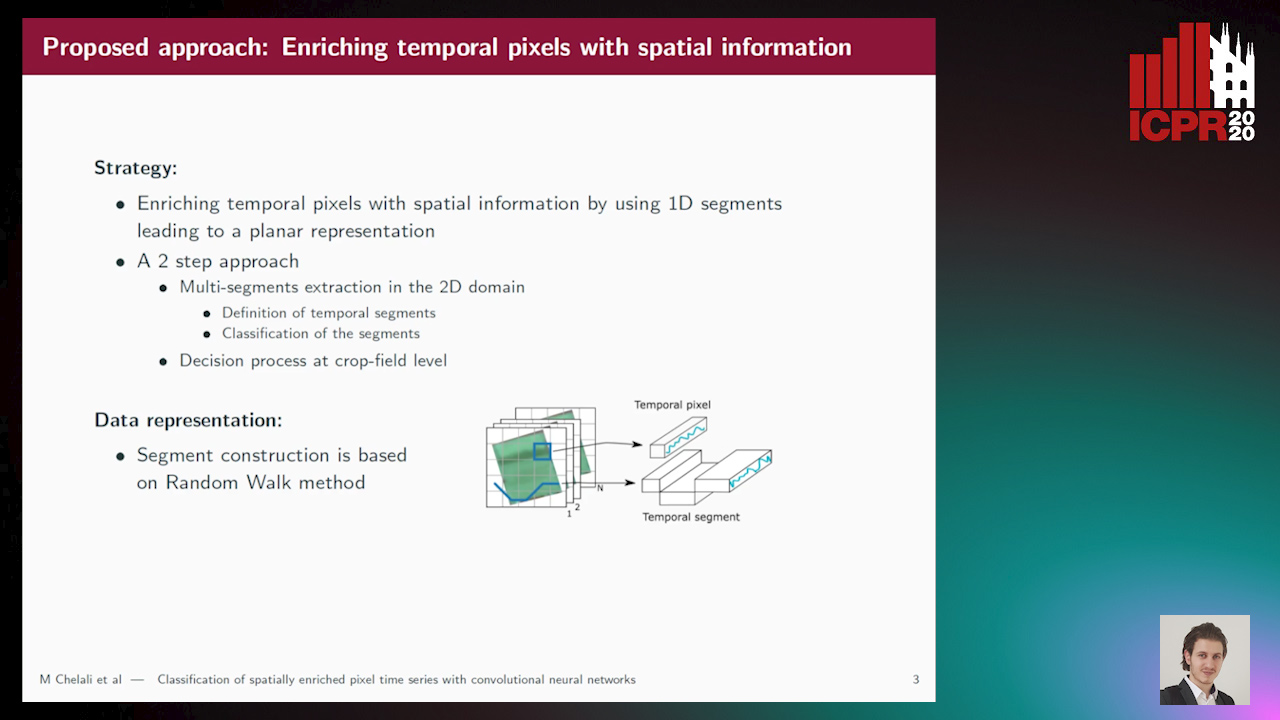

Classification of Spatially Enriched Pixel Time Series with Convolutional Neural Networks

Mohamed Chelali, Camille Kurtz, Anne Puissant, Nicole Vincent

Auto-TLDR; Spatio-Temporal Features Extraction from Satellite Image Time Series Using Random Walk

Improving Robotic Grasping on Monocular Images Via Multi-Task Learning and Positional Loss

William Prew, Toby Breckon, Magnus Bordewich, Ulrik Beierholm

Auto-TLDR; Improving grasping performance from monocular colour images in an end-to-end CNN architecture with multi-task learning
