Dual-Memory Model for Incremental Learning: The Handwriting Recognition Use Case

Mélanie Piot, Bérangère Bourdoulous, Aurelia Deshayes, Lionel Prevost

Auto-TLDR; A dual memory model for handwriting recognition

Similar papers

Semi-Supervised Class Incremental Learning

Alexis Lechat, Stéphane Herbin, Frederic Jurie

Auto-TLDR; incremental class learning with non-annotated batches

Class-Incremental Learning with Pre-Allocated Fixed Classifiers

Federico Pernici, Matteo Bruni, Claudio Baecchi, Francesco Turchini, Alberto Del Bimbo

Auto-TLDR; Class-Incremental Learning with Pre-allocated Output Nodes for Fixed Classifier

Rethinking Experience Replay: A Bag of Tricks for Continual Learning

Pietro Buzzega, Matteo Boschini, Angelo Porrello, Simone Calderara

Auto-TLDR; Experience Replay for Continual Learning: A Practical Approach

Energy Minimum Regularization in Continual Learning

Auto-TLDR; Energy Minimization Regularization for Continuous Learning

Class-Incremental Learning with Topological Schemas of Memory Spaces

Xinyuan Chang, Xiaoyu Tao, Xiaopeng Hong, Xing Wei, Wei Ke, Yihong Gong

Auto-TLDR; Class-incremental Learning with Topological Schematic Model

Naturally Constrained Online Expectation Maximization

Daniela Pamplona, Antoine Manzanera

Auto-TLDR; Constrained Online Expectation-Maximization for Probabilistic Principal Components Analysis

RSAC: Regularized Subspace Approximation Classifier for Lightweight Continuous Learning

Auto-TLDR; Regularized Subspace Approximation Classifier for Lightweight Continuous Learning

Selecting Useful Knowledge from Previous Tasks for Future Learning in a Single Network

Feifei Shi, Peng Wang, Zhongchao Shi, Yong Rui

Auto-TLDR; Continual Learning with Gradient-based Threshold

Learning with Delayed Feedback

Pranavan Theivendiram, Terence Sim

Auto-TLDR; Unsupervised Machine Learning with Delayed Feedback

Algorithm Recommendation for Data Streams

Jáder Martins Camboim De Sá, Andre Luis Debiaso Rossi, Gustavo Enrique De Almeida Prado Alves Batista, Luís Paulo Faina Garcia

Auto-TLDR; Meta-Learning for Algorithm Selection in Time-Changing Data Streams

Pseudo Rehearsal Using Non Photo-Realistic Images

Bhasker Sri Harsha Suri, Kalidas Yeturu

Auto-TLDR; Pseudo-Rehearsing for Catastrophic Forgetting

ARCADe: A Rapid Continual Anomaly Detector

Ahmed Frikha, Denis Krompass, Volker Tresp

Auto-TLDR; ARCADe: A Meta-Learning Approach for Continuous Anomaly Detection

Decision Snippet Features

Pascal Welke, Fouad Alkhoury, Christian Bauckhage, Stefan Wrobel

Auto-TLDR; Decision Snippet Features for Interpretability

A Novel Random Forest Dissimilarity Measure for Multi-View Learning

Hongliu Cao, Simon Bernard, Robert Sabourin, Laurent Heutte

Auto-TLDR; Multi-view Learning with Random Forest Relation Measure and Instance Hardness

Sequential Domain Adaptation through Elastic Weight Consolidation for Sentiment Analysis

Avinash Madasu, Anvesh Rao Vijjini

Auto-TLDR; Sequential Domain Adaptation using Elastic Weight Consolidation for Sentiment Analysis

On Learning Random Forests for Random Forest Clustering

Manuele Bicego, Francisco Escolano

Auto-TLDR; Learning Random Forests for Clustering

Drift Anticipation with Forgetting to Improve Evolving Fuzzy System

Clément Leroy, Eric Anquetil, Nathalie Girard

Auto-TLDR; A coherent method to integrate forgetting in Evolving Fuzzy System

Proximity Isolation Forests

Antonella Mensi, Manuele Bicego, David Tax

Auto-TLDR; Proximity Isolation Forests for Non-vectorial Data

Personalized Models in Human Activity Recognition Using Deep Learning

Hamza Amrani, Daniela Micucci, Paolo Napoletano

Auto-TLDR; Incremental Learning for Personalized Human Activity Recognition

Hierarchical Routing Mixture of Experts

Wenbo Zhao, Yang Gao, Shahan Ali Memon, Bhiksha Raj, Rita Singh

Auto-TLDR; A Binary Tree-structured Hierarchical Routing Mixture of Experts for Regression

A Close Look at Deep Learning with Small Data

Auto-TLDR; Low-Complex Neural Networks for Small Data Conditions

Deep Transfer Learning for Alzheimer’s Disease Detection

Nicole Cilia, Claudio De Stefano, Francesco Fontanella, Claudio Marrocco, Mario Molinara, Alessandra Scotto Di Freca

Auto-TLDR; Automatic Detection of Handwriting Alterations for Alzheimer's Disease Diagnosis using Dynamic Features

A Heuristic-Based Decision Tree for Connected Components Labeling of 3D Volumes

Maximilian Söchting, Stefano Allegretti, Federico Bolelli, Costantino Grana

Auto-TLDR; Entropy Partitioning Decision Tree for Connected Components Labeling

Multimodal Side-Tuning for Document Classification

Stefano Zingaro, Giuseppe Lisanti, Maurizio Gabbrielli

Auto-TLDR; Side-tuning for Multimodal Document Classification

How to Define a Rejection Class Based on Model Learning?

Sarah Laroui, Xavier Descombes, Aurelia Vernay, Florent Villiers, Francois Villalba, Eric Debreuve

Auto-TLDR; An innovative learning strategy for supervised classification that is able, by design, to reject a sample as not belonging to any of the known classes

Learning Stable Deep Predictive Coding Networks with Weight Norm Supervision

Auto-TLDR; Stability of Predictive Coding Network with Weight Norm Supervision

Abstract Slides Poster Similar

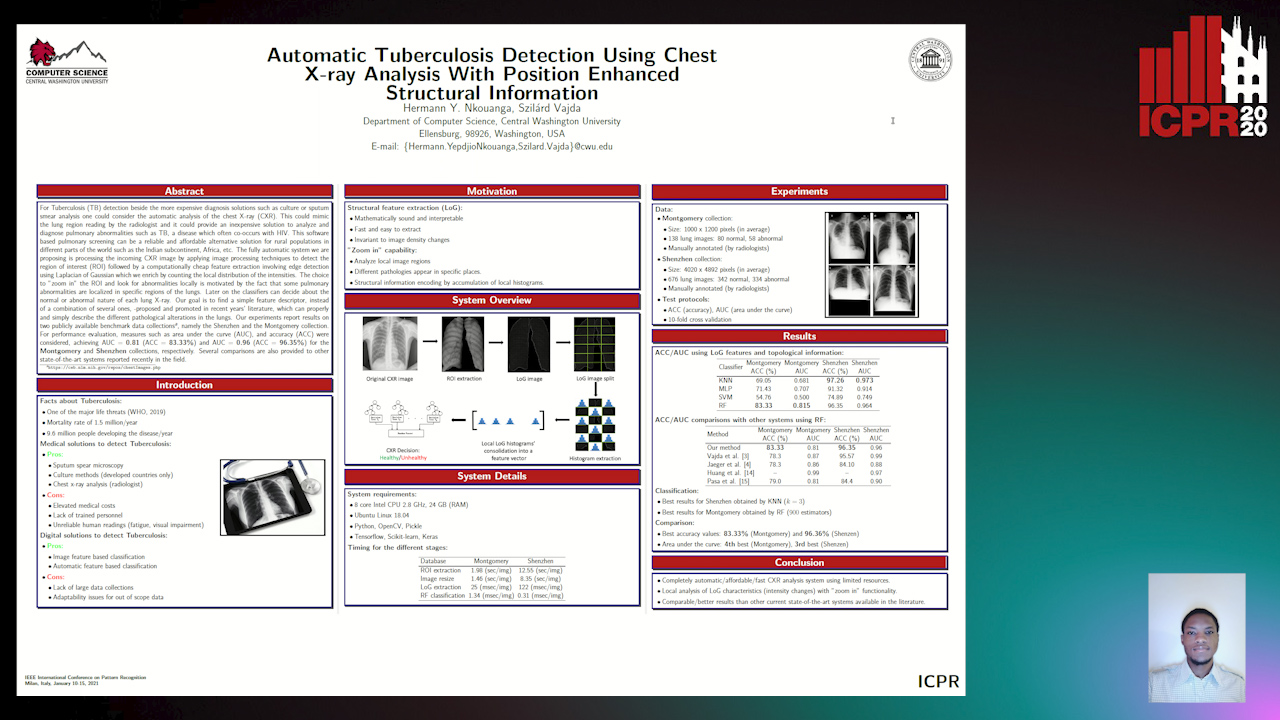

Automatic Tuberculosis Detection Using Chest X-Ray Analysis with Position Enhanced Structural Information

Hermann Jepdjio Nkouanga, Szilard Vajda

Auto-TLDR; Automatic Chest X-ray Screening for Tuberculosis in Rural Population using Localized Region of Interest

Abstract Slides Poster Similar

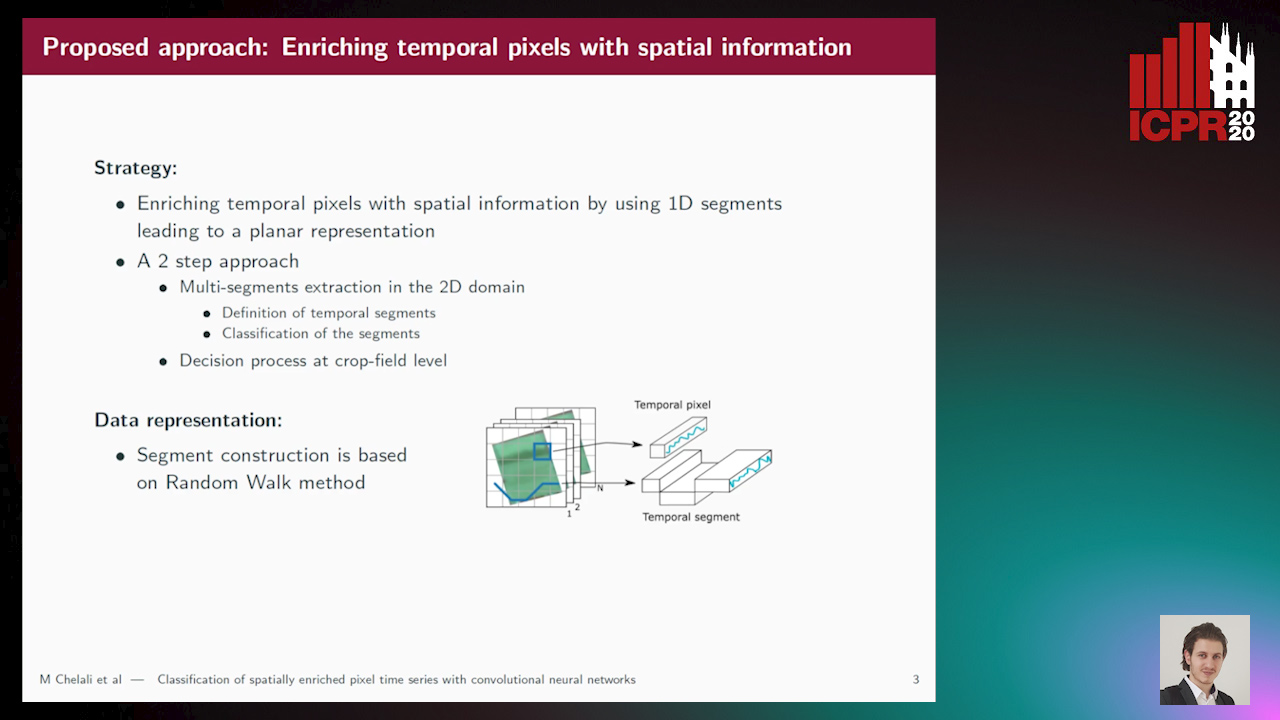

Classification of Spatially Enriched Pixel Time Series with Convolutional Neural Networks

Mohamed Chelali, Camille Kurtz, Anne Puissant, Nicole Vincent

Auto-TLDR; Spatio-Temporal Features Extraction from Satellite Image Time Series Using Random Walk

Incrementally Zero-Shot Detection by an Extreme Value Analyzer

Sixiao Zheng, Yanwei Fu, Yanxi Hou

Auto-TLDR; IZSD-EVer: Incremental Zero-Shot Detection for Incremental Learning

Exploring Spatial-Temporal Representations for fNIRS-based Intimacy Detection via an Attention-enhanced Cascade Convolutional Recurrent Neural Network

Chao Li, Qian Zhang, Ziping Zhao

Auto-TLDR; Intimate Relationship Prediction by Attention-enhanced Cascade Convolutional Recurrent Neural Network Using Functional Near-Infrared Spectroscopy

Mean Decision Rules Method with Smart Sampling for Fast Large-Scale Binary SVM Classification

Alexandra Makarova, Mikhail Kurbakov, Valentina Sulimova

Auto-TLDR; Improving Mean Decision Rule for Large-Scale Binary SVM Problems

An Adaptive Video-To-Video Face Identification System Based on Self-Training

Eric Lopez-Lopez, Carlos V. Regueiro, Xosé M. Pardo

Auto-TLDR; Adaptive Video-to-Video Face Recognition using Dynamic Ensembles of SVMs

The Effect of Multi-Step Methods on Overestimation in Deep Reinforcement Learning

Lingheng Meng, Rob Gorbet, Dana Kulić

Auto-TLDR; Multi-Step DDPG for Deep Reinforcement Learning

The HisClima Database: Historical Weather Logs for Automatic Transcription and Information Extraction

Verónica Romero, Joan Andreu Sánchez

Auto-TLDR; Automatic Handwritten Text Recognition and Information Extraction from Historical Weather Logs

Cross-People Mobile-Phone Based Airwriting Character Recognition

Yunzhe Li, Hui Zheng, He Zhu, Haojun Ai, Xiaowei Dong

Auto-TLDR; Cross-People Airwriting Recognition via Motion Sensor Signal via Deep Neural Network

Self-Supervised Joint Encoding of Motion and Appearance for First Person Action Recognition

Mirco Planamente, Andrea Bottino, Barbara Caputo

Auto-TLDR; A Single Stream Architecture for Egocentric Action Recognition from the First-Person Point of View

Recursive Convolutional Neural Networks for Epigenomics

Aikaterini Symeonidi, Anguelos Nicolaou, Frank Johannes, Vincent Christlein

Auto-TLDR; Recursive Convolutional Neural Networks for Epigenomic Data Analysis

Location Prediction in Real Homes of Older Adults based on K-Means in Low-Resolution Depth Videos

Simon Simonsson, Flávia Dias Casagrande, Evi Zouganeli

Auto-TLDR; Semi-supervised Learning for Location Recognition and Prediction in Smart Homes using Depth Video Cameras

RNN Training along Locally Optimal Trajectories via Frank-Wolfe Algorithm

Yun Yue, Ming Li, Venkatesh Saligrama, Ziming Zhang

Auto-TLDR; Frank-Wolfe Algorithm for Efficient Training of RNNs

Compression Strategies and Space-Conscious Representations for Deep Neural Networks

Giosuè Marinò, Gregorio Ghidoli, Marco Frasca, Dario Malchiodi

Auto-TLDR; Compression of Large Convolutional Neural Networks by Weight Pruning and Quantization

Recursive Recognition of Offline Handwritten Mathematical Expressions

Marco Cotogni, Claudio Cusano, Antonino Nocera

Auto-TLDR; Online Handwritten Mathematical Expression Recognition with Recurrent Neural Network

Detecting and Adapting to Crisis Pattern with Context Based Deep Reinforcement Learning

Eric Benhamou, David Saltiel, Jean-Jacques Ohana, Jamal Atif

Auto-TLDR; Deep Reinforcement Learning for Financial Crisis Detection and Dis-Investment

Feature Engineering and Stacked Echo State Networks for Musical Onset Detection

Peter Steiner, Azarakhsh Jalalvand, Simon Stone, Peter Birkholz

Auto-TLDR; Echo State Networks for Onset Detection in Music Analysis

Creating Classifier Ensembles through Meta-Heuristic Algorithms for Aerial Scene Classification

Álvaro Roberto Ferreira Jr., Gustavo Henrique De Rosa, Joao Paulo Papa, Gustavo Carneiro, Fabio Augusto Faria

Auto-TLDR; Univariate Marginal Distribution Algorithm for Aerial Scene Classification Using Meta-Heuristic Optimization

Smart Inference for Multidigit Convolutional Neural Network Based Barcode Decoding

Duy-Thao Do, Tolcha Yalew, Tae Joon Jun, Daeyoung Kim

Auto-TLDR; Smart Inference for Barcode Decoding using Deep Convolutional Neural Network

Abstract Slides Poster Similar

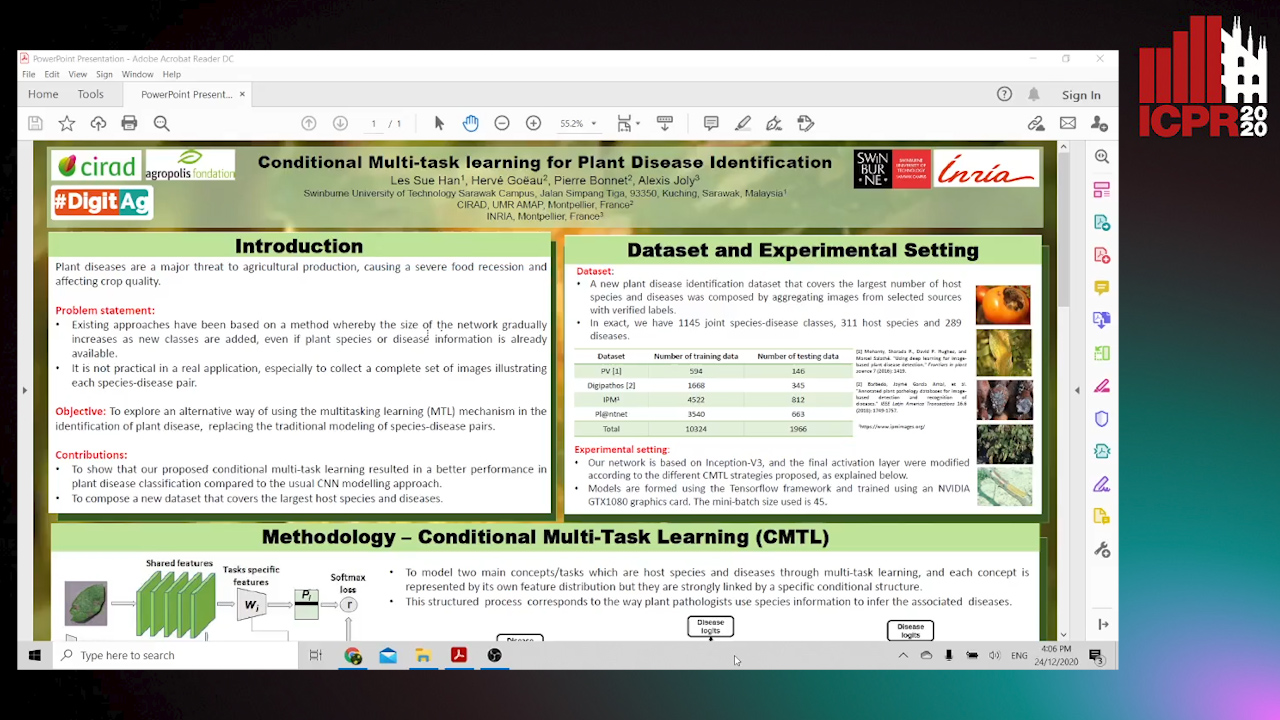

Conditional Multi-Task Learning for Plant Disease Identification

Sue Han Lee, Herve Goëau, Pierre Bonnet, Alexis Joly

Auto-TLDR; A conditional multi-task learning approach for plant disease identification

Handwritten Digit String Recognition Using Deep Autoencoder Based Segmentation and ResNet Based Recognition Approach

Anuran Chakraborty, Rajonya De, Samir Malakar, Friedhelm Schwenker, Ram Sarkar

Auto-TLDR; Handwritten Digit Strings Recognition Using Residual Network and Deep Autoencoder Based Segmentation

Continuous Learning of Face Attribute Synthesis

Ning Xin, Shaohui Xu, Fangzhe Nan, Xiaoli Dong, Weijun Li, Yuanzhou Yao

Auto-TLDR; Continuous Learning for Face Attribute Synthesis
