On Learning Random Forests for Random Forest Clustering

Manuele Bicego,

Francisco Escolano

Auto-TLDR; Learning Random Forests for Clustering

Similar papers

Proximity Isolation Forests

Antonella Mensi, Manuele Bicego, David Tax

Auto-TLDR; Proximity Isolation Forests for Non-vectorial Data

A Novel Random Forest Dissimilarity Measure for Multi-View Learning

Hongliu Cao, Simon Bernard, Robert Sabourin, Laurent Heutte

Auto-TLDR; Multi-view Learning with Random Forest Relation Measure and Instance Hardness

Decision Snippet Features

Pascal Welke, Fouad Alkhoury, Christian Bauckhage, Stefan Wrobel

Auto-TLDR; Decision Snippet Features for Interpretability

Hierarchical Routing Mixture of Experts

Wenbo Zhao, Yang Gao, Shahan Ali Memon, Bhiksha Raj, Rita Singh

Auto-TLDR; A Binary Tree-structured Hierarchical Routing Mixture of Experts for Regression

PowerHC: Non Linear Normalization of Distances for Advanced Nearest Neighbor Classification

Manuele Bicego, Mauricio Orozco-Alzate

Auto-TLDR; Non linear scaling of distances for advanced nearest neighbor classification

PIF: Anomaly detection via preference embedding

Filippo Leveni, Luca Magri, Giacomo Boracchi, Cesare Alippi

Auto-TLDR; PIF: Anomaly Detection with Preference Embedding for Structured Patterns

Aggregating Dependent Gaussian Experts in Local Approximation

Auto-TLDR; A novel approach for aggregating the Gaussian experts by detecting strong violations of conditional independence

Detecting Rare Cell Populations in Flow Cytometry Data Using UMAP

Lisa Weijler, Markus Diem, Michael Reiter

Auto-TLDR; Uniform Manifold Approximation and Projection for Small Cell Population Detection in Flow Cytometry Data

Temporal Pattern Detection in Time-Varying Graphical Models

Federico Tomasi, Veronica Tozzo, Annalisa Barla

Auto-TLDR; A dynamical network inference model that leverages kernels to consider general temporal patterns

Algorithm Recommendation for Data Streams

Jáder Martins Camboim De Sá, Andre Luis Debiaso Rossi, Gustavo Enrique De Almeida Prado Alves Batista, Luís Paulo Faina Garcia

Auto-TLDR; Meta-Learning for Algorithm Selection in Time-Changing Data Streams

Sketch-Based Community Detection Via Representative Node Sampling

Mahlagha Sedghi, Andre Beckus, George Atia

Auto-TLDR; Sketch-based Clustering of Community Detection Using a Small Sketch

A Heuristic-Based Decision Tree for Connected Components Labeling of 3D Volumes

Maximilian Söchting, Stefano Allegretti, Federico Bolelli, Costantino Grana

Auto-TLDR; Entropy Partitioning Decision Tree for Connected Components Labeling

An Invariance-Guided Stability Criterion for Time Series Clustering Validation

Florent Forest, Alex Mourer, Mustapha Lebbah, Hanane Azzag, Jérôme Lacaille

Auto-TLDR; An invariance-guided method for clustering model selection in time series data

Uniform and Non-Uniform Sampling Methods for Sub-Linear Time K-Means Clustering

Auto-TLDR; Sub-linear Time Clustering with Constant Approximation Ratio for K-Means Problem

The eXPose Approach to Crosslier Detection

Antonio Barata, Frank Takes, Hendrik Van Den Herik, Cor Veenman

Auto-TLDR; EXPose: Crosslier Detection Based on Supervised Category Modeling

Automatic Classification of Human Granulosa Cells in Assisted Reproductive Technology Using Vibrational Spectroscopy Imaging

Marina Paolanti, Emanuele Frontoni, Giorgia Gioacchini, Giorgini Elisabetta, Notarstefano Valentina, Zacà Carlotta, Carnevali Oliana, Andrea Borini, Marco Mameli

Auto-TLDR; Predicting Oocyte Quality in Assisted Reproductive Technology Using Machine Learning Techniques

Dual-Memory Model for Incremental Learning: The Handwriting Recognition Use Case

Mélanie Piot, Bérangère Bourdoulous, Aurelia Deshayes, Lionel Prevost

Auto-TLDR; A dual memory model for handwriting recognition

Enhancing Deep Semantic Segmentation of RGB-D Data with Entangled Forests

Matteo Terreran, Elia Bonetto, Stefano Ghidoni

Auto-TLDR; FuseNet: A Lighter Deep Learning Model for Semantic Segmentation

Learning Embeddings for Image Clustering: An Empirical Study of Triplet Loss Approaches

Kalun Ho, Janis Keuper, Franz-Josef Pfreundt, Margret Keuper

Auto-TLDR; Clustering Objectives for K-means and Correlation Clustering Using Triplet Loss

Categorizing the Feature Space for Two-Class Imbalance Learning

Rosa Sicilia, Ermanno Cordelli, Paolo Soda

Auto-TLDR; Efficient Ensemble of Classifiers for Minority Class Inference

An Empirical Bayes Approach to Topic Modeling

Auto-TLDR; An Empirical Bayes Based Framework for Topic Modeling in Documents

Deep Convolutional Embedding for Digitized Painting Clustering

Giovanna Castellano, Gennaro Vessio

Auto-TLDR; A Deep Convolutional Embedding Model for Clustering Artworks

Graph Spectral Feature Learning for Mixed Data of Categorical and Numerical Type

Saswata Sahoo, Souradip Chakraborty

Auto-TLDR; Feature Learning for Mixed-Type Variables via an Undirected Graph

Factor Screening Using Bayesian Active Learning and Gaussian Process Meta-Modelling

Cheng Li, Santu Rana, Andrew William Gill, Dang Nguyen, Sunil Kumar Gupta, Svetha Venkatesh

Auto-TLDR; Data-Efficient Bayesian Active Learning for Factor Screening in Combat Simulations

GPSRL: Learning Semi-Parametric Bayesian Survival Rule Lists from Heterogeneous Patient Data

Ameer Hamza Shakur, Xiaoning Qian, Zhangyang Wang, Bobak Mortazavi, Shuai Huang

Auto-TLDR; Semi-parametric Bayesian Survival Rule List Model for Heterogeneous Survival Data

Watermelon: A Novel Feature Selection Method Based on Bayes Error Rate Estimation and a New Interpretation of Feature Relevance and Redundancy

Auto-TLDR; Feature Selection Using Bayes Error Rate Estimation for Dynamic Feature Selection

Scalable Direction-Search-Based Approach to Subspace Clustering

Auto-TLDR; Fast Direction-Search-Based Subspace Clustering

Relative Feature Importance

Gunnar König, Christoph Molnar, Bernd Bischl, Moritz Grosse-Wentrup

Auto-TLDR; Relative Feature Importance for Interpretable Machine Learning

Cluster-Size Constrained Network Partitioning

Maksim Mironov, Konstantin Avrachenkov

Auto-TLDR; Unsupervised Graph Clustering with Stochastic Block Model

How to Define a Rejection Class Based on Model Learning?

Sarah Laroui, Xavier Descombes, Aurelia Vernay, Florent Villiers, Francois Villalba, Eric Debreuve

Auto-TLDR; An innovative learning strategy for supervised classification that is able, by design, to reject a sample as not belonging to any of the known classes

Assortative-Constrained Stochastic Block Models

Daniel Gribel, Thibaut Vidal, Michel Gendreau

Auto-TLDR; Constrained Stochastic Block Models for Assortative Communities in Neural Networks

One Step Clustering Based on A-Contrario Framework for Detection of Alterations in Historical Violins

Alireza Rezaei, Sylvie Le Hégarat-Mascle, Emanuel Aldea, Piercarlo Dondi, Marco Malagodi

Auto-TLDR; A-Contrario Clustering for the Detection of Altered Violins using UVIFL Images

Comparison of Stacking-Based Classifier Ensembles Using Euclidean and Riemannian Geometries

Vitaliy Tayanov, Adam Krzyzak, Ching Y Suen

Auto-TLDR; Classifier Stacking in Riemannian Geometries using Cascades of Random Forest and Extra Trees

Bayesian Active Learning for Maximal Information Gain on Model Parameters

Kasra Arnavaz, Aasa Feragen, Oswin Krause, Marco Loog

Auto-TLDR; Bayesian assumptions for Bayesian classification

A Novel Adaptive Minority Oversampling Technique for Improved Classification in Data Imbalanced Scenarios

Ayush Tripathi, Rupayan Chakraborty, Sunil Kumar Kopparapu

Auto-TLDR; Synthetic Minority OverSampling Technique for Imbalanced Data

Encoding Brain Networks through Geodesic Clustering of Functional Connectivity for Multiple Sclerosis Classification

Muhammad Abubakar Yamin, Valsasina Paola, Michael Dayan, Sebastiano Vascon, Tessadori Jacopo, Filippi Massimo, Vittorio Murino, A Rocca Maria, Diego Sona

Auto-TLDR; Geodesic Clustering of Connectivity Matrices for Multiple Sclerosis Classification

Abstract Slides Poster Similar

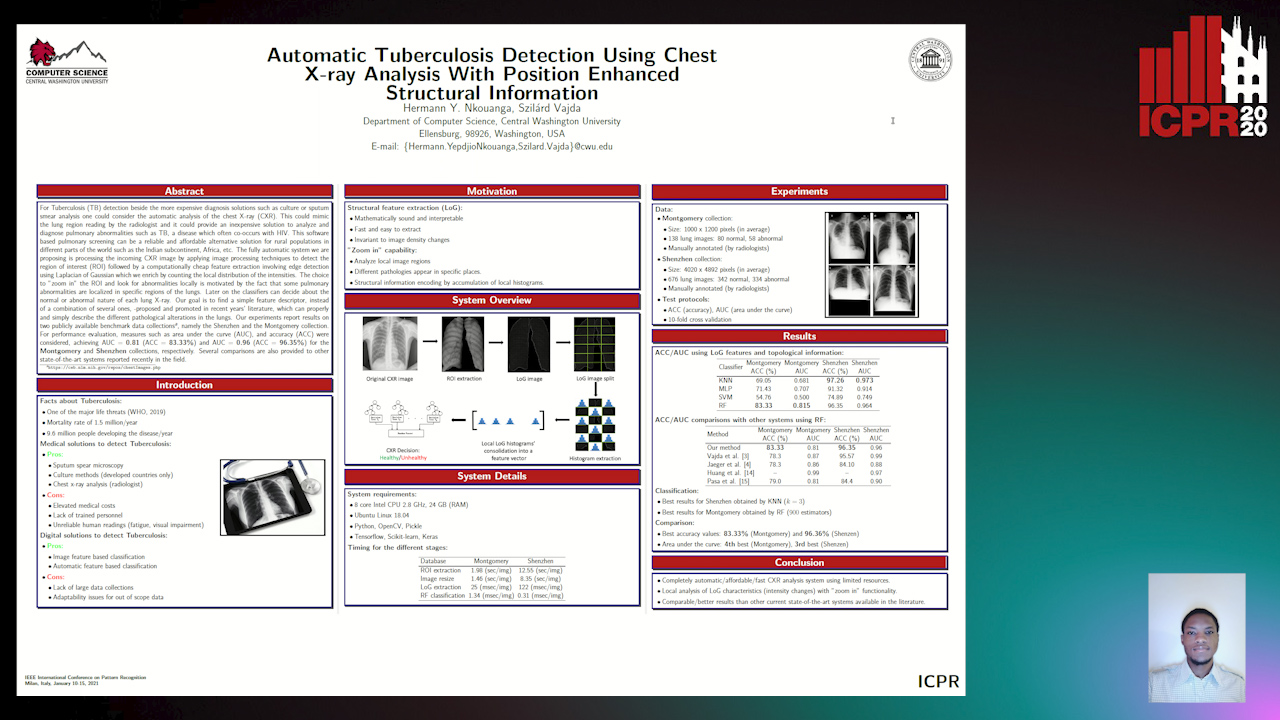

Automatic Tuberculosis Detection Using Chest X-Ray Analysis with Position Enhanced Structural Information

Hermann Jepdjio Nkouanga, Szilard Vajda

Auto-TLDR; Automatic Chest X-ray Screening for Tuberculosis in Rural Population using Localized Region of Interest

Using Meta Labels for the Training of Weighting Models in a Sample-Specific Late Fusion Classification Architecture

Peter Bellmann, Patrick Thiam, Friedhelm Schwenker

Auto-TLDR; A Late Fusion Architecture for Multiple Classifier Systems

A Cheaper Rectified-Nearest-Feature-Line-Segment Classifier Based on Safe Points

Mauricio Orozco-Alzate, Manuele Bicego

Auto-TLDR; Rectified Nearest Feature Line Segment Classifier

Motion Segmentation with Pairwise Matches and Unknown Number of Motions

Federica Arrigoni, Tomas Pajdla, Luca Magri

Auto-TLDR; Motion Segmentation using Multi-Model Fitting and Permutation Synchronization

N2D: (Not Too) Deep Clustering Via Clustering the Local Manifold of an Autoencoded Embedding

Ryan Mcconville, Raul Santos-Rodriguez, Robert Piechocki, Ian Craddock

Auto-TLDR; Local Manifold Learning for Deep Clustering on Autoencoded Embeddings

Supervised Classification Using Graph-Based Space Partitioning for Multiclass Problems

Nicola Yanev, Ventzeslav Valev, Adam Krzyzak, Karima Ben Suliman

Auto-TLDR; Box Classifier for Multiclass Classification

On Morphological Hierarchies for Image Sequences

Caglayan Tuna, Alain Giros, François Merciol, Sébastien Lefèvre

Auto-TLDR; Comparison of Hierarchies for Image Sequences

Position-Aware Safe Boundary Interpolation Oversampling

Auto-TLDR; PABIO: Position-Aware Safe Boundary Interpolation-Based Oversampling for Imbalanced Data

Deep Transfer Learning for Alzheimer’s Disease Detection

Nicole Cilia, Claudio De Stefano, Francesco Fontanella, Claudio Marrocco, Mario Molinara, Alessandra Scotto Di Freca

Auto-TLDR; Automatic Detection of Handwriting Alterations for Alzheimer's Disease Diagnosis using Dynamic Features

Sparse-Dense Subspace Clustering

Shuai Yang, Wenqi Zhu, Yuesheng Zhu

Auto-TLDR; Sparse-Dense Subspace Clustering with Piecewise Correlation Estimation

Constrained Spectral Clustering Network with Self-Training

Xinyue Liu, Shichong Yang, Linlin Zong

Auto-TLDR; Constrained Spectral Clustering Network: A Constrained Deep Spectral Clustering Network

Weakly Supervised Learning through Rank-Based Contextual Measures

João Gabriel Camacho Presotto, Lucas Pascotti Valem, Nikolas Gomes De Sá, Daniel Carlos Guimaraes Pedronette, Joao Paulo Papa

Auto-TLDR; Exploiting Unlabeled Data for Weakly Supervised Classification of Multimedia Data
