Sketch-Based Community Detection Via Representative Node Sampling

Mahlagha Sedghi, Andre Beckus, George Atia

Auto-TLDR; Sketch-Based Community Detection Using a Small Sketch

Similar papers

Scalable Direction-Search-Based Approach to Subspace Clustering

Auto-TLDR; Fast Direction-Search-Based Subspace Clustering

Cluster-Size Constrained Network Partitioning

Maksim Mironov, Konstantin Avrachenkov

Auto-TLDR; Unsupervised Graph Clustering with Stochastic Block Model

Wasserstein k-Means with Sparse Simplex Projection

Takumi Fukunaga, Hiroyuki Kasai

Auto-TLDR; SSPW $k$-means: Sparse Simplex Projection-based Wasserstein $k$-Means Algorithm

Assortative-Constrained Stochastic Block Models

Daniel Gribel, Thibaut Vidal, Michel Gendreau

Auto-TLDR; Constrained Stochastic Block Models for Assortative Communities in Neural Networks

Temporal Pattern Detection in Time-Varying Graphical Models

Federico Tomasi, Veronica Tozzo, Annalisa Barla

Auto-TLDR; A dynamical network inference model that leverages kernels to consider general temporal patterns

Low Rank Representation on Product Grassmann Manifolds for Multi-view Subspace Clustering

Jipeng Guo, Yanfeng Sun, Junbin Gao, Yongli Hu, Baocai Yin

Auto-TLDR; Low Rank Representation on Product Grassmann Manifold for Multi-View Data Clustering

Aggregating Dependent Gaussian Experts in Local Approximation

Auto-TLDR; A novel approach for aggregating the Gaussian experts by detecting strong violations of conditional independence

Region and Relations Based Multi Attention Network for Graph Classification

Manasvi Aggarwal, M. Narasimha Murty

Auto-TLDR; R2POOL: A Graph Pooling Layer for Non-euclidean Structures

A General Model for Learning Node and Graph Representations Jointly

Auto-TLDR; Joint Community Detection/Dynamic Routing for Graph Classification

A Novel Adaptive Minority Oversampling Technique for Improved Classification in Data Imbalanced Scenarios

Ayush Tripathi, Rupayan Chakraborty, Sunil Kumar Kopparapu

Auto-TLDR; Synthetic Minority OverSampling Technique for Imbalanced Data

Active Sampling for Pairwise Comparisons via Approximate Message Passing and Information Gain Maximization

Aliaksei Mikhailiuk, Clifford Wilmot, Maria Perez-Ortiz, Dingcheng Yue, Rafal Mantiuk

Auto-TLDR; ASAP: An Active Sampling Algorithm for Pairwise Comparison Data

Encoding Brain Networks through Geodesic Clustering of Functional Connectivity for Multiple Sclerosis Classification

Muhammad Abubakar Yamin, Valsasina Paola, Michael Dayan, Sebastiano Vascon, Tessadori Jacopo, Filippi Massimo, Vittorio Murino, A Rocca Maria, Diego Sona

Auto-TLDR; Geodesic Clustering of Connectivity Matrices for Multiple Sclerosis Classification

Classification and Feature Selection Using a Primal-Dual Method and Projections on Structured Constraints

Michel Barlaud, Antonin Chambolle, Jean-Baptiste Caillau

Auto-TLDR; A Constrained Primal-dual Method for Structured Feature Selection on High Dimensional Data

Fast Subspace Clustering Based on the Kronecker Product

Lei Zhou, Xiao Bai, Liang Zhang, Jun Zhou, Edwin Hancock

Auto-TLDR; Subspace Clustering with Kronecker Product for Large Scale Datasets

A Multi-Task Multi-View Based Multi-Objective Clustering Algorithm

Auto-TLDR; MTMV-MO: Multi-task multi-view multi-objective optimization for multi-task clustering

Edge-Aware Graph Attention Network for Ratio of Edge-User Estimation in Mobile Networks

Jiehui Deng, Sheng Wan, Xiang Wang, Enmei Tu, Xiaolin Huang, Jie Yang, Chen Gong

Auto-TLDR; EAGAT: Edge-Aware Graph Attention Network for Automatic REU Estimation in Mobile Networks

Abstract Slides Poster Similar

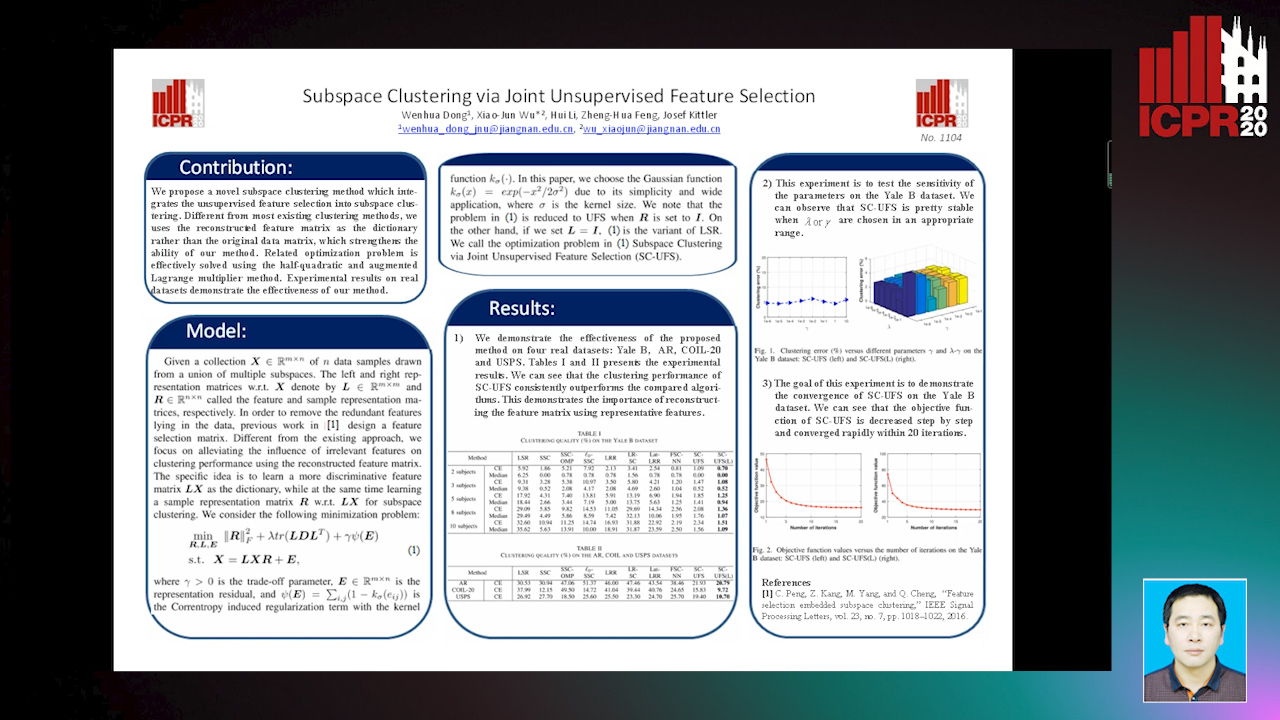

Subspace Clustering Via Joint Unsupervised Feature Selection

Wenhua Dong, Xiaojun Wu, Hui Li, Zhenhua Feng, Josef Kittler

Auto-TLDR; Unsupervised Feature Selection for Subspace Clustering

PIF: Anomaly detection via preference embedding

Filippo Leveni, Luca Magri, Giacomo Boracchi, Cesare Alippi

Auto-TLDR; PIF: Anomaly Detection with Preference Embedding for Structured Patterns

Equation Attention Relationship Network (EARN): A Geometric Deep Metric Framework for Learning Similar Math Expression Embedding

Saleem Ahmed, Kenny Davila, Srirangaraj Setlur, Venu Govindaraju

Auto-TLDR; Representational Learning for Similarity Based Retrieval of Mathematical Expressions

Learning Connectivity with Graph Convolutional Networks

Auto-TLDR; Learning Graph Convolutional Networks Using Topological Properties of Graphs

On Learning Random Forests for Random Forest Clustering

Manuele Bicego, Francisco Escolano

Auto-TLDR; Learning Random Forests for Clustering

Unconstrained Vision Guided UAV Based Safe Helicopter Landing

Arindam Sikdar, Abhimanyu Sahu, Debajit Sen, Rohit Mahajan, Ananda Chowdhury

Auto-TLDR; Autonomous Helicopter Landing in Hazardous Environments from Unmanned Aerial Images Using Constrained Graph Clustering

3CS Algorithm for Efficient Gaussian Process Model Retrieval

Fabian Berns, Kjeld Schmidt, Ingolf Bracht, Christian Beecks

Auto-TLDR; Efficient retrieval of Gaussian Process Models for large-scale data using a divide-and-conquer approach

Motion Segmentation with Pairwise Matches and Unknown Number of Motions

Federica Arrigoni, Tomas Pajdla, Luca Magri

Auto-TLDR; Motion Segmentation Using Multi-Model Fitting and Permutation Synchronization

Graph Convolutional Neural Networks for Power Line Outage Identification

Auto-TLDR; Graph Convolutional Networks for Power Line Outage Identification

Label Self-Adaption Hashing for Image Retrieval

Jianglin Lu, Zhihui Lai, Hailing Wang, Jie Zhou

Auto-TLDR; Label Self-Adaption Hashing for Large-Scale Image Retrieval

Sparse-Dense Subspace Clustering

Shuai Yang, Wenqi Zhu, Yuesheng Zhu

Auto-TLDR; Sparse-Dense Subspace Clustering with Piecewise Correlation Estimation

Double Manifolds Regularized Non-Negative Matrix Factorization for Data Representation

Jipeng Guo, Shuai Yin, Yanfeng Sun, Yongli Hu

Auto-TLDR; Double Manifolds Regularized Non-negative Matrix Factorization for Clustering

Learning Embeddings for Image Clustering: An Empirical Study of Triplet Loss Approaches

Kalun Ho, Janis Keuper, Franz-Josef Pfreundt, Margret Keuper

Auto-TLDR; Clustering Objectives for K-means and Correlation Clustering Using Triplet Loss

Uniform and Non-Uniform Sampling Methods for Sub-Linear Time K-Means Clustering

Auto-TLDR; Sub-linear Time Clustering with Constant Approximation Ratio for K-Means Problem

On the Global Self-attention Mechanism for Graph Convolutional Networks

Auto-TLDR; Global Self-Attention Mechanism for Graph Convolutional Networks

Graph Spectral Feature Learning for Mixed Data of Categorical and Numerical Type

Saswata Sahoo, Souradip Chakraborty

Auto-TLDR; Feature Learning for Mixed-Type Variables Using an Undirected Graph

Deep Convolutional Embedding for Digitized Painting Clustering

Giovanna Castellano, Gennaro Vessio

Auto-TLDR; A Deep Convolutional Embedding Model for Clustering Artworks

A Spectral Clustering on Grassmann Manifold Via Double Low Rank Constraint

Xinglin Piao, Yongli Hu, Junbin Gao, Yanfeng Sun, Xin Yang, Baocai Yin

Auto-TLDR; Double Low Rank Representation for High-Dimensional Data Clustering on Grassmann Manifold

GraphBGS: Background Subtraction Via Recovery of Graph Signals

Jhony Heriberto Giraldo Zuluaga, Thierry Bouwmans

Auto-TLDR; Graph BackGround Subtraction using Graph Signals

Tensor Factorization of Brain Structural Graph for Unsupervised Classification in Multiple Sclerosis

Berardino Barile, Marzullo Aldo, Claudio Stamile, Françoise Durand-Dubief, Dominique Sappey-Marinier

Auto-TLDR; A Fully Automated Tensor-based Algorithm for Multiple Sclerosis Classification based on Structural Connectivity Graph of the White Matter Network

AOAM: Automatic Optimization of Adjacency Matrix for Graph Convolutional Network

Yuhang Zhang, Hongshuai Ren, Jiexia Ye, Xitong Gao, Yang Wang, Kejiang Ye, Cheng-Zhong Xu

Auto-TLDR; Adjacency Matrix for Graph Convolutional Network in Non-Euclidean Space

Kernel-based Graph Convolutional Networks

Auto-TLDR; Spatial Graph Convolutional Networks in Reproducing Kernel Hilbert Space

Siamese Graph Convolution Network for Face Sketch Recognition

Liang Fan, Xianfang Sun, Paul Rosin

Auto-TLDR; A novel Siamese graph convolution network for face sketch recognition

Unveiling Groups of Related Tasks in Multi-Task Learning

Jordan Frecon, Saverio Salzo, Massimiliano Pontil

Auto-TLDR; Continuous Bilevel Optimization for Multi-Task Learning

A Heuristic-Based Decision Tree for Connected Components Labeling of 3D Volumes

Maximilian Söchting, Stefano Allegretti, Federico Bolelli, Costantino Grana

Auto-TLDR; Entropy Partitioning Decision Tree for Connected Components Labeling

Abstract Slides Poster Similar

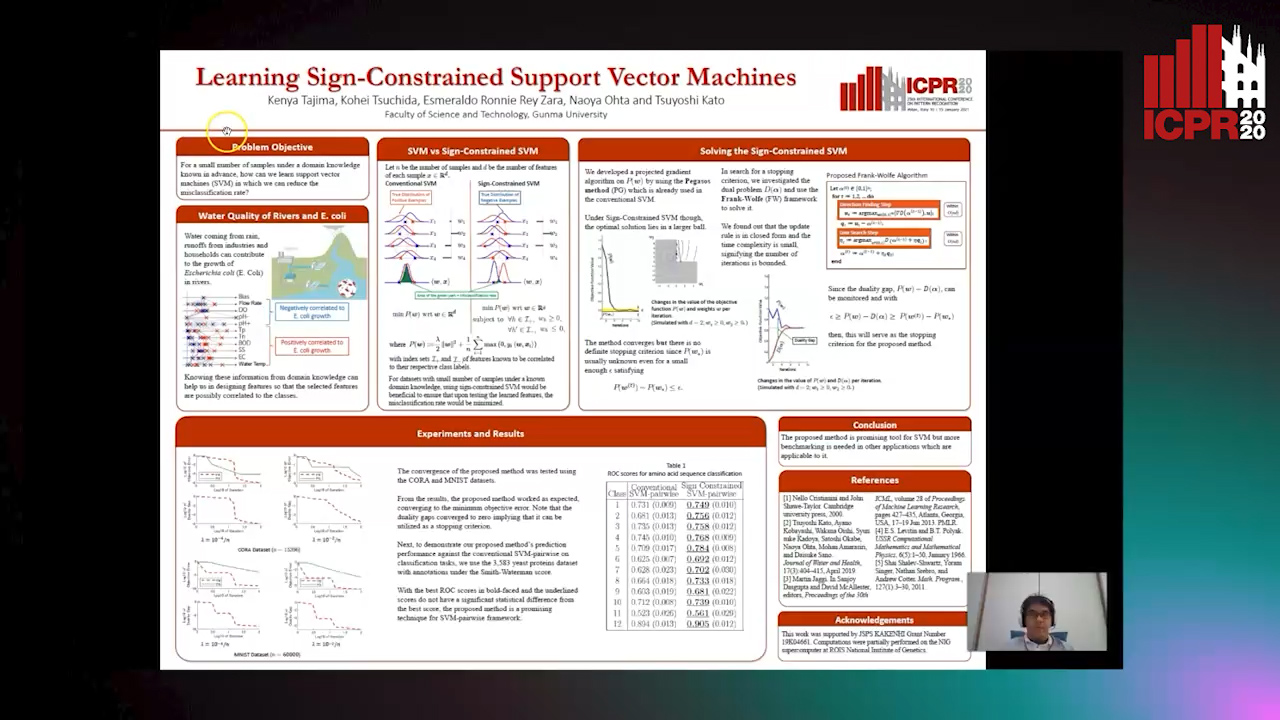

Learning Sign-Constrained Support Vector Machines

Kenya Tajima, Kouhei Tsuchida, Esmeraldo Ronnie Rey Zara, Naoya Ohta, Tsuyoshi Kato

Auto-TLDR; Sign Constraints for Learning Linear Support Vector Machines

Improved Time-Series Clustering with UMAP Dimension Reduction Method

Clément Pealat, Vincent Cheutet, Guillaume Bouleux

Auto-TLDR; Time Series Clustering with UMAP as a Pre-processing Step

Proximity Isolation Forests

Antonella Mensi, Manuele Bicego, David Tax

Auto-TLDR; Proximity Isolation Forests for Non-vectorial Data

Soft Label and Discriminant Embedding Estimation for Semi-Supervised Classification

Fadi Dornaika, Abdullah Baradaaji, Youssof El Traboulsi

Auto-TLDR; Semi-Supervised Learning for Linear Feature Extraction and Label Propagation

Anime Sketch Colorization by Component-Based Matching Using Deep Appearance Features and Graph Representation

Thien Do, Pham Van, Anh Nguyen, Trung Dang, Quoc Nguyen, Bach Hoang, Giao Nguyen

Auto-TLDR; Combining Deep Learning and Graph Representation for Sketch Colorization

Constrained Spectral Clustering Network with Self-Training

Xinyue Liu, Shichong Yang, Linlin Zong

Auto-TLDR; Constrained Spectral Clustering Network: A Constrained Deep spectral clustering network

Mean Decision Rules Method with Smart Sampling for Fast Large-Scale Binary SVM Classification

Alexandra Makarova, Mikhail Kurbakov, Valentina Sulimova

Auto-TLDR; Improving Mean Decision Rule for Large-Scale Binary SVM Problems
