Double Manifolds Regularized Non-Negative Matrix Factorization for Data Representation

Jipeng Guo,

Shuai Yin,

Yanfeng Sun,

Yongli Hu

Auto-TLDR; Double Manifolds Regularized Non-negative Matrix Factorization for Clustering

Similar papers

Low Rank Representation on Product Grassmann Manifolds for Multi-view Subspace Clustering

Jipeng Guo, Yanfeng Sun, Junbin Gao, Yongli Hu, Baocai Yin

Auto-TLDR; Low Rank Representation on Product Grassmann Manifold for Multi-View Data Clustering

A Spectral Clustering on Grassmann Manifold Via Double Low Rank Constraint

Xinglin Piao, Yongli Hu, Junbin Gao, Yanfeng Sun, Xin Yang, Baocai Yin

Auto-TLDR; Double Low Rank Representation for High-Dimensional Data Clustering on Grassmann Manifold

Embedding Shared Low-Rank and Feature Correlation for Multi-View Data Analysis

Zhan Wang, Lizhi Wang, Hua Huang

Auto-TLDR; embedding shared low-rank and feature correlation for multi-view data analysis

Abstract Slides Poster Similar

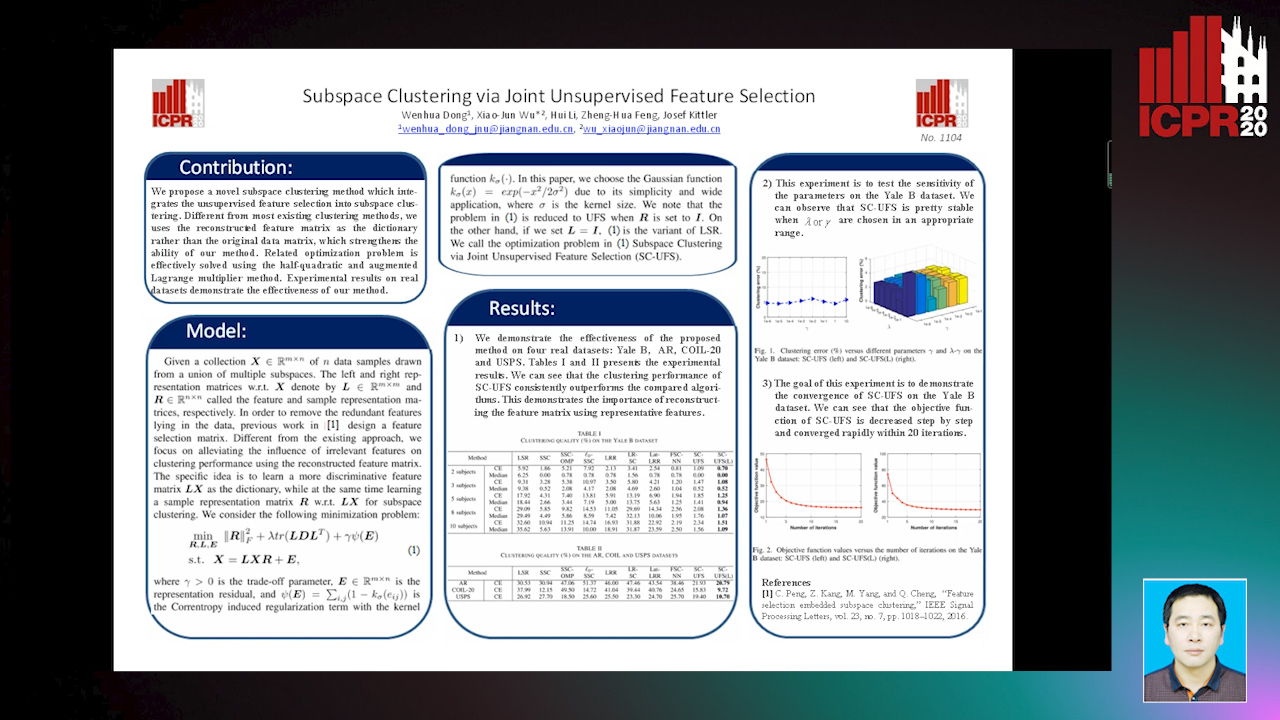

Subspace Clustering Via Joint Unsupervised Feature Selection

Wenhua Dong, Xiaojun Wu, Hui Li, Zhenhua Feng, Josef Kittler

Auto-TLDR; Unsupervised Feature Selection for Subspace Clustering

Fast Subspace Clustering Based on the Kronecker Product

Lei Zhou, Xiao Bai, Liang Zhang, Jun Zhou, Edwin Hancock

Auto-TLDR; Subspace Clustering with Kronecker Product for Large Scale Datasets

Soft Label and Discriminant Embedding Estimation for Semi-Supervised Classification

Fadi Dornaika, Abdullah Baradaaji, Youssof El Traboulsi

Auto-TLDR; Semi-Supervised Learning for Linear Feature Extraction and Label Propagation

Label Self-Adaption Hashing for Image Retrieval

Jianglin Lu, Zhihui Lai, Hailing Wang, Jie Zhou

Auto-TLDR; Label Self-Adaption Hashing for Large-Scale Image Retrieval

Sparse-Dense Subspace Clustering

Shuai Yang, Wenqi Zhu, Yuesheng Zhu

Auto-TLDR; Sparse-Dense Subspace Clustering with Piecewise Correlation Estimation

Fast Discrete Cross-Modal Hashing Based on Label Relaxation and Matrix Factorization

Donglin Zhang, Xiaojun Wu, Zhen Liu, Jun Yu, Josef Kittler

Auto-TLDR; LRMF: Label Relaxation and Discrete Matrix Factorization for Cross-Modal Retrieval

Variational Deep Embedding Clustering by Augmented Mutual Information Maximization

Qiang Ji, Yanfeng Sun, Yongli Hu, Baocai Yin

Auto-TLDR; Clustering by Augmented Mutual Information maximization for Deep Embedding

T-SVD Based Non-Convex Tensor Completion and Robust Principal Component Analysis

Auto-TLDR; Non-Convex tensor rank surrogate function and non-convex sparsity measure for tensor recovery

A Multi-Task Multi-View Based Multi-Objective Clustering Algorithm

Auto-TLDR; MTMV-MO: Multi-task multi-view multi-objective optimization for multi-task clustering

Discrete Semantic Matrix Factorization Hashing for Cross-Modal Retrieval

Jianyang Qin, Lunke Fei, Shaohua Teng, Wei Zhang, Genping Zhao, Haoliang Yuan

Auto-TLDR; Discrete Semantic Matrix Factorization Hashing for Cross-Modal Retrieval

Deep Topic Modeling by Multilayer Bootstrap Network and Lasso

Auto-TLDR; Unsupervised Deep Topic Modeling with Multilayer Bootstrap Network and Lasso

Scalable Direction-Search-Based Approach to Subspace Clustering

Auto-TLDR; Fast Direction-Search-Based Subspace Clustering

Constrained Spectral Clustering Network with Self-Training

Xinyue Liu, Shichong Yang, Linlin Zong

Auto-TLDR; Constrained Spectral Clustering Network: A Constrained Deep spectral clustering network

Feature Extraction by Joint Robust Discriminant Analysis and Inter-Class Sparsity

Auto-TLDR; Robust Discriminant Analysis with Feature Selection and Inter-class Sparsity (RDA_FSIS)

Feature Extraction and Selection Via Robust Discriminant Analysis and Class Sparsity

Auto-TLDR; Hybrid Linear Discriminant Embedding for supervised multi-class classification

Abstract Slides Poster Similar

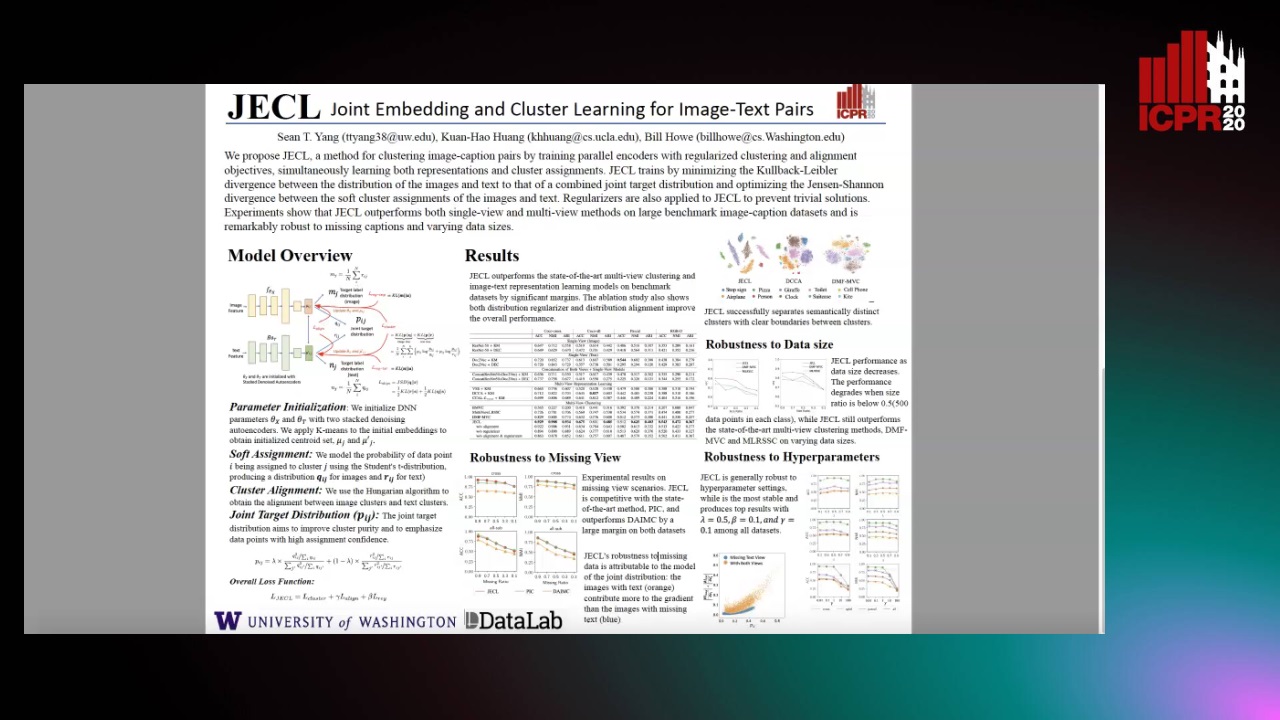

JECL: Joint Embedding and Cluster Learning for Image-Text Pairs

Sean Yang, Kuan-Hao Huang, Bill Howe

Auto-TLDR; JECL: Clustering Image-Caption Pairs with Parallel Encoders and Regularized Clusters

Graph Spectral Feature Learning for Mixed Data of Categorical and Numerical Type

Saswata Sahoo, Souradip Chakraborty

Auto-TLDR; Feature Learning in Mixed Type of Variable by an undirected graph

Abstract Slides Poster Similar

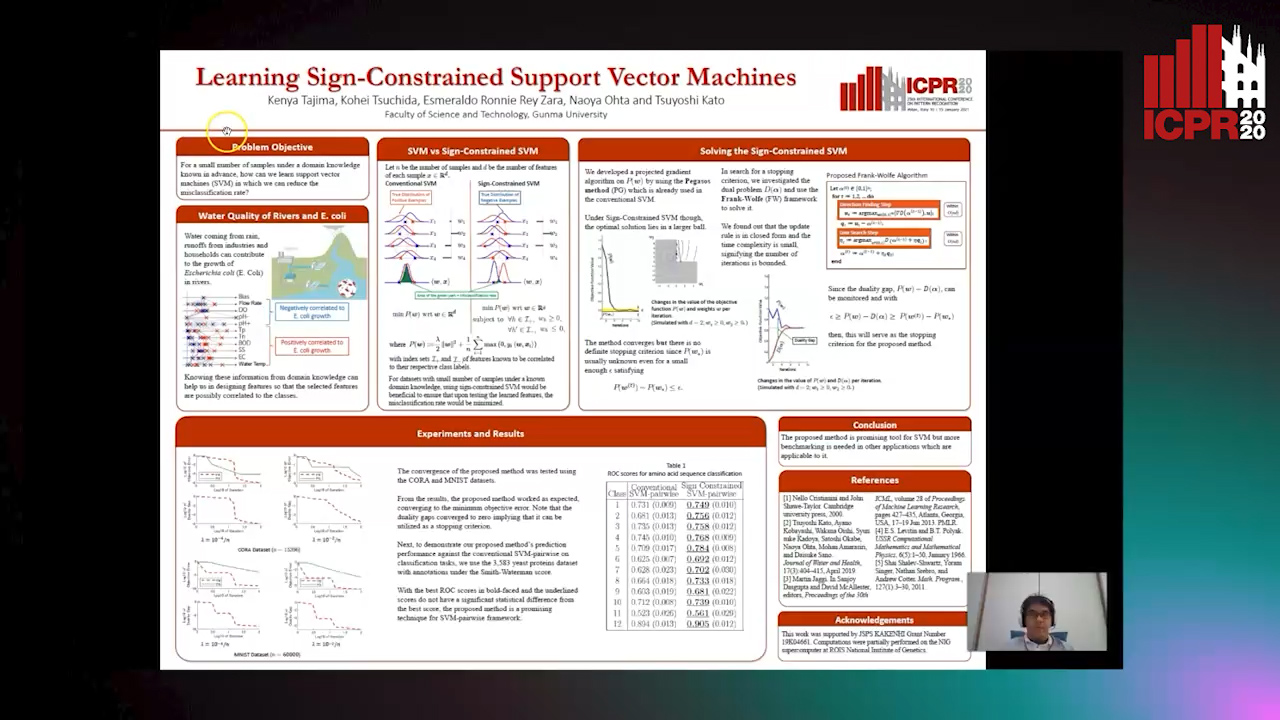

Learning Sign-Constrained Support Vector Machines

Kenya Tajima, Kouhei Tsuchida, Esmeraldo Ronnie Rey Zara, Naoya Ohta, Tsuyoshi Kato

Auto-TLDR; Constrained Sign Constraints for Learning Linear Support Vector Machine

Ultrasound Image Restoration Using Weighted Nuclear Norm Minimization

Hanmei Yang, Ye Luo, Jianwei Lu, Jian Lu

Auto-TLDR; A Nonconvex Low-Rank Matrix Approximation Model for Ultrasound Images Restoration

Sketch-Based Community Detection Via Representative Node Sampling

Mahlagha Sedghi, Andre Beckus, George Atia

Auto-TLDR; Sketch-based Clustering of Community Detection Using a Small Sketch

Subspace Clustering for Action Recognition with Covariance Representations and Temporal Pruning

Giancarlo Paoletti, Jacopo Cavazza, Cigdem Beyan, Alessio Del Bue

Auto-TLDR; Unsupervised Learning for Human Action Recognition from Skeletal Data

Feature-Aware Unsupervised Learning with Joint Variational Attention and Automatic Clustering

Wang Ru, Lin Li, Peipei Wang, Liu Peiyu

Auto-TLDR; Deep Variational Attention Encoder-Decoder for Clustering

Wasserstein k-Means with Sparse Simplex Projection

Takumi Fukunaga, Hiroyuki Kasai

Auto-TLDR; SSPW $k$-means: Sparse Simplex Projection-based Wasserstein $k$-Means Algorithm

A Randomized Algorithm for Sparse Recovery

Huiyuan Yu, Maggie Cheng, Yingdong Lu

Auto-TLDR; A Constrained Graph Optimization Algorithm for Sparse Signal Recovery

Probabilistic Latent Factor Model for Collaborative Filtering with Bayesian Inference

Jiansheng Fang, Xiaoqing Zhang, Yan Hu, Yanwu Xu, Ming Yang, Jiang Liu

Auto-TLDR; Bayesian Latent Factor Model for Collaborative Filtering

Classification and Feature Selection Using a Primal-Dual Method and Projections on Structured Constraints

Michel Barlaud, Antonin Chambolle, Jean-Baptiste Caillau

Auto-TLDR; A Constrained Primal-dual Method for Structured Feature Selection on High Dimensional Data

Webly Supervised Image-Text Embedding with Noisy Tag Refinement

Niluthpol Mithun, Ravdeep Pasricha, Evangelos Papalexakis, Amit Roy-Chowdhury

Auto-TLDR; Robust Joint Embedding for Image-Text Retrieval Using Web Images

Snapshot Hyperspectral Imaging Based on Weighted High-Order Singular Value Regularization

Hua Huang, Cheng Niankai, Lizhi Wang

Auto-TLDR; High-Order Tensor Optimization for Hyperspectral Imaging

Joint Learning Multiple Curvature Descriptor for 3D Palmprint Recognition

Lunke Fei, Bob Zhang, Jie Wen, Chunwei Tian, Peng Liu, Shuping Zhao

Auto-TLDR; Joint Feature Learning for 3D palmprint recognition using curvature data vectors

Low-Cost Lipschitz-Independent Adaptive Importance Sampling of Stochastic Gradients

Huikang Liu, Xiaolu Wang, Jiajin Li, Man-Cho Anthony So

Auto-TLDR; Adaptive Importance Sampling for Stochastic Gradient Descent

Exploiting Elasticity in Tensor Ranks for Compressing Neural Networks

Jie Ran, Rui Lin, Hayden Kwok-Hay So, Graziano Chesi, Ngai Wong

Auto-TLDR; Nuclear-Norm Rank Minimization Factorization for Deep Neural Networks

Randomized Transferable Machine

Auto-TLDR; Randomized Transferable Machine for Suboptimal Feature-based Transfer Learning

N2D: (Not Too) Deep Clustering Via Clustering the Local Manifold of an Autoencoded Embedding

Ryan Mcconville, Raul Santos-Rodriguez, Robert Piechocki, Ian Craddock

Auto-TLDR; Local Manifold Learning for Deep Clustering on Autoencoded Embeddings

Unveiling Groups of Related Tasks in Multi-Task Learning

Jordan Frecon, Saverio Salzo, Massimiliano Pontil

Auto-TLDR; Continuous Bilevel Optimization for Multi-Task Learning

Object Classification of Remote Sensing Images Based on Optimized Projection Supervised Discrete Hashing

Qianqian Zhang, Yazhou Liu, Quansen Sun

Auto-TLDR; Optimized Projection Supervised Discrete Hashing for Large-Scale Remote Sensing Image Object Classification

Supervised Feature Embedding for Classification by Learning Rank-Based Neighborhoods

Ghazaal Sheikhi, Hakan Altincay

Auto-TLDR; Supervised Feature Embedding with Representation Learning of Rank-based Neighborhoods

Learning Connectivity with Graph Convolutional Networks

Auto-TLDR; Learning Graph Convolutional Networks Using Topological Properties of Graphs

Edge-Aware Graph Attention Network for Ratio of Edge-User Estimation in Mobile Networks

Jiehui Deng, Sheng Wan, Xiang Wang, Enmei Tu, Xiaolin Huang, Jie Yang, Chen Gong

Auto-TLDR; EAGAT: Edge-Aware Graph Attention Network for Automatic REU Estimation in Mobile Networks

GraphBGS: Background Subtraction Via Recovery of Graph Signals

Jhony Heriberto Giraldo Zuluaga, Thierry Bouwmans

Auto-TLDR; Graph BackGround Subtraction using Graph Signals

An Intransitivity Model for Matchup and Pairwise Comparison

Yan Gu, Jiuding Duan, Hisashi Kashima

Auto-TLDR; Blade-Chest: A Low-Rank Matrix Approach for Probabilistic Ranking of Players

Generative Deep-Neural-Network Mixture Modeling with Semi-Supervised MinMax+EM Learning

Auto-TLDR; Semi-supervised Deep Neural Networks for Generative Mixture Modeling and Clustering

Temporal Pattern Detection in Time-Varying Graphical Models

Federico Tomasi, Veronica Tozzo, Annalisa Barla

Auto-TLDR; A dynamical network inference model that leverages on kernels to consider general temporal patterns

Deep Convolutional Embedding for Digitized Painting Clustering

Giovanna Castellano, Gennaro Vessio

Auto-TLDR; A Deep Convolutional Embedding Model for Clustering Artworks

Learning Sparse Deep Neural Networks Using Efficient Structured Projections on Convex Constraints for Green AI

Michel Barlaud, Frederic Guyard

Auto-TLDR; Constrained Deep Neural Network with Constrained Splitting Projection

Uniform and Non-Uniform Sampling Methods for Sub-Linear Time K-Means Clustering

Auto-TLDR; Sub-linear Time Clustering with Constant Approximation Ratio for K-Means Problem
