Learning Sparse Deep Neural Networks Using Efficient Structured Projections on Convex Constraints for Green AI

Michel Barlaud, Frederic Guyard

Auto-TLDR; Constrained Deep Neural Network with Constrained Splitting Projection

Similar papers

Classification and Feature Selection Using a Primal-Dual Method and Projections on Structured Constraints

Michel Barlaud, Antonin Chambolle, Jean-Baptiste Caillau

Auto-TLDR; A Constrained Primal-dual Method for Structured Feature Selection on High Dimensional Data

Abstract Slides Poster Similar

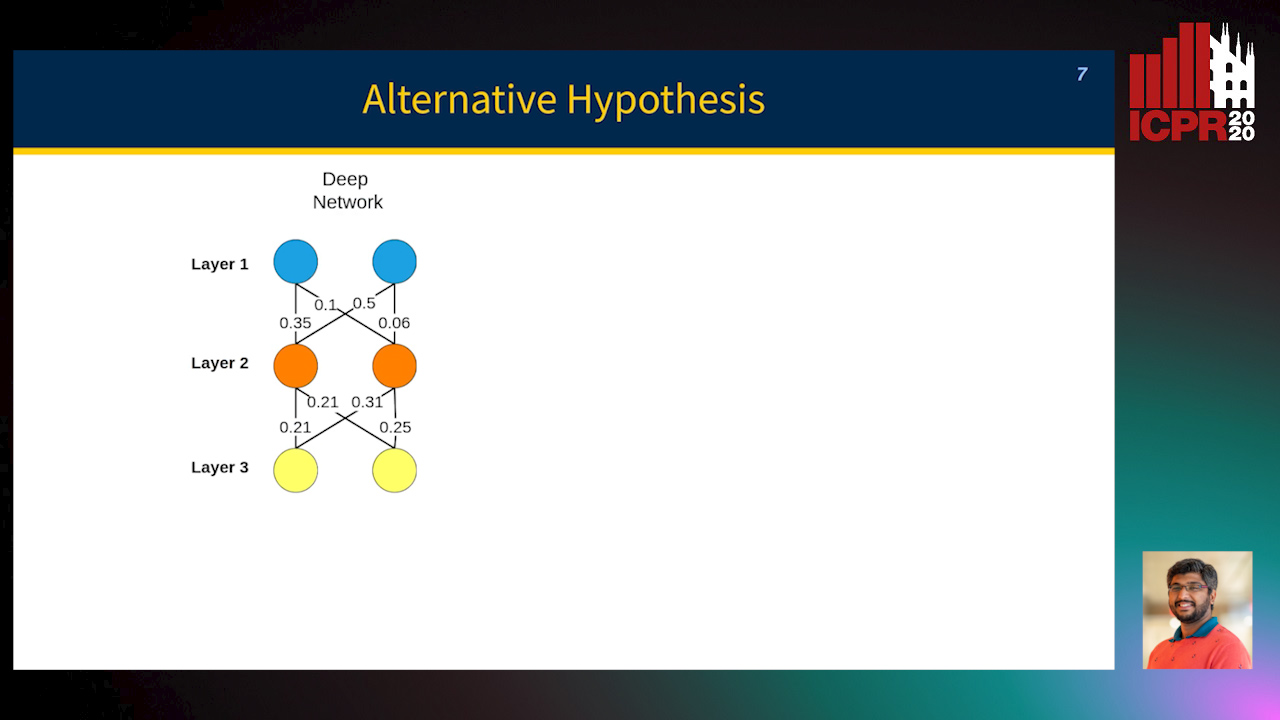

Exploiting Non-Linear Redundancy for Neural Model Compression

Muhammad Ahmed Shah, Raphael Olivier, Bhiksha Raj

Auto-TLDR; Compressing Deep Neural Networks with Linear Dependency

HFP: Hardware-Aware Filter Pruning for Deep Convolutional Neural Networks Acceleration

Fang Yu, Chuanqi Han, Pengcheng Wang, Ruoran Huang, Xi Huang, Li Cui

Auto-TLDR; Hardware-Aware Filter Pruning for Convolutional Neural Networks

Filter Pruning Using Hierarchical Group Sparse Regularization for Deep Convolutional Neural Networks

Auto-TLDR; Hierarchical Group Sparse Regularization for Sparse Convolutional Neural Networks

Progressive Gradient Pruning for Classification, Detection and Domain Adaptation

Le Thanh Nguyen-Meidine, Eric Granger, Marco Pedersoli, Madhu Kiran, Louis-Antoine Blais-Morin

Auto-TLDR; Progressive Gradient Pruning for Iterative Filter Pruning of Convolutional Neural Networks

Softer Pruning, Incremental Regularization

Linhang Cai, Zhulin An, Yongjun Xu

Auto-TLDR; Asymptotic SofteR Filter Pruning for Deep Neural Network Pruning

MINT: Deep Network Compression Via Mutual Information-Based Neuron Trimming

Madan Ravi Ganesh, Jason Corso, Salimeh Yasaei Sekeh

Auto-TLDR; Mutual Information-based Neuron Trimming for Deep Compression via Pruning

Learning to Prune in Training via Dynamic Channel Propagation

Shibo Shen, Rongpeng Li, Zhifeng Zhao, Honggang Zhang, Yugeng Zhou

Auto-TLDR; Dynamic Channel Propagation for Neural Network Pruning

RNN Training along Locally Optimal Trajectories via Frank-Wolfe Algorithm

Yun Yue, Ming Li, Venkatesh Saligrama, Ziming Zhang

Auto-TLDR; Frank-Wolfe Algorithm for Efficient Training of RNNs

Norm Loss: An Efficient yet Effective Regularization Method for Deep Neural Networks

Theodoros Georgiou, Sebastian Schmitt, Thomas Baeck, Wei Chen, Michael Lew

Auto-TLDR; Weight Soft-Regularization with Oblique Manifold for Convolutional Neural Network Training

A Discriminant Information Approach to Deep Neural Network Pruning

Auto-TLDR; Channel Pruning Using Discriminant Information and Reinforcement Learning

Exploiting Elasticity in Tensor Ranks for Compressing Neural Networks

Jie Ran, Rui Lin, Hayden Kwok-Hay So, Graziano Chesi, Ngai Wong

Auto-TLDR; Nuclear-Norm Rank Minimization Factorization for Deep Neural Networks

On the Information of Feature Maps and Pruning of Deep Neural Networks

Mohammadreza Soltani, Suya Wu, Jie Ding, Robert Ravier, Vahid Tarokh

Auto-TLDR; Compressing Deep Neural Models Using Mutual Information

Neuron-Based Network Pruning Based on Majority Voting

Ali Alqahtani, Xianghua Xie, Ehab Essa, Mark W. Jones

Auto-TLDR; Large-Scale Neural Network Pruning using Majority Voting

ResNet-Like Architecture with Low Hardware Requirements

Elena Limonova, Daniil Alfonso, Dmitry Nikolaev, Vladimir V. Arlazarov

Auto-TLDR; BM-ResNet: Bipolar Morphological ResNet for Image Classification

Slimming ResNet by Slimming Shortcut

Donggyu Joo, Doyeon Kim, Junmo Kim

Auto-TLDR; SSPruning: Slimming Shortcut Pruning on ResNet Based Networks

Compression Strategies and Space-Conscious Representations for Deep Neural Networks

Giosuè Marinò, Gregorio Ghidoli, Marco Frasca, Dario Malchiodi

Auto-TLDR; Compression of Large Convolutional Neural Networks by Weight Pruning and Quantization

Low-Cost Lipschitz-Independent Adaptive Importance Sampling of Stochastic Gradients

Huikang Liu, Xiaolu Wang, Jiajin Li, Man-Cho Anthony So

Auto-TLDR; Adaptive Importance Sampling for Stochastic Gradient Descent

Attention Based Pruning for Shift Networks

Ghouthi Hacene, Carlos Lassance, Vincent Gripon, Matthieu Courbariaux, Yoshua Bengio

Auto-TLDR; Shift Attention Layers for Efficient Convolutional Layers

Activation Density Driven Efficient Pruning in Training

Timothy Foldy-Porto, Yeshwanth Venkatesha, Priyadarshini Panda

Auto-TLDR; Real-Time Neural Network Pruning with Compressed Networks

Channel Planting for Deep Neural Networks Using Knowledge Distillation

Kakeru Mitsuno, Yuichiro Nomura, Takio Kurita

Auto-TLDR; Incremental Training for Deep Neural Networks with Knowledge Distillation

E-DNAS: Differentiable Neural Architecture Search for Embedded Systems

Javier García López, Antonio Agudo, Francesc Moreno-Noguer

Auto-TLDR; E-DNAS: Differentiable Architecture Search for Light-Weight Networks for Image Classification

Unveiling Groups of Related Tasks in Multi-Task Learning

Jordan Frecon, Saverio Salzo, Massimiliano Pontil

Auto-TLDR; Continuous Bilevel Optimization for Multi-Task Learning

MaxDropout: Deep Neural Network Regularization Based on Maximum Output Values

Claudio Filipi Gonçalves Santos, Danilo Colombo, Mateus Roder, Joao Paulo Papa

Auto-TLDR; MaxDropout: A Regularizer for Deep Neural Networks

Compact CNN Structure Learning by Knowledge Distillation

Waqar Ahmed, Andrea Zunino, Pietro Morerio, Vittorio Murino

Auto-TLDR; Knowledge Distillation for Compressing Deep Convolutional Neural Networks

Can Data Placement Be Effective for Neural Networks Classification Tasks? Introducing the Orthogonal Loss

Brais Cancela, Veronica Bolon-Canedo, Amparo Alonso-Betanzos

Auto-TLDR; Spatial Placement for Neural Network Training Loss Functions

Efficient Online Subclass Knowledge Distillation for Image Classification

Maria Tzelepi, Nikolaos Passalis, Anastasios Tefas

Auto-TLDR; OSKD: Online Subclass Knowledge Distillation

Distilling Spikes: Knowledge Distillation in Spiking Neural Networks

Ravi Kumar Kushawaha, Saurabh Kumar, Biplab Banerjee, Rajbabu Velmurugan

Auto-TLDR; Knowledge Distillation in Spiking Neural Networks for Image Classification

An Efficient Empirical Solver for Localized Multiple Kernel Learning Via DNNs

Auto-TLDR; Localized Multiple Kernel Learning using LMKL-Net

Nearest Neighbor Classification Based on Activation Space of Convolutional Neural Network

Xinbo Ju, Shuo Shao, Huan Long, Weizhe Wang

Auto-TLDR; Convolutional Neural Network with Convex Hull Based Classifier

Speeding-Up Pruning for Artificial Neural Networks: Introducing Accelerated Iterative Magnitude Pruning

Marco Zullich, Eric Medvet, Felice Andrea Pellegrino, Alessio Ansuini

Auto-TLDR; Iterative Pruning of Artificial Neural Networks with Overparametrization

Revisiting the Training of Very Deep Neural Networks without Skip Connections

Oyebade Kayode Oyedotun, Abd El Rahman Shabayek, Djamila Aouada, Bjorn Ottersten

Auto-TLDR; Optimization of Very Deep PlainNets without shortcut connections with 'vanishing and exploding units' activations

Fast Implementation of 4-Bit Convolutional Neural Networks for Mobile Devices

Anton Trusov, Elena Limonova, Dmitry Slugin, Dmitry Nikolaev, Vladimir V. Arlazarov

Auto-TLDR; Efficient Quantized Low-Precision Neural Networks for Mobile Devices

Resource-efficient DNNs for Keyword Spotting using Neural Architecture Search and Quantization

David Peter, Wolfgang Roth, Franz Pernkopf

Auto-TLDR; Neural Architecture Search for Keyword Spotting in Limited Resource Environments

Beyond Cross-Entropy: Learning Highly Separable Feature Distributions for Robust and Accurate Classification

Arslan Ali, Andrea Migliorati, Tiziano Bianchi, Enrico Magli

Auto-TLDR; Gaussian class-conditional simplex loss for adversarial robust multiclass classifiers

Trainable Spectrally Initializable Matrix Transformations in Convolutional Neural Networks

Michele Alberti, Angela Botros, Schuetz Narayan, Rolf Ingold, Marcus Liwicki, Mathias Seuret

Auto-TLDR; Trainable and Spectrally Initializable Matrix Transformations for Neural Networks

Hcore-Init: Neural Network Initialization Based on Graph Degeneracy

Stratis Limnios, George Dasoulas, Dimitrios Thilikos, Michalis Vazirgiannis

Auto-TLDR; K-hypercore: Graph Mining for Deep Neural Networks

A Close Look at Deep Learning with Small Data

Auto-TLDR; Low-Complex Neural Networks for Small Data Conditions

Learning Sign-Constrained Support Vector Machines

Kenya Tajima, Kouhei Tsuchida, Esmeraldo Ronnie Rey Zara, Naoya Ohta, Tsuyoshi Kato

Auto-TLDR; Constrained Sign Constraints for Learning Linear Support Vector Machine

Class-Incremental Learning with Pre-Allocated Fixed Classifiers

Federico Pernici, Matteo Bruni, Claudio Baecchi, Francesco Turchini, Alberto Del Bimbo

Auto-TLDR; Class-Incremental Learning with Pre-allocated Output Nodes for Fixed Classifier

Learning Stable Deep Predictive Coding Networks with Weight Norm Supervision

Auto-TLDR; Stability of Predictive Coding Network with Weight Norm Supervision

Compression of YOLOv3 Via Block-Wise and Channel-Wise Pruning for Real-Time and Complicated Autonomous Driving Environment Sensing Applications

Jiaqi Li, Yanan Zhao, Li Gao, Feng Cui

Auto-TLDR; Pruning YOLOv3 with Batch Normalization for Autonomous Driving

Fine-Tuning DARTS for Image Classification

Muhammad Suhaib Tanveer, Umar Karim Khan, Chong Min Kyung

Auto-TLDR; Fine-Tune Neural Architecture Search using Fixed Operations

Ancient Document Layout Analysis: Autoencoders Meet Sparse Coding

Homa Davoudi, Marco Fiorucci, Arianna Traviglia

Auto-TLDR; Unsupervised Representation Learning for Document Layout Analysis

Feature Extraction by Joint Robust Discriminant Analysis and Inter-Class Sparsity

Auto-TLDR; Robust Discriminant Analysis with Feature Selection and Inter-class Sparsity (RDA_FSIS)

Generalization Comparison of Deep Neural Networks Via Output Sensitivity

Mahsa Forouzesh, Farnood Salehi, Patrick Thiran

Auto-TLDR; Generalization of Deep Neural Networks using Sensitivity

CQNN: Convolutional Quadratic Neural Networks

Auto-TLDR; Quadratic Neural Network for Image Classification

Is the Meta-Learning Idea Able to Improve the Generalization of Deep Neural Networks on the Standard Supervised Learning?

Auto-TLDR; Meta-learning Based Training of Deep Neural Networks for Few-Shot Learning
