Softer Pruning, Incremental Regularization

Linhang Cai,

Zhulin An,

Yongjun Xu

Auto-TLDR; Asymptotic SofteR Filter Pruning for Deep Neural Network Pruning

Similar papers

Learning to Prune in Training via Dynamic Channel Propagation

Shibo Shen, Rongpeng Li, Zhifeng Zhao, Honggang Zhang, Yugeng Zhou

Auto-TLDR; Dynamic Channel Propagation for Neural Network Pruning

HFP: Hardware-Aware Filter Pruning for Deep Convolutional Neural Networks Acceleration

Fang Yu, Chuanqi Han, Pengcheng Wang, Ruoran Huang, Xi Huang, Li Cui

Auto-TLDR; Hardware-Aware Filter Pruning for Convolutional Neural Networks

Progressive Gradient Pruning for Classification, Detection and Domain Adaptation

Le Thanh Nguyen-Meidine, Eric Granger, Marco Pedersoli, Madhu Kiran, Louis-Antoine Blais-Morin

Auto-TLDR; Progressive Gradient Pruning for Iterative Filter Pruning of Convolutional Neural Networks

Slimming ResNet by Slimming Shortcut

Donggyu Joo, Doyeon Kim, Junmo Kim

Auto-TLDR; SSPruning: Slimming Shortcut Pruning on ResNet Based Networks

Filter Pruning Using Hierarchical Group Sparse Regularization for Deep Convolutional Neural Networks

Auto-TLDR; Hierarchical Group Sparse Regularization for Sparse Convolutional Neural Networks

On the Information of Feature Maps and Pruning of Deep Neural Networks

Mohammadreza Soltani, Suya Wu, Jie Ding, Robert Ravier, Vahid Tarokh

Auto-TLDR; Compressing Deep Neural Models Using Mutual Information

A Discriminant Information Approach to Deep Neural Network Pruning

Auto-TLDR; Channel Pruning Using Discriminant Information and Reinforcement Learning

Exploiting Non-Linear Redundancy for Neural Model Compression

Muhammad Ahmed Shah, Raphael Olivier, Bhiksha Raj

Auto-TLDR; Compressing Deep Neural Networks with Linear Dependency

Activation Density Driven Efficient Pruning in Training

Timothy Foldy-Porto, Yeshwanth Venkatesha, Priyadarshini Panda

Auto-TLDR; Real-Time Neural Network Pruning with Compressed Networks

Exploiting Elasticity in Tensor Ranks for Compressing Neural Networks

Jie Ran, Rui Lin, Hayden Kwok-Hay So, Graziano Chesi, Ngai Wong

Auto-TLDR; Nuclear-Norm Rank Minimization Factorization for Deep Neural Networks

Learning Sparse Deep Neural Networks Using Efficient Structured Projections on Convex Constraints for Green AI

Michel Barlaud, Frederic Guyard

Auto-TLDR; Constrained Deep Neural Network with Constrained Splitting Projection

Compression of YOLOv3 Via Block-Wise and Channel-Wise Pruning for Real-Time and Complicated Autonomous Driving Environment Sensing Applications

Jiaqi Li, Yanan Zhao, Li Gao, Feng Cui

Auto-TLDR; Pruning YOLOv3 with Batch Normalization for Autonomous Driving

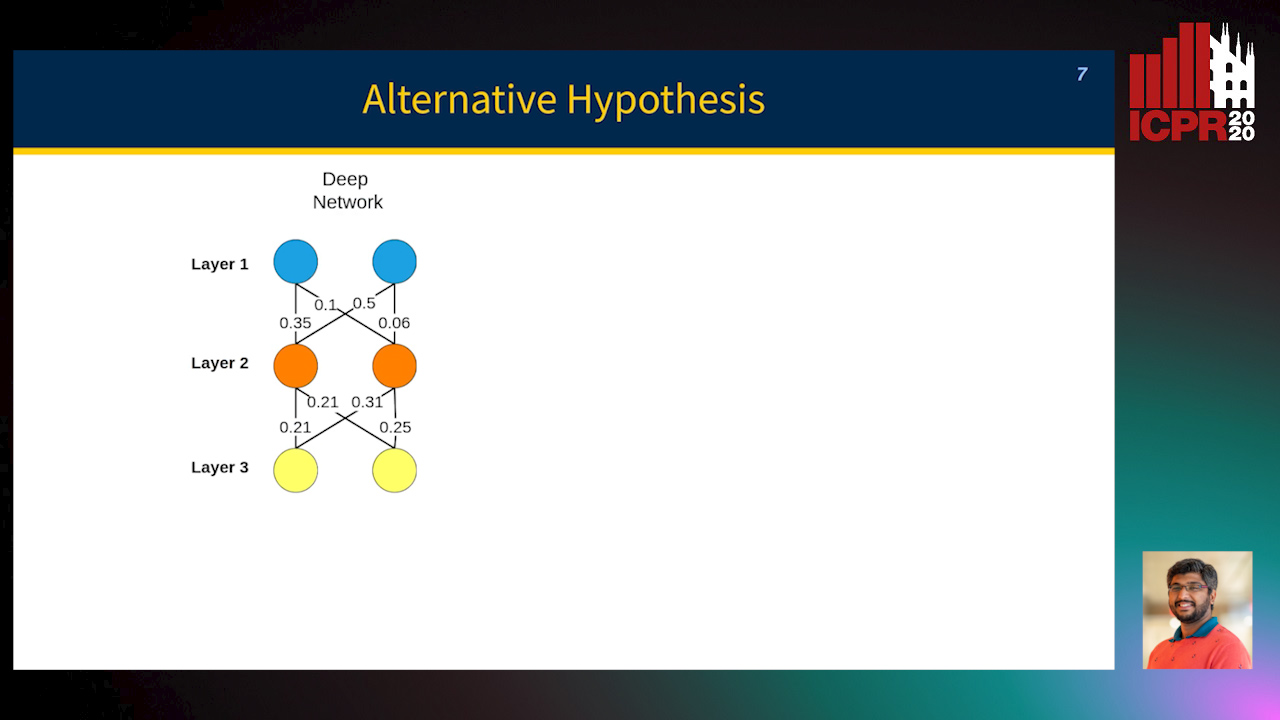

Speeding-Up Pruning for Artificial Neural Networks: Introducing Accelerated Iterative Magnitude Pruning

Marco Zullich, Eric Medvet, Felice Andrea Pellegrino, Alessio Ansuini

Auto-TLDR; Iterative Pruning of Artificial Neural Networks with Overparametrization

MINT: Deep Network Compression Via Mutual Information-Based Neuron Trimming

Madan Ravi Ganesh, Jason Corso, Salimeh Yasaei Sekeh

Auto-TLDR; Mutual Information-based Neuron Trimming for Deep Compression via Pruning

Channel Planting for Deep Neural Networks Using Knowledge Distillation

Kakeru Mitsuno, Yuichiro Nomura, Takio Kurita

Auto-TLDR; Incremental Training for Deep Neural Networks with Knowledge Distillation

Attention Based Pruning for Shift Networks

Ghouthi Hacene, Carlos Lassance, Vincent Gripon, Matthieu Courbariaux, Yoshua Bengio

Auto-TLDR; Shift Attention Layers for Efficient Convolutional Layers

Norm Loss: An Efficient yet Effective Regularization Method for Deep Neural Networks

Theodoros Georgiou, Sebastian Schmitt, Thomas Baeck, Wei Chen, Michael Lew

Auto-TLDR; Weight Soft-Regularization with Oblique Manifold for Convolutional Neural Network Training

Neuron-Based Network Pruning Based on Majority Voting

Ali Alqahtani, Xianghua Xie, Ehab Essa, Mark W. Jones

Auto-TLDR; Large-Scale Neural Network Pruning using Majority Voting

Dynamic Multi-Path Neural Network

Yingcheng Su, Yichao Wu, Ken Chen, Ding Liang, Xiaolin Hu

Auto-TLDR; Dynamic Multi-path Neural Network

P-DIFF: Learning Classifier with Noisy Labels Based on Probability Difference Distributions

Wei Hu, Qihao Zhao, Yangyu Huang, Fan Zhang

Auto-TLDR; P-DIFF: A Simple and Effective Training Paradigm for Deep Neural Network Classifier with Noisy Labels

E-DNAS: Differentiable Neural Architecture Search for Embedded Systems

Javier García López, Antonio Agudo, Francesc Moreno-Noguer

Auto-TLDR; E-DNAS: Differentiable Architecture Search for Light-Weight Networks for Image Classification

Is the Meta-Learning Idea Able to Improve the Generalization of Deep Neural Networks on the Standard Supervised Learning?

Auto-TLDR; Meta-learning Based Training of Deep Neural Networks for Few-Shot Learning

Fast and Accurate Real-Time Semantic Segmentation with Dilated Asymmetric Convolutions

Leonel Rosas-Arias, Gibran Benitez-Garcia, Jose Portillo-Portillo, Gabriel Sanchez-Perez, Keiji Yanai

Auto-TLDR; FASSD-Net: Dilated Asymmetric Pyramidal Fusion for Real-Time Semantic Segmentation

Improving Batch Normalization with Skewness Reduction for Deep Neural Networks

Pak Lun Kevin Ding, Martin Sarah, Baoxin Li

Auto-TLDR; Batch Normalization with Skewness Reduction

Efficient Online Subclass Knowledge Distillation for Image Classification

Maria Tzelepi, Nikolaos Passalis, Anastasios Tefas

Auto-TLDR; OSKD: Online Subclass Knowledge Distillation

How Does DCNN Make Decisions?

Yi Lin, Namin Wang, Xiaoqing Ma, Ziwei Li, Gang Bai

Auto-TLDR; Exploring Deep Convolutional Neural Network's Decision-Making Interpretability

Compact CNN Structure Learning by Knowledge Distillation

Waqar Ahmed, Andrea Zunino, Pietro Morerio, Vittorio Murino

Auto-TLDR; Knowledge Distillation for Compressing Deep Convolutional Neural Networks

Enhancing Semantic Segmentation of Aerial Images with Inhibitory Neurons

Ihsan Ullah, Sean Reilly, Michael Madden

Auto-TLDR; Lateral Inhibition in Deep Neural Networks for Object Recognition and Semantic Segmentation

A Close Look at Deep Learning with Small Data

Auto-TLDR; Low-Complex Neural Networks for Small Data Conditions

Rethinking of Deep Models Parameters with Respect to Data Distribution

Shitala Prasad, Dongyun Lin, Yiqun Li, Sheng Dong, Zaw Min Oo

Auto-TLDR; A progressive stepwise training strategy for deep neural networks

Compression Strategies and Space-Conscious Representations for Deep Neural Networks

Giosuè Marinò, Gregorio Ghidoli, Marco Frasca, Dario Malchiodi

Auto-TLDR; Compression of Large Convolutional Neural Networks by Weight Pruning and Quantization

WeightAlign: Normalizing Activations by Weight Alignment

Xiangwei Shi, Yunqiang Li, Xin Liu, Jan Van Gemert

Auto-TLDR; WeightAlign: Normalization of Activations without Sample Statistics

Improved Residual Networks for Image and Video Recognition

Ionut Cosmin Duta, Li Liu, Fan Zhu, Ling Shao

Auto-TLDR; Residual Networks for Deep Learning

VPU Specific CNNs through Neural Architecture Search

Ciarán Donegan, Hamza Yous, Saksham Sinha, Jonathan Byrne

Auto-TLDR; Efficient Convolutional Neural Networks for Edge Devices using Neural Architecture Search

Selecting Useful Knowledge from Previous Tasks for Future Learning in a Single Network

Feifei Shi, Peng Wang, Zhongchao Shi, Yong Rui

Auto-TLDR; Continual Learning with Gradient-based Threshold

Energy Minimum Regularization in Continual Learning

Auto-TLDR; Energy Minimization Regularization for Continuous Learning

ResNet-Like Architecture with Low Hardware Requirements

Elena Limonova, Daniil Alfonso, Dmitry Nikolaev, Vladimir V. Arlazarov

Auto-TLDR; BM-ResNet: Bipolar Morphological ResNet for Image Classification

Resource-efficient DNNs for Keyword Spotting using Neural Architecture Search and Quantization

David Peter, Wolfgang Roth, Franz Pernkopf

Auto-TLDR; Neural Architecture Search for Keyword Spotting in Limited Resource Environments

Stage-Wise Neural Architecture Search

Artur Jordão, Fernando Akio Yamada, Maiko Lie, William Schwartz

Auto-TLDR; Efficient Neural Architecture Search for Deep Convolutional Networks

Color, Edge, and Pixel-Wise Explanation of Predictions Based on Interpretable Neural Network Model

Auto-TLDR; Explainable Deep Neural Network with Edge Detecting Filters

Revisiting the Training of Very Deep Neural Networks without Skip Connections

Oyebade Kayode Oyedotun, Abd El Rahman Shabayek, Djamila Aouada, Bjorn Ottersten

Auto-TLDR; Optimization of Very Deep PlainNets without shortcut connections with 'vanishing and exploding units' activations

Attention As Activation

Yimian Dai, Stefan Oehmcke, Fabian Gieseke, Yiquan Wu, Kobus Barnard

Auto-TLDR; Attentional Activation Units for Convolutional Networks

Towards Low-Bit Quantization of Deep Neural Networks with Limited Data

Yong Yuan, Chen Chen, Xiyuan Hu, Silong Peng

Auto-TLDR; Low-Precision Quantization of Deep Neural Networks with Limited Data

MaxDropout: Deep Neural Network Regularization Based on Maximum Output Values

Claudio Filipi Gonçalves Santos, Danilo Colombo, Mateus Roder, Joao Paulo Papa

Auto-TLDR; MaxDropout: A Regularizer for Deep Neural Networks

Operation and Topology Aware Fast Differentiable Architecture Search

Shahid Siddiqui, Christos Kyrkou, Theocharis Theocharides

Auto-TLDR; EDARTS: Efficient Differentiable Architecture Search with Efficient Optimization

Efficient-Receptive Field Block with Group Spatial Attention Mechanism for Object Detection

Jiacheng Zhang, Zhicheng Zhao, Fei Su

Auto-TLDR; E-RFB: Efficient-Receptive Field Block for Deep Neural Network for Object Detection

Locality-Promoting Representation Learning

Auto-TLDR; Locality-promoting Regularization for Neural Networks

Efficient Super Resolution by Recursive Aggregation

Zhengxiong Luo, Yan Huang, Shang Li, Liang Wang, Tieniu Tan

Auto-TLDR; Recursive Aggregation Network for Efficient Deep Super Resolution
