Joint Learning Multiple Curvature Descriptor for 3D Palmprint Recognition

Lunke Fei,

Bob Zhang,

Jie Wen,

Chunwei Tian,

Peng Liu,

Shuping Zhao

Auto-TLDR; Joint Feature Learning for 3D palmprint recognition using curvature data vectors

Similar papers

Discrete Semantic Matrix Factorization Hashing for Cross-Modal Retrieval

Jianyang Qin, Lunke Fei, Shaohua Teng, Wei Zhang, Genping Zhao, Haoliang Yuan

Auto-TLDR; Discrete Semantic Matrix Factorization Hashing for Cross-Modal Retrieval

A Local Descriptor with Physiological Characteristic for Finger Vein Recognition

Liping Zhang, Weijun Li, Ning Xin

Auto-TLDR; Finger vein-specific local feature descriptors based on physiological characteristics of finger vein patterns

Feature Extraction by Joint Robust Discriminant Analysis and Inter-Class Sparsity

Auto-TLDR; Robust Discriminant Analysis with Feature Selection and Inter-class Sparsity (RDA_FSIS)

Object Classification of Remote Sensing Images Based on Optimized Projection Supervised Discrete Hashing

Qianqian Zhang, Yazhou Liu, Quansen Sun

Auto-TLDR; Optimized Projection Supervised Discrete Hashing for Large-Scale Remote Sensing Image Object Classification

Face Anti-Spoofing Based on Dynamic Color Texture Analysis Using Local Directional Number Pattern

Junwei Zhou, Ke Shu, Peng Liu, Jianwen Xiang, Shengwu Xiong

Auto-TLDR; LDN-TOP Representation followed by ProCRC Classification for Face Anti-Spoofing

Feature Extraction and Selection Via Robust Discriminant Analysis and Class Sparsity

Auto-TLDR; Hybrid Linear Discriminant Embedding for supervised multi-class classification

Cross-spectrum Face Recognition Using Subspace Projection Hashing

Hanrui Wang, Xingbo Dong, Jin Zhe, Jean-Luc Dugelay, Massimo Tistarelli

Auto-TLDR; Subspace Projection Hashing for Cross-Spectrum Face Recognition

Embedding Shared Low-Rank and Feature Correlation for Multi-View Data Analysis

Zhan Wang, Lizhi Wang, Hua Huang

Auto-TLDR; embedding shared low-rank and feature correlation for multi-view data analysis

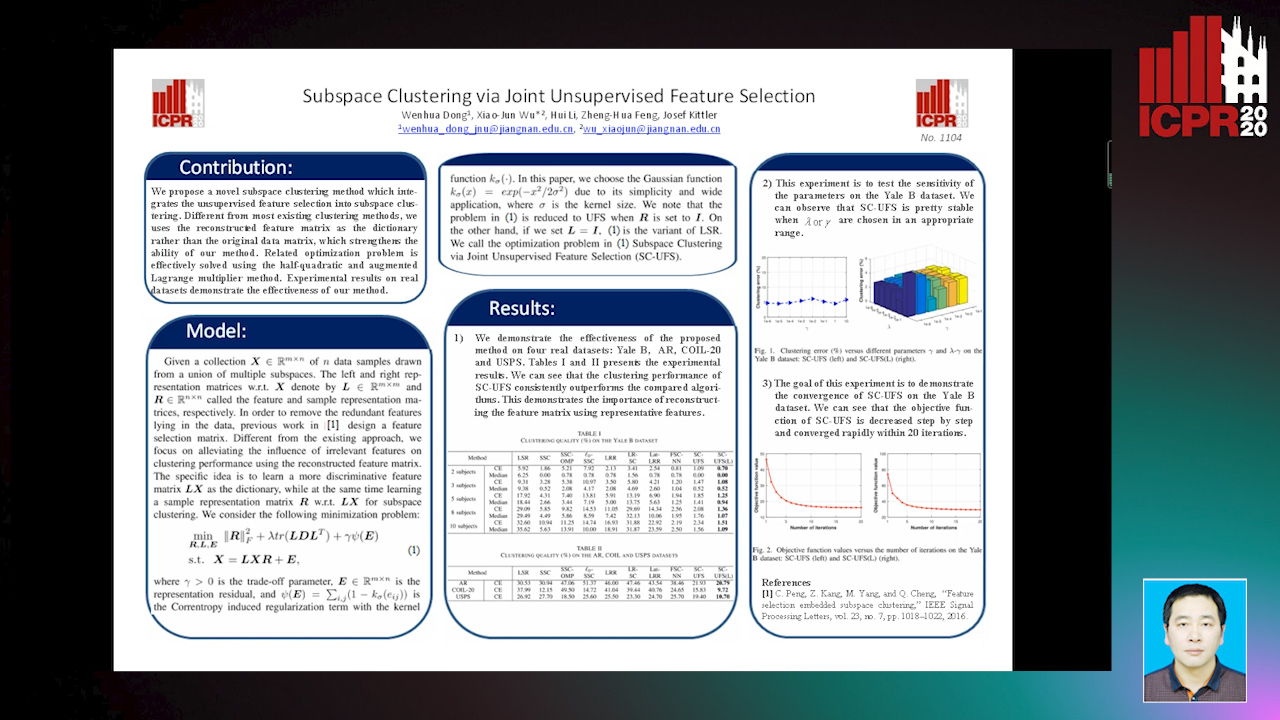

Subspace Clustering Via Joint Unsupervised Feature Selection

Wenhua Dong, Xiaojun Wu, Hui Li, Zhenhua Feng, Josef Kittler

Auto-TLDR; Unsupervised Feature Selection for Subspace Clustering

Label Self-Adaption Hashing for Image Retrieval

Jianglin Lu, Zhihui Lai, Hailing Wang, Jie Zhou

Auto-TLDR; Label Self-Adaption Hashing for Large-Scale Image Retrieval

Local Grouped Invariant Order Pattern for Grayscale-Inversion and Rotation Invariant Texture Classification

Yankai Huang, Tiecheng Song, Shuang Li, Yuanjing Han

Auto-TLDR; Local grouped invariant order pattern for grayscale-inversion and rotation invariant texture classification

A Distinct Discriminant Canonical Correlation Analysis Network Based Deep Information Quality Representation for Image Classification

Lei Gao, Zheng Guo, Ling Guan

Auto-TLDR; DDCCANet: Deep Information Quality Representation for Image Classification

SoftmaxOut Transformation-Permutation Network for Facial Template Protection

Hakyoung Lee, Cheng Yaw Low, Andrew Teoh

Auto-TLDR; SoftmaxOut Transformation-Permutation Network for Cancellable Biometrics

VSB^2-Net: Visual-Semantic Bi-Branch Network for Zero-Shot Hashing

Xin Li, Xiangfeng Wang, Bo Jin, Wenjie Zhang, Jun Wang, Hongyuan Zha

Auto-TLDR; VSB^2-Net: inductive zero-shot hashing for image retrieval

DFH-GAN: A Deep Face Hashing with Generative Adversarial Network

Bo Xiao, Lanxiang Zhou, Yifei Wang, Qiangfang Xu

Auto-TLDR; Deep Face Hashing with GAN for Face Image Retrieval

Fast Discrete Cross-Modal Hashing Based on Label Relaxation and Matrix Factorization

Donglin Zhang, Xiaojun Wu, Zhen Liu, Jun Yu, Josef Kittler

Auto-TLDR; LRMF: Label Relaxation and Discrete Matrix Factorization for Cross-Modal Retrieval

Sample-Dependent Distance for 1:N Identification Via Discriminative Feature Selection

Auto-TLDR; Feature Selection Mask for 1:N Identification Problems with Binary Features

A Spectral Clustering on Grassmann Manifold Via Double Low Rank Constraint

Xinglin Piao, Yongli Hu, Junbin Gao, Yanfeng Sun, Xin Yang, Baocai Yin

Auto-TLDR; Double Low Rank Representation for High-Dimensional Data Clustering on Grassmann Manifold

Face Anti-Spoofing Using Spatial Pyramid Pooling

Lei Shi, Zhuo Zhou, Zhenhua Guo

Auto-TLDR; Spatial Pyramid Pooling for Face Anti-Spoofing

Soft Label and Discriminant Embedding Estimation for Semi-Supervised Classification

Fadi Dornaika, Abdullah Baradaaji, Youssof El Traboulsi

Auto-TLDR; Semi-Supervised Learning for Linear Feature Extraction and Label Propagation

Super-Resolution Guided Pore Detection for Fingerprint Recognition

Syeda Nyma Ferdous, Ali Dabouei, Jeremy Dawson, Nasser M. Nasrabadi

Auto-TLDR; Super-Resolution Generative Adversarial Network for Fingerprint Recognition Using Pore Features

Hierarchical Deep Hashing for Fast Large Scale Image Retrieval

Yongfei Zhang, Cheng Peng, Zhang Jingtao, Xianglong Liu, Shiliang Pu, Changhuai Chen

Auto-TLDR; Hierarchical indexed deep hashing for fast large scale image retrieval

First and Second-Order Sorted Local Binary Pattern Features for Grayscale-Inversion and Rotation Invariant Texture Classification

Tiecheng Song, Yuanjing Han, Jie Feng, Yuanlin Wang, Chenqiang Gao

Auto-TLDR; First- and Second-order Sorted Local Binary Pattern for texture classification under inverse grayscale changes and image rotation

Joint Compressive Autoencoders for Full-Image-To-Image Hiding

Xiyao Liu, Ziping Ma, Xingbei Guo, Jialu Hou, Lei Wang, Gerald Schaefer, Hui Fang

Auto-TLDR; J-CAE: Joint Compressive Autoencoder for Image Hiding

Improved Deep Classwise Hashing with Centers Similarity Learning for Image Retrieval

Auto-TLDR; Deep Classwise Hashing for Image Retrieval Using Center Similarity Learning

RGB-Infrared Person Re-Identification Via Image Modality Conversion

Huangpeng Dai, Qing Xie, Yanchun Ma, Yongjian Liu, Shengwu Xiong

Auto-TLDR; CE2L: A Novel Network for Cross-Modality Re-identification with Feature Alignment

Cancelable Biometrics Vault: A Secure Key-Binding Biometric Cryptosystem Based on Chaffing and Winnowing

Osama Ouda, Karthik Nandakumar, Arun Ross

Auto-TLDR; Cancelable Biometrics Vault for Key-binding Biometric Cryptosystem Framework

Multi-Scale Keypoint Matching

Auto-TLDR; Multi-Scale Keypoint Matching Using Multi-Scale Information

Two-Level Attention-Based Fusion Learning for RGB-D Face Recognition

Hardik Uppal, Alireza Sepas-Moghaddam, Michael Greenspan, Ali Etemad

Auto-TLDR; Fused RGB-D Facial Recognition using Attention-Aware Feature Fusion

Rethinking ReID: Multi-Feature Fusion Person Re-Identification Based on Orientation Constraints

Mingjing Ai, Guozhi Shan, Bo Liu, Tianyang Liu

Auto-TLDR; Person Re-identification with Orientation Constrained Network

Self-Paced Bottom-Up Clustering Network with Side Information for Person Re-Identification

Mingkun Li, Chun-Guang Li, Ruo-Pei Guo, Jun Guo

Auto-TLDR; Self-Paced Bottom-up Clustering Network with Side Information for Unsupervised Person Re-identification

G-FAN: Graph-Based Feature Aggregation Network for Video Face Recognition

He Zhao, Yongjie Shi, Xin Tong, Jingsi Wen, Xianghua Ying, Jinshi Hongbin Zha

Auto-TLDR; Graph-based Feature Aggregation Network for Video Face Recognition

Multi-Scale Cascading Network with Compact Feature Learning for RGB-Infrared Person Re-Identification

Can Zhang, Hong Liu, Wei Guo, Mang Ye

Auto-TLDR; Multi-Scale Part-Aware Cascading for RGB-Infrared Person Re-identification

Double Manifolds Regularized Non-Negative Matrix Factorization for Data Representation

Jipeng Guo, Shuai Yin, Yanfeng Sun, Yongli Hu

Auto-TLDR; Double Manifolds Regularized Non-negative Matrix Factorization for Clustering

Finger Vein Recognition and Intra-Subject Similarity Evaluation of Finger Veins Using the CNN Triplet Loss

Georg Wimmer, Bernhard Prommegger, Andreas Uhl

Auto-TLDR; Finger vein recognition using CNNs and hard triplet online selection

Learning Metric Features for Writer-Independent Signature Verification Using Dual Triplet Loss

Auto-TLDR; A dual triplet loss based method for offline writer-independent signature verification

Person Recognition with HGR Maximal Correlation on Multimodal Data

Yihua Liang, Fei Ma, Yang Li, Shao-Lun Huang

Auto-TLDR; A correlation-based multimodal person recognition framework that learns discriminative embeddings of persons by joint learning visual features and audio features

Supervised Feature Embedding for Classification by Learning Rank-Based Neighborhoods

Ghazaal Sheikhi, Hakan Altincay

Auto-TLDR; Supervised Feature Embedding with Representation Learning of Rank-based Neighborhoods

Three-Dimensional Lip Motion Network for Text-Independent Speaker Recognition

Jianrong Wang, Tong Wu, Shanyu Wang, Mei Yu, Qiang Fang, Ju Zhang, Li Liu

Auto-TLDR; Lip Motion Network for Text-Independent and Text-Dependent Speaker Recognition

Adaptive Feature Fusion Network for Gaze Tracking in Mobile Tablets

Yiwei Bao, Yihua Cheng, Yunfei Liu, Feng Lu

Auto-TLDR; Adaptive Feature Fusion Network for Multi-stream Gaze Estimation in Mobile Tablets

Appliance Identification Using a Histogram Post-Processing of 2D Local Binary Patterns for Smart Grid Applications

Yassine Himeur, Abdullah Alsalemi, Faycal Bensaali, Abbes Amira

Auto-TLDR; LBP-BEVM based Local Binary Patterns for Appliances Identification in the Smart Grid

Exploiting Local Indexing and Deep Feature Confidence Scores for Fast Image-To-Video Search

Savas Ozkan, Gözde Bozdağı Akar

Auto-TLDR; Fast and Robust Image-to-Video Retrieval Using Local and Global Descriptors

A Unified Framework for Distance-Aware Domain Adaptation

Fei Wang, Youdong Ding, Huan Liang, Yuzhen Gao, Wenqi Che

Auto-TLDR; distance-aware domain adaptation

Progressive Learning Algorithm for Efficient Person Re-Identification

Zhen Li, Hanyang Shao, Liang Niu, Nian Xue

Auto-TLDR; Progressive Learning Algorithm for Large-Scale Person Re-Identification

Cross-Media Hash Retrieval Using Multi-head Attention Network

Zhixin Li, Feng Ling, Chuansheng Xu, Canlong Zhang, Huifang Ma

Auto-TLDR; Unsupervised Cross-Media Hash Retrieval Using Multi-Head Attention Network

Low Rank Representation on Product Grassmann Manifolds for Multi-view Subspace Clustering

Jipeng Guo, Yanfeng Sun, Junbin Gao, Yongli Hu, Baocai Yin

Auto-TLDR; Low Rank Representation on Product Grassmann Manifold for Multi-View Data Clustering

Modeling Extent-Of-Texture Information for Ground Terrain Recognition

Shuvozit Ghose, Pinaki Nath Chowdhury, Partha Pratim Roy, Umapada Pal

Auto-TLDR; Extent-of-Texture Guided Inter-domain Message Passing for Ground Terrain Recognition

3D Facial Matching by Spiral Convolutional Metric Learning and a Biometric Fusion-Net of Demographic Properties

Soha Sadat Mahdi, Nele Nauwelaers, Philip Joris, Giorgos Bouritsas, Sergiy Bokhnyak, Susan Walsh, Mark Shriver, Michael Bronstein, Peter Claes

Auto-TLDR; Multi-biometric Fusion for Biometric Verification using 3D Facial Measures