Self-Paced Bottom-Up Clustering Network with Side Information for Person Re-Identification

Mingkun Li,

Chun-Guang Li,

Ruo-Pei Guo,

Jun Guo

Auto-TLDR; Self-Paced Bottom-up Clustering Network with Side Information for Unsupervised Person Re-identification

Similar papers

Progressive Unsupervised Domain Adaptation for Image-Based Person Re-Identification

Mingliang Yang, Da Huang, Jing Zhao

Auto-TLDR; Progressive Unsupervised Domain Adaptation for Person Re-Identification

CANU-ReID: A Conditional Adversarial Network for Unsupervised Person Re-IDentification

Guillaume Delorme, Yihong Xu, Stéphane Lathuiliere, Radu Horaud, Xavier Alameda-Pineda

Auto-TLDR; Unsupervised Person Re-Identification with Clustering and Adversarial Learning

Semi-Supervised Person Re-Identification by Attribute Similarity Guidance

Peixian Hong, Ancong Wu, Wei-Shi Zheng

Auto-TLDR; Attribute Similarity Guidance Loss for Semi-supervised Person Re-identification

Unsupervised Domain Adaptation for Person Re-Identification through Source-Guided Pseudo-Labeling

Fabian Dubourvieux, Romaric Audigier, Angélique Loesch, Ainouz-Zemouche Samia, Stéphane Canu

Auto-TLDR; Pseudo-labeling for Unsupervised Domain Adaptation for Person Re-Identification

Attention-Based Model with Attribute Classification for Cross-Domain Person Re-Identification

Simin Xu, Lingkun Luo, Shiqiang Hu

Auto-TLDR; An attention-based model with attribute classification for cross-domain person re-identification

Building Computationally Efficient and Well-Generalizing Person Re-Identification Models with Metric Learning

Vladislav Sovrasov, Dmitry Sidnev

Auto-TLDR; Cross-Domain Generalization in Person Re-identification using Omni-Scale Network

Pose Variation Adaptation for Person Re-Identification

Lei Zhang, Na Jiang, Qishuai Diao, Yue Xu, Zhong Zhou, Wei Wu

Auto-TLDR; Pose Transfer Generative Adversarial Network for Person Re-identification

Progressive Learning Algorithm for Efficient Person Re-Identification

Zhen Li, Hanyang Shao, Liang Niu, Nian Xue

Auto-TLDR; Progressive Learning Algorithm for Large-Scale Person Re-Identification

Deep Top-Rank Counter Metric for Person Re-Identification

Chen Chen, Hao Dou, Xiyuan Hu, Silong Peng

Auto-TLDR; Deep Top-Rank Counter Metric for Person Re-identification

Adaptive L2 Regularization in Person Re-Identification

Xingyang Ni, Liang Fang, Heikki Juhani Huttunen

Auto-TLDR; AdaptiveReID: Adaptive L2 Regularization for Person Re-identification

Online Domain Adaptation for Person Re-Identification with a Human in the Loop

Rita Delussu, Lorenzo Putzu, Giorgio Fumera, Fabio Roli

Auto-TLDR; Human-in-the-loop for Person Re-Identification in Infeasible Applications

Domain Generalized Person Re-Identification Via Cross-Domain Episodic Learning

Ci-Siang Lin, Yuan Chia Cheng, Yu-Chiang Frank Wang

Auto-TLDR; Domain-Invariant Person Re-identification with Episodic Learning

Spatial-Aware GAN for Unsupervised Person Re-Identification

Fangneng Zhan, Changgong Zhang

Auto-TLDR; Unsupervised Domain Adaptation for Person Re-Identification

Self and Channel Attention Network for Person Re-Identification

Asad Munir, Niki Martinel, Christian Micheloni

Auto-TLDR; SCAN: Self and Channel Attention Network for Person Re-identification

Not 3D Re-ID: Simple Single Stream 2D Convolution for Robust Video Re-Identification

Auto-TLDR; ResNet50-IBN for Video-based Person Re-Identification using Single Stream 2D Convolution Network

Top-DB-Net: Top DropBlock for Activation Enhancement in Person Re-Identification

Auto-TLDR; Top-DB-Net for Person Re-Identification using Top DropBlock

Open-World Group Retrieval with Ambiguity Removal: A Benchmark

Ling Mei, Jian-Huang Lai, Zhanxiang Feng, Xiaohua Xie

Auto-TLDR; P2GSM-AR: Re-identifying changing groups of people under the open-world and group-ambiguity scenarios

Attentive Part-Aware Networks for Partial Person Re-Identification

Lijuan Huo, Chunfeng Song, Zhengyi Liu, Zhaoxiang Zhang

Auto-TLDR; Part-Aware Learning for Partial Person Re-identification

Convolutional Feature Transfer via Camera-Specific Discriminative Pooling for Person Re-Identification

Tetsu Matsukawa, Einoshin Suzuki

Auto-TLDR; A small-scale CNN feature transfer method for person re-identification

Recurrent Deep Attention Network for Person Re-Identification

Changhao Wang, Jun Zhou, Xianfei Duan, Guanwen Zhang, Wei Zhou

Auto-TLDR; Recurrent Deep Attention Network for Person Re-identification

Rethinking ReID: Multi-Feature Fusion Person Re-Identification Based on Orientation Constraints

Mingjing Ai, Guozhi Shan, Bo Liu, Tianyang Liu

Auto-TLDR; Person Re-identification with Orientation Constrained Network

How Important Are Faces for Person Re-Identification?

Julia Dietlmeier, Joseph Antony, Kevin McGuinness, Noel E. O'Connor

Auto-TLDR; Anonymization of Person Re-identification Datasets with Face Detection and Blurring

Polynomial Universal Adversarial Perturbations for Person Re-Identification

Wenjie Ding, Xing Wei, Rongrong Ji, Xiaopeng Hong, Yihong Gong

Auto-TLDR; Polynomial Universal Adversarial Perturbation for Re-identification Methods

RGB-Infrared Person Re-Identification Via Image Modality Conversion

Huangpeng Dai, Qing Xie, Yanchun Ma, Yongjian Liu, Shengwu Xiong

Auto-TLDR; CE2L: A Novel Network for Cross-Modality Re-identification with Feature Alignment

Multi-Scale Cascading Network with Compact Feature Learning for RGB-Infrared Person Re-Identification

Can Zhang, Hong Liu, Wei Guo, Mang Ye

Auto-TLDR; Multi-Scale Part-Aware Cascading for RGB-Infrared Person Re-identification

Decoupled Self-Attention Module for Person Re-Identification

Chao Zhao, Zhenyu Zhang, Jian Yang, Yan Yan

Auto-TLDR; Decoupled Self-attention Module for Person Re-identification

Abstract Slides Poster Similar

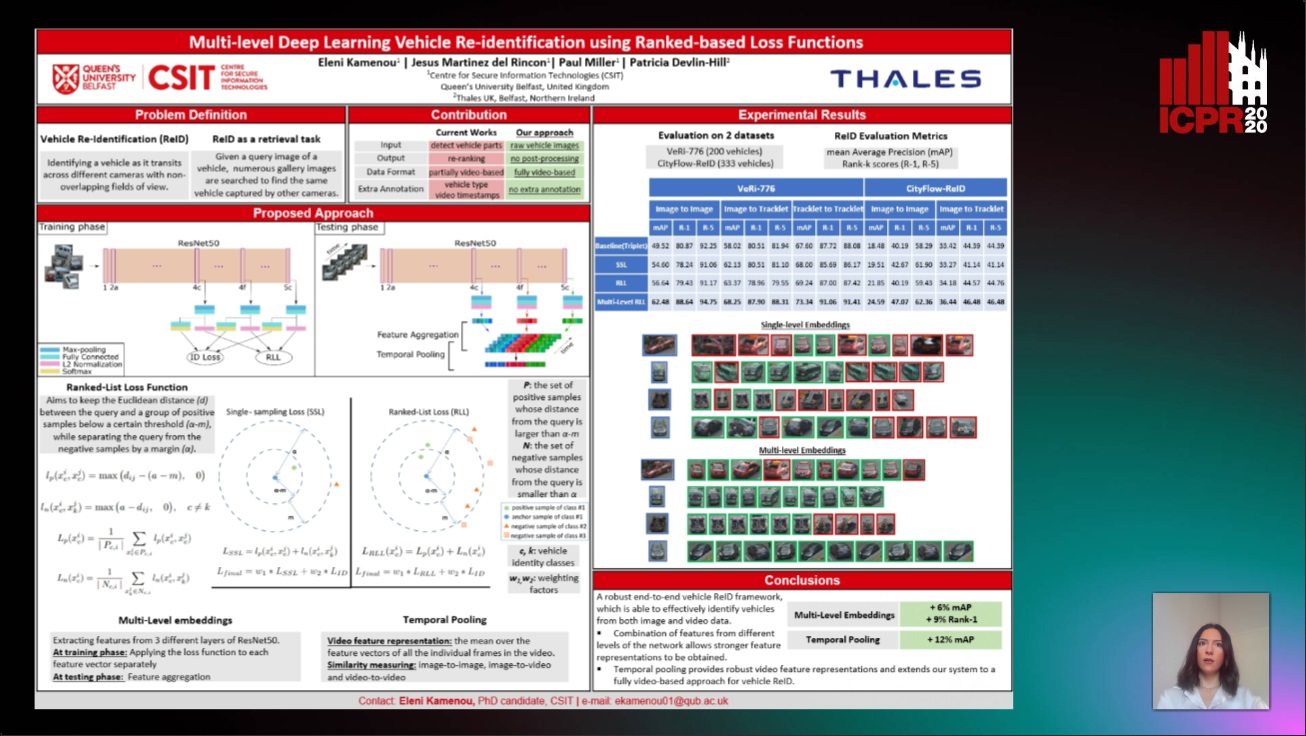

Multi-Level Deep Learning Vehicle Re-Identification Using Ranked-Based Loss Functions

Eleni Kamenou, Jesus Martinez-Del-Rincon, Paul Miller, Patricia Devlin-Hill

Auto-TLDR; Multi-Level Re-identification Network for Vehicle Re-Identification

Sample-Dependent Distance for 1:N Identification Via Discriminative Feature Selection

Auto-TLDR; Feature Selection Mask for 1:N Identification Problems with Binary Features

SSDL: Self-Supervised Domain Learning for Improved Face Recognition

Samadhi Poornima Kumarasinghe Wickrama Arachchilage, Ebroul Izquierdo

Auto-TLDR; Self-supervised Domain Learning for Face Recognition in unconstrained environments

DAIL: Dataset-Aware and Invariant Learning for Face Recognition

Gaoang Wang, Chen Lin, Tianqiang Liu, Mingwei He, Jiebo Luo

Auto-TLDR; DAIL: Dataset-Aware and Invariant Learning for Face Recognition

Improved Deep Classwise Hashing with Centers Similarity Learning for Image Retrieval

Auto-TLDR; Deep Classwise Hashing for Image Retrieval Using Center Similarity Learning

Learning Embeddings for Image Clustering: An Empirical Study of Triplet Loss Approaches

Kalun Ho, Janis Keuper, Franz-Josef Pfreundt, Margret Keuper

Auto-TLDR; Clustering Objectives for K-means and Correlation Clustering Using Triplet Loss

A Base-Derivative Framework for Cross-Modality RGB-Infrared Person Re-Identification

Hong Liu, Ziling Miao, Bing Yang, Runwei Ding

Auto-TLDR; Cross-modality RGB-Infrared Person Re-identification with Auxiliary Modalities

A Unified Framework for Distance-Aware Domain Adaptation

Fei Wang, Youdong Ding, Huan Liang, Yuzhen Gao, Wenqi Che

Auto-TLDR; Distance-Aware Domain Adaptation

Constrained Spectral Clustering Network with Self-Training

Xinyue Liu, Shichong Yang, Linlin Zong

Auto-TLDR; Constrained Spectral Clustering Network: A Constrained Deep Spectral Clustering Network

Multi-Label Contrastive Focal Loss for Pedestrian Attribute Recognition

Xiaoqiang Zheng, Zhenxia Yu, Lin Chen, Fan Zhu, Shilong Wang

Auto-TLDR; Multi-label Contrastive Focal Loss for Pedestrian Attribute Recognition

Semi-Supervised Domain Adaptation Via Selective Pseudo Labeling and Progressive Self-Training

Auto-TLDR; Semi-supervised Domain Adaptation with Pseudo Labels

Energy-Constrained Self-Training for Unsupervised Domain Adaptation

Xiaofeng Liu, Xiongchang Liu, Bo Hu, Jun Lu, Jonghye Woo, Jane You

Auto-TLDR; Unsupervised Domain Adaptation with Energy Function Minimization

Progressive Cluster Purification for Unsupervised Feature Learning

Yifei Zhang, Chang Liu, Yu Zhou, Wei Wang, Weiping Wang, Qixiang Ye

Auto-TLDR; Progressive Cluster Purification for Unsupervised Feature Learning

Deep Convolutional Embedding for Digitized Painting Clustering

Giovanna Castellano, Gennaro Vessio

Auto-TLDR; A Deep Convolutional Embedding Model for Clustering Artworks

Abstract Slides Poster Similar

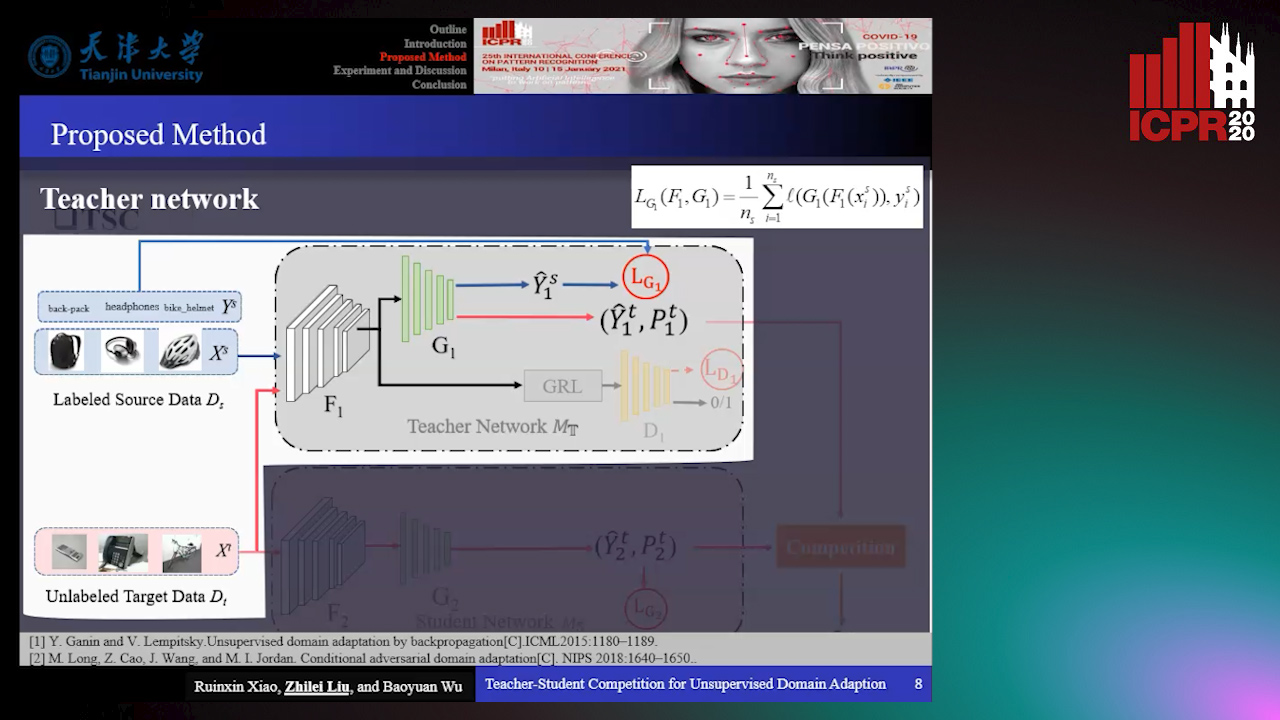

Teacher-Student Competition for Unsupervised Domain Adaptation

Ruixin Xiao, Zhilei Liu, Baoyuan Wu

Auto-TLDR; Unsupervised Domain Adaptation with Teacher-Student Competition

ClusterFace: Joint Clustering and Classification for Set-Based Face Recognition

Samadhi Poornima Kumarasinghe Wickrama Arachchilage, Ebroul Izquierdo

Auto-TLDR; Joint Clustering and Classification for Face Recognition in the Wild

Supervised Domain Adaptation Using Graph Embedding

Lukas Hedegaard, Omar Ali Sheikh-Omar, Alexandros Iosifidis

Auto-TLDR; Domain Adaptation from the Perspective of Multi-view Graph Embedding and Dimensionality Reduction

Abstract Slides Poster Similar

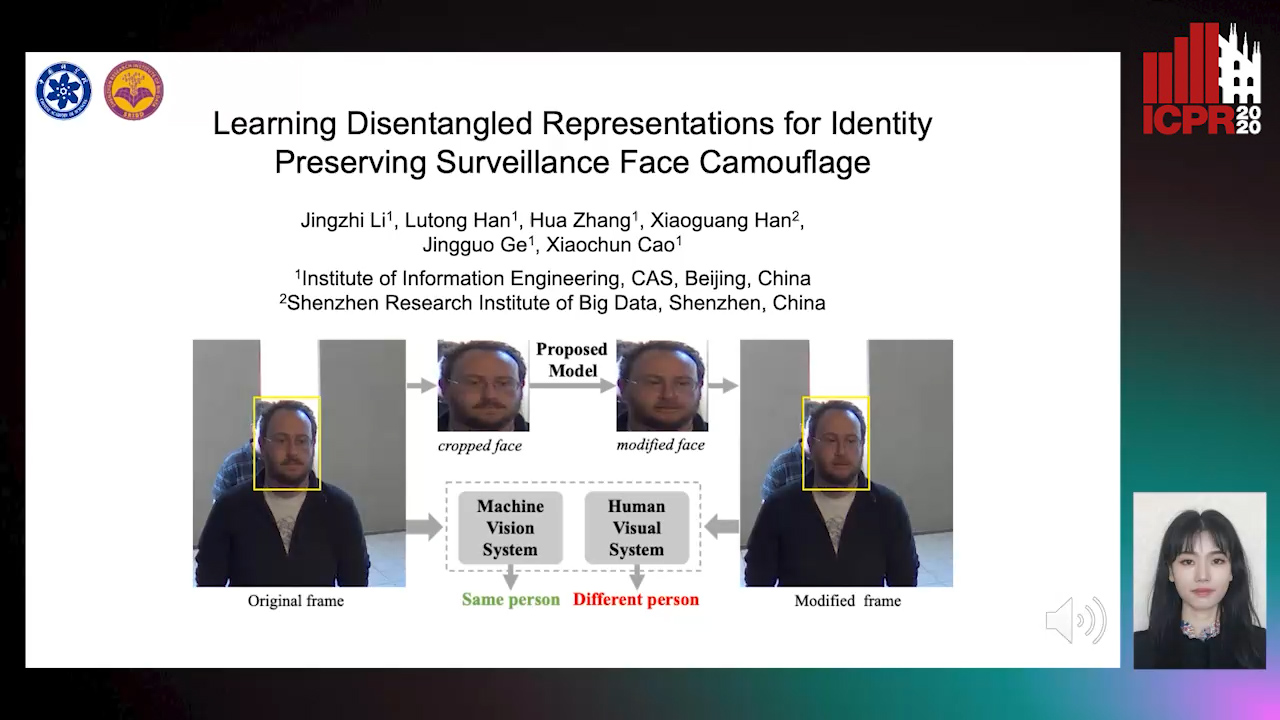

Learning Disentangled Representations for Identity Preserving Surveillance Face Camouflage

Jingzhi Li, Lutong Han, Hua Zhang, Xiaoguang Han, Jingguo Ge, Xiaochu Cao

Auto-TLDR; Individual Face Privacy under Surveillance Scenario with Multi-task Loss Function

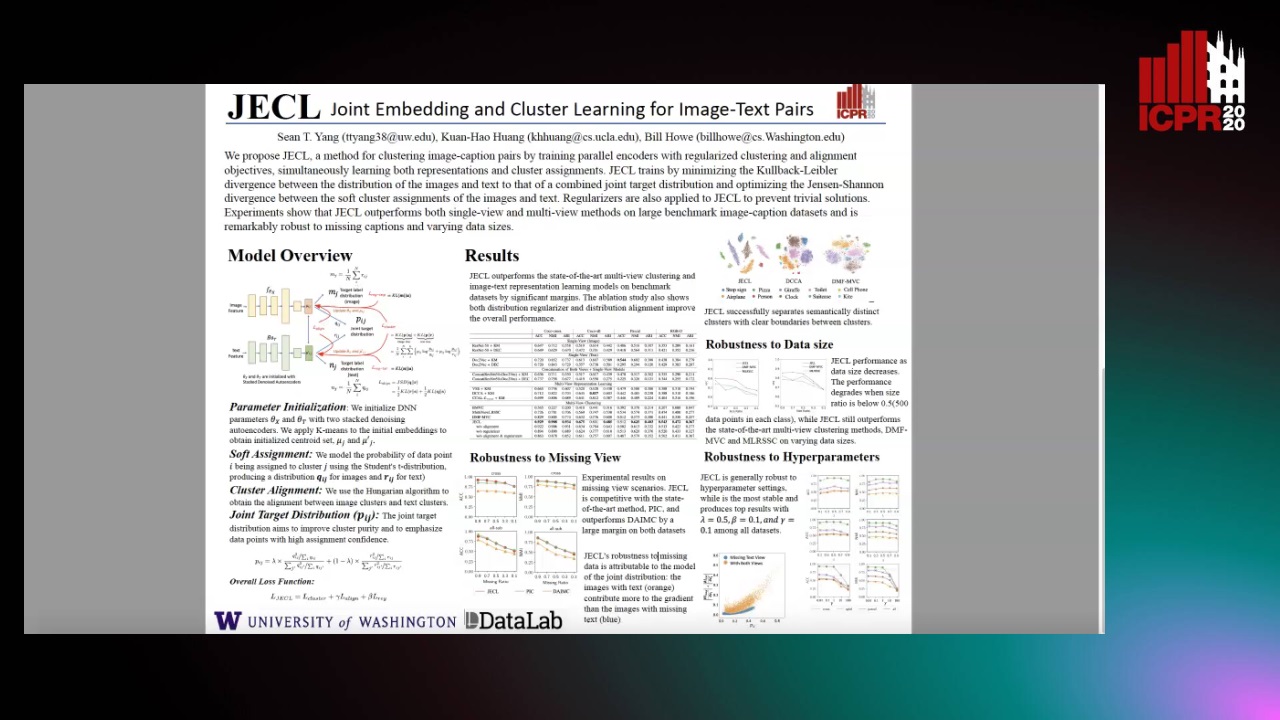

JECL: Joint Embedding and Cluster Learning for Image-Text Pairs

Sean Yang, Kuan-Hao Huang, Bill Howe

Auto-TLDR; JECL: Clustering Image-Caption Pairs with Parallel Encoders and Regularized Clusters

Multi-Modal Deep Clustering: Unsupervised Partitioning of Images

Auto-TLDR; Multi-Modal Deep Clustering for Unlabeled Images

A Duplex Spatiotemporal Filtering Network for Video-Based Person Re-Identification

Chong Zheng, Ping Wei, Nanning Zheng

Auto-TLDR; Duplex Spatiotemporal Filtering Network for Person Re-identification in Videos

Local Clustering with Mean Teacher for Semi-Supervised Learning

Zexi Chen, Benjamin Dutton, Bharathkumar Ramachandra, Tianfu Wu, Ranga Raju Vatsavai

Auto-TLDR; Local Clustering for Semi-supervised Learning