A Duplex Spatiotemporal Filtering Network for Video-Based Person Re-Identification

Chong Zheng, Ping Wei, Nanning Zheng

Auto-TLDR; Duplex Spatiotemporal Filtering Network for Person Re-identification in Videos

Similar papers

Not 3D Re-ID: Simple Single Stream 2D Convolution for Robust Video Re-Identification

Auto-TLDR; ResNet50-IBN for Video-based Person Re-Identification using Single Stream 2D Convolution Network

Attention-Based Model with Attribute Classification for Cross-Domain Person Re-Identification

Simin Xu, Lingkun Luo, Shiqiang Hu

Auto-TLDR; An attention-based model with attribute classification for cross-domain person re-identification

Rethinking ReID: Multi-Feature Fusion Person Re-Identification Based on Orientation Constraints

Mingjing Ai, Guozhi Shan, Bo Liu, Tianyang Liu

Auto-TLDR; Person Re-identification with Orientation Constrained Network

Decoupled Self-Attention Module for Person Re-Identification

Chao Zhao, Zhenyu Zhang, Jian Yang, Yan Yan

Auto-TLDR; Decoupled Self-attention Module for Person Re-identification

Self and Channel Attention Network for Person Re-Identification

Asad Munir, Niki Martinel, Christian Micheloni

Auto-TLDR; SCAN: Self and Channel Attention Network for Person Re-identification

Top-DB-Net: Top DropBlock for Activation Enhancement in Person Re-Identification

Auto-TLDR; Top-DB-Net for Person Re-Identification using Top DropBlock

Deep Top-Rank Counter Metric for Person Re-Identification

Chen Chen, Hao Dou, Xiyuan Hu, Silong Peng

Auto-TLDR; Deep Top-Rank Counter Metric for Person Re-identification

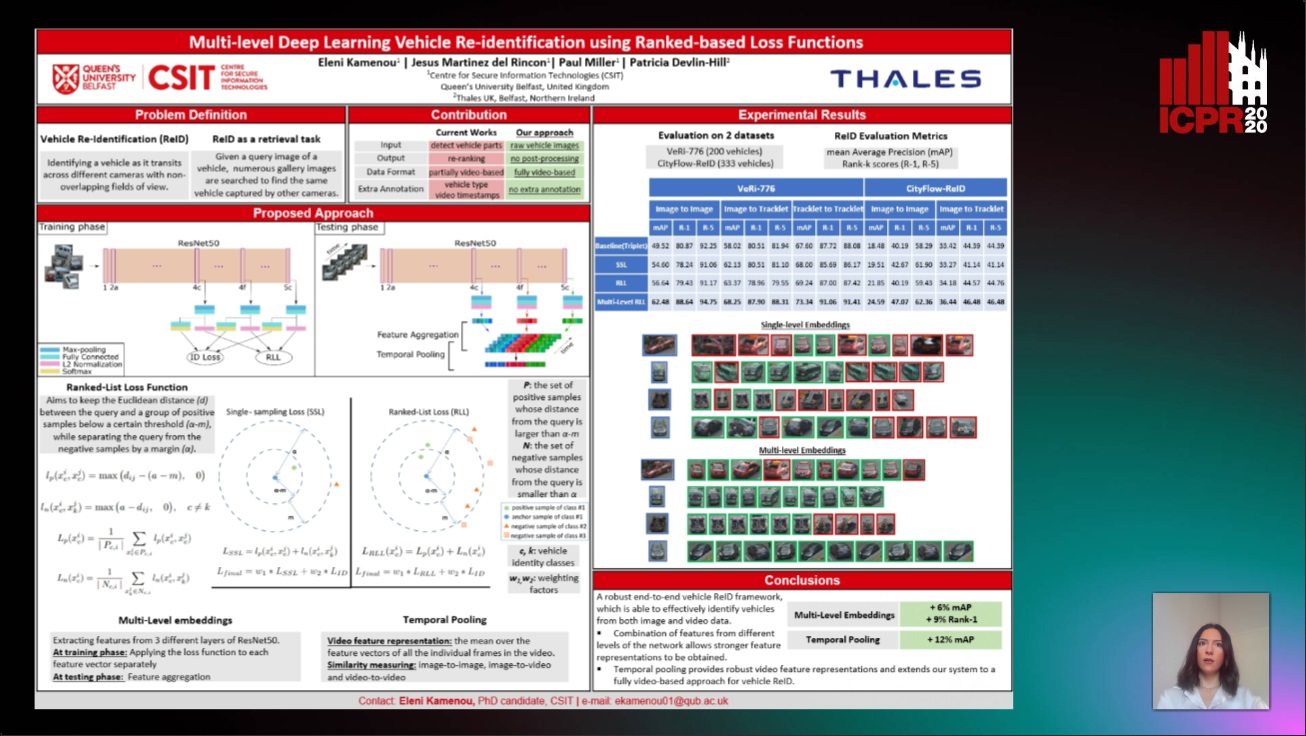

Multi-Level Deep Learning Vehicle Re-Identification Using Ranked-Based Loss Functions

Eleni Kamenou, Jesus Martinez-Del-Rincon, Paul Miller, Patricia Devlin-Hill

Auto-TLDR; Multi-Level Re-identification Network for Vehicle Re-Identification

Recurrent Deep Attention Network for Person Re-Identification

Changhao Wang, Jun Zhou, Xianfei Duan, Guanwen Zhang, Wei Zhou

Auto-TLDR; Recurrent Deep Attention Network for Person Re-identification

Attentive Part-Aware Networks for Partial Person Re-Identification

Lijuan Huo, Chunfeng Song, Zhengyi Liu, Zhaoxiang Zhang

Auto-TLDR; Part-Aware Learning for Partial Person Re-identification

Adaptive L2 Regularization in Person Re-Identification

Xingyang Ni, Liang Fang, Heikki Juhani Huttunen

Auto-TLDR; AdaptiveReID: Adaptive L2 Regularization for Person Re-identification

Building Computationally Efficient and Well-Generalizing Person Re-Identification Models with Metric Learning

Vladislav Sovrasov, Dmitry Sidnev

Auto-TLDR; Cross-Domain Generalization in Person Re-identification using Omni-Scale Network

G-FAN: Graph-Based Feature Aggregation Network for Video Face Recognition

He Zhao, Yongjie Shi, Xin Tong, Jingsi Wen, Xianghua Ying, Jinshi Hongbin Zha

Auto-TLDR; Graph-based Feature Aggregation Network for Video Face Recognition

How Important Are Faces for Person Re-Identification?

Julia Dietlmeier, Joseph Antony, Kevin Mcguinness, Noel E O'Connor

Auto-TLDR; Anonymization of Person Re-identification Datasets with Face Detection and Blurring

PHNet: Parasite-Host Network for Video Crowd Counting

Shiqiao Meng, Jiajie Li, Weiwei Guo, Jinfeng Jiang, Lai Ye

Auto-TLDR; PHNet: A Parasite-Host Network for Video Crowd Counting

Multi-Scale Cascading Network with Compact Feature Learning for RGB-Infrared Person Re-Identification

Can Zhang, Hong Liu, Wei Guo, Mang Ye

Auto-TLDR; Multi-Scale Part-Aware Cascading for RGB-Infrared Person Re-identification

Progressive Learning Algorithm for Efficient Person Re-Identification

Zhen Li, Hanyang Shao, Liang Niu, Nian Xue

Auto-TLDR; Progressive Learning Algorithm for Large-Scale Person Re-Identification

Pose Variation Adaptation for Person Re-Identification

Lei Zhang, Na Jiang, Qishuai Diao, Yue Xu, Zhong Zhou, Wei Wu

Auto-TLDR; Pose Transfer Generative Adversarial Network for Person Re-identification

TSMSAN: A Three-Stream Multi-Scale Attentive Network for Video Saliency Detection

Jingwen Yang, Guanwen Zhang, Wei Zhou

Auto-TLDR; Three-stream Multi-scale attentive network for video saliency detection in dynamic scenes

Open-World Group Retrieval with Ambiguity Removal: A Benchmark

Ling Mei, Jian-Huang Lai, Zhanxiang Feng, Xiaohua Xie

Auto-TLDR; P2GSM-AR: Re-identifying changing groups of people under the open-world and group-ambiguity scenarios

A Grid-Based Representation for Human Action Recognition

Soufiane Lamghari, Guillaume-Alexandre Bilodeau, Nicolas Saunier

Auto-TLDR; GRAR: Grid-based Representation for Action Recognition in Videos

MixTConv: Mixed Temporal Convolutional Kernels for Efficient Action Recognition

Kaiyu Shan, Yongtao Wang, Zhi Tang, Ying Chen, Yangyan Li

Auto-TLDR; Mixed Temporal Convolution for Action Recognition

Multi-Label Contrastive Focal Loss for Pedestrian Attribute Recognition

Xiaoqiang Zheng, Zhenxia Yu, Lin Chen, Fan Zhu, Shilong Wang

Auto-TLDR; Multi-label Contrastive Focal Loss for Pedestrian Attribute Recognition

SAT-Net: Self-Attention and Temporal Fusion for Facial Action Unit Detection

Zhihua Li, Zheng Zhang, Lijun Yin

Auto-TLDR; Temporal Fusion and Self-Attention Network for Facial Action Unit Detection

Convolutional Feature Transfer via Camera-Specific Discriminative Pooling for Person Re-Identification

Tetsu Matsukawa, Einoshin Suzuki

Auto-TLDR; A small-scale CNN feature transfer method for person re-identification

A Base-Derivative Framework for Cross-Modality RGB-Infrared Person Re-Identification

Hong Liu, Ziling Miao, Bing Yang, Runwei Ding

Auto-TLDR; Cross-modality RGB-Infrared Person Re-identification with Auxiliary Modalities

RGB-Infrared Person Re-Identification Via Image Modality Conversion

Huangpeng Dai, Qing Xie, Yanchun Ma, Yongjian Liu, Shengwu Xiong

Auto-TLDR; CE2L: A Novel Network for Cross-Modality Re-identification with Feature Alignment

Flow-Guided Spatial Attention Tracking for Egocentric Activity Recognition

Auto-TLDR; Flow-guided spatial attention tracking for egocentric activity recognition

Video Object Detection Using Object's Motion Context and Spatio-Temporal Feature Aggregation

Jaekyum Kim, Junho Koh, Byeongwon Lee, Seungji Yang, Jun Won Choi

Auto-TLDR; Video Object Detection Using Spatio-Temporal Aggregated Features and Gated Attention Network

ACCLVOS: Atrous Convolution with Spatial-Temporal ConvLSTM for Video Object Segmentation

Muzhou Xu, Shan Zong, Chunping Liu, Shengrong Gong, Zhaohui Wang, Yu Xia

Auto-TLDR; Semi-supervised Video Object Segmentation using U-shape Convolution and ConvLSTM

Attention-Driven Body Pose Encoding for Human Activity Recognition

Bappaditya Debnath, Swagat Kumar, Marry O'Brien, Ardhendu Behera

Auto-TLDR; Attention-based Body Pose Encoding for Human Activity Recognition

Video-Based Facial Expression Recognition Using Graph Convolutional Networks

Daizong Liu, Hongting Zhang, Pan Zhou

Auto-TLDR; Graph Convolutional Network for Video-based Facial Expression Recognition

SCA Net: Sparse Channel Attention Module for Action Recognition

Hang Song, Yonghong Song, Yuanlin Zhang

Auto-TLDR; SCA Net: Efficient Group Convolution for Sparse Channel Attention

MFI: Multi-Range Feature Interchange for Video Action Recognition

Sikai Bai, Qi Wang, Xuelong Li

Auto-TLDR; Multi-range Feature Interchange Network for Action Recognition in Videos

An Improved Bilinear Pooling Method for Image-Based Action Recognition

Auto-TLDR; An improved bilinear pooling method for image-based action recognition

Two-Stream Temporal Convolutional Network for Dynamic Facial Attractiveness Prediction

Nina Weng, Jiahao Wang, Annan Li, Yunhong Wang

Auto-TLDR; 2S-TCN: A Two-Stream Temporal Convolutional Network for Dynamic Facial Attractiveness Prediction

Attention-Based Deep Metric Learning for Near-Duplicate Video Retrieval

Kuan-Hsun Wang, Chia Chun Cheng, Yi-Ling Chen, Yale Song, Shang-Hong Lai

Auto-TLDR; Attention-based Deep Metric Learning for Near-duplicate Video Retrieval

Channel-Wise Dense Connection Graph Convolutional Network for Skeleton-Based Action Recognition

Michael Lao Banteng, Zhiyong Wu

Auto-TLDR; Two-stream channel-wise dense connection GCN for human action recognition

Vision-Based Multi-Modal Framework for Action Recognition

Djamila Romaissa Beddiar, Mourad Oussalah, Brahim Nini

Auto-TLDR; Multi-modal Framework for Human Activity Recognition Using RGB, Depth and Skeleton Data

Learning Object Deformation and Motion Adaption for Semi-Supervised Video Object Segmentation

Xiaoyang Zheng, Xin Tan, Jianming Guo, Lizhuang Ma

Auto-TLDR; Semi-supervised Video Object Segmentation with Mask-propagation-based Model

Progressive Unsupervised Domain Adaptation for Image-Based Person Re-Identification

Mingliang Yang, Da Huang, Jing Zhao

Auto-TLDR; Progressive Unsupervised Domain Adaptation for Person Re-Identification

You Ought to Look Around: Precise, Large Span Action Detection

Ge Pan, Zhang Han, Fan Yu, Yonghong Song, Yuanlin Zhang, Han Yuan

Auto-TLDR; YOLA: Local Feature Extraction for Action Localization with Variable receptive field

Polynomial Universal Adversarial Perturbations for Person Re-Identification

Wenjie Ding, Xing Wei, Rongrong Ji, Xiaopeng Hong, Yihong Gong

Auto-TLDR; Polynomial Universal Adversarial Perturbation for Re-identification Methods

Attention Pyramid Module for Scene Recognition

Zhinan Qiao, Xiaohui Yuan, Chengyuan Zhuang, Abolfazl Meyarian

Auto-TLDR; Attention Pyramid Module for Multi-Scale Scene Recognition

Temporal Feature Enhancement Network with External Memory for Object Detection in Surveillance Video

Masato Fujitake, Akihiro Sugimoto

Auto-TLDR; Temporal Attention Based External Memory Network for Surveillance Object Detection

Siamese Dynamic Mask Estimation Network for Fast Video Object Segmentation

Dexiang Hong, Guorong Li, Kai Xu, Li Su, Qingming Huang

Auto-TLDR; Siamese Dynamic Mask Estimation for Video Object Segmentation

RWF-2000: An Open Large Scale Video Database for Violence Detection

Ming Cheng, Kunjing Cai, Ming Li

Auto-TLDR; Flow Gated Network for Violence Detection in Surveillance Cameras

VTT: Long-Term Visual Tracking with Transformers

Tianling Bian, Yang Hua, Tao Song, Zhengui Xue, Ruhui Ma, Neil Robertson, Haibing Guan

Auto-TLDR; Visual Tracking Transformer for long-term visual tracking