Progressive Unsupervised Domain Adaptation for Image-Based Person Re-Identification

Mingliang Yang, Da Huang, Jing Zhao

Auto-TLDR; Progressive Unsupervised Domain Adaptation for Person Re-Identification

Similar papers

Self-Paced Bottom-Up Clustering Network with Side Information for Person Re-Identification

Mingkun Li, Chun-Guang Li, Ruo-Pei Guo, Jun Guo

Auto-TLDR; Self-Paced Bottom-up Clustering Network with Side Information for Unsupervised Person Re-identification

Unsupervised Domain Adaptation for Person Re-Identification through Source-Guided Pseudo-Labeling

Fabian Dubourvieux, Romaric Audigier, Angélique Loesch, Ainouz-Zemouche Samia, Stéphane Canu

Auto-TLDR; Pseudo-labeling for Unsupervised Domain Adaptation for Person Re-Identification

Semi-Supervised Person Re-Identification by Attribute Similarity Guidance

Peixian Hong, Ancong Wu, Wei-Shi Zheng

Auto-TLDR; Attribute Similarity Guidance Loss for Semi-supervised Person Re-identification

CANU-ReID: A Conditional Adversarial Network for Unsupervised Person Re-IDentification

Guillaume Delorme, Yihong Xu, Stéphane Lathuiliere, Radu Horaud, Xavier Alameda-Pineda

Auto-TLDR; Unsupervised Person Re-Identification with Clustering and Adversarial Learning

Attention-Based Model with Attribute Classification for Cross-Domain Person Re-Identification

Simin Xu, Lingkun Luo, Shiqiang Hu

Auto-TLDR; An attention-based model with attribute classification for cross-domain person re-identification

Progressive Learning Algorithm for Efficient Person Re-Identification

Zhen Li, Hanyang Shao, Liang Niu, Nian Xue

Auto-TLDR; Progressive Learning Algorithm for Large-Scale Person Re-Identification

Domain Generalized Person Re-Identification Via Cross-Domain Episodic Learning

Ci-Siang Lin, Yuan Chia Cheng, Yu-Chiang Frank Wang

Auto-TLDR; Domain-Invariant Person Re-identification with Episodic Learning

Building Computationally Efficient and Well-Generalizing Person Re-Identification Models with Metric Learning

Vladislav Sovrasov, Dmitry Sidnev

Auto-TLDR; Cross-Domain Generalization in Person Re-identification using Omni-Scale Network

Adaptive L2 Regularization in Person Re-Identification

Xingyang Ni, Liang Fang, Heikki Juhani Huttunen

Auto-TLDR; AdaptiveReID: Adaptive L2 Regularization for Person Re-identification

Semi-Supervised Domain Adaptation Via Selective Pseudo Labeling and Progressive Self-Training

Auto-TLDR; Semi-supervised Domain Adaptation with Pseudo Labels

Not 3D Re-ID: Simple Single Stream 2D Convolution for Robust Video Re-Identification

Auto-TLDR; ResNet50-IBN for Video-based Person Re-Identification using Single Stream 2D Convolution Network

Online Domain Adaptation for Person Re-Identification with a Human in the Loop

Rita Delussu, Lorenzo Putzu, Giorgio Fumera, Fabio Roli

Auto-TLDR; Human-in-the-loop for Person Re-Identification in Infeasible Applications

Self and Channel Attention Network for Person Re-Identification

Asad Munir, Niki Martinel, Christian Micheloni

Auto-TLDR; SCAN: Self and Channel Attention Network for Person Re-identification

Deep Top-Rank Counter Metric for Person Re-Identification

Chen Chen, Hao Dou, Xiyuan Hu, Silong Peng

Auto-TLDR; Deep Top-Rank Counter Metric for Person Re-identification

Pose Variation Adaptation for Person Re-Identification

Lei Zhang, Na Jiang, Qishuai Diao, Yue Xu, Zhong Zhou, Wei Wu

Auto-TLDR; Pose Transfer Generative Adversarial Network for Person Re-identification

Rethinking ReID: Multi-Feature Fusion Person Re-Identification Based on Orientation Constraints

Mingjing Ai, Guozhi Shan, Bo Liu, Tianyang Liu

Auto-TLDR; Person Re-identification with Orientation Constrained Network

Abstract Slides Poster Similar

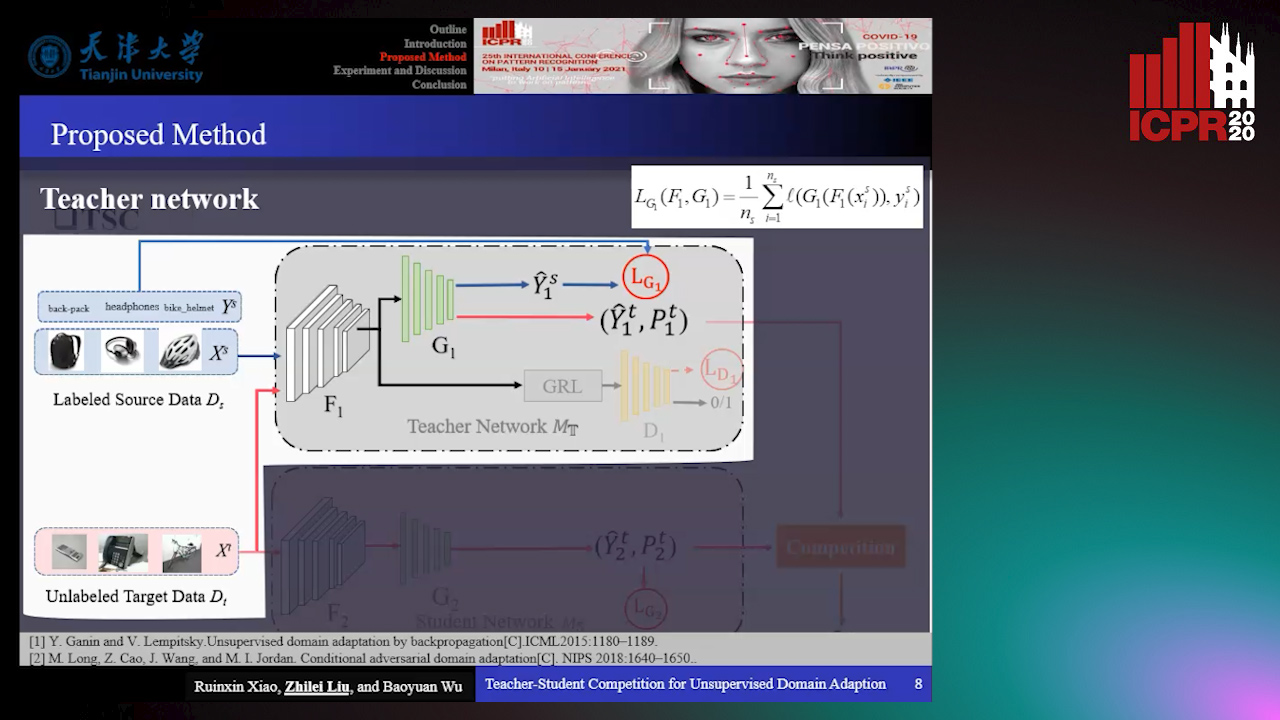

Teacher-Student Competition for Unsupervised Domain Adaptation

Ruixin Xiao, Zhilei Liu, Baoyuan Wu

Auto-TLDR; Unsupervised Domain Adaption with Teacher-Student Competition

Attentive Part-Aware Networks for Partial Person Re-Identification

Lijuan Huo, Chunfeng Song, Zhengyi Liu, Zhaoxiang Zhang

Auto-TLDR; Part-Aware Learning for Partial Person Re-identification

Polynomial Universal Adversarial Perturbations for Person Re-Identification

Wenjie Ding, Xing Wei, Rongrong Ji, Xiaopeng Hong, Yihong Gong

Auto-TLDR; Polynomial Universal Adversarial Perturbation for Re-identification Methods

Recurrent Deep Attention Network for Person Re-Identification

Changhao Wang, Jun Zhou, Xianfei Duan, Guanwen Zhang, Wei Zhou

Auto-TLDR; Recurrent Deep Attention Network for Person Re-identification

SSDL: Self-Supervised Domain Learning for Improved Face Recognition

Samadhi Poornima Kumarasinghe Wickrama Arachchilage, Ebroul Izquierdo

Auto-TLDR; Self-supervised Domain Learning for Face Recognition in unconstrained environments

Energy-Constrained Self-Training for Unsupervised Domain Adaptation

Xiaofeng Liu, Xiongchang Liu, Bo Hu, Jun Lu, Jonghye Woo, Jane You

Auto-TLDR; Unsupervised Domain Adaptation with Energy Function Minimization

Abstract Slides Poster Similar

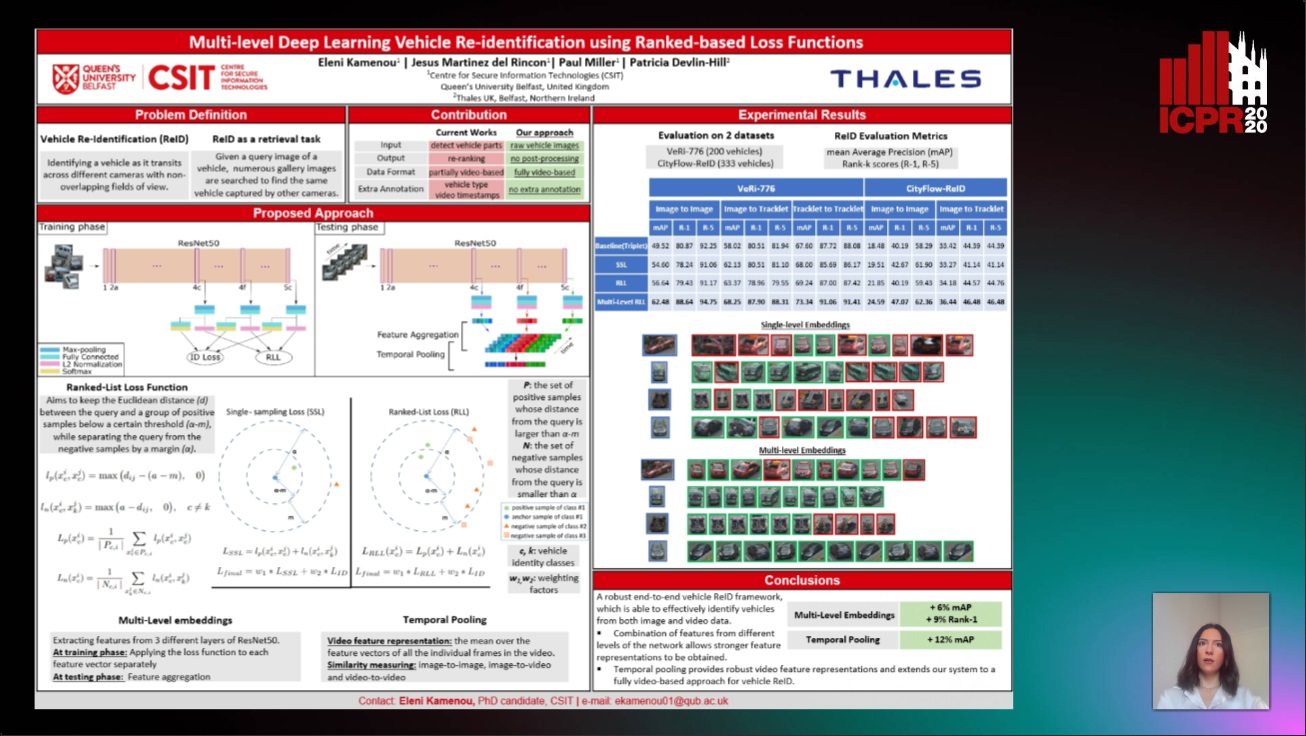

Multi-Level Deep Learning Vehicle Re-Identification Using Ranked-Based Loss Functions

Eleni Kamenou, Jesus Martinez-Del-Rincon, Paul Miller, Patricia Devlin-Hill

Auto-TLDR; Multi-Level Re-identification Network for Vehicle Re-Identification

Spatial-Aware GAN for Unsupervised Person Re-Identification

Fangneng Zhan, Changgong Zhang

Auto-TLDR; Unsupervised Domain Adaptation for Person Re-Identification

RGB-Infrared Person Re-Identification Via Image Modality Conversion

Huangpeng Dai, Qing Xie, Yanchun Ma, Yongjian Liu, Shengwu Xiong

Auto-TLDR; CE2L: A Novel Network for Cross-Modality Re-identification with Feature Alignment

Learning Embeddings for Image Clustering: An Empirical Study of Triplet Loss Approaches

Kalun Ho, Janis Keuper, Franz-Josef Pfreundt, Margret Keuper

Auto-TLDR; Clustering Objectives for K-means and Correlation Clustering Using Triplet Loss

Top-DB-Net: Top DropBlock for Activation Enhancement in Person Re-Identification

Auto-TLDR; Top-DB-Net for Person Re-Identification using Top DropBlock

Multi-Label Contrastive Focal Loss for Pedestrian Attribute Recognition

Xiaoqiang Zheng, Zhenxia Yu, Lin Chen, Fan Zhu, Shilong Wang

Auto-TLDR; Multi-label Contrastive Focal Loss for Pedestrian Attribute Recognition

DAIL: Dataset-Aware and Invariant Learning for Face Recognition

Gaoang Wang, Chen Lin, Tianqiang Liu, Mingwei He, Jiebo Luo

Auto-TLDR; DAIL: Dataset-Aware and Invariant Learning for Face Recognition

Stochastic Label Refinery: Toward Better Target Label Distribution

Xi Fang, Jiancheng Yang, Bingbing Ni

Auto-TLDR; Stochastic Label Refinery for Deep Supervised Learning

How Important Are Faces for Person Re-Identification?

Julia Dietlmeier, Joseph Antony, Kevin Mcguinness, Noel E O'Connor

Auto-TLDR; Anonymization of Person Re-identification Datasets with Face Detection and Blurring

Convolutional Feature Transfer via Camera-Specific Discriminative Pooling for Person Re-Identification

Tetsu Matsukawa, Einoshin Suzuki

Auto-TLDR; A small-scale CNN feature transfer method for person re-identification

Multi-Scale Cascading Network with Compact Feature Learning for RGB-Infrared Person Re-Identification

Can Zhang, Hong Liu, Wei Guo, Mang Ye

Auto-TLDR; Multi-Scale Part-Aware Cascading for RGB-Infrared Person Re-identification

Decoupled Self-Attention Module for Person Re-Identification

Chao Zhao, Zhenyu Zhang, Jian Yang, Yan Yan

Auto-TLDR; Decoupled Self-attention Module for Person Re-identification

Sample-Dependent Distance for 1 : N Identification Via Discriminative Feature Selection

Auto-TLDR; Feature Selection Mask for 1:N Identification Problems with Binary Features

A Unified Framework for Distance-Aware Domain Adaptation

Fei Wang, Youdong Ding, Huan Liang, Yuzhen Gao, Wenqi Che

Auto-TLDR; distance-aware domain adaptation

Open-World Group Retrieval with Ambiguity Removal: A Benchmark

Ling Mei, Jian-Huang Lai, Zhanxiang Feng, Xiaohua Xie

Auto-TLDR; P2GSM-AR: Re-identifying changing groups of people under the open-world and group-ambiguity scenarios

Self-Training for Domain Adaptive Scene Text Detection

Yudi Chen, Wei Wang, Yu Zhou, Fei Yang, Dongbao Yang, Weiping Wang

Auto-TLDR; A self-training framework for image-based scene text detection

Foreground-Focused Domain Adaption for Object Detection

Auto-TLDR; Unsupervised Domain Adaptation for Unsupervised Object Detection

Revisiting ImprovedGAN with Metric Learning for Semi-Supervised Learning

Jaewoo Park, Yoon Gyo Jung, Andrew Teoh

Auto-TLDR; Improving ImprovedGAN with Metric Learning for Semi-supervised Learning

Class Conditional Alignment for Partial Domain Adaptation

Mohsen Kheirandishfard, Fariba Zohrizadeh, Farhad Kamangar

Auto-TLDR; Multi-class Adversarial Adaptation for Partial Domain Adaptation

Open Set Domain Recognition Via Attention-Based GCN and Semantic Matching Optimization

Xinxing He, Yuan Yuan, Zhiyu Jiang

Auto-TLDR; Attention-based GCN and Semantic Matching Optimization for Open Set Domain Recognition

Progressive Cluster Purification for Unsupervised Feature Learning

Yifei Zhang, Chang Liu, Yu Zhou, Wei Wang, Weiping Wang, Qixiang Ye

Auto-TLDR; Progressive Cluster Purification for Unsupervised Feature Learning

Towards Robust Learning with Different Label Noise Distributions

Diego Ortego, Eric Arazo, Paul Albert, Noel E O'Connor, Kevin Mcguinness

Auto-TLDR; Distribution Robust Pseudo-Labeling with Semi-supervised Learning

Unsupervised Domain Adaptation with Multiple Domain Discriminators and Adaptive Self-Training

Teo Spadotto, Marco Toldo, Umberto Michieli, Pietro Zanuttigh

Auto-TLDR; Unsupervised Domain Adaptation for Semantic Segmentation of Urban Scenes

A Base-Derivative Framework for Cross-Modality RGB-Infrared Person Re-Identification

Hong Liu, Ziling Miao, Bing Yang, Runwei Ding

Auto-TLDR; Cross-modality RGB-Infrared Person Re-identification with Auxiliary Modalities

An Adaptive Video-To-Video Face Identification System Based on Self-Training

Eric Lopez-Lopez, Carlos V. Regueiro, Xosé M. Pardo

Auto-TLDR; Adaptive Video-to-Video Face Recognition using Dynamic Ensembles of SVMs

Constrained Spectral Clustering Network with Self-Training

Xinyue Liu, Shichong Yang, Linlin Zong

Auto-TLDR; Constrained Spectral Clustering Network: A Constrained Deep spectral clustering network
