Energy-Constrained Self-Training for Unsupervised Domain Adaptation

Xiaofeng Liu, Xiongchang Liu, Bo Hu, Jun Lu, Jonghye Woo, Jane You

Auto-TLDR; Unsupervised Domain Adaptation with Energy Function Minimization

Similar papers

Unsupervised Domain Adaptation with Multiple Domain Discriminators and Adaptive Self-Training

Teo Spadotto, Marco Toldo, Umberto Michieli, Pietro Zanuttigh

Auto-TLDR; Unsupervised Domain Adaptation for Semantic Segmentation of Urban Scenes

Abstract Slides Poster Similar

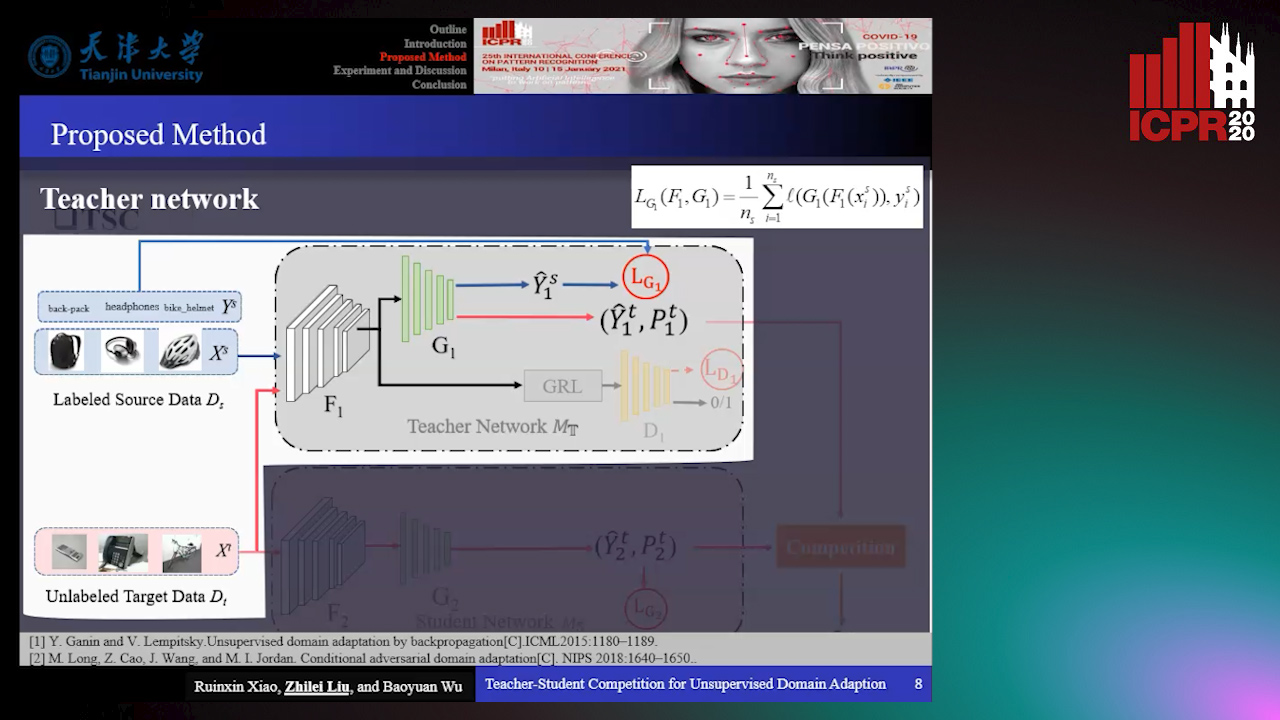

Teacher-Student Competition for Unsupervised Domain Adaptation

Ruixin Xiao, Zhilei Liu, Baoyuan Wu

Auto-TLDR; Unsupervised Domain Adaptation with Teacher-Student Competition

Semi-Supervised Domain Adaptation Via Selective Pseudo Labeling and Progressive Self-Training

Auto-TLDR; Semi-supervised Domain Adaptation with Pseudo Labels

Cross-Domain Semantic Segmentation of Urban Scenes Via Multi-Level Feature Alignment

Bin Zhang, Shengjie Zhao, Rongqing Zhang

Auto-TLDR; Cross-Domain Semantic Segmentation Using Generative Adversarial Networks

Class Conditional Alignment for Partial Domain Adaptation

Mohsen Kheirandishfard, Fariba Zohrizadeh, Farhad Kamangar

Auto-TLDR; Multi-class Adversarial Adaptation for Partial Domain Adaptation

Unsupervised Domain Adaptation for Person Re-Identification through Source-Guided Pseudo-Labeling

Fabian Dubourvieux, Romaric Audigier, Angélique Loesch, Ainouz-Zemouche Samia, Stéphane Canu

Auto-TLDR; Pseudo-labeling for Unsupervised Domain Adaptation for Person Re-Identification

Identity-Aware Facial Expression Recognition in Compressed Video

Xiaofeng Liu, Linghao Jin, Xu Han, Jun Lu, Jonghye Woo, Jane You

Auto-TLDR; Exploring Facial Expression Representation in Compressed Video with Mutual Information Minimization

Foreground-Focused Domain Adaption for Object Detection

Auto-TLDR; Unsupervised Domain Adaptation for Unsupervised Object Detection

Self-Supervised Domain Adaptation with Consistency Training

Liang Xiao, Jiaolong Xu, Dawei Zhao, Zhiyu Wang, Li Wang, Yiming Nie, Bin Dai

Auto-TLDR; Unsupervised Domain Adaptation for Image Classification

SSDL: Self-Supervised Domain Learning for Improved Face Recognition

Samadhi Poornima Kumarasinghe Wickrama Arachchilage, Ebroul Izquierdo

Auto-TLDR; Self-supervised Domain Learning for Face Recognition in unconstrained environments

Progressive Unsupervised Domain Adaptation for Image-Based Person Re-Identification

Mingliang Yang, Da Huang, Jing Zhao

Auto-TLDR; Progressive Unsupervised Domain Adaptation for Person Re-Identification

Adversarially Constrained Interpolation for Unsupervised Domain Adaptation

Mohamed Azzam, Aurele Tohokantche Gnanha, Hau-San Wong, Si Wu

Auto-TLDR; Unsupervised Domain Adaptation with Domain Mixup Strategy

Shape Consistent 2D Keypoint Estimation under Domain Shift

Levi Vasconcelos, Massimiliano Mancini, Davide Boscaini, Barbara Caputo, Elisa Ricci

Auto-TLDR; Deep Adaptation for Keypoint Prediction under Domain Shift

Stochastic Label Refinery: Toward Better Target Label Distribution

Xi Fang, Jiancheng Yang, Bingbing Ni

Auto-TLDR; Stochastic Label Refinery for Deep Supervised Learning

A Unified Framework for Distance-Aware Domain Adaptation

Fei Wang, Youdong Ding, Huan Liang, Yuzhen Gao, Wenqi Che

Auto-TLDR; Distance-Aware Domain Adaptation

Unsupervised Multi-Task Domain Adaptation

Auto-TLDR; Unsupervised Domain Adaptation with Multi-task Learning for Image Recognition

Enlarging Discriminative Power by Adding an Extra Class in Unsupervised Domain Adaptation

Hai Tran, Sumyeong Ahn, Taeyoung Lee, Yung Yi

Auto-TLDR; Unsupervised Domain Adaptation using Artificial Classes

CANU-ReID: A Conditional Adversarial Network for Unsupervised Person Re-IDentification

Guillaume Delorme, Yihong Xu, Stéphane Lathuiliere, Radu Horaud, Xavier Alameda-Pineda

Auto-TLDR; Unsupervised Person Re-Identification with Clustering and Adversarial Learning

Randomized Transferable Machine

Auto-TLDR; Randomized Transferable Machine for Suboptimal Feature-based Transfer Learning

Building Computationally Efficient and Well-Generalizing Person Re-Identification Models with Metric Learning

Vladislav Sovrasov, Dmitry Sidnev

Auto-TLDR; Cross-Domain Generalization in Person Re-identification using Omni-Scale Network

Text Recognition in Real Scenarios with a Few Labeled Samples

Jinghuang Lin, Cheng Zhanzhan, Fan Bai, Yi Niu, Shiliang Pu, Shuigeng Zhou

Auto-TLDR; Few-shot Adversarial Sequence Domain Adaptation for Scene Text Recognition

Supervised Domain Adaptation Using Graph Embedding

Lukas Hedegaard, Omar Ali Sheikh-Omar, Alexandros Iosifidis

Auto-TLDR; Domain Adaptation from the Perspective of Multi-view Graph Embedding and Dimensionality Reduction

Self-Training for Domain Adaptive Scene Text Detection

Yudi Chen, Wei Wang, Yu Zhou, Fei Yang, Dongbao Yang, Weiping Wang

Auto-TLDR; A self-training framework for image-based scene text detection

Towards Robust Learning with Different Label Noise Distributions

Diego Ortego, Eric Arazo, Paul Albert, Noel E O'Connor, Kevin Mcguinness

Auto-TLDR; Distribution Robust Pseudo-Labeling with Semi-supervised Learning

Semi-Supervised Person Re-Identification by Attribute Similarity Guidance

Peixian Hong, Ancong Wu, Wei-Shi Zheng

Auto-TLDR; Attribute Similarity Guidance Loss for Semi-supervised Person Re-identification

Online Domain Adaptation for Person Re-Identification with a Human in the Loop

Rita Delussu, Lorenzo Putzu, Giorgio Fumera, Fabio Roli

Auto-TLDR; Human-in-the-loop for Person Re-Identification in Infeasible Applications

Manual-Label Free 3D Detection Via an Open-Source Simulator

Zhen Yang, Chi Zhang, Zhaoxiang Zhang, Huiming Guo

Auto-TLDR; DA-VoxelNet: A Novel Domain Adaptive VoxelNet for LIDAR-based 3D Object Detection

A Simple Domain Shifting Network for Generating Low Quality Images

Guruprasad Hegde, Avinash Nittur Ramesh, Kanchana Vaishnavi Gandikota, Michael Möller, Roman Obermaisser

Auto-TLDR; Robotic Image Classification Using Quality degrading networks

Rethinking Domain Generalization Baselines

Francesco Cappio Borlino, Antonio D'Innocente, Tatiana Tommasi

Auto-TLDR; Style Transfer Data Augmentation for Domain Generalization

Joint Supervised and Self-Supervised Learning for 3D Real World Challenges

Antonio Alliegro, Davide Boscaini, Tatiana Tommasi

Auto-TLDR; Self-supervision for 3D Shape Classification and Segmentation in Point Clouds

DAIL: Dataset-Aware and Invariant Learning for Face Recognition

Gaoang Wang, Chen Lin, Tianqiang Liu, Mingwei He, Jiebo Luo

Auto-TLDR; DAIL: Dataset-Aware and Invariant Learning for Face Recognition

Open Set Domain Recognition Via Attention-Based GCN and Semantic Matching Optimization

Xinxing He, Yuan Yuan, Zhiyu Jiang

Auto-TLDR; Attention-based GCN and Semantic Matching Optimization for Open Set Domain Recognition

Domain Generalized Person Re-Identification Via Cross-Domain Episodic Learning

Ci-Siang Lin, Yuan Chia Cheng, Yu-Chiang Frank Wang

Auto-TLDR; Domain-Invariant Person Re-identification with Episodic Learning

Spatial-Aware GAN for Unsupervised Person Re-Identification

Fangneng Zhan, Changgong Zhang

Auto-TLDR; Unsupervised Domain Adaptation for Person Re-Identification

Self-Paced Bottom-Up Clustering Network with Side Information for Person Re-Identification

Mingkun Li, Chun-Guang Li, Ruo-Pei Guo, Jun Guo

Auto-TLDR; Self-Paced Bottom-up Clustering Network with Side Information for Unsupervised Person Re-identification

Attention-Based Model with Attribute Classification for Cross-Domain Person Re-Identification

Simin Xu, Lingkun Luo, Shiqiang Hu

Auto-TLDR; An attention-based model with attribute classification for cross-domain person re-identification

Rethinking Deep Active Learning: Using Unlabeled Data at Model Training

Oriane Siméoni, Mateusz Budnik, Yannis Avrithis, Guillaume Gravier

Auto-TLDR; Unlabeled Data for Active Learning

Quantifying the Use of Domain Randomization

Mohammad Ani, Hector Basevi, Ales Leonardis

Auto-TLDR; Evaluating Domain Randomization for Synthetic Image Generation by directly measuring the difference between realistic and synthetic data distributions

WeightAlign: Normalizing Activations by Weight Alignment

Xiangwei Shi, Yunqiang Li, Xin Liu, Jan Van Gemert

Auto-TLDR; WeightAlign: Normalization of Activations without Sample Statistics

Progressive Adversarial Semantic Segmentation

Abdullah-Al-Zubaer Imran, Demetri Terzopoulos

Auto-TLDR; Progressive Adversarial Semantic Segmentation for End-to-End Medical Image Segmenting

Deep Reinforcement Learning for Autonomous Driving by Transferring Visual Features

Hongli Zhou, Guanwen Zhang, Wei Zhou

Auto-TLDR; Deep Reinforcement Learning for Autonomous Driving by Transferring Visual Features

Generative Latent Implicit Conditional Optimization When Learning from Small Sample

Auto-TLDR; GLICO: Generative Latent Implicit Conditional Optimization for Small Sample Learning

Boundary-Aware Graph Convolution for Semantic Segmentation

Hanzhe Hu, Jinshi Cui, Hongbin Zha

Auto-TLDR; Boundary-Aware Graph Convolution for Semantic Segmentation

DAPC: Domain Adaptation People Counting Via Style-Level Transfer Learning and Scene-Aware Estimation

Na Jiang, Xingsen Wen, Zhiping Shi

Auto-TLDR; Domain Adaptation People counting via Style-Level Transfer Learning and Scene-Aware Estimation

Dealing with Scarce Labelled Data: Semi-Supervised Deep Learning with Mix Match for Covid-19 Detection Using Chest X-Ray Images

Saúl Calderón Ramirez, Raghvendra Giri, Shengxiang Yang, Armaghan Moemeni, Mario Umaña, David Elizondo, Jordina Torrents-Barrena, Miguel A. Molina-Cabello

Auto-TLDR; Semi-supervised Deep Learning for Covid-19 Detection using Chest X-rays

GAP: Quantifying the Generative Adversarial Set and Class Feature Applicability of Deep Neural Networks

Edward Collier, Supratik Mukhopadhyay

Auto-TLDR; Approximating Adversarial Learning in Deep Neural Networks Using Set and Class Adversaries

CASNet: Common Attribute Support Network for Image Instance and Panoptic Segmentation

Xiaolong Liu, Yuqing Hou, Anbang Yao, Yurong Chen, Keqiang Li

Auto-TLDR; Common Attribute Support Network for instance segmentation and panoptic segmentation

Efficient Shadow Detection and Removal Using Synthetic Data with Domain Adaptation

Rui Guo, Babajide Ayinde, Hao Sun

Auto-TLDR; Shadow Detection and Removal with Domain Adaptation and Synthetic Image Database