CASNet: Common Attribute Support Network for Image Instance and Panoptic Segmentation

Xiaolong Liu, Yuqing Hou, Anbang Yao, Yurong Chen, Keqiang Li

Auto-TLDR; Common Attribute Support Network for instance segmentation and panoptic segmentation

Similar papers

Learning a Dynamic High-Resolution Network for Multi-Scale Pedestrian Detection

Mengyuan Ding, Shanshan Zhang, Jian Yang

Auto-TLDR; Learnable Dynamic HRNet for Pedestrian Detection

SFPN: Semantic Feature Pyramid Network for Object Detection

Auto-TLDR; SFPN: Semantic Feature Pyramid Network to Address Information Dilution Issue in FPN

A Novel Region of Interest Extraction Layer for Instance Segmentation

Leonardo Rossi, Akbar Karimi, Andrea Prati

Auto-TLDR; Generic RoI Extractor for Two-Stage Neural Network for Instance Segmentation

End-To-End Deep Learning Methods for Automated Damage Detection in Extreme Events at Various Scales

Yongsheng Bai, Alper Yilmaz, Halil Sezen

Auto-TLDR; Robust Mask R-CNN for Crack Detection in Extreme Events

Foreground-Guided Vehicle Perception Framework

Kun Tian, Tong Zhou, Shiming Xiang, Chunhong Pan

Auto-TLDR; A foreground segmentation branch for vehicle detection

SyNet: An Ensemble Network for Object Detection in UAV Images

Auto-TLDR; SyNet: Combining Multi-Stage and Single-Stage Object Detection for Aerial Images

FourierNet: Compact Mask Representation for Instance Segmentation Using Differentiable Shape Decoders

Hamd Ul Moqeet Riaz, Nuri Benbarka, Andreas Zell

Auto-TLDR; FourierNet: A single-shot, anchor-free, fully convolutional instance segmentation method that predicts a shape vector

Scene Text Detection with Selected Anchors

Anna Zhu, Hang Du, Shengwu Xiong

Auto-TLDR; AS-RPN: Anchor Selection-based Region Proposal Network for Scene Text Detection

Triplet-Path Dilated Network for Detection and Segmentation of General Pathological Images

Jiaqi Luo, Zhicheng Zhao, Fei Su, Limei Guo

Auto-TLDR; Triplet-path Network for One-Stage Object Detection and Segmentation in Pathological Images

Boundary-Aware Graph Convolution for Semantic Segmentation

Hanzhe Hu, Jinshi Cui, Hongbin Zha

Auto-TLDR; Boundary-Aware Graph Convolution for Semantic Segmentation

Object Detection Model Based on Scene-Level Region Proposal Self-Attention

Yu Quan, Zhixin Li, Canlong Zhang, Huifang Ma

Auto-TLDR; Exploiting Semantic Information for Object Detection

One-Stage Multi-Task Detector for 3D Cardiac MR Imaging

Weizeng Lu, Xi Jia, Wei Chen, Nicolò Savioli, Antonio De Marvao, Linlin Shen, Declan O'Regan, Jinming Duan

Auto-TLDR; Multi-task Learning for Real-Time, simultaneous landmark location and bounding box detection in 3D space

Hybrid Cascade Point Search Network for High Precision Bar Chart Component Detection

Junyu Luo, Jinpeng Wang, Chin-Yew Lin

Auto-TLDR; Object Detection of Chart Components in Chart Images Using Point-based and Region-Based Object Detection Framework

An Accurate Threshold Insensitive Kernel Detector for Arbitrary Shaped Text

Xijun Qian, Yifan Liu, Yu-Bin Yang

Auto-TLDR; TIKD: threshold insensitive kernel detector for arbitrary shaped text

Yolo+FPN: 2D and 3D Fused Object Detection with an RGB-D Camera

Auto-TLDR; Yolo+FPN: Combining 2D and 3D Object Detection for Real-Time Object Detection

StrongPose: Bottom-up and Strong Keypoint Heat Map Based Pose Estimation

Auto-TLDR; StrongPose: A bottom-up box-free approach for human pose estimation and action recognition

FeatureNMS: Non-Maximum Suppression by Learning Feature Embeddings

Auto-TLDR; FeatureNMS: Non-Maximum Suppression for Multiple Object Detection

S-VoteNet: Deep Hough Voting with Spherical Proposal for 3D Object Detection

Yanxian Chen, Huimin Ma, Xi Li, Xiong Luo

Auto-TLDR; S-VoteNet: 3D Object Detection with Spherical Bounded Box Prediction

Joint Semantic-Instance Segmentation of 3D Point Clouds: Instance Separation and Semantic Fusion

Auto-TLDR; Joint Semantic Segmentation and Instance Separation of 3D Point Clouds

A Fine-Grained Dataset and Its Efficient Semantic Segmentation for Unstructured Driving Scenarios

Kai Andreas Metzger, Peter Mortimer, Hans J "Joe" Wuensche

Auto-TLDR; TAS500: A Semantic Segmentation Dataset for Autonomous Driving in Unstructured Environments

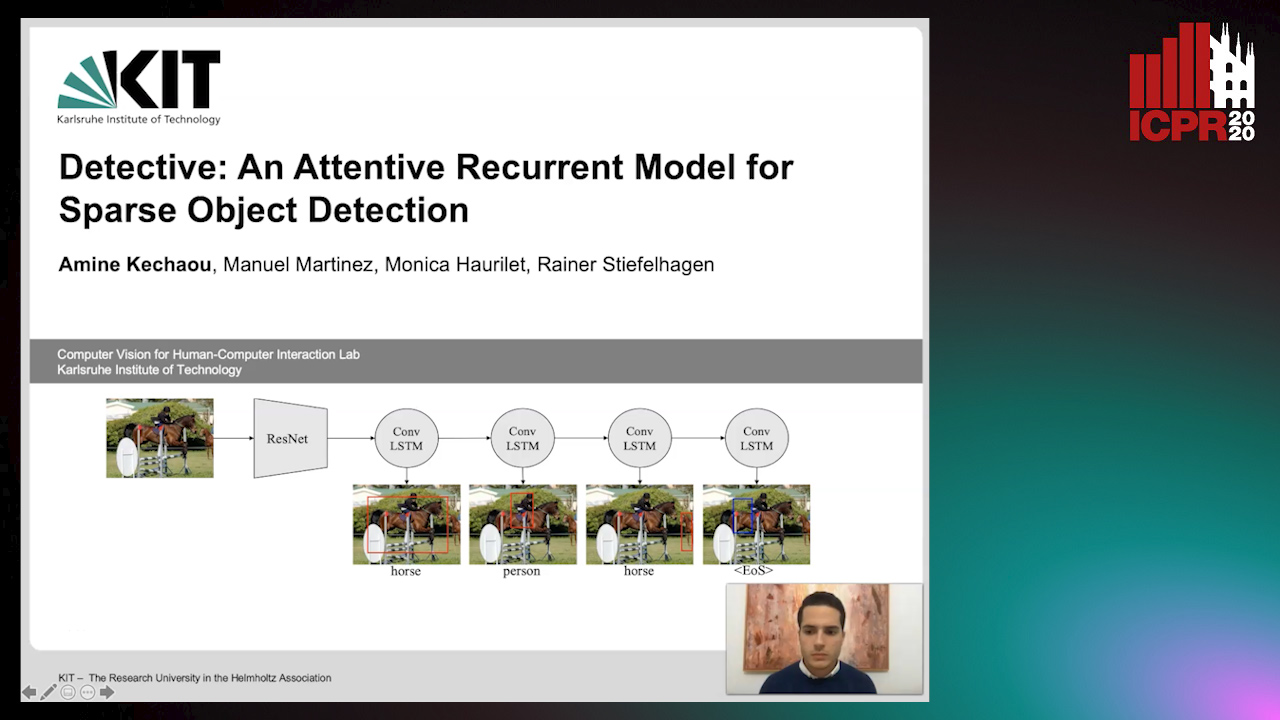

Detective: An Attentive Recurrent Model for Sparse Object Detection

Amine Kechaou, Manuel Martinez, Monica Haurilet, Rainer Stiefelhagen

Auto-TLDR; Detective: An attentive object detector that identifies objects in images in a sequential manner

Small Object Detection by Generative and Discriminative Learning

Yi Gu, Jie Li, Chentao Wu, Weijia Jia, Jianping Chen

Auto-TLDR; Generative and Discriminative Learning for Small Object Detection

GSTO: Gated Scale-Transfer Operation for Multi-Scale Feature Learning in Semantic Segmentation

Zhuoying Wang, Yongtao Wang, Zhi Tang, Yangyan Li, Ying Chen, Haibin Ling, Weisi Lin

Auto-TLDR; Gated Scale-Transfer Operation for Semantic Segmentation

Enhanced Vote Network for 3D Object Detection in Point Clouds

Auto-TLDR; A Vote Feature Enhancement Network for 3D Bounding Box Prediction

DualBox: Generating BBox Pair with Strong Correspondence Via Occlusion Pattern Clustering and Proposal Refinement

Zheng Ge, Chuyu Hu, Xin Huang, Baiqiao Qiu, Osamu Yoshie

Auto-TLDR; R2NMS: Combining Full and Visible Body Bounding Box for Dense Pedestrian Detection

Feature Embedding Based Text Instance Grouping for Largely Spaced and Occluded Text Detection

Pan Gao, Qi Wan, Renwu Gao, Linlin Shen

Auto-TLDR; Text Instance Embedding Based Feature Embeddings for Multiple Text Instance Grouping

Detecting Objects with High Object Region Percentage

Fen Fang, Qianli Xu, Liyuan Li, Ying Gu, Joo-Hwee Lim

Auto-TLDR; Faster R-CNN for High-ORP Object Detection

Bidirectional Matrix Feature Pyramid Network for Object Detection

Auto-TLDR; BMFPN: Bidirectional Matrix Feature Pyramid Network for Object Detection

MagnifierNet: Learning Efficient Small-Scale Pedestrian Detector towards Multiple Dense Regions

Qi Cheng, Mingqin Chen, Yingjie Wu, Fei Chen, Shiping Lin

Auto-TLDR; MagnifierNet: A Simple but Effective Small-Scale Pedestrian Detection Towards Multiple Dense Regions

Siamese Dynamic Mask Estimation Network for Fast Video Object Segmentation

Dexiang Hong, Guorong Li, Kai Xu, Li Su, Qingming Huang

Auto-TLDR; Siamese Dynamic Mask Estimation for Video Object Segmentation

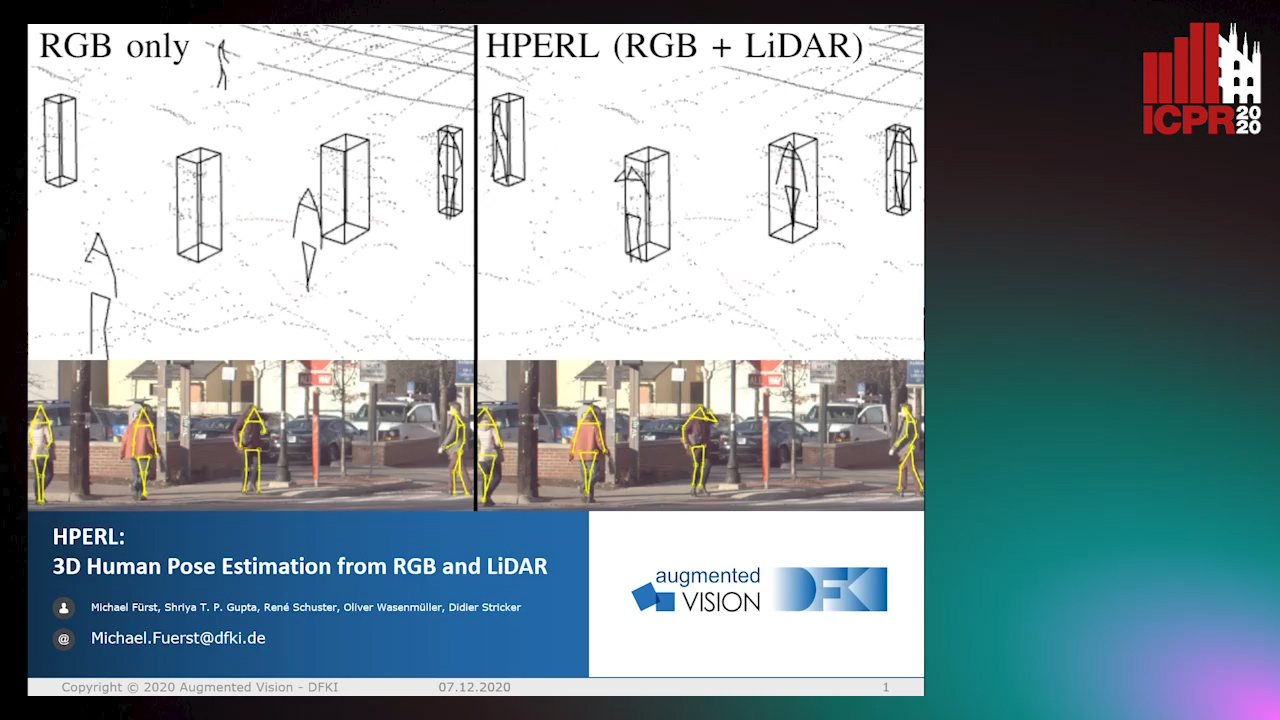

HPERL: 3D Human Pose Estimation from RGB and LiDAR

Michael Fürst, Shriya T.P. Gupta, René Schuster, Oliver Wasenmüler, Didier Stricker

Auto-TLDR; 3D Human Pose Estimation Using RGB and LiDAR with a Weakly-Supervised Approach

Machine-Learned Regularization and Polygonization of Building Segmentation Masks

Stefano Zorzi, Ksenia Bittner, Friedrich Fraundorfer

Auto-TLDR; Automatic Regularization and Polygonization of Building Segmentation masks using Generative Adversarial Network

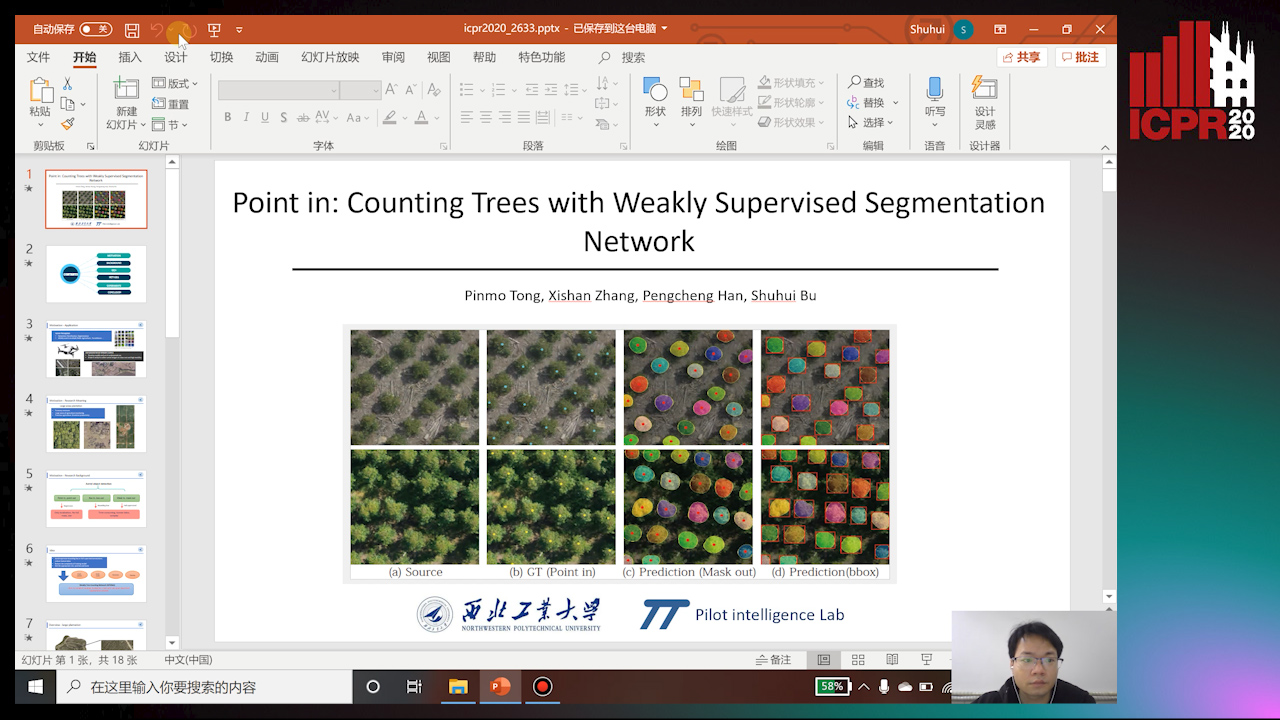

Point In: Counting Trees with Weakly Supervised Segmentation Network

Pinmo Tong, Shuhui Bu, Pengcheng Han

Auto-TLDR; Weakly Supervised Tree Counting Using a Deep Segmentation Network with Localization and Mask Prediction

Tiny Object Detection in Aerial Images

Jinwang Wang, Wen Yang, Haowen Guo, Ruixiang Zhang, Gui-Song Xia

Auto-TLDR; Tiny Object Detection in Aerial Images Using Multiple Center Points Based Learning Network

P2 Net: Augmented Parallel-Pyramid Net for Attention Guided Pose Estimation

Luanxuan Hou, Jie Cao, Yuan Zhao, Haifeng Shen, Jian Tang, Ran He

Auto-TLDR; Parallel-Pyramid Net with Partial Attention for Human Pose Estimation

EAGLE: Large-Scale Vehicle Detection Dataset in Real-World Scenarios Using Aerial Imagery

Seyed Majid Azimi, Reza Bahmanyar, Corentin Henry, Franz Kurz

Auto-TLDR; EAGLE: A Large-Scale Dataset for Multi-class Vehicle Detection with Object Orientation Information in Airborne Imagery

Construction Worker Hardhat-Wearing Detection Based on an Improved BiFPN

Chenyang Zhang, Zhiqiang Tian, Jingyi Song, Yaoyue Zheng, Bo Xu

Auto-TLDR; A One-Stage Object Detection Method for Hardhat-Wearing Detection on Construction Sites

Cascade Saliency Attention Network for Object Detection in Remote Sensing Images

Dayang Yu, Rong Zhang, Shan Qin

Auto-TLDR; Cascade Saliency Attention Network for Object Detection in Remote Sensing Images

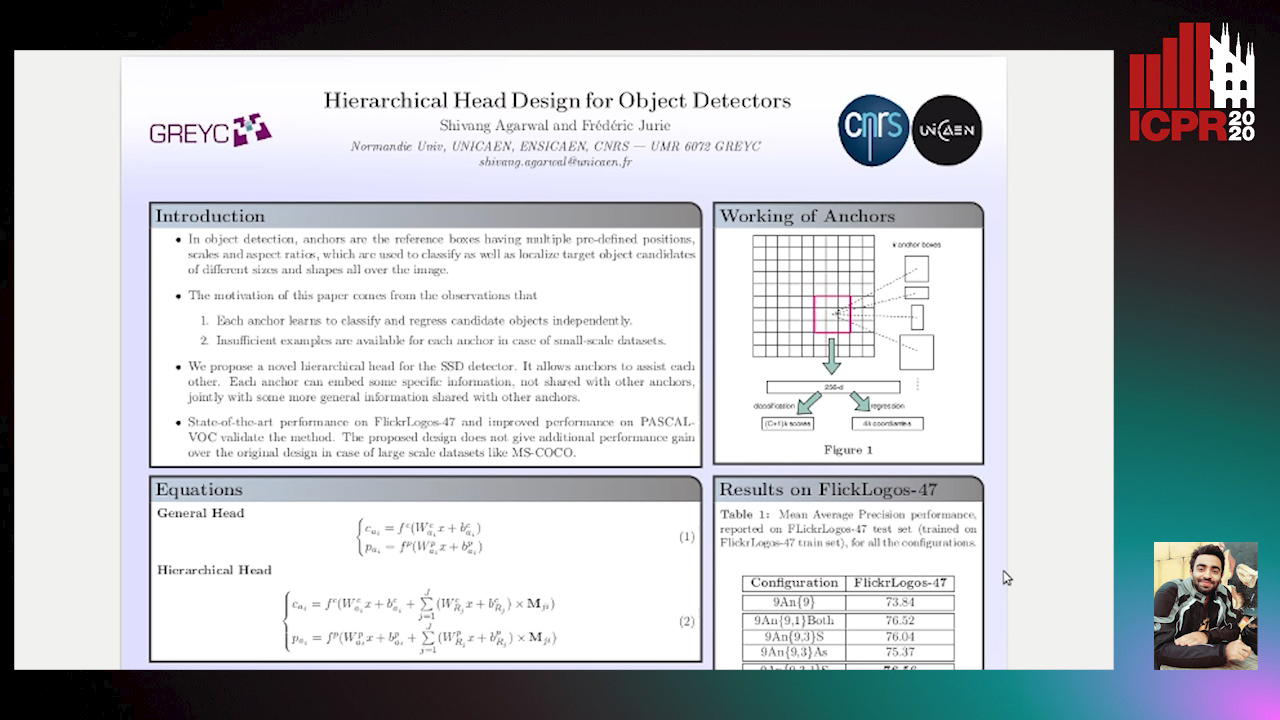

Hierarchical Head Design for Object Detectors

Shivang Agarwal, Frederic Jurie

Auto-TLDR; Hierarchical Anchor for SSD Detector

Foreground-Focused Domain Adaption for Object Detection

Auto-TLDR; Unsupervised Domain Adaptation for Unsupervised Object Detection

A Fast and Accurate Object Detector for Handwritten Digit String Recognition

Jun Guo, Wenjing Wei, Yifeng Ma, Cong Peng

Auto-TLDR; ChipNet: An anchor-free object detector for handwritten digit string recognition

3D Semantic Labeling of Photogrammetry Meshes Based on Active Learning

Mengqi Rong, Shuhan Shen, Zhanyi Hu

Auto-TLDR; 3D Semantic Expression of Urban Scenes Based on Active Learning

Incorporating Depth Information into Few-Shot Semantic Segmentation

Yifei Zhang, Desire Sidibe, Olivier Morel, Fabrice Meriaudeau

Auto-TLDR; RDNet: A Deep Neural Network for Few-shot Segmentation Using Depth Information

RescueNet: Joint Building Segmentation and Damage Assessment from Satellite Imagery

Auto-TLDR; RescueNet: End-to-End Building Segmentation and Damage Classification for Humanitarian Aid and Disaster Response

Context for Object Detection Via Lightweight Global and Mid-Level Representations

Mesut Erhan Unal, Adriana Kovashka

Auto-TLDR; Context-Based Object Detection with Semantic Similarity

Iterative Bounding Box Annotation for Object Detection

Bishwo Adhikari, Heikki Juhani Huttunen

Auto-TLDR; Semi-Automatic Bounding Box Annotation for Object Detection in Digital Images

PSDNet: A Balanced Architecture of Accuracy and Parameters for Semantic Segmentation

Auto-TLDR; Pyramid Pooling Module with SE1Cblock and D2SUpsample Network (PSDNet)

Cross-Domain Semantic Segmentation of Urban Scenes Via Multi-Level Feature Alignment

Bin Zhang, Shengjie Zhao, Rongqing Zhang

Auto-TLDR; Cross-Domain Semantic Segmentation Using Generative Adversarial Networks
