3CS Algorithm for Efficient Gaussian Process Model Retrieval

Fabian Berns,

Kjeld Schmidt,

Ingolf Bracht,

Christian Beecks

Auto-TLDR; Efficient retrieval of Gaussian Process Models for large-scale data using a divide-and-conquer approach

Similar papers

Aggregating Dependent Gaussian Experts in Local Approximation

Auto-TLDR; A novel approach for aggregating the Gaussian experts by detecting strong violations of conditional independence

Automatically Mining Relevant Variable Interactions Via Sparse Bayesian Learning

Ryoichiro Yafune, Daisuke Sakuma, Yasuo Tabei, Noritaka Saito, Hiroto Saigo

Auto-TLDR; Sparse Bayes for Interpretable Non-linear Prediction

Adaptive Sampling of Pareto Frontiers with Binary Constraints Using Regression and Classification

Auto-TLDR; Adaptive Optimization for Black-Box Multi-Objective Optimization Problems with Binary Constraints

Temporal Pattern Detection in Time-Varying Graphical Models

Federico Tomasi, Veronica Tozzo, Annalisa Barla

Auto-TLDR; A dynamical network inference model that leverages kernels to capture general temporal patterns

Seasonal Inhomogeneous Nonconsecutive Arrival Process Search and Evaluation

Kimberly Holmgren, Paul Gibby, Joseph Zipkin

Auto-TLDR; SINAPSE: Fitting a Sparse Time Series Model to Seasonal Data

Hierarchical Routing Mixture of Experts

Wenbo Zhao, Yang Gao, Shahan Ali Memon, Bhiksha Raj, Rita Singh

Auto-TLDR; A Binary Tree-structured Hierarchical Routing Mixture of Experts for Regression

Factor Screening Using Bayesian Active Learning and Gaussian Process Meta-Modelling

Cheng Li, Santu Rana, Andrew William Gill, Dang Nguyen, Sunil Kumar Gupta, Svetha Venkatesh

Auto-TLDR; Data-Efficient Bayesian Active Learning for Factor Screening in Combat Simulations

GPSRL: Learning Semi-Parametric Bayesian Survival Rule Lists from Heterogeneous Patient Data

Ameer Hamza Shakur, Xiaoning Qian, Zhangyang Wang, Bobak Mortazavi, Shuai Huang

Auto-TLDR; Semi-parametric Bayesian Survival Rule List Model for Heterogeneous Survival Data

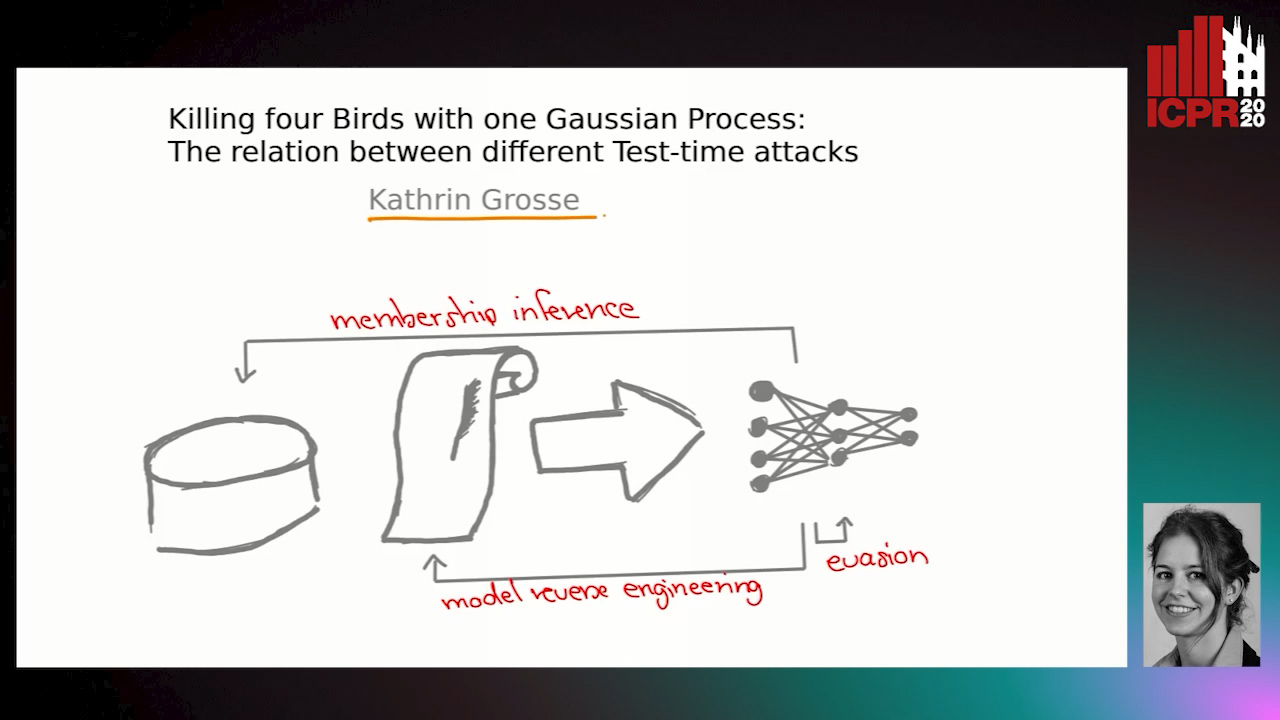

Killing Four Birds with One Gaussian Process: The Relation between Different Test-Time Attacks

Kathrin Grosse, Michael Thomas Smith, Michael Backes

Auto-TLDR; Security of Gaussian Process Classifiers against Attack Algorithms

A Multilinear Sampling Algorithm to Estimate Shapley Values

Auto-TLDR; A sampling method for estimating Shapley values for multilayer perceptrons

Learning Parameter Distributions to Detect Concept Drift in Data Streams

Johannes Haug, Gjergji Kasneci

Auto-TLDR; A novel framework for the detection of concept drift in streaming environments

Algorithm Recommendation for Data Streams

Jáder Martins Camboim De Sá, Andre Luis Debiaso Rossi, Gustavo Enrique De Almeida Prado Alves Batista, Luís Paulo Faina Garcia

Auto-TLDR; Meta-Learning for Algorithm Selection in Time-Changing Data Streams

Sketch-Based Community Detection Via Representative Node Sampling

Mahlagha Sedghi, Andre Beckus, George Atia

Auto-TLDR; Sketch-based Clustering of Community Detection Using a Small Sketch

Bayesian Active Learning for Maximal Information Gain on Model Parameters

Kasra Arnavaz, Aasa Feragen, Oswin Krause, Marco Loog

Auto-TLDR; Bayesian assumptions for Bayesian classification

Probabilistic Latent Factor Model for Collaborative Filtering with Bayesian Inference

Jiansheng Fang, Xiaoqing Zhang, Yan Hu, Yanwu Xu, Ming Yang, Jiang Liu

Auto-TLDR; Bayesian Latent Factor Model for Collaborative Filtering

Scalable Direction-Search-Based Approach to Subspace Clustering

Auto-TLDR; Fast Direction-Search-Based Subspace Clustering

Adaptive Matching of Kernel Means

Auto-TLDR; Adaptive Matching of Kernel Means for Knowledge Discovery and Feature Learning

The eXPose Approach to Crosslier Detection

Antonio Barata, Frank Takes, Hendrik Van Den Herik, Cor Veenman

Auto-TLDR; EXPose: Crosslier Detection Based on Supervised Category Modeling

Switching Dynamical Systems with Deep Neural Networks

Cesar Ali Ojeda Marin, Kostadin Cvejoski, Bogdan Georgiev, Ramses J. Sanchez

Auto-TLDR; Variational RNN for Switching Dynamics

Active Sampling for Pairwise Comparisons via Approximate Message Passing and Information Gain Maximization

Aliaksei Mikhailiuk, Clifford Wilmot, Maria Perez-Ortiz, Dingcheng Yue, Rafal Mantiuk

Auto-TLDR; ASAP: An Active Sampling Algorithm for Pairwise Comparison Data

An Efficient Empirical Solver for Localized Multiple Kernel Learning Via DNNs

Auto-TLDR; Localized Multiple Kernel Learning using LMKL-Net

Budgeted Batch Mode Active Learning with Generalized Cost and Utility Functions

Arvind Agarwal, Shashank Mujumdar, Nitin Gupta, Sameep Mehta

Auto-TLDR; Active Learning Based on Utility and Cost Functions

Assortative-Constrained Stochastic Block Models

Daniel Gribel, Thibaut Vidal, Michel Gendreau

Auto-TLDR; Constrained Stochastic Block Models for Assortative Communities in Neural Networks

An Invariance-Guided Stability Criterion for Time Series Clustering Validation

Florent Forest, Alex Mourer, Mustapha Lebbah, Hanane Azzag, Jérôme Lacaille

Auto-TLDR; An invariance-guided method for clustering model selection in time series data

Relative Feature Importance

Gunnar König, Christoph Molnar, Bernd Bischl, Moritz Grosse-Wentrup

Auto-TLDR; Relative Feature Importance for Interpretable Machine Learning

ESResNet: Environmental Sound Classification Based on Visual Domain Models

Andrey Guzhov, Federico Raue, Jörn Hees, Andreas Dengel

Auto-TLDR; Environmental Sound Classification with Short-Time Fourier Transform Spectrograms

Multi-annotator Probabilistic Active Learning

Marek Herde, Daniel Kottke, Denis Huseljic, Bernhard Sick

Auto-TLDR; MaPAL: Multi-annotator Probabilistic Active Learning

Unveiling Groups of Related Tasks in Multi-Task Learning

Jordan Frecon, Saverio Salzo, Massimiliano Pontil

Auto-TLDR; Continuous Bilevel Optimization for Multi-Task Learning

Watermelon: A Novel Feature Selection Method Based on Bayes Error Rate Estimation and a New Interpretation of Feature Relevance and Redundancy

Auto-TLDR; Feature Selection Using Bayes Error Rate Estimation for Dynamic Feature Selection

PIF: Anomaly detection via preference embedding

Filippo Leveni, Luca Magri, Giacomo Boracchi, Cesare Alippi

Auto-TLDR; PIF: Anomaly Detection with Preference Embedding for Structured Patterns

Naturally Constrained Online Expectation Maximization

Daniela Pamplona, Antoine Manzanera

Auto-TLDR; Constrained Online Expectation-Maximization for Probabilistic Principal Components Analysis

Deep Transformation Models: Tackling Complex Regression Problems with Neural Network Based Transformation Models

Beate Sick, Torsten Hothorn, Oliver Dürr

Auto-TLDR; A Deep Transformation Model for Probabilistic Regression

Transfer Learning with Graph Neural Networks for Short-Term Highway Traffic Forecasting

Tanwi Mallick, Prasanna Balaprakash, Eric Rask, Jane Macfarlane

Auto-TLDR; Transfer Learning for Highway Traffic Forecasting on Unseen Traffic Networks

Uniform and Non-Uniform Sampling Methods for Sub-Linear Time K-Means Clustering

Auto-TLDR; Sub-linear Time Clustering with Constant Approximation Ratio for K-Means Problem

Probabilistic Word Embeddings in Kinematic Space

Adarsh Jamadandi, Rishabh Tigadoli, Ramesh Ashok Tabib, Uma Mudenagudi

Auto-TLDR; Kinematic Space for Hierarchical Representation Learning

Kernel-based Graph Convolutional Networks

Auto-TLDR; Spatial Graph Convolutional Networks in Reproducing Kernel Hilbert Space

E-DNAS: Differentiable Neural Architecture Search for Embedded Systems

Javier García López, Antonio Agudo, Francesc Moreno-Noguer

Auto-TLDR; E-DNAS: Differentiable Architecture Search for Light-Weight Networks for Image Classification

Partial Monotone Dependence

Denis Khryashchev, Huy Vo, Robert Haralick

Auto-TLDR; Partially Monotone Autoregressive Correlation for Time Series Forecasting

Auto Encoding Explanatory Examples with Stochastic Paths

Cesar Ali Ojeda Marin, Ramses J. Sanchez, Kostadin Cvejoski, Bogdan Georgiev

Auto-TLDR; Semantic Stochastic Path: Explaining a Classifier's Decision Making Process using latent codes

Emerging Relation Network and Task Embedding for Multi-Task Regression Problems

Auto-TLDR; A Comparative Study of Multi-Task Learning for Non-linear Time Series Problems

Modeling the Distribution of Normal Data in Pre-Trained Deep Features for Anomaly Detection

Oliver Rippel, Patrick Mertens, Dorit Merhof

Auto-TLDR; Deep Feature Representations for Anomaly Detection in Images

Creating Classifier Ensembles through Meta-Heuristic Algorithms for Aerial Scene Classification

Álvaro Roberto Ferreira Jr., Gustavo Henrique De Rosa, Joao Paulo Papa, Gustavo Carneiro, Fabio Augusto Faria

Auto-TLDR; Univariate Marginal Distribution Algorithm for Aerial Scene Classification Using Meta-Heuristic Optimization

N2D: (Not Too) Deep Clustering Via Clustering the Local Manifold of an Autoencoded Embedding

Ryan McConville, Raul Santos-Rodriguez, Robert Piechocki, Ian Craddock

Auto-TLDR; Local Manifold Learning for Deep Clustering on Autoencoded Embeddings

Compression Strategies and Space-Conscious Representations for Deep Neural Networks

Giosuè Marinò, Gregorio Ghidoli, Marco Frasca, Dario Malchiodi

Auto-TLDR; Compression of Large Convolutional Neural Networks by Weight Pruning and Quantization

Understanding Integrated Gradients with SmoothTaylor for Deep Neural Network Attribution

Gary Shing Wee Goh, Sebastian Lapuschkin, Leander Weber, Wojciech Samek, Alexander Binder

Auto-TLDR; SmoothTaylor: bridging Integrated Gradients and SmoothGrad from the perspective of Taylor's theorem

Quantifying Model Uncertainty in Inverse Problems Via Bayesian Deep Gradient Descent

Riccardo Barbano, Chen Zhang, Simon Arridge, Bangti Jin

Auto-TLDR; Bayesian Neural Networks for Inverse Reconstruction via Bayesian Knowledge-Aided Computation

An Intransitivity Model for Matchup and Pairwise Comparison

Yan Gu, Jiuding Duan, Hisashi Kashima

Auto-TLDR; Blade-Chest: A Low-Rank Matrix Approach for Probabilistic Ranking of Players

Mean Decision Rules Method with Smart Sampling for Fast Large-Scale Binary SVM Classification

Alexandra Makarova, Mikhail Kurbakov, Valentina Sulimova

Auto-TLDR; Improving Mean Decision Rule for Large-Scale Binary SVM Problems
