Region and Relations Based Multi Attention Network for Graph Classification

Manasvi Aggarwal, M. Narasimha Murty

Auto-TLDR; R2POOL: A Graph Pooling Layer for Non-Euclidean Structures

Similar papers

A General Model for Learning Node and Graph Representations Jointly

Auto-TLDR; Joint Community Detection/Dynamic Routing for Graph Classification

Edge-Aware Graph Attention Network for Ratio of Edge-User Estimation in Mobile Networks

Jiehui Deng, Sheng Wan, Xiang Wang, Enmei Tu, Xiaolin Huang, Jie Yang, Chen Gong

Auto-TLDR; EAGAT: Edge-Aware Graph Attention Network for Automatic REU Estimation in Mobile Networks

On the Global Self-attention Mechanism for Graph Convolutional Networks

Auto-TLDR; Global Self-Attention Mechanism for Graph Convolutional Networks

Classification of Intestinal Gland Cell-Graphs Using Graph Neural Networks

Linda Studer, Jannis Wallau, Heather Dawson, Inti Zlobec, Andreas Fischer

Auto-TLDR; Graph Neural Networks for Classification of Dysplastic Glands

What Nodes Vote To? Graph Classification without Readout Phase

Yuxing Tian, Zheng Liu, Weiding Liu, Zeyu Zhang, Yanwen Qu

Auto-TLDR; node voting based graph classification with convolutional operator

TreeRNN: Topology-Preserving Deep Graph Embedding and Learning

Yecheng Lyu, Ming Li, Xinming Huang, Ulkuhan Guler, Patrick Schaumont, Ziming Zhang

Auto-TLDR; TreeRNN: Recurrent Neural Network for General Graph Classification

Learning Connectivity with Graph Convolutional Networks

Auto-TLDR; Learning Graph Convolutional Networks Using Topological Properties of Graphs

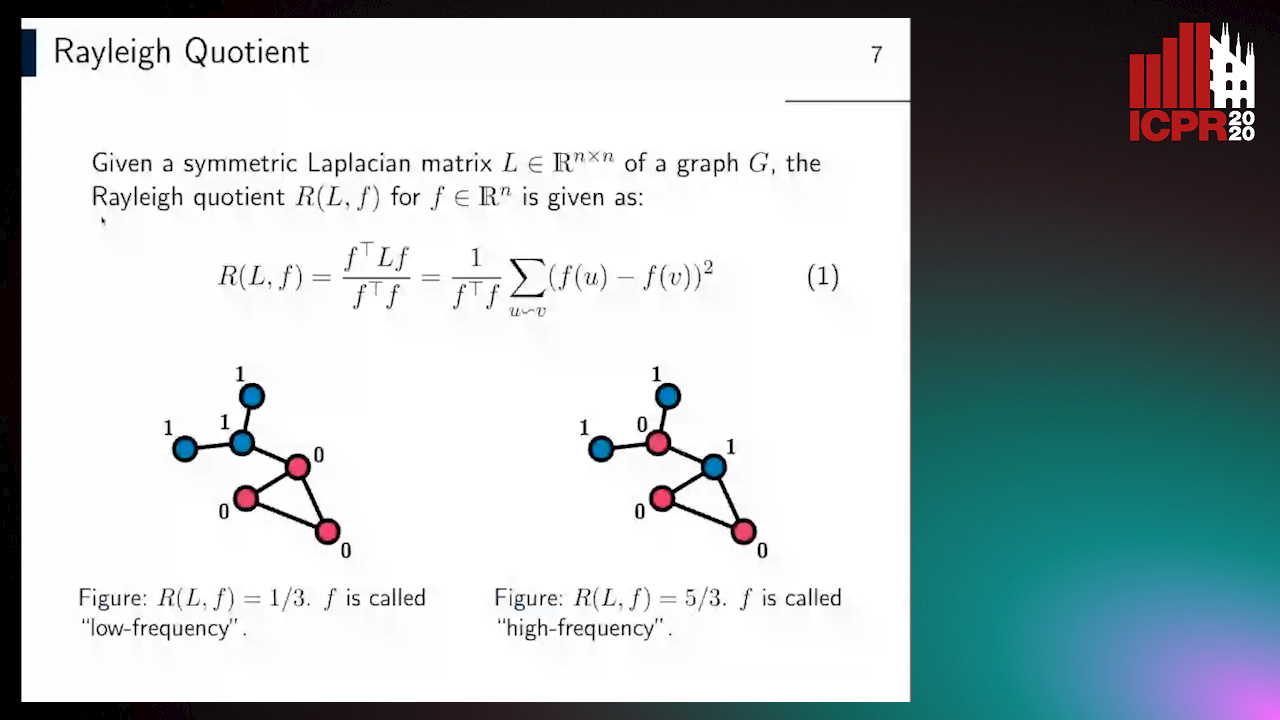

Kernel-based Graph Convolutional Networks

Auto-TLDR; Spatial Graph Convolutional Networks in Reproducing Kernel Hilbert Space

Revisiting Graph Neural Networks: Graph Filtering Perspective

Hoang Nguyen-Thai, Takanori Maehara, Tsuyoshi Murata

Auto-TLDR; Two-Layer Graph Convolutional Network with Graph Filter Neural Network

AOAM: Automatic Optimization of Adjacency Matrix for Graph Convolutional Network

Yuhang Zhang, Hongshuai Ren, Jiexia Ye, Xitong Gao, Yang Wang, Kejiang Ye, Cheng-Zhong Xu

Auto-TLDR; Adjacency Matrix for Graph Convolutional Network in Non-Euclidean Space

Label Incorporated Graph Neural Networks for Text Classification

Yuan Xin, Linli Xu, Junliang Guo, Jiquan Li, Xin Sheng, Yuanyuan Zhou

Auto-TLDR; Graph Neural Networks for Semi-supervised Text Classification

Social Network Analysis Using Knowledge-Graph Embeddings and Convolution Operations

Bonaventure Chidube Molokwu, Shaon Bhatta Shuvo, Ziad Kobti, Narayan C. Kar

Auto-TLDR; RLVECO: Representation Learning via Knowledge-Graph Embeddings and Convolution Operations for Social Network Analysis

Equation Attention Relationship Network (EARN): A Geometric Deep Metric Framework for Learning Similar Math Expression Embedding

Saleem Ahmed, Kenny Davila, Srirangaraj Setlur, Venu Govindaraju

Auto-TLDR; Representational Learning for Similarity Based Retrieval of Mathematical Expressions

Privacy Attributes-Aware Message Passing Neural Network for Visual Privacy Attributes Classification

Hanbin Hong, Wentao Bao, Yuan Hong, Yu Kong

Auto-TLDR; Privacy Attributes-Aware Message Passing Neural Network for Visual Privacy Attribute Classification

GCNs-Based Context-Aware Short Text Similarity Model

Auto-TLDR; Context-Aware Graph Convolutional Network for Text Similarity

Self-Supervised Learning with Graph Neural Networks for Region of Interest Retrieval in Histopathology

Yigit Ozen, Selim Aksoy, Kemal Kosemehmetoglu, Sevgen Onder, Aysegul Uner

Auto-TLDR; Self-supervised Contrastive Learning for Deep Representation Learning of Histopathology Images

Reinforcement Learning with Dual Attention Guided Graph Convolution for Relation Extraction

Zhixin Li, Yaru Sun, Suqin Tang, Canlong Zhang, Huifang Ma

Auto-TLDR; Dual Attention Graph Convolutional Network for Relation Extraction

PICK: Processing Key Information Extraction from Documents Using Improved Graph Learning-Convolutional Networks

Wenwen Yu, Ning Lu, Xianbiao Qi, Ping Gong, Rong Xiao

Auto-TLDR; PICK: A Graph Learning Framework for Key Information Extraction from Documents

Named Entity Recognition and Relation Extraction with Graph Neural Networks in Semi Structured Documents

Manuel Carbonell, Pau Riba, Mauricio Villegas, Alicia Fornés, Josep Llados

Auto-TLDR; Graph Neural Network for Entity Recognition and Relation Extraction in Semi-Structured Documents

Temporal Attention-Augmented Graph Convolutional Network for Efficient Skeleton-Based Human Action Recognition

Negar Heidari, Alexandros Iosifidis

Auto-TLDR; Temporal Attention Module for Efficient Graph Convolutional Network-based Action Recognition

Exploring and Exploiting the Hierarchical Structure of a Scene for Scene Graph Generation

Ikuto Kurosawa, Tetsunori Kobayashi, Yoshihiko Hayashi

Auto-TLDR; A Hierarchical Model for Scene Graph Generation

MEG: Multi-Evidence GNN for Multimodal Semantic Forensics

Ekraam Sabir, Ayush Jaiswal, Wael Abdalmageed, Prem Natarajan

Auto-TLDR; Scalable Image Repurposing Detection with Graph Neural Network Based Model

Sketch-Based Community Detection Via Representative Node Sampling

Mahlagha Sedghi, Andre Beckus, George Atia

Auto-TLDR; Sketch-based Clustering of Community Detection Using a Small Sketch

Boundary-Aware Graph Convolution for Semantic Segmentation

Hanzhe Hu, Jinshi Cui, Hongbin Zha

Auto-TLDR; Boundary-Aware Graph Convolution for Semantic Segmentation

Graph Convolutional Neural Networks for Power Line Outage Identification

Auto-TLDR; Graph Convolutional Networks for Power Line Outage Identification

Object Detection Using Dual Graph Network

Shengjia Chen, Zhixin Li, Feicheng Huang, Canlong Zhang, Huifang Ma

Auto-TLDR; A Graph Convolutional Network for Object Detection with Key Relation Information

Graph-Based Interpolation of Feature Vectors for Accurate Few-Shot Classification

Yuqing Hu, Vincent Gripon, Stéphane Pateux

Auto-TLDR; Transductive Learning for Few-Shot Classification using Graph Neural Networks

Geographic-Semantic-Temporal Hypergraph Convolutional Network for Traffic Flow Prediction

Kesu Wang, Jing Chen, Shijie Liao, Jiaxin Hou, Qingyu Xiong

Auto-TLDR; Geographic-semantic-temporal convolutional network for traffic flow prediction

Neuron-Based Network Pruning Based on Majority Voting

Ali Alqahtani, Xianghua Xie, Ehab Essa, Mark W. Jones

Auto-TLDR; Large-Scale Neural Network Pruning using Majority Voting

Using Scene Graphs for Detecting Visual Relationships

Anurag Tripathi, Siddharth Srivastava, Brejesh Lall, Santanu Chaudhury

Auto-TLDR; Relationship Detection using Context Aligned Scene Graph Embeddings

Constructing Geographic and Long-term Temporal Graph for Traffic Forecasting

Yiwen Sun, Yulu Wang, Kun Fu, Zheng Wang, Changshui Zhang, Jieping Ye

Auto-TLDR; GLT-GCRNN: Geographic and Long-term Temporal Graph Convolutional Recurrent Neural Network for Traffic Forecasting

Epitomic Variational Graph Autoencoder

Rayyan Ahmad Khan, Muhammad Umer Anwaar, Martin Kleinsteuber

Auto-TLDR; EVGAE: A Generative Variational Autoencoder for Graph Data

Hcore-Init: Neural Network Initialization Based on Graph Degeneracy

Stratis Limnios, George Dasoulas, Dimitrios Thilikos, Michalis Vazirgiannis

Auto-TLDR; K-hypercore: Graph Mining for Deep Neural Networks

Modeling Extent-Of-Texture Information for Ground Terrain Recognition

Shuvozit Ghose, Pinaki Nath Chowdhury, Partha Pratim Roy, Umapada Pal

Auto-TLDR; Extent-of-Texture Guided Inter-domain Message Passing for Ground Terrain Recognition

Zero-Shot Text Classification with Semantically Extended Graph Convolutional Network

Tengfei Liu, Yongli Hu, Junbin Gao, Yanfeng Sun, Baocai Yin

Auto-TLDR; Semantically Extended Graph Convolutional Network for Zero-shot Text Classification

Directional Graph Networks with Hard Weight Assignments

Miguel Dominguez, Raymond Ptucha

Auto-TLDR; Hard Directional Graph Networks for Point Cloud Analysis

More Correlations Better Performance: Fully Associative Networks for Multi-Label Image Classification

Auto-TLDR; Fully Associative Network for Fully Exploiting Correlation Information in Multi-Label Classification

Temporal Collaborative Filtering with Graph Convolutional Neural Networks

Esther Rodrigo-Bonet, Minh Duc Nguyen, Nikos Deligiannis

Auto-TLDR; Temporal Collaborative Filtering with Graph-Neural-Network-based Neural Networks

Contextual Classification Using Self-Supervised Auxiliary Models for Deep Neural Networks

Sebastian Palacio, Philipp Engler, Jörn Hees, Andreas Dengel

Auto-TLDR; Self-Supervised Autogenous Learning for Deep Neural Networks

FatNet: A Feature-Attentive Network for 3D Point Cloud Processing

Chaitanya Kaul, Nick Pears, Suresh Manandhar

Auto-TLDR; Feature-Attentive Neural Networks for Point Cloud Classification and Segmentation

Aggregating Dependent Gaussian Experts in Local Approximation

Auto-TLDR; A novel approach for aggregating the Gaussian experts by detecting strong violations of conditional independence

Interpretable Structured Learning with Sparse Gated Sequence Encoder for Protein-Protein Interaction Prediction

Kishan K C, Feng Cui, Anne Haake, Rui Li

Auto-TLDR; Predicting Protein-Protein Interactions Using Sequence Representations

A Two-Stream Recurrent Network for Skeleton-Based Human Interaction Recognition

Qianhui Men, Edmond S. L. Ho, Hubert P. H. Shum, Howard Leung

Auto-TLDR; Two-Stream Recurrent Neural Network for Human-Human Interaction Recognition

TAAN: Task-Aware Attention Network for Few-Shot Classification

Auto-TLDR; TAAN: Task-Aware Attention Network for Few-Shot Classification

A Novel Random Forest Dissimilarity Measure for Multi-View Learning

Hongliu Cao, Simon Bernard, Robert Sabourin, Laurent Heutte

Auto-TLDR; Multi-view Learning with Random Forest Relation Measure and Instance Hardness

Trajectory-User Link with Attention Recurrent Networks

Tao Sun, Yongjun Xu, Fei Wang, Lin Wu, 塘文 钱, Zezhi Shao

Auto-TLDR; TULAR: Trajectory-User Link with Attention Recurrent Neural Networks

Siamese Graph Convolution Network for Face Sketch Recognition

Liang Fan, Xianfang Sun, Paul Rosin

Auto-TLDR; A novel Siamese graph convolution network for face sketch recognition

Multi-Stage Attention Based Visual Question Answering

Aakansha Mishra, Ashish Anand, Prithwijit Guha

Auto-TLDR; Alternative Bi-directional Attention for Visual Question Answering