Multimodal Side-Tuning for Document Classification

Stefano Zingaro,

Giuseppe Lisanti,

Maurizio Gabbrielli

Auto-TLDR; Side-tuning for Multimodal Document Classification

Similar papers

Textual-Content Based Classification of Bundles of Untranscribed of Manuscript Images

José Ramón Prieto Fontcuberta, Enrique Vidal, Vicente Bosch, Carlos Alonso, Carmen Orcero, Lourdes Márquez

Auto-TLDR; Probabilistic Indexing for Text-based Classification of Manuscripts

Abstract Slides Poster Similar

Vision-Based Layout Detection from Scientific Literature Using Recurrent Convolutional Neural Networks

Auto-TLDR; Transfer Learning for Scientific Literature Layout Detection Using Convolutional Neural Networks

Abstract Slides Poster Similar

PICK: Processing Key Information Extraction from Documents Using Improved Graph Learning-Convolutional Networks

Wenwen Yu, Ning Lu, Xianbiao Qi, Ping Gong, Rong Xiao

Auto-TLDR; PICK: A Graph Learning Framework for Key Information Extraction from Documents

Abstract Slides Poster Similar

Trainable Spectrally Initializable Matrix Transformations in Convolutional Neural Networks

Michele Alberti, Angela Botros, Schuetz Narayan, Rolf Ingold, Marcus Liwicki, Mathias Seuret

Auto-TLDR; Trainable and Spectrally Initializable Matrix Transformations for Neural Networks

Abstract Slides Poster Similar

Named Entity Recognition and Relation Extraction with Graph Neural Networks in Semi Structured Documents

Manuel Carbonell, Pau Riba, Mauricio Villegas, Alicia Fornés, Josep Llados

Auto-TLDR; Graph Neural Network for Entity Recognition and Relation Extraction in Semi-Structured Documents

Writer Identification Using Deep Neural Networks: Impact of Patch Size and Number of Patches

Akshay Punjabi, José Ramón Prieto Fontcuberta, Enrique Vidal

Auto-TLDR; Writer Recognition Using Deep Neural Networks for Handwritten Text Images

Abstract Slides Poster Similar

Learning Neural Textual Representations for Citation Recommendation

Thanh Binh Kieu, Inigo Jauregi Unanue, Son Bao Pham, Xuan-Hieu Phan, M. Piccardi

Auto-TLDR; Sentence-BERT cascaded with Siamese and triplet networks for citation recommendation

Abstract Slides Poster Similar

Watch Your Strokes: Improving Handwritten Text Recognition with Deformable Convolutions

Iulian Cojocaru, Silvia Cascianelli, Lorenzo Baraldi, Massimiliano Corsini, Rita Cucchiara

Auto-TLDR; Deformable Convolutional Neural Networks for Handwritten Text Recognition

Abstract Slides Poster Similar

An Evaluation of DNN Architectures for Page Segmentation of Historical Newspapers

Manuel Burghardt, Bernhard Liebl

Auto-TLDR; Evaluation of Backbone Architectures for Optical Character Segmentation of Historical Documents

Abstract Slides Poster Similar

Improving Word Recognition Using Multiple Hypotheses and Deep Embeddings

Siddhant Bansal, Praveen Krishnan, C. V. Jawahar

Auto-TLDR; EmbedNet: fuse recognition-based and recognition-free approaches for word recognition using learning-based methods

Abstract Slides Poster Similar

Ancient Document Layout Analysis: Autoencoders Meet Sparse Coding

Homa Davoudi, Marco Fiorucci, Arianna Traviglia

Auto-TLDR; Unsupervised Unsupervised Representation Learning for Document Layout Analysis

Abstract Slides Poster Similar

Multiple Document Datasets Pre-Training Improves Text Line Detection with Deep Neural Networks

Mélodie Boillet, Christopher Kermorvant, Thierry Paquet

Auto-TLDR; A fully convolutional network for document layout analysis

A Gated and Bifurcated Stacked U-Net Module for Document Image Dewarping

Hmrishav Bandyopadhyay, Tanmoy Dasgupta, Nibaran Das, Mita Nasipuri

Auto-TLDR; Gated and Bifurcated Stacked U-Net for Dewarping Document Images

Abstract Slides Poster Similar

Sequential Domain Adaptation through Elastic Weight Consolidation for Sentiment Analysis

Avinash Madasu, Anvesh Rao Vijjini

Auto-TLDR; Sequential Domain Adaptation using Elastic Weight Consolidation for Sentiment Analysis

Abstract Slides Poster Similar

Recursive Recognition of Offline Handwritten Mathematical Expressions

Marco Cotogni, Claudio Cusano, Antonino Nocera

Auto-TLDR; Online Handwritten Mathematical Expression Recognition with Recurrent Neural Network

Abstract Slides Poster Similar

Learning with Delayed Feedback

Pranavan Theivendiram, Terence Sim

Auto-TLDR; Unsupervised Machine Learning with Delayed Feedback

Abstract Slides Poster Similar

Recognizing Bengali Word Images - A Zero-Shot Learning Perspective

Sukalpa Chanda, Daniël Arjen Willem Haitink, Prashant Kumar Prasad, Jochem Baas, Umapada Pal, Lambert Schomaker

Auto-TLDR; Zero-Shot Learning for Word Recognition in Bengali Script

Abstract Slides Poster Similar

Information Graphic Summarization Using a Collection of Multimodal Deep Neural Networks

Edward Kim, Connor Onweller, Kathleen F. Mccoy

Auto-TLDR; A multimodal deep learning framework that can generate summarization text supporting the main idea of an information graphic for presentation to blind or visually impaired

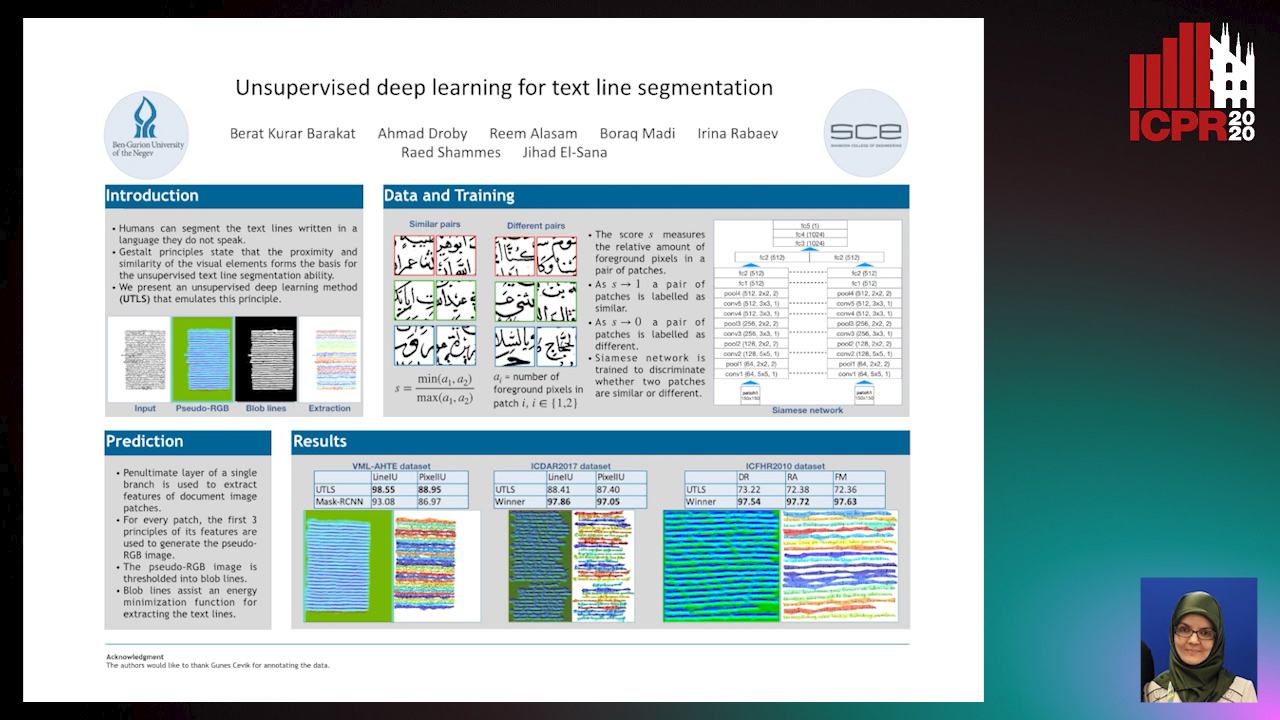

Unsupervised deep learning for text line segmentation

Berat Kurar Barakat, Ahmad Droby, Reem Alaasam, Borak Madi, Irina Rabaev, Raed Shammes, Jihad El-Sana

Auto-TLDR; Unsupervised Deep Learning for Handwritten Text Line Segmentation without Annotation

A Systematic Investigation on Deep Architectures for Automatic Skin Lesions Classification

Pierluigi Carcagni, Marco Leo, Andrea Cuna, Giuseppe Celeste, Cosimo Distante

Auto-TLDR; RegNet: Deep Investigation of Convolutional Neural Networks for Automatic Classification of Skin Lesions

Abstract Slides Poster Similar

Zero-Shot Text Classification with Semantically Extended Graph Convolutional Network

Tengfei Liu, Yongli Hu, Junbin Gao, Yanfeng Sun, Baocai Yin

Auto-TLDR; Semantically Extended Graph Convolutional Network for Zero-shot Text Classification

Abstract Slides Poster Similar

Dual Path Multi-Modal High-Order Features for Textual Content Based Visual Question Answering

Yanan Li, Yuetan Lin, Hongrui Zhao, Donghui Wang

Auto-TLDR; TextVQA: An End-to-End Visual Question Answering Model for Text-Based VQA

A Few-Shot Learning Approach for Historical Ciphered Manuscript Recognition

Mohamed Ali Souibgui, Alicia Fornés, Yousri Kessentini, Crina Tudor

Auto-TLDR; Handwritten Ciphers Recognition Using Few-Shot Object Detection

A Novel Attention-Based Aggregation Function to Combine Vision and Language

Matteo Stefanini, Marcella Cornia, Lorenzo Baraldi, Rita Cucchiara

Auto-TLDR; Fully-Attentive Reduction for Vision and Language

Abstract Slides Poster Similar

Adversarial Training for Aspect-Based Sentiment Analysis with BERT

Akbar Karimi, Andrea Prati, Leonardo Rossi

Auto-TLDR; Adversarial Training of BERT for Aspect-Based Sentiment Analysis

Abstract Slides Poster Similar

LODENet: A Holistic Approach to Offline Handwritten Chinese and Japanese Text Line Recognition

Huu Tin Hoang, Chun-Jen Peng, Hung Tran, Hung Le, Huy Hoang Nguyen

Auto-TLDR; Logographic DEComposition Encoding for Chinese and Japanese Text Line Recognition

Abstract Slides Poster Similar

On-Device Text Image Super Resolution

Dhruval Jain, Arun Prabhu, Gopi Ramena, Manoj Goyal, Debi Mohanty, Naresh Purre, Sukumar Moharana

Auto-TLDR; A Novel Deep Neural Network for Super-Resolution on Low Resolution Text Images

Abstract Slides Poster Similar

Efficient Sentence Embedding Via Semantic Subspace Analysis

Bin Wang, Fenxiao Chen, Yun Cheng Wang, C.-C. Jay Kuo

Auto-TLDR; S3E: Semantic Subspace Sentence Embedding

Abstract Slides Poster Similar

A CNN-RNN Framework for Image Annotation from Visual Cues and Social Network Metadata

Tobia Tesan, Pasquale Coscia, Lamberto Ballan

Auto-TLDR; Context-Based Image Annotation with Multiple Semantic Embeddings and Recurrent Neural Networks

Abstract Slides Poster Similar

ConvMath : A Convolutional Sequence Network for Mathematical Expression Recognition

Zuoyu Yan, Xiaode Zhang, Liangcai Gao, Ke Yuan, Zhi Tang

Auto-TLDR; Convolutional Sequence Modeling for Mathematical Expressions Recognition

Abstract Slides Poster Similar

Text Synopsis Generation for Egocentric Videos

Aidean Sharghi, Niels Lobo, Mubarak Shah

Auto-TLDR; Egocentric Video Summarization Using Multi-task Learning for End-to-End Learning

KoreALBERT: Pretraining a Lite BERT Model for Korean Language Understanding

Hyunjae Lee, Jaewoong Yun, Bongkyu Hwang, Seongho Joe, Seungjai Min, Youngjune Gwon

Auto-TLDR; KoreALBERT: A monolingual ALBERT model for Korean language understanding

Abstract Slides Poster Similar

Bridging the Gap between Natural and Medical Images through Deep Colorization

Lia Morra, Luca Piano, Fabrizio Lamberti, Tatiana Tommasi

Auto-TLDR; Transfer Learning for Diagnosis on X-ray Images Using Color Adaptation

Abstract Slides Poster Similar

A Systematic Investigation on End-To-End Deep Recognition of Grocery Products in the Wild

Marco Leo, Pierluigi Carcagni, Cosimo Distante

Auto-TLDR; Automatic Recognition of Products on grocery shelf images using Convolutional Neural Networks

Abstract Slides Poster Similar

Text Baseline Recognition Using a Recurrent Convolutional Neural Network

Matthias Wödlinger, Robert Sablatnig

Auto-TLDR; Automatic Baseline Detection of Handwritten Text Using Recurrent Convolutional Neural Network

Abstract Slides Poster Similar

Fine-Tuning Convolutional Neural Networks: A Comprehensive Guide and Benchmark Analysis for Glaucoma Screening

Amed Mvoulana, Rostom Kachouri, Mohamed Akil

Auto-TLDR; Fine-tuning Convolutional Neural Networks for Glaucoma Screening

Abstract Slides Poster Similar

GCNs-Based Context-Aware Short Text Similarity Model

Auto-TLDR; Context-Aware Graph Convolutional Network for Text Similarity

Abstract Slides Poster Similar

Class-Incremental Learning with Pre-Allocated Fixed Classifiers

Federico Pernici, Matteo Bruni, Claudio Baecchi, Francesco Turchini, Alberto Del Bimbo

Auto-TLDR; Class-Incremental Learning with Pre-allocated Output Nodes for Fixed Classifier

Abstract Slides Poster Similar

Enriching Video Captions with Contextual Text

Philipp Rimle, Pelin Dogan, Markus Gross

Auto-TLDR; Contextualized Video Captioning Using Contextual Text

Abstract Slides Poster Similar

Evaluation of BERT and ALBERT Sentence Embedding Performance on Downstream NLP Tasks

Hyunjin Choi, Judong Kim, Seongho Joe, Youngjune Gwon

Auto-TLDR; Sentence Embedding Models for BERT and ALBERT: A Comparison and Evaluation

Abstract Slides Poster Similar

Label Incorporated Graph Neural Networks for Text Classification

Yuan Xin, Linli Xu, Junliang Guo, Jiquan Li, Xin Sheng, Yuanyuan Zhou

Auto-TLDR; Graph Neural Networks for Semi-supervised Text Classification

Abstract Slides Poster Similar

ESResNet: Environmental Sound Classification Based on Visual Domain Models

Andrey Guzhov, Federico Raue, Jörn Hees, Andreas Dengel

Auto-TLDR; Environmental Sound Classification with Short-Time Fourier Transform Spectrograms

Abstract Slides Poster Similar

Extracting Action Hierarchies from Action Labels and their Use in Deep Action Recognition

Konstadinos Bacharidis, Antonis Argyros

Auto-TLDR; Exploiting the Information Content of Language Label Associations for Human Action Recognition

Abstract Slides Poster Similar

Self-Supervised Learning for Astronomical Image Classification

Ana Martinazzo, Mateus Espadoto, Nina S. T. Hirata

Auto-TLDR; Unlabeled Astronomical Images for Deep Neural Network Pre-training

Abstract Slides Poster Similar

Attentive Visual Semantic Specialized Network for Video Captioning

Jesus Perez-Martin, Benjamin Bustos, Jorge Pérez

Auto-TLDR; Adaptive Visual Semantic Specialized Network for Video Captioning

Abstract Slides Poster Similar

Deep Convolutional Embedding for Digitized Painting Clustering

Giovanna Castellano, Gennaro Vessio

Auto-TLDR; A Deep Convolutional Embedding Model for Clustering Artworks

Abstract Slides Poster Similar

Which are the factors affecting the performance of audio surveillance systems?

Antonio Greco, Antonio Roberto, Alessia Saggese, Mario Vento

Auto-TLDR; Sound Event Recognition Using Convolutional Neural Networks and Visual Representations on MIVIA Audio Events

The HisClima Database: Historical Weather Logs for Automatic Transcription and Information Extraction

Verónica Romero, Joan Andreu Sánchez

Auto-TLDR; Automatic Handwritten Text Recognition and Information Extraction from Historical Weather Logs

Abstract Slides Poster Similar