AerialMPTNet: Multi-Pedestrian Tracking in Aerial Imagery Using Temporal and Graphical Features

Maximilian Kraus,

Seyed Majid Azimi,

Emec Ercelik,

Reza Bahmanyar,

Peter Reinartz,

Alois Knoll

Auto-TLDR; AerialMPTNet: A novel approach for multi-pedestrian tracking in geo-referenced aerial imagery by fusing appearance features

Similar papers

Compact and Discriminative Multi-Object Tracking with Siamese CNNs

Claire Labit-Bonis, Jérôme Thomas, Frederic Lerasle

Auto-TLDR; Fast, Light-Weight and All-in-One Single Object Tracking for Multi-Target Management

Abstract Slides Poster Similar

EAGLE: Large-Scale Vehicle Detection Dataset in Real-World Scenarios Using Aerial Imagery

Seyed Majid Azimi, Reza Bahmanyar, Corentin Henry, Kurz Franz

Auto-TLDR; EAGLE: A Large-Scale Dataset for Multi-class Vehicle Detection with Object Orientation Information in Airborne Imagery

DAL: A Deep Depth-Aware Long-Term Tracker

Yanlin Qian, Song Yan, Alan Lukežič, Matej Kristan, Joni-Kristian Kamarainen, Jiri Matas

Auto-TLDR; Deep Depth-Aware Long-Term RGBD Tracking with Deep Discriminative Correlation Filter

Abstract Slides Poster Similar

Siamese Fully Convolutional Tracker with Motion Correction

Mathew Francis, Prithwijit Guha

Auto-TLDR; A Siamese Ensemble for Visual Tracking with Appearance and Motion Components

Abstract Slides Poster Similar

VTT: Long-Term Visual Tracking with Transformers

Tianling Bian, Yang Hua, Tao Song, Zhengui Xue, Ruhui Ma, Neil Robertson, Haibing Guan

Auto-TLDR; Visual Tracking Transformer with transformers for long-term visual tracking

SynDHN: Multi-Object Fish Tracker Trained on Synthetic Underwater Videos

Mygel Andrei Martija, Prospero Naval

Auto-TLDR; Underwater Multi-Object Tracking in the Wild with Deep Hungarian Network

Abstract Slides Poster Similar

SiamMT: Real-Time Arbitrary Multi-Object Tracking

Lorenzo Vaquero, Manuel Mucientes, Victor Brea

Auto-TLDR; SiamMT: A Deep-Learning-based Arbitrary Multi-Object Tracking System for Video

Abstract Slides Poster Similar

Model Decay in Long-Term Tracking

Efstratios Gavves, Ran Tao, Deepak Gupta, Arnold Smeulders

Auto-TLDR; Model Bias in Long-Term Tracking

Abstract Slides Poster Similar

Tracking Fast Moving Objects by Segmentation Network

Auto-TLDR; Fast Moving Objects Tracking by Segmentation Using Deep Learning

Abstract Slides Poster Similar

AG-GAN: An Attentive Group-Aware GAN for Pedestrian Trajectory Prediction

Yue Song, Niccolò Bisagno, Syed Zohaib Hassan, Nicola Conci

Auto-TLDR; An attentive group-aware GAN for motion prediction in crowded scenarios

Abstract Slides Poster Similar

Visual Object Tracking in Drone Images with Deep Reinforcement Learning

Auto-TLDR; A Deep Reinforcement Learning based Single Object Tracker for Drone Applications

Abstract Slides Poster Similar

PHNet: Parasite-Host Network for Video Crowd Counting

Shiqiao Meng, Jiajie Li, Weiwei Guo, Jinfeng Jiang, Lai Ye

Auto-TLDR; PHNet: A Parasite-Host Network for Video Crowd Counting

Abstract Slides Poster Similar

Transformer Networks for Trajectory Forecasting

Francesco Giuliari, Hasan Irtiza, Marco Cristani, Fabio Galasso

Auto-TLDR; TransformerNetworks for Trajectory Prediction of People Interactions

Abstract Slides Poster Similar

IPT: A Dataset for Identity Preserved Tracking in Closed Domains

Thomas Heitzinger, Martin Kampel

Auto-TLDR; Identity Preserved Tracking Using Depth Data for Privacy and Privacy

Abstract Slides Poster Similar

Image Sequence Based Cyclist Action Recognition Using Multi-Stream 3D Convolution

Stefan Zernetsch, Steven Schreck, Viktor Kress, Konrad Doll, Bernhard Sick

Auto-TLDR; 3D-ConvNet: A Multi-stream 3D Convolutional Neural Network for Detecting Cyclists in Real World Traffic Situations

Abstract Slides Poster Similar

Reducing False Positives in Object Tracking with Siamese Network

Takuya Ogawa, Takashi Shibata, Shoji Yachida, Toshinori Hosoi

Auto-TLDR; Robust Long-Term Object Tracking with Adaptive Search based on Motion Models

Abstract Slides Poster Similar

Utilising Visual Attention Cues for Vehicle Detection and Tracking

Feiyan Hu, Venkatesh Gurram Munirathnam, Noel E O'Connor, Alan Smeaton, Suzanne Little

Auto-TLDR; Visual Attention for Object Detection and Tracking in Driver-Assistance Systems

Abstract Slides Poster Similar

Future Urban Scenes Generation through Vehicles Synthesis

Alessandro Simoni, Luca Bergamini, Andrea Palazzi, Simone Calderara, Rita Cucchiara

Auto-TLDR; Predicting the Future of an Urban Scene with a Novel View Synthesis Paradigm

Abstract Slides Poster Similar

Robust Visual Object Tracking with Two-Stream Residual Convolutional Networks

Ning Zhang, Jingen Liu, Ke Wang, Dan Zeng, Tao Mei

Auto-TLDR; Two-Stream Residual Convolutional Network for Visual Tracking

Abstract Slides Poster Similar

Point In: Counting Trees with Weakly Supervised Segmentation Network

Pinmo Tong, Shuhui Bu, Pengcheng Han

Auto-TLDR; Weakly Tree counting using Deep Segmentation Network with Localization and Mask Prediction

Abstract Slides Poster Similar

Semantic Segmentation for Pedestrian Detection from Motion in Temporal Domain

Auto-TLDR; Motion Profile: Recognizing Pedestrians along with their Motion Directions in a Temporal Way

Abstract Slides Poster Similar

MFST: Multi-Features Siamese Tracker

Zhenxi Li, Guillaume-Alexandre Bilodeau, Wassim Bouachir

Auto-TLDR; Multi-Features Siamese Tracker for Robust Deep Similarity Tracking

Siamese Dynamic Mask Estimation Network for Fast Video Object Segmentation

Dexiang Hong, Guorong Li, Kai Xu, Li Su, Qingming Huang

Auto-TLDR; Siamese Dynamic Mask Estimation for Video Object Segmentation

Abstract Slides Poster Similar

An Adaptive Fusion Model Based on Kalman Filtering and LSTM for Fast Tracking of Road Signs

Chengliang Wang, Xin Xie, Chao Liao

Auto-TLDR; Fusion of ThunderNet and Region Growing Detector for Road Sign Detection and Tracking

Abstract Slides Poster Similar

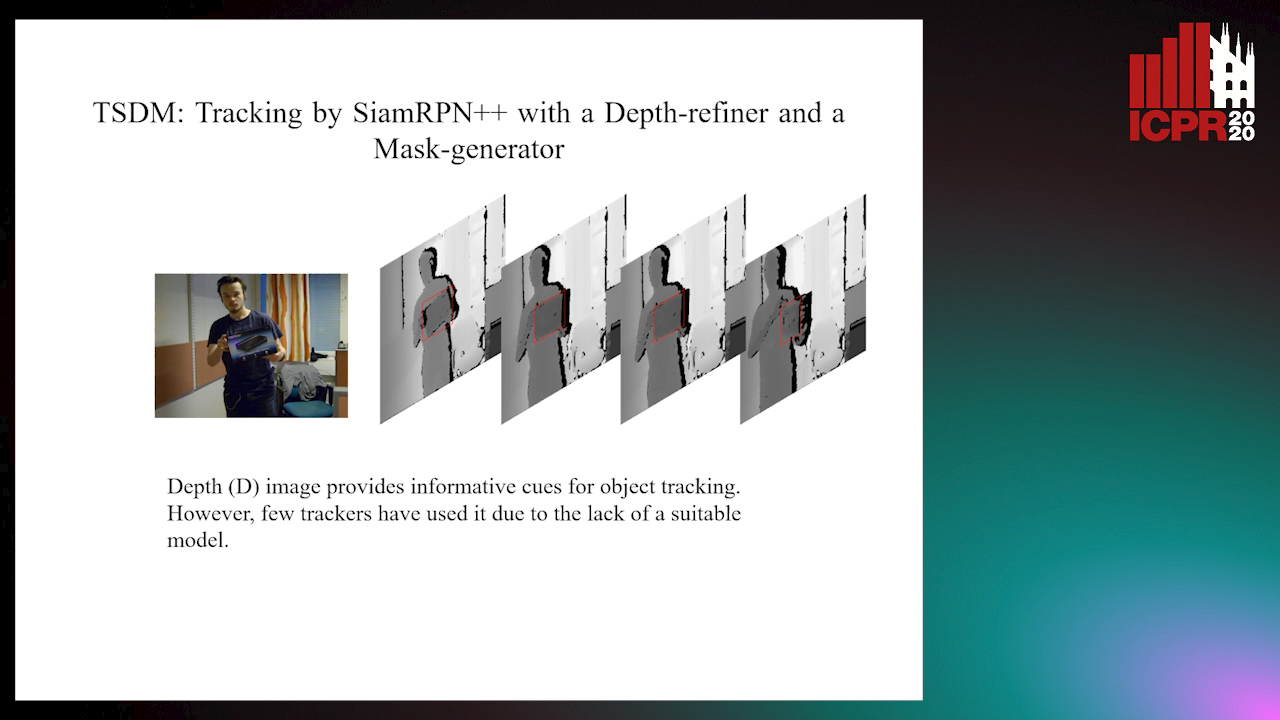

TSDM: Tracking by SiamRPN++ with a Depth-Refiner and a Mask-Generator

Pengyao Zhao, Quanli Liu, Wei Wang, Qiang Guo

Auto-TLDR; TSDM: A Depth-D Tracker for 3D Object Tracking

Abstract Slides Poster Similar

Efficient Correlation Filter Tracking with Adaptive Training Sample Update Scheme

Shan Jiang, Shuxiao Li, Chengfei Zhu, Nan Yan

Auto-TLDR; Adaptive Training Sample Update Scheme of Correlation Filter Based Trackers for Visual Tracking

Abstract Slides Poster Similar

Machine-Learned Regularization and Polygonization of Building Segmentation Masks

Stefano Zorzi, Ksenia Bittner, Friedrich Fraundorfer

Auto-TLDR; Automatic Regularization and Polygonization of Building Segmentation masks using Generative Adversarial Network

Abstract Slides Poster Similar

Encoder-Decoder Based Convolutional Neural Networks with Multi-Scale-Aware Modules for Crowd Counting

Pongpisit Thanasutives, Ken-Ichi Fukui, Masayuki Numao, Boonserm Kijsirikul

Auto-TLDR; M-SFANet and M-SegNet for Crowd Counting Using Multi-Scale Fusion Networks

Abstract Slides Poster Similar

RSINet: Rotation-Scale Invariant Network for Online Visual Tracking

Yang Fang, Geunsik Jo, Chang-Hee Lee

Auto-TLDR; RSINet: Rotation-Scale Invariant Network for Adaptive Tracking

Abstract Slides Poster Similar

Anomaly Detection, Localization and Classification for Railway Inspection

Riccardo Gasparini, Andrea D'Eusanio, Guido Borghi, Stefano Pini, Giuseppe Scaglione, Simone Calderara, Eugenio Fedeli, Rita Cucchiara

Auto-TLDR; Anomaly Detection and Localization using thermal images in the lowlight environment

RescueNet: Joint Building Segmentation and Damage Assessment from Satellite Imagery

Auto-TLDR; RescueNet: End-to-End Building Segmentation and Damage Classification for Humanitarian Aid and Disaster Response

Abstract Slides Poster Similar

What and How? Jointly Forecasting Human Action and Pose

Yanjun Zhu, Yanxia Zhang, Qiong Liu, Andreas Girgensohn

Auto-TLDR; Forecasting Human Actions and Motion Trajectories with Joint Action Classification and Pose Regression

Abstract Slides Poster Similar

DAG-Net: Double Attentive Graph Neural Network for Trajectory Forecasting

Alessio Monti, Alessia Bertugli, Simone Calderara, Rita Cucchiara

Auto-TLDR; Recurrent Generative Model for Multi-modal Human Motion Behaviour in Urban Environments

Abstract Slides Poster Similar

RWF-2000: An Open Large Scale Video Database for Violence Detection

Ming Cheng, Kunjing Cai, Ming Li

Auto-TLDR; Flow Gated Network for Violence Detection in Surveillance Cameras

Abstract Slides Poster Similar

Video Semantic Segmentation Using Deep Multi-View Representation Learning

Akrem Sellami, Salvatore Tabbone

Auto-TLDR; Deep Multi-view Representation Learning for Video Object Segmentation

Abstract Slides Poster Similar

Revisiting Sequence-To-Sequence Video Object Segmentation with Multi-Task Loss and Skip-Memory

Fatemeh Azimi, Benjamin Bischke, Sebastian Palacio, Federico Raue, Jörn Hees, Andreas Dengel

Auto-TLDR; Sequence-to-Sequence Learning for Video Object Segmentation

Abstract Slides Poster Similar

MagnifierNet: Learning Efficient Small-Scale Pedestrian Detector towards Multiple Dense Regions

Qi Cheng, Mingqin Chen, Yingjie Wu, Fei Chen, Shiping Lin

Auto-TLDR; MagnifierNet: A Simple but Effective Small-Scale Pedestrian Detection Towards Multiple Dense Regions

Abstract Slides Poster Similar

Early Wildfire Smoke Detection in Videos

Taanya Gupta, Hengyue Liu, Bir Bhanu

Auto-TLDR; Semi-supervised Spatio-Temporal Video Object Segmentation for Automatic Detection of Smoke in Videos during Forest Fire

PolyLaneNet: Lane Estimation Via Deep Polynomial Regression

Talles Torres, Rodrigo Berriel, Thiago Paixão, Claudine Badue, Alberto F. De Souza, Thiago Oliveira-Santos

Auto-TLDR; Real-Time Lane Detection with Deep Polynomial Regression

Abstract Slides Poster Similar

Multi-Resolution Fusion and Multi-Scale Input Priors Based Crowd Counting

Usman Sajid, Wenchi Ma, Guanghui Wang

Auto-TLDR; Multi-resolution Fusion Based End-to-End Crowd Counting in Still Images

Abstract Slides Poster Similar

Visual Saliency Oriented Vehicle Scale Estimation

Qixin Chen, Tie Liu, Jiali Ding, Zejian Yuan, Yuanyuan Shang

Auto-TLDR; Regularized Intensity Matching for Vehicle Scale Estimation with salient object detection

Abstract Slides Poster Similar

Tiny Object Detection in Aerial Images

Jinwang Wang, Wen Yang, Haowen Guo, Ruixiang Zhang, Gui-Song Xia

Auto-TLDR; Tiny Object Detection in Aerial Images Using Multiple Center Points Based Learning Network

A Grid-Based Representation for Human Action Recognition

Soufiane Lamghari, Guillaume-Alexandre Bilodeau, Nicolas Saunier

Auto-TLDR; GRAR: Grid-based Representation for Action Recognition in Videos

Abstract Slides Poster Similar

Global Feature Aggregation for Accident Anticipation

Mishal Fatima, Umar Karim Khan, Chong Min Kyung

Auto-TLDR; Feature Aggregation for Predicting Accidents in Video Sequences

SAT-Net: Self-Attention and Temporal Fusion for Facial Action Unit Detection

Zhihua Li, Zheng Zhang, Lijun Yin

Auto-TLDR; Temporal Fusion and Self-Attention Network for Facial Action Unit Detection

Abstract Slides Poster Similar

Tackling Occlusion in Siamese Tracking with Structured Dropouts

Deepak Gupta, Efstratios Gavves, Arnold Smeulders

Auto-TLDR; Structured Dropout for Occlusion in latent space

Abstract Slides Poster Similar

Derivation of Geometrically and Semantically Annotated UAV Datasets at Large Scales from 3D City Models

Sidi Wu, Lukas Liebel, Marco Körner

Auto-TLDR; Large-Scale Dataset of Synthetic UAV Imagery for Geometric and Semantic Annotation

Abstract Slides Poster Similar

Ground-truthing Large Human Behavior Monitoring Datasets

Tehreem Qasim, Robert Fisher, Naeem Bhatti

Auto-TLDR; Semi-automated Groundtruthing for Large Video Datasets

Abstract Slides Poster Similar