SiamMT: Real-Time Arbitrary Multi-Object Tracking

Lorenzo Vaquero,

Manuel Mucientes,

Victor Brea

Auto-TLDR; SiamMT: A Deep-Learning-based Arbitrary Multi-Object Tracking System for Video

Similar papers

RSINet: Rotation-Scale Invariant Network for Online Visual Tracking

Yang Fang, Geunsik Jo, Chang-Hee Lee

Auto-TLDR; RSINet: Rotation-Scale Invariant Network for Adaptive Tracking

Exploiting Distilled Learning for Deep Siamese Tracking

Chengxin Liu, Zhiguo Cao, Wei Li, Yang Xiao, Shuaiyuan Du, Angfan Zhu

Auto-TLDR; Distilled Learning Framework for Siamese Tracking

SynDHN: Multi-Object Fish Tracker Trained on Synthetic Underwater Videos

Mygel Andrei Martija, Prospero Naval

Auto-TLDR; Underwater Multi-Object Tracking in the Wild with Deep Hungarian Network

MFST: Multi-Features Siamese Tracker

Zhenxi Li, Guillaume-Alexandre Bilodeau, Wassim Bouachir

Auto-TLDR; Multi-Features Siamese Tracker for Robust Deep Similarity Tracking

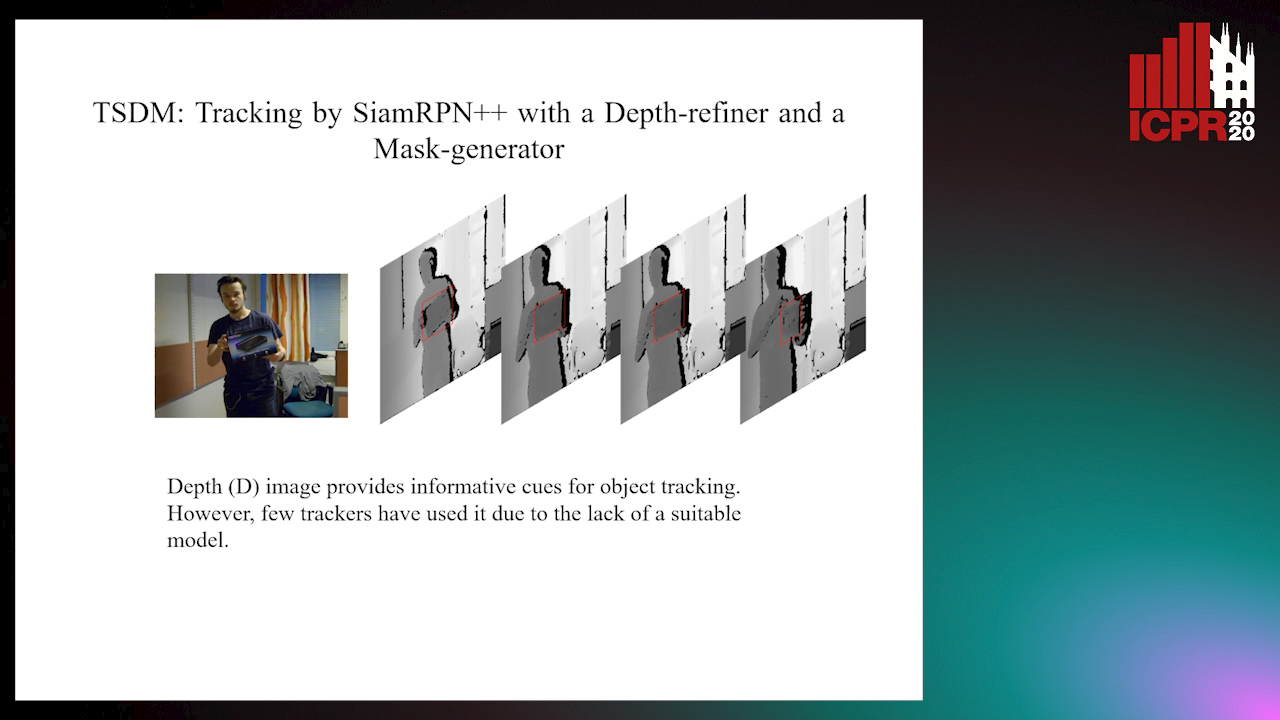

TSDM: Tracking by SiamRPN++ with a Depth-Refiner and a Mask-Generator

Pengyao Zhao, Quanli Liu, Wei Wang, Qiang Guo

Auto-TLDR; TSDM: A Depth-D Tracker for 3D Object Tracking

Siamese Fully Convolutional Tracker with Motion Correction

Mathew Francis, Prithwijit Guha

Auto-TLDR; A Siamese Ensemble for Visual Tracking with Appearance and Motion Components

Model Decay in Long-Term Tracking

Efstratios Gavves, Ran Tao, Deepak Gupta, Arnold Smeulders

Auto-TLDR; Model Bias in Long-Term Tracking

Tackling Occlusion in Siamese Tracking with Structured Dropouts

Deepak Gupta, Efstratios Gavves, Arnold Smeulders

Auto-TLDR; Structured Dropout for Occlusion in latent space

Compact and Discriminative Multi-Object Tracking with Siamese CNNs

Claire Labit-Bonis, Jérôme Thomas, Frederic Lerasle

Auto-TLDR; Fast, Light-Weight and All-in-One Single Object Tracking for Multi-Target Management

DAL: A Deep Depth-Aware Long-Term Tracker

Yanlin Qian, Song Yan, Alan Lukežič, Matej Kristan, Joni-Kristian Kamarainen, Jiri Matas

Auto-TLDR; Deep Depth-Aware Long-Term RGBD Tracking with Deep Discriminative Correlation Filter

VTT: Long-Term Visual Tracking with Transformers

Tianling Bian, Yang Hua, Tao Song, Zhengui Xue, Ruhui Ma, Neil Robertson, Haibing Guan

Auto-TLDR; Visual Tracking Transformer with transformers for long-term visual tracking

Robust Visual Object Tracking with Two-Stream Residual Convolutional Networks

Ning Zhang, Jingen Liu, Ke Wang, Dan Zeng, Tao Mei

Auto-TLDR; Two-Stream Residual Convolutional Network for Visual Tracking

Siamese Dynamic Mask Estimation Network for Fast Video Object Segmentation

Dexiang Hong, Guorong Li, Kai Xu, Li Su, Qingming Huang

Auto-TLDR; Siamese Dynamic Mask Estimation for Video Object Segmentation

AerialMPTNet: Multi-Pedestrian Tracking in Aerial Imagery Using Temporal and Graphical Features

Maximilian Kraus, Seyed Majid Azimi, Emec Ercelik, Reza Bahmanyar, Peter Reinartz, Alois Knoll

Auto-TLDR; AerialMPTNet: A novel approach for multi-pedestrian tracking in geo-referenced aerial imagery by fusing appearance features

Visual Object Tracking in Drone Images with Deep Reinforcement Learning

Auto-TLDR; A Deep Reinforcement Learning based Single Object Tracker for Drone Applications

Adaptive Context-Aware Discriminative Correlation Filters for Robust Visual Object Tracking

Tianyang Xu, Zhenhua Feng, Xiaojun Wu, Josef Kittler

Auto-TLDR; ACA-DCF: Adaptive Context-Aware Discriminative Correlation Filter with complementary attention mechanisms

Reducing False Positives in Object Tracking with Siamese Network

Takuya Ogawa, Takashi Shibata, Shoji Yachida, Toshinori Hosoi

Auto-TLDR; Robust Long-Term Object Tracking with Adaptive Search based on Motion Models

Motion U-Net: Multi-Cue Encoder-Decoder Network for Motion Segmentation

Gani Rahmon, Filiz Bunyak, Kannappan Palaniappan

Auto-TLDR; Motion U-Net: A Deep Learning Framework for Robust Moving Object Detection under Challenging Conditions

Tracking Fast Moving Objects by Segmentation Network

Auto-TLDR; Fast Moving Objects Tracking by Segmentation Using Deep Learning

Region-Based Non-Local Operation for Video Classification

Auto-TLDR; Region-Based Non-Local Operation for Deep Self-Attention in Convolutional Neural Networks

Utilising Visual Attention Cues for Vehicle Detection and Tracking

Feiyan Hu, Venkatesh Gurram Munirathnam, Noel E O'Connor, Alan Smeaton, Suzanne Little

Auto-TLDR; Visual Attention for Object Detection and Tracking in Driver-Assistance Systems

Learning Object Deformation and Motion Adaption for Semi-Supervised Video Object Segmentation

Xiaoyang Zheng, Xin Tan, Jianming Guo, Lizhuang Ma

Auto-TLDR; Semi-supervised Video Object Segmentation with Mask-propagation-based Model

Correlation-Based ConvNet for Small Object Detection in Videos

Brais Bosquet, Manuel Mucientes, Victor Brea

Auto-TLDR; STDnet-ST: An End-to-End Spatio-Temporal Convolutional Neural Network for Small Object Detection in Video

Efficient Correlation Filter Tracking with Adaptive Training Sample Update Scheme

Shan Jiang, Shuxiao Li, Chengfei Zhu, Nan Yan

Auto-TLDR; Adaptive Training Sample Update Scheme of Correlation Filter Based Trackers for Visual Tracking

Not 3D Re-ID: Simple Single Stream 2D Convolution for Robust Video Re-Identification

Auto-TLDR; ResNet50-IBN for Video-based Person Re-Identification using Single Stream 2D Convolution Network

Real-time Pedestrian Lane Detection for Assistive Navigation using Neural Architecture Search

Sui Paul Ang, Son Lam Phung, Thi Nhat Anh Nguyen, Soan T. M. Duong, Abdesselam Bouzerdoum, Mark M. Schira

Auto-TLDR; Real-Time Pedestrian Lane Detection Using Deep Neural Networks

Abstract Slides Poster Similar

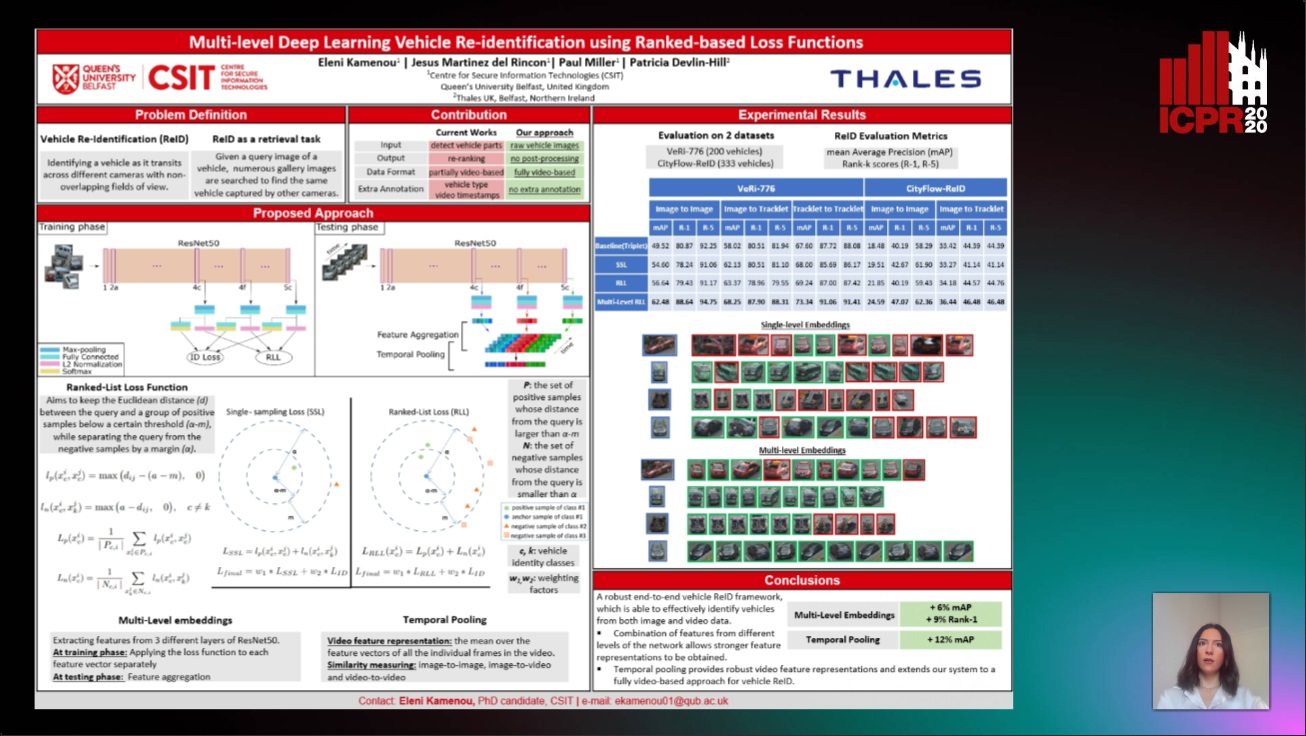

Multi-Level Deep Learning Vehicle Re-Identification Using Ranked-Based Loss Functions

Eleni Kamenou, Jesus Martinez-Del-Rincon, Paul Miller, Patricia Devlin-Hill

Auto-TLDR; Multi-Level Re-identification Network for Vehicle Re-Identification

IPT: A Dataset for Identity Preserved Tracking in Closed Domains

Thomas Heitzinger, Martin Kampel

Auto-TLDR; Identity Preserved Tracking Using Depth Data for Privacy

RONELD: Robust Neural Network Output Enhancement for Active Lane Detection

Zhe Ming Chng, Joseph Mun Hung Lew, Jimmy Addison Lee

Auto-TLDR; Real-Time Robust Neural Network Output Enhancement for Active Lane Detection

Detecting Marine Species in Echograms Via Traditional, Hybrid, and Deep Learning Frameworks

Tunai Porto Marques, Alireza Rezvanifar, Melissa Cote, Alexandra Branzan Albu, Kaan Ersahin, Todd Mudge, Stephane Gauthier

Auto-TLDR; End-to-End Deep Learning for Echogram Interpretation of Marine Species in Echograms

Attention Pyramid Module for Scene Recognition

Zhinan Qiao, Xiaohui Yuan, Chengyuan Zhuang, Abolfazl Meyarian

Auto-TLDR; Attention Pyramid Module for Multi-Scale Scene Recognition

Temporal Feature Enhancement Network with External Memory for Object Detection in Surveillance Video

Masato Fujitake, Akihiro Sugimoto

Auto-TLDR; Temporal Attention Based External Memory Network for Surveillance Object Detection

Online Object Recognition Using CNN-Based Algorithm on High-Speed Camera Imaging

Shigeaki Namiki, Keiko Yokoyama, Shoji Yachida, Takashi Shibata, Hiroyoshi Miyano, Masatoshi Ishikawa

Auto-TLDR; Real-Time Object Recognition with High-Speed Camera Imaging with Population Data Clearing and Data Ensemble

Lightweight Low-Resolution Face Recognition for Surveillance Applications

Yoanna Martínez-Díaz, Heydi Mendez-Vazquez, Luis S. Luevano, Leonardo Chang, Miguel Gonzalez-Mendoza

Auto-TLDR; Efficiency of Lightweight Deep Face Networks on Low-Resolution Surveillance Imagery

Progressive Gradient Pruning for Classification, Detection and Domain Adaptation

Le Thanh Nguyen-Meidine, Eric Granger, Marco Pedersoli, Madhu Kiran, Louis-Antoine Blais-Morin

Auto-TLDR; Progressive Gradient Pruning for Iterative Filter Pruning of Convolutional Neural Networks

Mobile Augmented Reality: Fast, Precise, and Smooth Planar Object Tracking

Dmitrii Matveichev, Daw-Tung Lin

Auto-TLDR; Planar Object Tracking with Sparse Optical Flow Tracking and Descriptor Matching

Fast and Accurate Real-Time Semantic Segmentation with Dilated Asymmetric Convolutions

Leonel Rosas-Arias, Gibran Benitez-Garcia, Jose Portillo-Portillo, Gabriel Sanchez-Perez, Keiji Yanai

Auto-TLDR; FASSD-Net: Dilated Asymmetric Pyramidal Fusion for Real-Time Semantic Segmentation

An Empirical Analysis of Visual Features for Multiple Object Tracking in Urban Scenes

Mehdi Miah, Justine Pepin, Nicolas Saunier, Guillaume-Alexandre Bilodeau

Auto-TLDR; Evaluating Appearance Features for Multiple Object Tracking in Urban Scenes

Convolutional STN for Weakly Supervised Object Localization

Akhil Meethal, Marco Pedersoli, Soufiane Belharbi, Eric Granger

Auto-TLDR; Spatial Localization for Weakly Supervised Object Localization

Building Computationally Efficient and Well-Generalizing Person Re-Identification Models with Metric Learning

Vladislav Sovrasov, Dmitry Sidnev

Auto-TLDR; Cross-Domain Generalization in Person Re-identification using Omni-Scale Network

Light3DPose: Real-Time Multi-Person 3D Pose Estimation from Multiple Views

Alessio Elmi, Davide Mazzini, Pietro Tortella

Auto-TLDR; 3D Pose Estimation of Multiple People from a Few Calibrated Camera Views using Deep Learning

Visual Saliency Oriented Vehicle Scale Estimation

Qixin Chen, Tie Liu, Jiali Ding, Zejian Yuan, Yuanyuan Shang

Auto-TLDR; Regularized Intensity Matching for Vehicle Scale Estimation with salient object detection

3D Attention Mechanism for Fine-Grained Classification of Table Tennis Strokes Using a Twin Spatio-Temporal Convolutional Neural Networks

Pierre-Etienne Martin, Jenny Benois-Pineau, Renaud Péteri, Julien Morlier

Auto-TLDR; Attentional Blocks for Action Recognition in Table Tennis Strokes

Force Banner for the Recognition of Spatial Relations

Robin Deléarde, Camille Kurtz, Laurent Wendling, Philippe Dejean

Auto-TLDR; Spatial Relation Recognition using Force Banners

3D Facial Matching by Spiral Convolutional Metric Learning and a Biometric Fusion-Net of Demographic Properties

Soha Sadat Mahdi, Nele Nauwelaers, Philip Joris, Giorgos Bouritsas, Imperial London, Sergiy Bokhnyak, Susan Walsh, Mark Shriver, Michael Bronstein, Peter Claes

Auto-TLDR; Multi-biometric Fusion for Biometric Verification using 3D Facial Measures

On the Information of Feature Maps and Pruning of Deep Neural Networks

Mohammadreza Soltani, Suya Wu, Jie Ding, Robert Ravier, Vahid Tarokh

Auto-TLDR; Compressing Deep Neural Models Using Mutual Information

Video Semantic Segmentation Using Deep Multi-View Representation Learning

Akrem Sellami, Salvatore Tabbone

Auto-TLDR; Deep Multi-view Representation Learning for Video Object Segmentation

Object Detection in the DCT Domain: Is Luminance the Solution?

Benjamin Deguerre, Clement Chatelain, Gilles Gasso

Auto-TLDR; Jpeg Deep: Object Detection Using Compressed JPEG Images
