Siamese Fully Convolutional Tracker with Motion Correction

Mathew Francis, Prithwijit Guha

Auto-TLDR; A Siamese Ensemble for Visual Tracking with Appearance and Motion Components

Similar papers

Tackling Occlusion in Siamese Tracking with Structured Dropouts

Deepak Gupta, Efstratios Gavves, Arnold Smeulders

Auto-TLDR; Structured Dropout for Occlusion in latent space

Robust Visual Object Tracking with Two-Stream Residual Convolutional Networks

Ning Zhang, Jingen Liu, Ke Wang, Dan Zeng, Tao Mei

Auto-TLDR; Two-Stream Residual Convolutional Network for Visual Tracking

DAL: A Deep Depth-Aware Long-Term Tracker

Yanlin Qian, Song Yan, Alan Lukežič, Matej Kristan, Joni-Kristian Kamarainen, Jiri Matas

Auto-TLDR; Deep Depth-Aware Long-Term RGBD Tracking with Deep Discriminative Correlation Filter

MFST: Multi-Features Siamese Tracker

Zhenxi Li, Guillaume-Alexandre Bilodeau, Wassim Bouachir

Auto-TLDR; Multi-Features Siamese Tracker for Robust Deep Similarity Tracking

Model Decay in Long-Term Tracking

Efstratios Gavves, Ran Tao, Deepak Gupta, Arnold Smeulders

Auto-TLDR; Model Bias in Long-Term Tracking

RSINet: Rotation-Scale Invariant Network for Online Visual Tracking

Yang Fang, Geunsik Jo, Chang-Hee Lee

Auto-TLDR; RSINet: Rotation-Scale Invariant Network for Adaptive Tracking

Adaptive Context-Aware Discriminative Correlation Filters for Robust Visual Object Tracking

Tianyang Xu, Zhenhua Feng, Xiaojun Wu, Josef Kittler

Auto-TLDR; ACA-DCF: Adaptive Context-Aware Discriminative Correlation Filter with complementary attention mechanisms

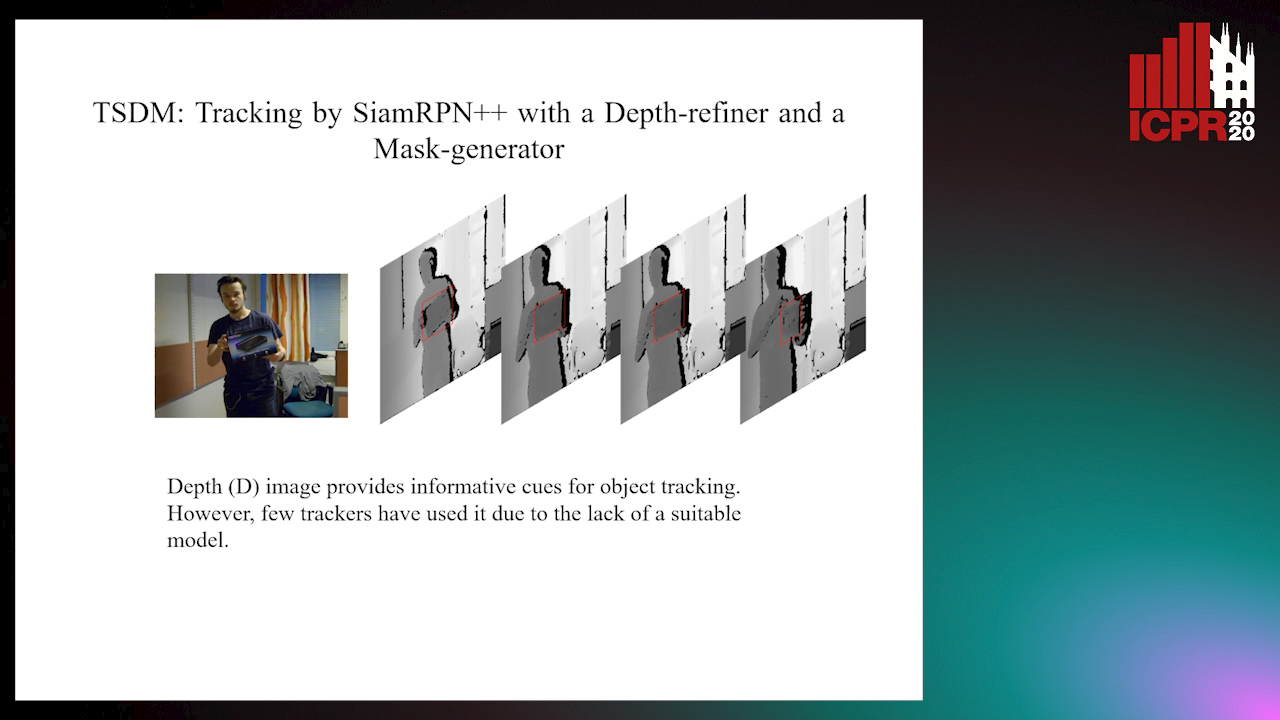

TSDM: Tracking by SiamRPN++ with a Depth-Refiner and a Mask-Generator

Pengyao Zhao, Quanli Liu, Wei Wang, Qiang Guo

Auto-TLDR; TSDM: A Depth-D Tracker for 3D Object Tracking

SiamMT: Real-Time Arbitrary Multi-Object Tracking

Lorenzo Vaquero, Manuel Mucientes, Victor Brea

Auto-TLDR; SiamMT: A Deep-Learning-based Arbitrary Multi-Object Tracking System for Video

Reducing False Positives in Object Tracking with Siamese Network

Takuya Ogawa, Takashi Shibata, Shoji Yachida, Toshinori Hosoi

Auto-TLDR; Robust Long-Term Object Tracking with Adaptive Search based on Motion Models

Visual Object Tracking in Drone Images with Deep Reinforcement Learning

Auto-TLDR; A Deep Reinforcement Learning based Single Object Tracker for Drone Applications

Exploiting Distilled Learning for Deep Siamese Tracking

Chengxin Liu, Zhiguo Cao, Wei Li, Yang Xiao, Shuaiyuan Du, Angfan Zhu

Auto-TLDR; Distilled Learning Framework for Siamese Tracking

VTT: Long-Term Visual Tracking with Transformers

Tianling Bian, Yang Hua, Tao Song, Zhengui Xue, Ruhui Ma, Neil Robertson, Haibing Guan

Auto-TLDR; Visual Tracking Transformer for Long-Term Visual Tracking

Siamese Dynamic Mask Estimation Network for Fast Video Object Segmentation

Dexiang Hong, Guorong Li, Kai Xu, Li Su, Qingming Huang

Auto-TLDR; Siamese Dynamic Mask Estimation for Video Object Segmentation

Efficient Correlation Filter Tracking with Adaptive Training Sample Update Scheme

Shan Jiang, Shuxiao Li, Chengfei Zhu, Nan Yan

Auto-TLDR; Adaptive Training Sample Update Scheme of Correlation Filter Based Trackers for Visual Tracking

Compact and Discriminative Multi-Object Tracking with Siamese CNNs

Claire Labit-Bonis, Jérôme Thomas, Frederic Lerasle

Auto-TLDR; Fast, Light-Weight and All-in-One Single Object Tracking for Multi-Target Management

AerialMPTNet: Multi-Pedestrian Tracking in Aerial Imagery Using Temporal and Graphical Features

Maximilian Kraus, Seyed Majid Azimi, Emec Ercelik, Reza Bahmanyar, Peter Reinartz, Alois Knoll

Auto-TLDR; AerialMPTNet: A novel approach for multi-pedestrian tracking in geo-referenced aerial imagery by fusing appearance features

Mobile Augmented Reality: Fast, Precise, and Smooth Planar Object Tracking

Dmitrii Matveichev, Daw-Tung Lin

Auto-TLDR; Planar Object Tracking with Sparse Optical Flow Tracking and Descriptor Matching

SynDHN: Multi-Object Fish Tracker Trained on Synthetic Underwater Videos

Mygel Andrei Martija, Prospero Naval

Auto-TLDR; Underwater Multi-Object Tracking in the Wild with Deep Hungarian Network

STaRFlow: A SpatioTemporal Recurrent Cell for Lightweight Multi-Frame Optical Flow Estimation

Pierre Godet, Alexandre Boulch, Aurélien Plyer, Guy Le Besnerais

Auto-TLDR; STaRFlow: A lightweight CNN-based algorithm for optical flow estimation

Video Semantic Segmentation Using Deep Multi-View Representation Learning

Akrem Sellami, Salvatore Tabbone

Auto-TLDR; Deep Multi-view Representation Learning for Video Object Segmentation

Object Segmentation Tracking from Generic Video Cues

Amirhossein Kardoost, Sabine Müller, Joachim Weickert, Margret Keuper

Auto-TLDR; A Light-Weight Variational Framework for Video Object Segmentation

Utilising Visual Attention Cues for Vehicle Detection and Tracking

Feiyan Hu, Venkatesh Gurram Munirathnam, Noel E O'Connor, Alan Smeaton, Suzanne Little

Auto-TLDR; Visual Attention for Object Detection and Tracking in Driver-Assistance Systems

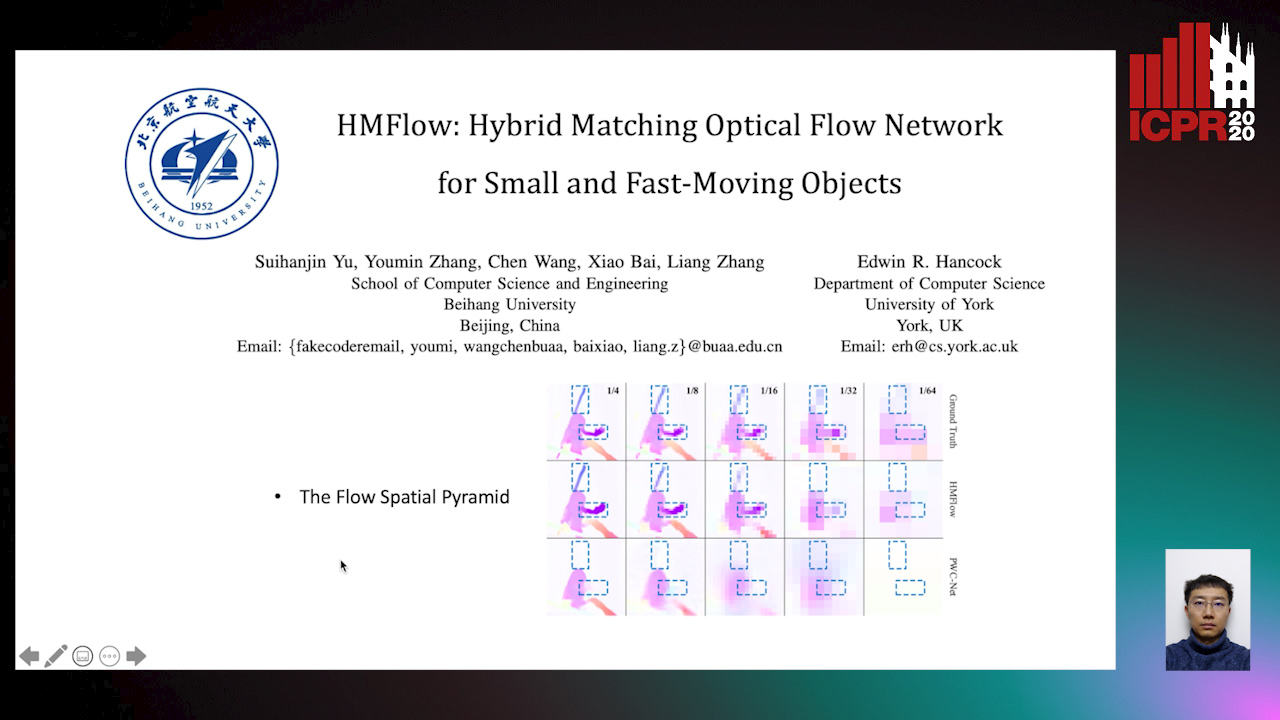

HMFlow: Hybrid Matching Optical Flow Network for Small and Fast-Moving Objects

Suihanjin Yu, Youmin Zhang, Chen Wang, Xiao Bai, Liang Zhang, Edwin Hancock

Auto-TLDR; Hybrid Matching Optical Flow Network with Global Matching Component

Learning Object Deformation and Motion Adaption for Semi-Supervised Video Object Segmentation

Xiaoyang Zheng, Xin Tan, Jianming Guo, Lizhuang Ma

Auto-TLDR; Semi-supervised Video Object Segmentation with Mask-propagation-based Model

Visual Saliency Oriented Vehicle Scale Estimation

Qixin Chen, Tie Liu, Jiali Ding, Zejian Yuan, Yuanyuan Shang

Auto-TLDR; Regularized Intensity Matching for Vehicle Scale Estimation with salient object detection

An Adaptive Fusion Model Based on Kalman Filtering and LSTM for Fast Tracking of Road Signs

Chengliang Wang, Xin Xie, Chao Liao

Auto-TLDR; Fusion of ThunderNet and Region Growing Detector for Road Sign Detection and Tracking

Tracking Fast Moving Objects by Segmentation Network

Auto-TLDR; Fast Moving Objects Tracking by Segmentation Using Deep Learning

Two-Stage Adaptive Object Scene Flow Using Hybrid CNN-CRF Model

Congcong Li, Haoyu Ma, Qingmin Liao

Auto-TLDR; Adaptive object scene flow estimation using a hybrid CNN-CRF model and adaptive iteration

Self-Supervised Joint Encoding of Motion and Appearance for First Person Action Recognition

Mirco Planamente, Andrea Bottino, Barbara Caputo

Auto-TLDR; A Single Stream Architecture for Egocentric Action Recognition from the First-Person Point of View

ACCLVOS: Atrous Convolution with Spatial-Temporal ConvLSTM for Video Object Segmentation

Muzhou Xu, Shan Zong, Chunping Liu, Shengrong Gong, Zhaohui Wang, Yu Xia

Auto-TLDR; Semi-supervised Video Object Segmentation using U-shape Convolution and ConvLSTM

Early Wildfire Smoke Detection in Videos

Taanya Gupta, Hengyue Liu, Bir Bhanu

Auto-TLDR; Semi-supervised Spatio-Temporal Video Object Segmentation for Automatic Detection of Smoke in Videos during Forest Fire

A Grid-Based Representation for Human Action Recognition

Soufiane Lamghari, Guillaume-Alexandre Bilodeau, Nicolas Saunier

Auto-TLDR; GRAR: Grid-based Representation for Action Recognition in Videos

Revisiting Optical Flow Estimation in 360 Videos

Keshav Bhandari, Ziliang Zong, Yan Yan

Auto-TLDR; LiteFlowNet360: A Domain Adaptation Framework for 360 Video Optical Flow Estimation

Video Object Detection Using Object's Motion Context and Spatio-Temporal Feature Aggregation

Jaekyum Kim, Junho Koh, Byeongwon Lee, Seungji Yang, Jun Won Choi

Auto-TLDR; Video Object Detection Using Spatio-Temporal Aggregated Features and Gated Attention Network

Flow-Guided Spatial Attention Tracking for Egocentric Activity Recognition

Auto-TLDR; Flow-Guided Spatial Attention Tracking for Egocentric Activity Recognition

FC-DCNN: A Densely Connected Neural Network for Stereo Estimation

Dominik Hirner, Friedrich Fraundorfer

Auto-TLDR; FC-DCNN: A Lightweight Network for Stereo Estimation

GraphBGS: Background Subtraction Via Recovery of Graph Signals

Jhony Heriberto Giraldo Zuluaga, Thierry Bouwmans

Auto-TLDR; Graph BackGround Subtraction using Graph Signals

Motion and Region Aware Adversarial Learning for Fall Detection with Thermal Imaging

Vineet Mehta, Abhinav Dhall, Sujata Pal, Shehroz Khan

Auto-TLDR; Automatic Fall Detection with Adversarial Network using Thermal Imaging Camera

Residual Learning of Video Frame Interpolation Using Convolutional LSTM

Auto-TLDR; Video Frame Interpolation Using Residual Learning and Convolutional LSTMs

Self-Supervised Learning of Dynamic Representations for Static Images

Siyang Song, Enrique Sanchez, Linlin Shen, Michel Valstar

Auto-TLDR; Facial Action Unit Intensity Estimation and Affect Estimation from Still Images with Multiple Temporal Scale

Towards Practical Compressed Video Action Recognition: A Temporal Enhanced Multi-Stream Network

Bing Li, Longteng Kong, Dongming Zhang, Xiuguo Bao, Di Huang, Yunhong Wang

Auto-TLDR; TEMSN: Temporal Enhanced Multi-Stream Network for Compressed Video Action Recognition

Gabriella: An Online System for Real-Time Activity Detection in Untrimmed Security Videos

Mamshad Nayeem Rizve, Ugur Demir, Praveen Tirupattur, Aayush Jung Rana, Kevin Duarte, Ishan Rajendrakumar Dave, Yogesh Rawat, Mubarak Shah

Auto-TLDR; Gabriella: A Real-Time Online System for Activity Detection in Surveillance Videos

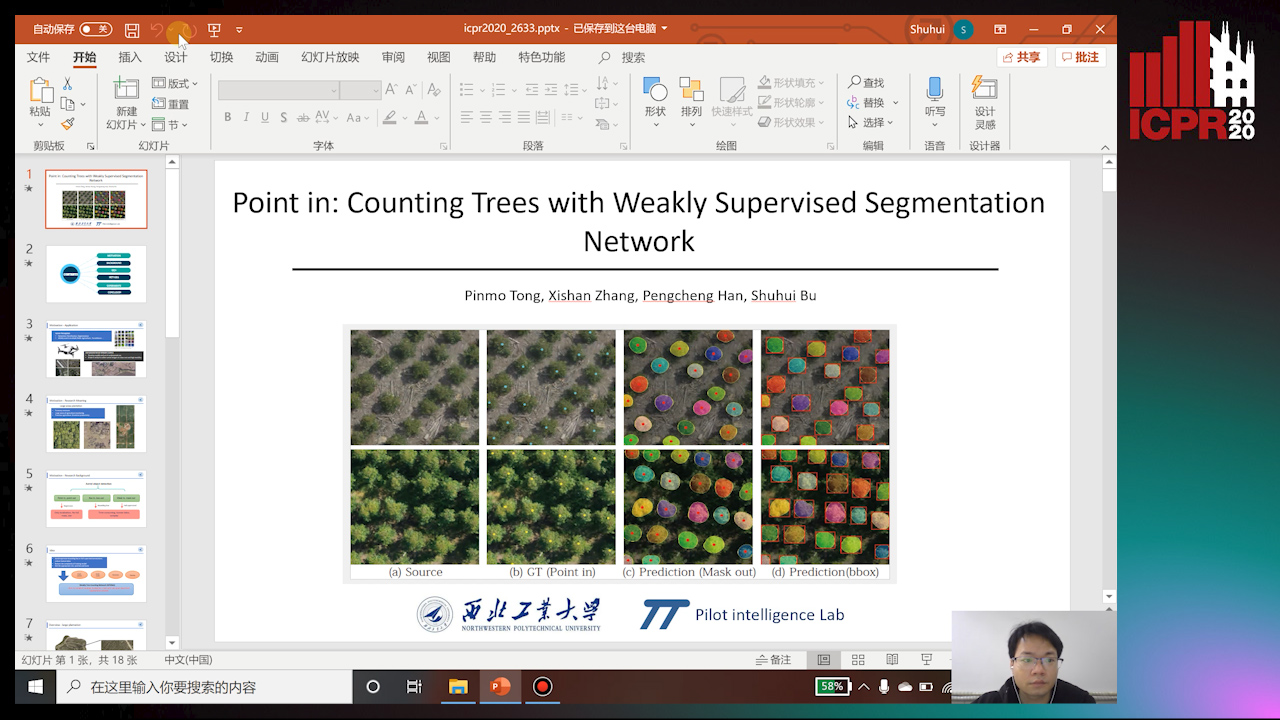

Point In: Counting Trees with Weakly Supervised Segmentation Network

Pinmo Tong, Shuhui Bu, Pengcheng Han

Auto-TLDR; Weakly Supervised Tree Counting Using a Deep Segmentation Network with Localization and Mask Prediction

Revisiting Sequence-To-Sequence Video Object Segmentation with Multi-Task Loss and Skip-Memory

Fatemeh Azimi, Benjamin Bischke, Sebastian Palacio, Federico Raue, Jörn Hees, Andreas Dengel

Auto-TLDR; Sequence-to-Sequence Learning for Video Object Segmentation

Human Segmentation with Dynamic LiDAR Data

Tao Zhong, Wonjik Kim, Masayuki Tanaka, Masatoshi Okutomi

Auto-TLDR; Spatiotemporal Neural Network for Human Segmentation with Dynamic Point Clouds

A Lightweight Network to Learn Optical Flow from Event Data

Auto-TLDR; A lightweight pyramid network with attention mechanism to learn optical flow from events data

Motion U-Net: Multi-Cue Encoder-Decoder Network for Motion Segmentation

Gani Rahmon, Filiz Bunyak, Kannappan Palaniappan

Auto-TLDR; Motion U-Net: A Deep Learning Framework for Robust Moving Object Detection under Challenging Conditions
