Lightweight Low-Resolution Face Recognition for Surveillance Applications

Yoanna Martínez-Díaz,

Heydi Mendez-Vazquez,

Luis S. Luevano,

Leonardo Chang,

Miguel Gonzalez-Mendoza

Auto-TLDR; Efficiency of Lightweight Deep Face Networks on Low-Resolution Surveillance Imagery

Similar papers

DAIL: Dataset-Aware and Invariant Learning for Face Recognition

Gaoang Wang, Chen Lin, Tianqiang Liu, Mingwei He, Jiebo Luo

Auto-TLDR; DAIL: Dataset-Aware and Invariant Learning for Face Recognition

Quality-Based Representation for Unconstrained Face Recognition

Nelson Méndez-Llanes, Katy Castillo-Rosado, Heydi Mendez-Vazquez, Massimo Tistarelli

Auto-TLDR; Activation map for face recognition in unconstrained environments

Face Image Quality Assessment for Model and Human Perception

Ken Chen, Yichao Wu, Zhenmao Li, Yudong Wu, Ding Liang

Auto-TLDR; A labour-saving method for FIQA training with contradictory data from multiple sources

G-FAN: Graph-Based Feature Aggregation Network for Video Face Recognition

He Zhao, Yongjie Shi, Xin Tong, Jingsi Wen, Xianghua Ying, Jinshi Hongbin Zha

Auto-TLDR; Graph-based Feature Aggregation Network for Video Face Recognition

Contrastive Data Learning for Facial Pose and Illumination Normalization

Auto-TLDR; Pose and Illumination Normalization with Contrast Data Learning for Face Recognition

Angular Sparsemax for Face Recognition

Auto-TLDR; Angular Sparsemax for Face Recognition

Age Gap Reducer-GAN for Recognizing Age-Separated Faces

Daksha Yadav, Naman Kohli, Mayank Vatsa, Richa Singh, Afzel Noore

Auto-TLDR; Generative Adversarial Network for Age-separated Face Recognition

Building Computationally Efficient and Well-Generalizing Person Re-Identification Models with Metric Learning

Vladislav Sovrasov, Dmitry Sidnev

Auto-TLDR; Cross-Domain Generalization in Person Re-identification using Omni-Scale Network

Unsupervised Disentangling of Viewpoint and Residues Variations by Substituting Representations for Robust Face Recognition

Minsu Kim, Joanna Hong, Junho Kim, Hong Joo Lee, Yong Man Ro

Auto-TLDR; Unsupervised Disentangling of Identity, viewpoint, and Residue Representations for Robust Face Recognition

ClusterFace: Joint Clustering and Classification for Set-Based Face Recognition

Samadhi Poornima Kumarasinghe Wickrama Arachchilage, Ebroul Izquierdo

Auto-TLDR; Joint Clustering and Classification for Face Recognition in the Wild

Identifying Missing Children: Face Age-Progression Via Deep Feature Aging

Debayan Deb, Divyansh Aggarwal, Anil Jain

Auto-TLDR; Aging Face Features for Missing Children Identification

LiNet: A Lightweight Network for Image Super Resolution

Armin Mehri, Parichehr Behjati Ardakani, Angel D. Sappa

Auto-TLDR; LiNet: A Compact Dense Network for Lightweight Super Resolution

Pose-Robust Face Recognition by Deep Meta Capsule Network-Based Equivariant Embedding

Fangyu Wu, Jeremy Simon Smith, Wenjin Lu, Bailing Zhang

Auto-TLDR; Deep Meta Capsule Network-based Equivariant Embedding Model for Pose-Robust Face Recognition

Boosting High-Level Vision with Joint Compression Artifacts Reduction and Super-Resolution

Xiaoyu Xiang, Qian Lin, Jan Allebach

Auto-TLDR; A Context-Aware Joint CAR and SR Neural Network for High-Resolution Text Recognition and Face Detection

Exemplar Guided Cross-Spectral Face Hallucination Via Mutual Information Disentanglement

Haoxue Wu, Huaibo Huang, Aijing Yu, Jie Cao, Zhen Lei, Ran He

Auto-TLDR; Exemplar Guided Cross-Spectral Face Hallucination with Structural Representation Learning

Cam-Softmax for Discriminative Deep Feature Learning

Tamas Suveges, Stephen James Mckenna

Auto-TLDR; Cam-Softmax: A Generalisation of Activations and Softmax for Deep Feature Spaces

TinyVIRAT: Low-Resolution Video Action Recognition

Ugur Demir, Yogesh Rawat, Mubarak Shah

Auto-TLDR; TinyVIRAT: A Progressive Generative Approach for Action Recognition in Videos

How Important Are Faces for Person Re-Identification?

Julia Dietlmeier, Joseph Antony, Kevin Mcguinness, Noel E O'Connor

Auto-TLDR; Anonymization of Person Re-identification Datasets with Face Detection and Blurring

Multi-Laplacian GAN with Edge Enhancement for Face Super Resolution

Auto-TLDR; Face Image Super-Resolution with Enhanced Edge Information

Object Features and Face Detection Performance: Analyses with 3D-Rendered Synthetic Data

Jian Han, Sezer Karaoglu, Hoang-An Le, Theo Gevers

Auto-TLDR; Synthetic Data for Face Detection Using 3DU Face Dataset

SATGAN: Augmenting Age Biased Dataset for Cross-Age Face Recognition

Wenshuang Liu, Wenting Chen, Yuanlue Zhu, Linlin Shen

Auto-TLDR; SATGAN: Stable Age Translation GAN for Cross-Age Face Recognition

Face Super-Resolution Network with Incremental Enhancement of Facial Parsing Information

Shuang Liu, Chengyi Xiong, Zhirong Gao

Auto-TLDR; Learning-based Face Super-Resolution with Incremental Boosting Facial Parsing Information

Efficient Super Resolution by Recursive Aggregation

Zhengxiong Luo, Yan Huang, Shang Li, Liang Wang, Tieniu Tan

Auto-TLDR; Recursive Aggregation Network for Efficient Deep Super Resolution

Detection of Makeup Presentation Attacks Based on Deep Face Representations

Christian Rathgeb, Pawel Drozdowski, Christoph Busch

Auto-TLDR; An Attack Detection Scheme for Face Recognition Using Makeup Presentation Attacks

Abstract Slides Poster Similar

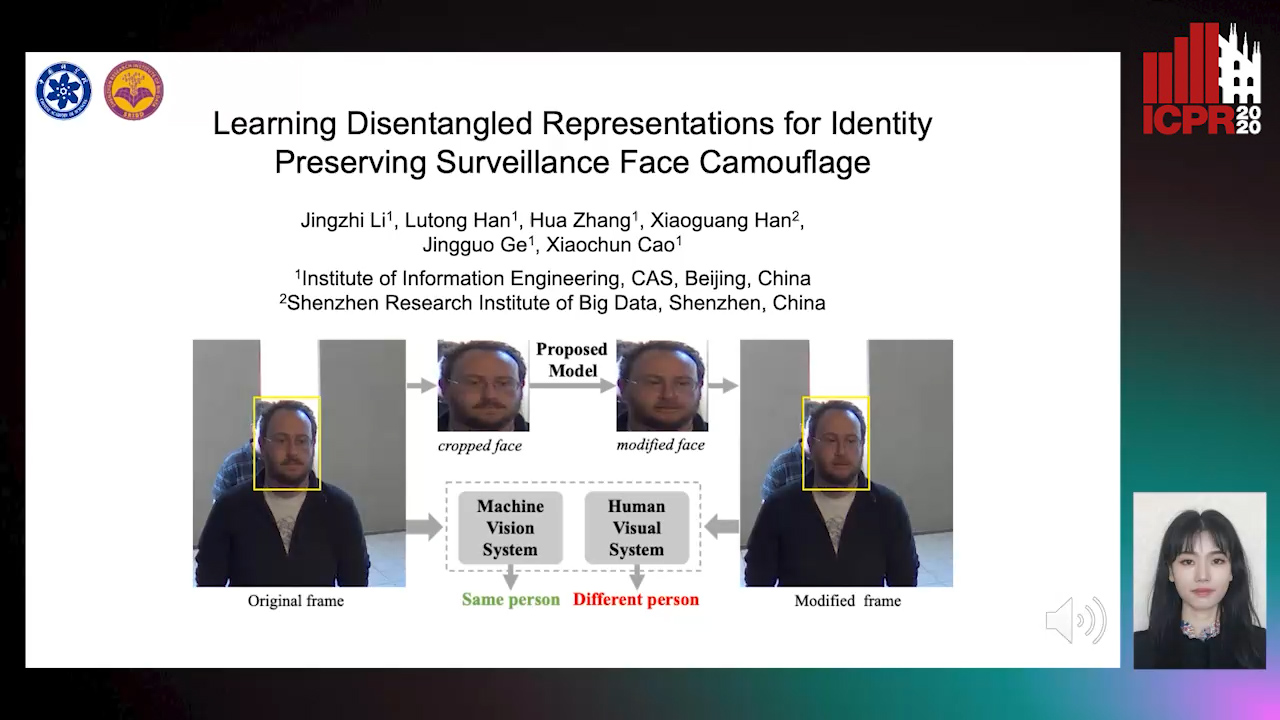

Learning Disentangled Representations for Identity Preserving Surveillance Face Camouflage

Jingzhi Li, Lutong Han, Hua Zhang, Xiaoguang Han, Jingguo Ge, Xiaochu Cao

Auto-TLDR; Individual Face Privacy under Surveillance Scenario with Multi-task Loss Function

SSDL: Self-Supervised Domain Learning for Improved Face Recognition

Samadhi Poornima Kumarasinghe Wickrama Arachchilage, Ebroul Izquierdo

Auto-TLDR; Self-supervised Domain Learning for Face Recognition in unconstrained environments

Super-Resolution Guided Pore Detection for Fingerprint Recognition

Syeda Nyma Ferdous, Ali Dabouei, Jeremy Dawson, Nasser M. Nasrabadi

Auto-TLDR; Super-Resolution Generative Adversarial Network for Fingerprint Recognition Using Pore Features

Abstract Slides Poster Similar

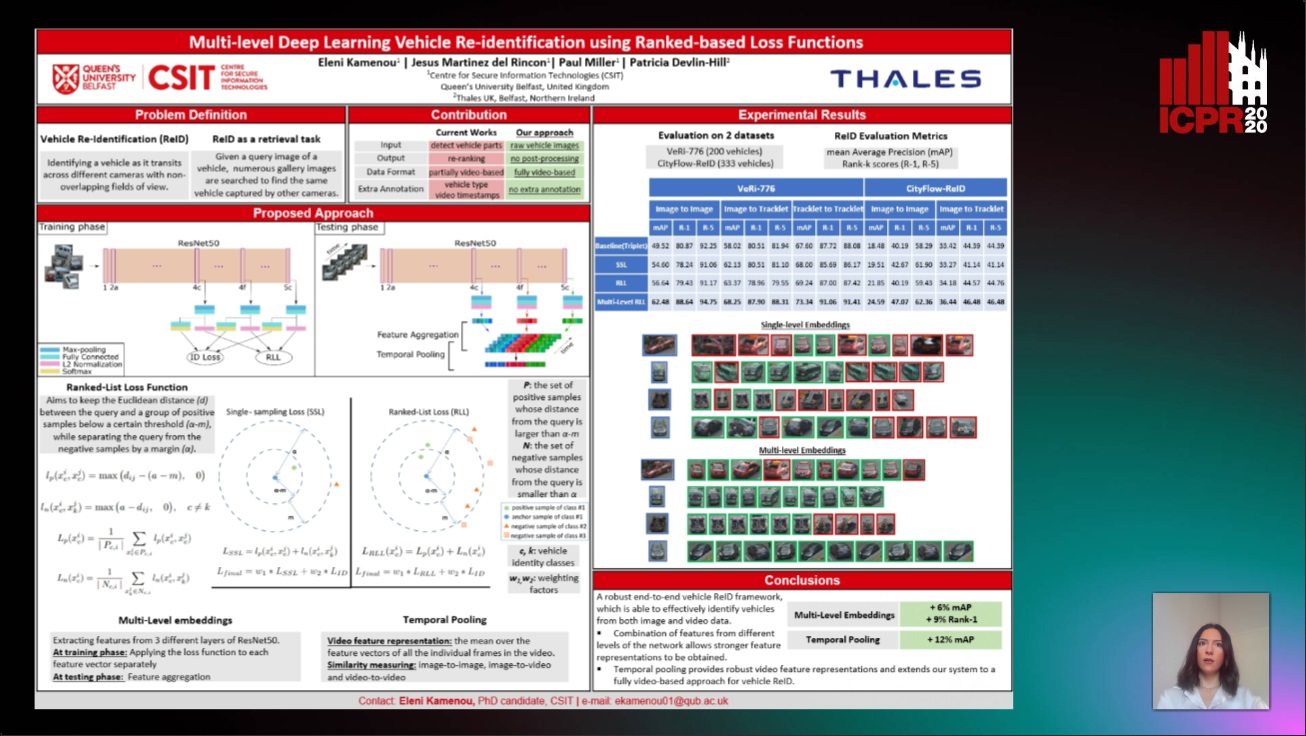

Multi-Level Deep Learning Vehicle Re-Identification Using Ranked-Based Loss Functions

Eleni Kamenou, Jesus Martinez-Del-Rincon, Paul Miller, Patricia Devlin-Hill

Auto-TLDR; Multi-Level Re-identification Network for Vehicle Re-Identification

Face Anti-Spoofing Using Spatial Pyramid Pooling

Lei Shi, Zhuo Zhou, Zhenhua Guo

Auto-TLDR; Spatial Pyramid Pooling for Face Anti-Spoofing

Progressive Splitting and Upscaling Structure for Super-Resolution

Auto-TLDR; PSUS: Progressive and Upscaling Layer for Single Image Super-Resolution

Two-Level Attention-Based Fusion Learning for RGB-D Face Recognition

Hardik Uppal, Alireza Sepas-Moghaddam, Michael Greenspan, Ali Etemad

Auto-TLDR; Fused RGB-D Facial Recognition using Attention-Aware Feature Fusion

Video Face Manipulation Detection through Ensemble of CNNs

Nicolo Bonettini, Edoardo Daniele Cannas, Sara Mandelli, Luca Bondi, Paolo Bestagini, Stefano Tubaro

Auto-TLDR; Face Manipulation Detection in Video Sequences Using Convolutional Neural Networks

Deep Iterative Residual Convolutional Network for Single Image Super-Resolution

Rao Muhammad Umer, Gian Luca Foresti, Christian Micheloni

Auto-TLDR; ISRResCNet: Deep Iterative Super-Resolution Residual Convolutional Network for Single Image Super-resolution

Small Object Detection Leveraging on Simultaneous Super-Resolution

Hong Ji, Zhi Gao, Xiaodong Liu, Tiancan Mei

Auto-TLDR; Super-Resolution via Generative Adversarial Network for Small Object Detection

Robust Pedestrian Detection in Thermal Imagery Using Synthesized Images

My Kieu, Lorenzo Berlincioni, Leonardo Galteri, Marco Bertini, Andrew Bagdanov, Alberto Del Bimbo

Auto-TLDR; Improving Pedestrian Detection in the thermal domain using Generative Adversarial Network

Lookalike Disambiguation: Improving Face Identification Performance at Top Ranks

Auto-TLDR; Lookalike Face Identification Using a Disambiguator for Lookalike Images

Joint Face Alignment and 3D Face Reconstruction with Efficient Convolution Neural Networks

Keqiang Li, Huaiyu Wu, Xiuqin Shang, Zhen Shen, Gang Xiong, Xisong Dong, Bin Hu, Fei-Yue Wang

Auto-TLDR; Mobile-FRNet: Efficient 3D Morphable Model Alignment and 3D Face Reconstruction from a Single 2D Facial Image

Abstract Slides Poster Similar

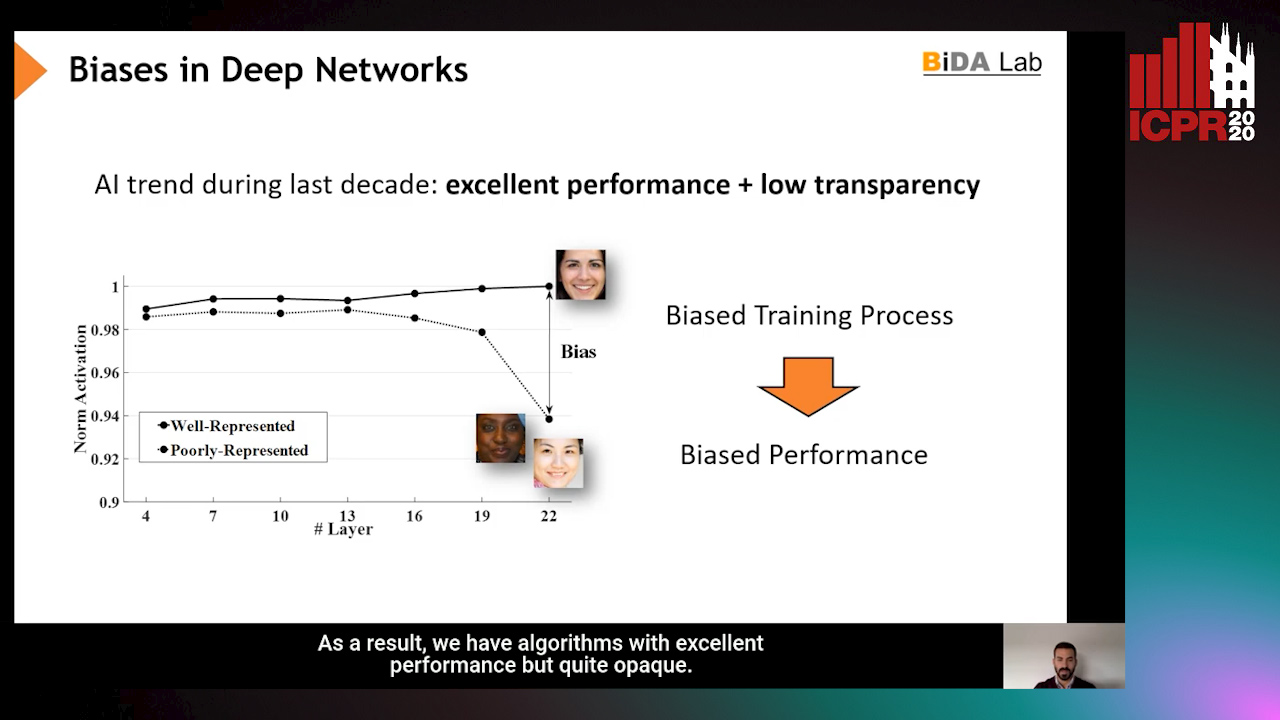

InsideBias: Measuring Bias in Deep Networks and Application to Face Gender Biometrics

Ignacio Serna, Alejandro Peña Almansa, Aythami Morales, Julian Fierrez

Auto-TLDR; InsideBias: Detecting Bias in Deep Neural Networks from Face Images

Hierarchically Aggregated Residual Transformation for Single Image Super Resolution

Auto-TLDR; HARTnet: Hierarchically Aggregated Residual Transformation for Multi-Scale Super-resolution

Improving Low-Resolution Image Classification by Super-Resolution with Enhancing High-Frequency Content

Liguo Zhou, Guang Chen, Mingyue Feng, Alois Knoll

Auto-TLDR; Super-resolution for Low-Resolution Image Classification

One-Shot Representational Learning for Joint Biometric and Device Authentication

Auto-TLDR; Joint Biometric and Device Recognition from a Single Biometric Image

Abstract Slides Poster Similar

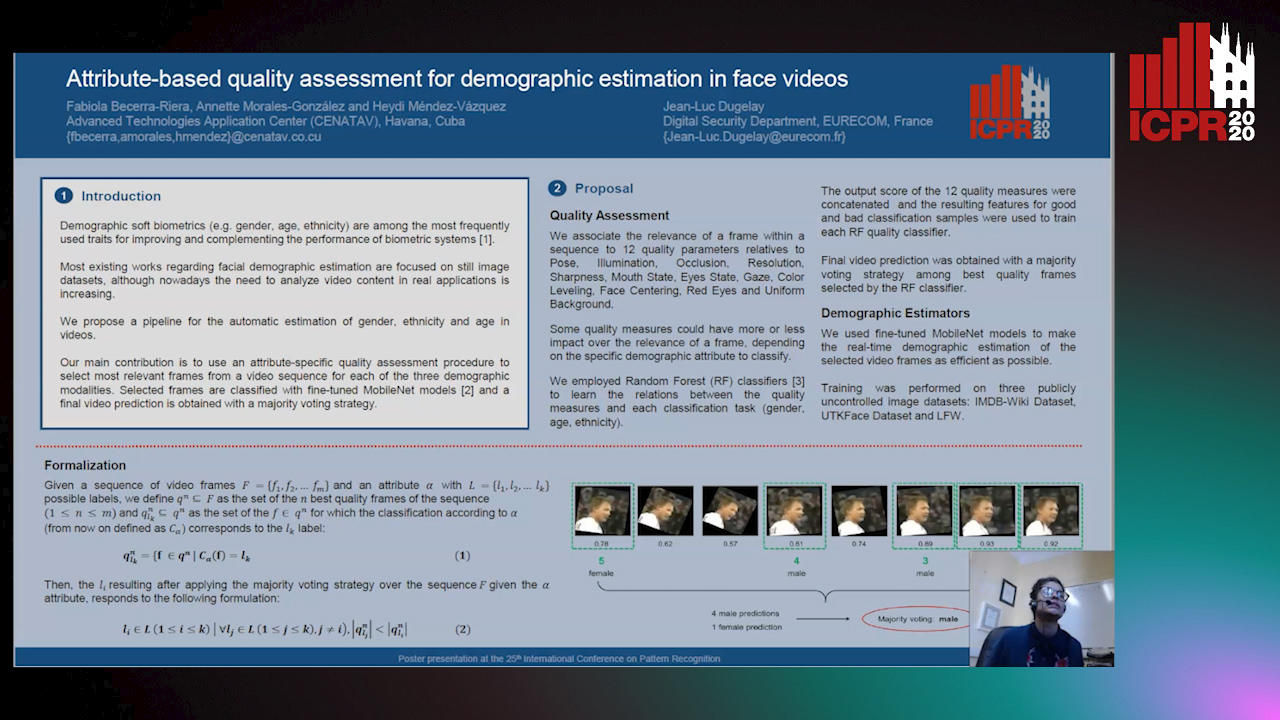

Attribute-Based Quality Assessment for Demographic Estimation in Face Videos

Fabiola Becerra-Riera, Annette Morales-González, Heydi Mendez-Vazquez, Jean-Luc Dugelay

Auto-TLDR; Facial Demographic Estimation in Video Scenarios Using Quality Assessment

On the Information of Feature Maps and Pruning of Deep Neural Networks

Mohammadreza Soltani, Suya Wu, Jie Ding, Robert Ravier, Vahid Tarokh

Auto-TLDR; Compressing Deep Neural Models Using Mutual Information

How Unique Is a Face: An Investigative Study

Michal Balazia, S L Happy, Francois Bremond, Antitza Dantcheva

Auto-TLDR; Uniqueness of Face Recognition: Exploring the Impact of Factors such as image resolution, feature representation, database size, age and gender

Fast and Accurate Real-Time Semantic Segmentation with Dilated Asymmetric Convolutions

Leonel Rosas-Arias, Gibran Benitez-Garcia, Jose Portillo-Portillo, Gabriel Sanchez-Perez, Keiji Yanai

Auto-TLDR; FASSD-Net: Dilated Asymmetric Pyramidal Fusion for Real-Time Semantic Segmentation

Cross-spectrum Face Recognition Using Subspace Projection Hashing

Hanrui Wang, Xingbo Dong, Jin Zhe, Jean-Luc Dugelay, Massimo Tistarelli

Auto-TLDR; Subspace Projection Hashing for Cross-Spectrum Face Recognition

Residual Fractal Network for Single Image Super Resolution by Widening and Deepening

Jiahang Gu, Zhaowei Qu, Xiaoru Wang, Jiawang Dan, Junwei Sun

Auto-TLDR; Residual fractal convolutional network for single image super-resolution

An Adaptive Video-To-Video Face Identification System Based on Self-Training

Eric Lopez-Lopez, Carlos V. Regueiro, Xosé M. Pardo

Auto-TLDR; Adaptive Video-to-Video Face Recognition using Dynamic Ensembles of SVMs
