Multi-Resolution Fusion and Multi-Scale Input Priors Based Crowd Counting

Usman Sajid, Wenchi Ma, Guanghui Wang

Auto-TLDR; Multi-resolution Fusion Based End-to-End Crowd Counting in Still Images

Similar papers

Encoder-Decoder Based Convolutional Neural Networks with Multi-Scale-Aware Modules for Crowd Counting

Pongpisit Thanasutives, Ken-Ichi Fukui, Masayuki Numao, Boonserm Kijsirikul

Auto-TLDR; M-SFANet and M-SegNet for Crowd Counting Using Multi-Scale Fusion Networks

Spatial-Related and Scale-Aware Network for Crowd Counting

Lei Li, Yuan Dong, Hongliang Bai

Auto-TLDR; Spatial Attention for Crowd Counting

VGG-Embedded Adaptive Layer-Normalized Crowd Counting Net with Scale-Shuffling Modules

Dewen Guo, Jie Feng, Bingfeng Zhou

Auto-TLDR; VadaLN: VGG-embedded Adaptive Layer Normalization for Crowd Counting

HANet: Hybrid Attention-Aware Network for Crowd Counting

Xinxing Su, Yuchen Yuan, Xiangbo Su, Zhikang Zou, Shilei Wen, Pan Zhou

Auto-TLDR; HANet: Hybrid Attention-Aware Network for Crowd Counting with Adaptive Compensation Loss

PHNet: Parasite-Host Network for Video Crowd Counting

Shiqiao Meng, Jiajie Li, Weiwei Guo, Jinfeng Jiang, Lai Ye

Auto-TLDR; PHNet: A Parasite-Host Network for Video Crowd Counting

Learning Error-Driven Curriculum for Crowd Counting

Wenxi Li, Zhuoqun Cao, Qian Wang, Songjian Chen, Rui Feng

Auto-TLDR; Learning Error-Driven Curriculum for Crowd Counting with TutorNet

Learning from Web Data: Improving Crowd Counting Via Semi-Supervised Learning

Auto-TLDR; Semi-supervised Crowd Counting Baseline for Deep Neural Networks

DAPC: Domain Adaptation People Counting Via Style-Level Transfer Learning and Scene-Aware Estimation

Na Jiang, Xingsen Wen, Zhiping Shi

Auto-TLDR; Domain Adaptation People Counting via Style-Level Transfer Learning and Scene-Aware Estimation

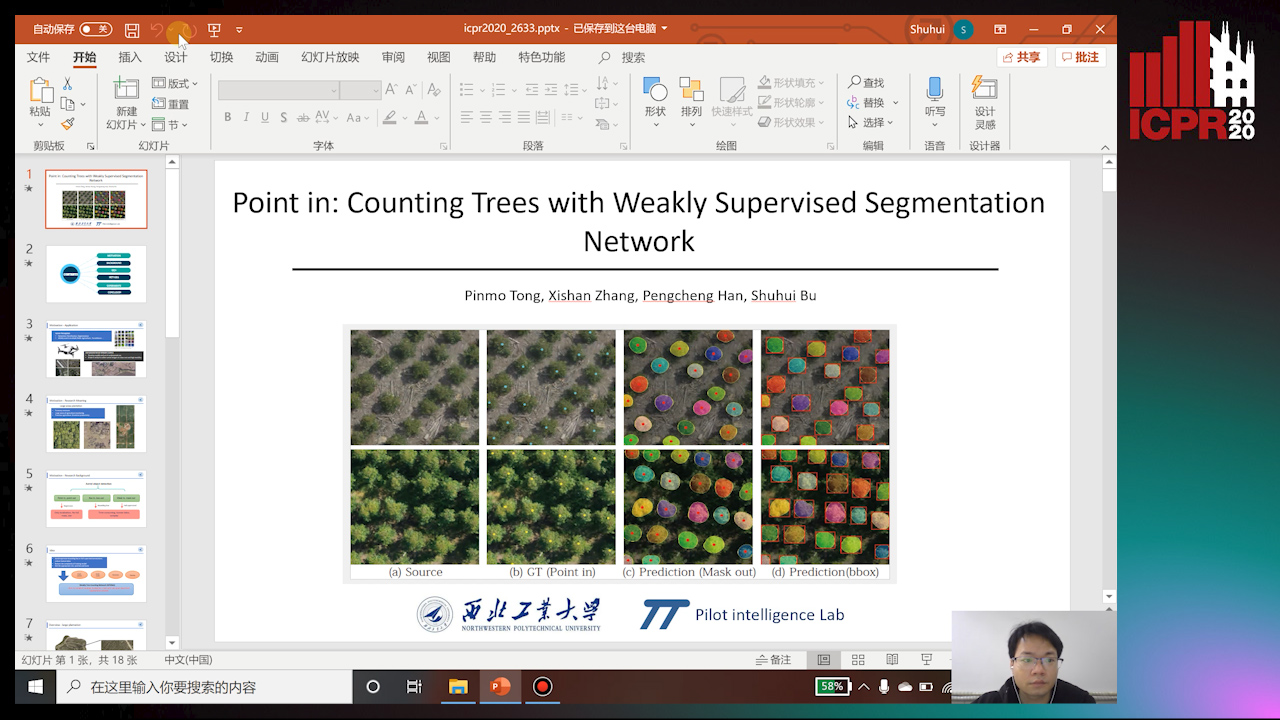

Point In: Counting Trees with Weakly Supervised Segmentation Network

Pinmo Tong, Shuhui Bu, Pengcheng Han

Auto-TLDR; Weakly Supervised Tree Counting Using a Deep Segmentation Network with Localization and Mask Prediction

Learning a Dynamic High-Resolution Network for Multi-Scale Pedestrian Detection

Mengyuan Ding, Shanshan Zhang, Jian Yang

Auto-TLDR; Learnable Dynamic HRNet for Pedestrian Detection

Distortion-Adaptive Grape Bunch Counting for Omnidirectional Images

Ryota Akai, Yuzuko Utsumi, Yuka Miwa, Masakazu Iwamura, Koichi Kise

Auto-TLDR; Object Counting for Omnidirectional Images Using Stereographic Projection

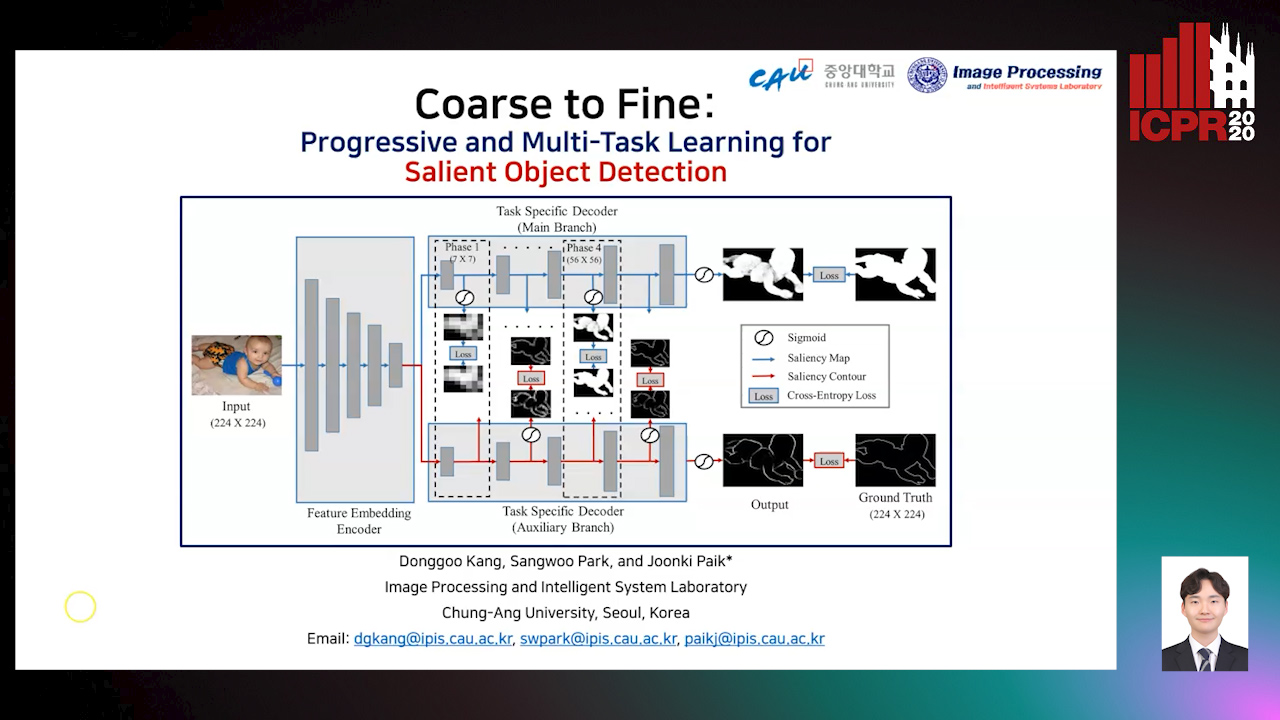

Coarse to Fine: Progressive and Multi-Task Learning for Salient Object Detection

Dong-Goo Kang, Sangwoo Park, Joonki Paik

Auto-TLDR; Progressive and multi-task learning scheme for salient object detection

Efficient-Receptive Field Block with Group Spatial Attention Mechanism for Object Detection

Jiacheng Zhang, Zhicheng Zhao, Fei Su

Auto-TLDR; E-RFB: Efficient-Receptive Field Block for Deep Neural Network for Object Detection

Delivering Meaningful Representation for Monocular Depth Estimation

Doyeon Kim, Donggyu Joo, Junmo Kim

Auto-TLDR; Monocular Depth Estimation by Bridging the Context between Encoding and Decoding

Construction Worker Hardhat-Wearing Detection Based on an Improved BiFPN

Chenyang Zhang, Zhiqiang Tian, Jingyi Song, Yaoyue Zheng, Bo Xu

Auto-TLDR; A One-Stage Object Detection Method for Hardhat-Wearing in Construction Site

AerialMPTNet: Multi-Pedestrian Tracking in Aerial Imagery Using Temporal and Graphical Features

Maximilian Kraus, Seyed Majid Azimi, Emec Ercelik, Reza Bahmanyar, Peter Reinartz, Alois Knoll

Auto-TLDR; AerialMPTNet: A novel approach for multi-pedestrian tracking in geo-referenced aerial imagery by fusing appearance features

Mutual-Supervised Feature Modulation Network for Occluded Pedestrian Detection

Auto-TLDR; A Mutual-Supervised Feature Modulation Network for Occluded Pedestrian Detection

Multi-Scale Residual Pyramid Attention Network for Monocular Depth Estimation

Jing Liu, Xiaona Zhang, Zhaoxin Li, Tianlu Mao

Auto-TLDR; Multi-scale Residual Pyramid Attention Network for Monocular Depth Estimation

Learning to Rank for Active Learning: A Listwise Approach

Minghan Li, Xialei Liu, Joost Van De Weijer, Bogdan Raducanu

Auto-TLDR; Learning Loss for Active Learning

PSDNet: A Balanced Architecture of Accuracy and Parameters for Semantic Segmentation

Auto-TLDR; Pyramid Pooling Module with SE1Cblock and D2SUpsample Network (PSDNet)

FastCompletion: A Cascade Network with Multiscale Group-Fused Inputs for Real-Time Depth Completion

Ang Li, Zejian Yuan, Yonggen Ling, Wanchao Chi, Shenghao Zhang, Chong Zhang

Auto-TLDR; Efficient Depth Completion with Clustered Hourglass Networks

Face Super-Resolution Network with Incremental Enhancement of Facial Parsing Information

Shuang Liu, Chengyi Xiong, Zhirong Gao

Auto-TLDR; Learning-based Face Super-Resolution with Incremental Boosting Facial Parsing Information

Small Object Detection by Generative and Discriminative Learning

Yi Gu, Jie Li, Chentao Wu, Weijia Jia, Jianping Chen

Auto-TLDR; Generative and Discriminative Learning for Small Object Detection

MagnifierNet: Learning Efficient Small-Scale Pedestrian Detector towards Multiple Dense Regions

Qi Cheng, Mingqin Chen, Yingjie Wu, Fei Chen, Shiping Lin

Auto-TLDR; MagnifierNet: A Simple but Effective Small-Scale Pedestrian Detection Towards Multiple Dense Regions

SIMCO: SIMilarity-Based Object COunting

Marco Godi, Christian Joppi, Andrea Giachetti, Marco Cristani

Auto-TLDR; SIMCO: An Unsupervised Multi-class Object Counting Approach on InShape

Adaptive L2 Regularization in Person Re-Identification

Xingyang Ni, Liang Fang, Heikki Juhani Huttunen

Auto-TLDR; AdaptiveReID: Adaptive L2 Regularization for Person Re-identification

Boosting High-Level Vision with Joint Compression Artifacts Reduction and Super-Resolution

Xiaoyu Xiang, Qian Lin, Jan Allebach

Auto-TLDR; A Context-Aware Joint CAR and SR Neural Network for High-Resolution Text Recognition and Face Detection

Nighttime Pedestrian Detection Based on Feature Attention and Transformation

Gang Li, Shanshan Zhang, Jian Yang

Auto-TLDR; FAM and FTM: Enhanced Feature Attention Module and Feature Transformation Module for nighttime pedestrian detection

Image Representation Learning by Transformation Regression

Xifeng Guo, Jiyuan Liu, Sihang Zhou, En Zhu, Shihao Dong

Auto-TLDR; Self-supervised Image Representation Learning using Continuous Parameter Prediction

Real-Time Monocular Depth Estimation with Extremely Light-Weight Neural Network

Mian Jhong Chiu, Wei-Chen Chiu, Hua-Tsung Chen, Jen-Hui Chuang

Auto-TLDR; Real-Time Light-Weight Depth Prediction for Obstacle Avoidance and Environment Sensing with Deep Learning-based CNN

Attention Pyramid Module for Scene Recognition

Zhinan Qiao, Xiaohui Yuan, Chengyuan Zhuang, Abolfazl Meyarian

Auto-TLDR; Attention Pyramid Module for Multi-Scale Scene Recognition

Residual Fractal Network for Single Image Super Resolution by Widening and Deepening

Jiahang Gu, Zhaowei Qu, Xiaoru Wang, Jiawang Dan, Junwei Sun

Auto-TLDR; Residual fractal convolutional network for single image super-resolution

Weight Estimation from an RGB-D Camera in Top-View Configuration

Marco Mameli, Marina Paolanti, Nicola Conci, Filippo Tessaro, Emanuele Frontoni, Primo Zingaretti

Auto-TLDR; Top-View Weight Estimation using Deep Neural Networks

PRF-Ped: Multi-Scale Pedestrian Detector with Prior-Based Receptive Field

Yuzhi Tan, Hongxun Yao, Haoran Li, Xiusheng Lu, Haozhe Xie

Auto-TLDR; Bidirectional Feature Enhancement Module for Multi-Scale Pedestrian Detection

Fast and Accurate Real-Time Semantic Segmentation with Dilated Asymmetric Convolutions

Leonel Rosas-Arias, Gibran Benitez-Garcia, Jose Portillo-Portillo, Gabriel Sanchez-Perez, Keiji Yanai

Auto-TLDR; FASSD-Net: Dilated Asymmetric Pyramidal Fusion for Real-Time Semantic Segmentation

Exploring Severe Occlusion: Multi-Person 3D Pose Estimation with Gated Convolution

Renshu Gu, Gaoang Wang, Jenq-Neng Hwang

Auto-TLDR; 3D Human Pose Estimation for Multi-Human Videos with Occlusion

Light3DPose: Real-Time Multi-Person 3D Pose Estimation from Multiple Views

Alessio Elmi, Davide Mazzini, Pietro Tortella

Auto-TLDR; 3D Pose Estimation of Multiple People from a Few Calibrated Camera Views Using Deep Learning

Stratified Multi-Task Learning for Robust Spotting of Scene Texts

Kinjal Dasgupta, Sudip Das, Ujjwal Bhattacharya

Auto-TLDR; Feature Representation Block for Multi-task Learning of Scene Text

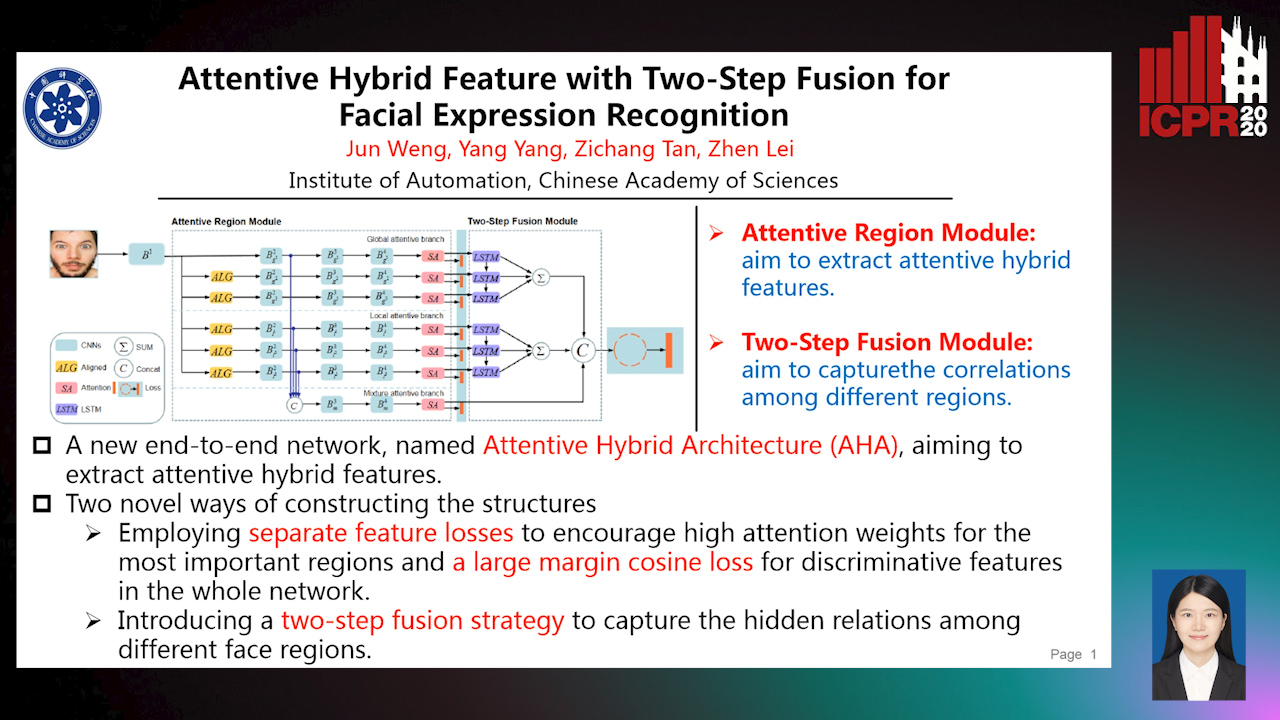

Attentive Hybrid Feature Based a Two-Step Fusion for Facial Expression Recognition

Jun Weng, Yang Yang, Zichang Tan, Zhen Lei

Auto-TLDR; Attentive Hybrid Architecture for Facial Expression Recognition

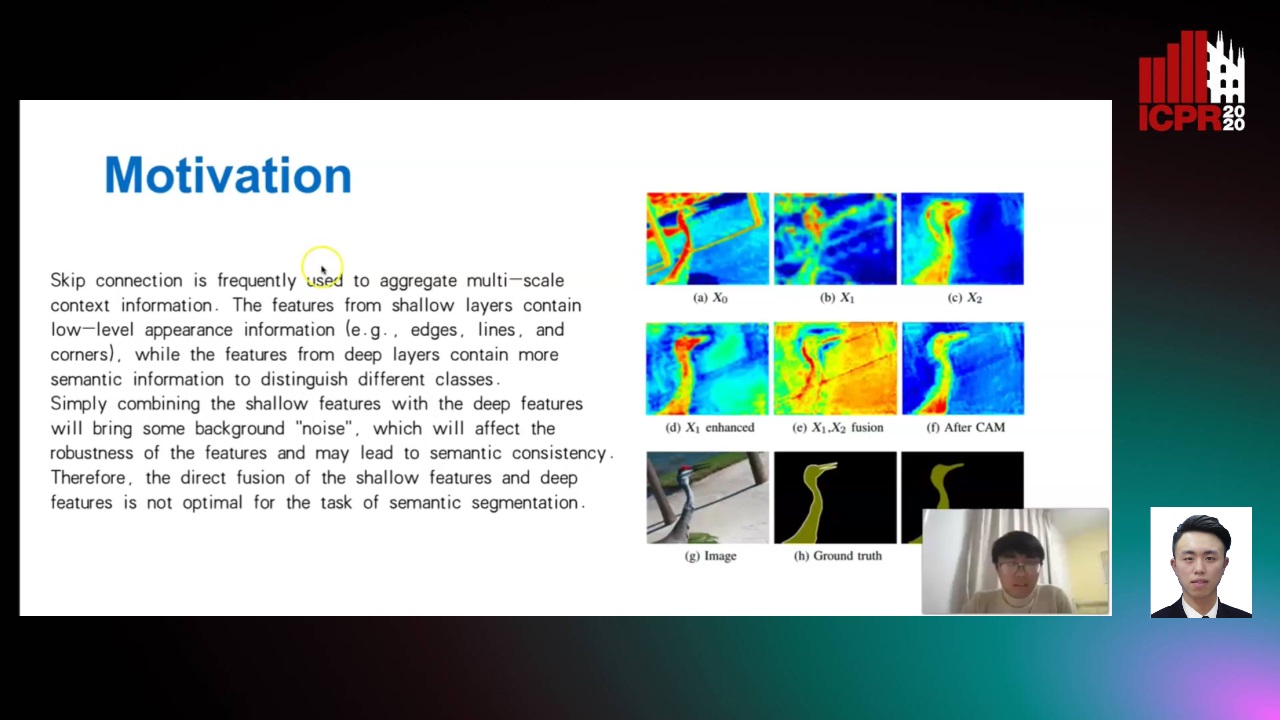

Boundary-Aware Graph Convolution for Semantic Segmentation

Hanzhe Hu, Jinshi Cui, Hongbin Zha

Auto-TLDR; Boundary-Aware Graph Convolution for Semantic Segmentation

Enhanced Feature Pyramid Network for Semantic Segmentation

Mucong Ye, Ouyang Jinpeng, Ge Chen, Jing Zhang, Xiaogang Yu

Auto-TLDR; EFPN: Enhanced Feature Pyramid Network for Semantic Segmentation

Dynamic Low-Light Image Enhancement for Object Detection Via End-To-End Training

Haifeng Guo, Yirui Wu, Tong Lu

Auto-TLDR; Object Detection using Low-Light Image Enhancement for End-to-End Training

Hierarchically Aggregated Residual Transformation for Single Image Super Resolution

Auto-TLDR; HARTnet: Hierarchically Aggregated Residual Transformation for Multi-Scale Super-resolution

Dynamic Guided Network for Monocular Depth Estimation

Xiaoxia Xing, Yinghao Cai, Yiping Yang, Dayong Wen

Auto-TLDR; DGNet: Dynamic Guidance Upsampling for Self-attention-Decoding for Monocular Depth Estimation

Triplet-Path Dilated Network for Detection and Segmentation of General Pathological Images

Jiaqi Luo, Zhicheng Zhao, Fei Su, Limei Guo

Auto-TLDR; Triplet-path Network for One-Stage Object Detection and Segmentation in Pathological Images

Deep Universal Blind Image Denoising

Auto-TLDR; Image Denoising with Deep Convolutional Neural Networks

Multi-Order Feature Statistical Model for Fine-Grained Visual Categorization

Qingtao Wang, Ke Zhang, Shaoli Huang, Lianbo Zhang, Jin Fan

Auto-TLDR; Multi-Order Feature Statistical Method for Fine-Grained Visual Categorization

A Grid-Based Representation for Human Action Recognition

Soufiane Lamghari, Guillaume-Alexandre Bilodeau, Nicolas Saunier

Auto-TLDR; GRAR: Grid-based Representation for Action Recognition in Videos
