Real-Time Monocular Depth Estimation with Extremely Light-Weight Neural Network

Mian Jhong Chiu,

Wei-Chen Chiu,

Hua-Tsung Chen,

Jen-Hui Chuang

Auto-TLDR; Real-Time Light-Weight Depth Prediction for Obstacle Avoidance and Environment Sensing with Deep Learning-based CNN

Similar papers

Extending Single Beam Lidar to Full Resolution by Fusing with Single Image Depth Estimation

Yawen Lu, Yuxing Wang, Devarth Parikh, Guoyu Lu

Auto-TLDR; Self-supervised LIDAR for Low-Cost Depth Estimation

Delivering Meaningful Representation for Monocular Depth Estimation

Doyeon Kim, Donggyu Joo, Junmo Kim

Auto-TLDR; Monocular Depth Estimation by Bridging the Context between Encoding and Decoding

Multi-Scale Residual Pyramid Attention Network for Monocular Depth Estimation

Jing Liu, Xiaona Zhang, Zhaoxin Li, Tianlu Mao

Auto-TLDR; Multi-scale Residual Pyramid Attention Network for Monocular Depth Estimation

FastCompletion: A Cascade Network with Multiscale Group-Fused Inputs for Real-Time Depth Completion

Ang Li, Zejian Yuan, Yonggen Ling, Wanchao Chi, Shenghao Zhang, Chong Zhang

Auto-TLDR; Efficient Depth Completion with Clustered Hourglass Networks

Dynamic Guided Network for Monocular Depth Estimation

Xiaoxia Xing, Yinghao Cai, Yiping Yang, Dayong Wen

Auto-TLDR; DGNet: Dynamic Guidance Upsampling for Self-attention-Decoding for Monocular Depth Estimation

Ordinal Depth Classification Using Region-Based Self-Attention

Minh Hieu Phan, Son Lam Phung, Abdesselam Bouzerdoum

Auto-TLDR; Region-based Self-Attention for Multi-scale Depth Estimation from a Single 2D Image

DEN: Disentangling and Exchanging Network for Depth Completion

You-Feng Wu, Vu-Hoang Tran, Ting-Wei Chang, Wei-Chen Chiu, Ching-Chun Huang

Auto-TLDR; Disentangling and Exchanging Network for Depth Completion

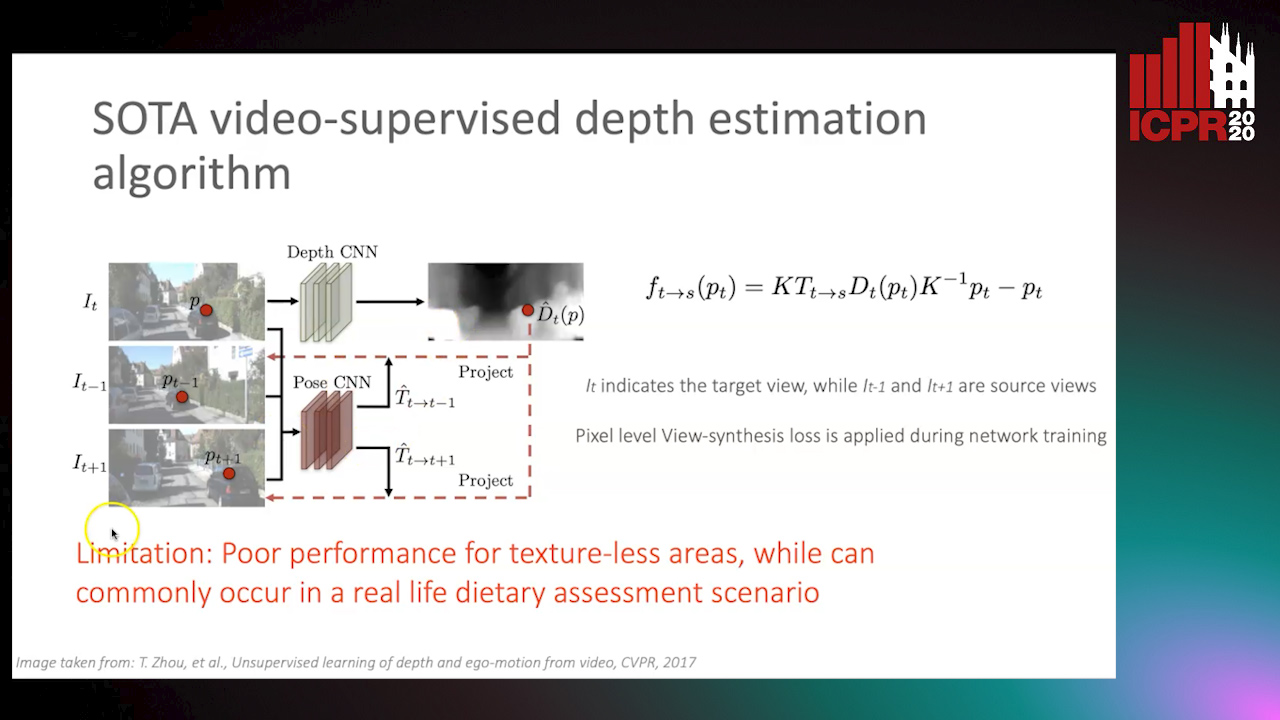

Partially Supervised Multi-Task Network for Single-View Dietary Assessment

Ya Lu, Thomai Stathopoulou, Stavroula Mougiakakou

Auto-TLDR; Food Volume Estimation from a Single Food Image via Geometric Understanding and Semantic Prediction

Learning Stereo Matchability in Disparity Regression Networks

Jingyang Zhang, Yao Yao, Zixin Luo, Shiwei Li, Tianwei Shen, Tian Fang, Long Quan

Auto-TLDR; Deep Stereo Matchability for Weakly Matchable Regions

P2D: A Self-Supervised Method for Depth Estimation from Polarimetry

Marc Blanchon, Desire Sidibe, Olivier Morel, Ralph Seulin, Daniel Braun, Fabrice Meriaudeau

Auto-TLDR; Polarimetric Regularization for Monocular Depth Estimation

Movement-Induced Priors for Deep Stereo

Yuxin Hou, Muhammad Kamran Janjua, Juho Kannala, Arno Solin

Auto-TLDR; Fusing Stereo Disparity Estimation with Movement-induced Prior Information

Fast and Efficient Neural Network for Light Field Disparity Estimation

Auto-TLDR; Improving Efficient Light Field Disparity Estimation Using Deep Neural Networks

NetCalib: A Novel Approach for LiDAR-Camera Auto-Calibration Based on Deep Learning

Shan Wu, Amnir Hadachi, Damien Vivet, Yadu Prabhakar

Auto-TLDR; Automatic Calibration of LiDAR and Cameras using Deep Neural Network

Leveraging a Weakly Adversarial Paradigm for Joint Learning of Disparity and Confidence Estimation

Matteo Poggi, Fabio Tosi, Filippo Aleotti, Stefano Mattoccia

Auto-TLDR; Joint Training of Deep-Networks for Outlier Detection from Stereo Images

Attention Stereo Matching Network

Doudou Zhang, Jing Cai, Yanbing Xue, Zan Gao, Hua Zhang

Auto-TLDR; ASM-Net: Attention Stereo Matching with Disparity Refinement

Deeply-Fused Attentive Network for Stereo Matching

Zuliu Yang, Xindong Ai, Weida Yang, Yong Zhao, Qifei Dai, Fuchi Li

Auto-TLDR; DF-Net: Deep Learning-based Network for Stereo Matching

5D Light Field Synthesis from a Monocular Video

Kyuho Bae, Andre Ivan, Hajime Nagahara, In Kyu Park

Auto-TLDR; Synthesis of Light Field Video from Monocular Video using Deep Learning

FC-DCNN: A Densely Connected Neural Network for Stereo Estimation

Dominik Hirner, Friedrich Fraundorfer

Auto-TLDR; FC-DCNN: A Lightweight Network for Stereo Estimation

FastSal: A Computationally Efficient Network for Visual Saliency Prediction

Auto-TLDR; MobileNetV2: A Convolutional Neural Network for Saliency Prediction

Transitional Asymmetric Non-Local Neural Networks for Real-World Dirt Road Segmentation

Auto-TLDR; Transitional Asymmetric Non-Local Neural Networks for Semantic Segmentation on Dirt Roads

Object Detection on Monocular Images with Two-Dimensional Canonical Correlation Analysis

Auto-TLDR; Multi-Task Object Detection from Monocular Images Using Multimodal RGB and Depth Data

Towards Efficient 3D Point Cloud Scene Completion Via Novel Depth View Synthesis

Haiyan Wang, Liang Yang, Xuejian Rong, Ying-Li Tian

Auto-TLDR; 3D Point Cloud Completion with Depth View Synthesis

Suppressing Features That Contain Disparity Edge for Stereo Matching

Xindong Ai, Zuliu Yang, Weida Yang, Yong Zhao, Zhengzhong Yu, Fuchi Li

Auto-TLDR; SDE-Attention: A Novel Attention Mechanism for Stereo Matching

Edge-Aware Monocular Dense Depth Estimation with Morphology

Zhi Li, Xiaoyang Zhu, Haitao Yu, Qi Zhang, Yongshi Jiang

Auto-TLDR; Spatio-Temporally Smooth Dense Depth Maps Using Only a CPU

Fast and Accurate Real-Time Semantic Segmentation with Dilated Asymmetric Convolutions

Leonel Rosas-Arias, Gibran Benitez-Garcia, Jose Portillo-Portillo, Gabriel Sanchez-Perez, Keiji Yanai

Auto-TLDR; FASSD-Net: Dilated Asymmetric Pyramidal Fusion for Real-Time Semantic Segmentation

Hybrid Approach for 3D Head Reconstruction: Using Neural Networks and Visual Geometry

Oussema Bouafif, Bogdan Khomutenko, Mohammed Daoudi

Auto-TLDR; Recovering 3D Head Geometry from a Single Image using Deep Learning and Geometric Techniques

A Fine-Grained Dataset and Its Efficient Semantic Segmentation for Unstructured Driving Scenarios

Kai Andreas Metzger, Peter Mortimer, Hans J "Joe" Wuensche

Auto-TLDR; TAS500: A Semantic Segmentation Dataset for Autonomous Driving in Unstructured Environments

Two-Stage Adaptive Object Scene Flow Using Hybrid CNN-CRF Model

Congcong Li, Haoyu Ma, Qingmin Liao

Auto-TLDR; Adaptive object scene flow estimation using a hybrid CNN-CRF model and adaptive iteration

ResFPN: Residual Skip Connections in Multi-Resolution Feature Pyramid Networks for Accurate Dense Pixel Matching

Rishav ., René Schuster, Ramy Battrawy, Oliver Wasenmüler, Didier Stricker

Auto-TLDR; Resolution Feature Pyramid Networks for Dense Pixel Matching

Human Segmentation with Dynamic LiDAR Data

Tao Zhong, Wonjik Kim, Masayuki Tanaka, Masatoshi Okutomi

Auto-TLDR; Spatiotemporal Neural Network for Human Segmentation with Dynamic Point Clouds

Light3DPose: Real-Time Multi-Person 3D Pose Estimation from Multiple Views

Alessio Elmi, Davide Mazzini, Pietro Tortella

Auto-TLDR; 3D Pose Estimation of Multiple People from a Few Calibrated Camera Views using Deep Learning

Feature Point Matching in Cross-Spectral Images with Cycle Consistency Learning

Ryosuke Furuta, Naoaki Noguchi, Xueting Wang, Toshihiko Yamasaki

Auto-TLDR; Unsupervised Learning for General Feature Point Matching in Cross-Spectral Settings

Enhancing Deep Semantic Segmentation of RGB-D Data with Entangled Forests

Matteo Terreran, Elia Bonetto, Stefano Ghidoni

Auto-TLDR; FuseNet: A Lighter Deep Learning Model for Semantic Segmentation

Enhancing Depth Quality of Stereo Vision Using Deep Learning-Based Prior Information of the Driving Environment

Weifu Li, Vijay John, Seiichi Mita

Auto-TLDR; A Novel Post-processing Mathematical Framework for Stereo Vision

Improving Robotic Grasping on Monocular Images Via Multi-Task Learning and Positional Loss

William Prew, Toby Breckon, Magnus Bordewich, Ulrik Beierholm

Auto-TLDR; Improving grasping performance from monocular colour images in an end-to-end CNN architecture with multi-task learning

Real-Time Semantic Segmentation Via Region and Pixel Context Network

Yajun Li, Yazhou Liu, Quansen Sun

Auto-TLDR; A Dual Context Network for Real-Time Semantic Segmentation

Boosting High-Level Vision with Joint Compression Artifacts Reduction and Super-Resolution

Xiaoyu Xiang, Qian Lin, Jan Allebach

Auto-TLDR; A Context-Aware Joint CAR and SR Neural Network for High-Resolution Text Recognition and Face Detection

Yolo+FPN: 2D and 3D Fused Object Detection with an RGB-D Camera

Auto-TLDR; Yolo+FPN: Combining 2D and 3D Object Detection for Real-Time Object Detection

Encoder-Decoder Based Convolutional Neural Networks with Multi-Scale-Aware Modules for Crowd Counting

Pongpisit Thanasutives, Ken-Ichi Fukui, Masayuki Numao, Boonserm Kijsirikul

Auto-TLDR; M-SFANet and M-SegNet for Crowd Counting Using Multi-Scale Fusion Networks

PSDNet: A Balanced Architecture of Accuracy and Parameters for Semantic Segmentation

Auto-TLDR; Pyramid Pooling Module with SE1Cblock and D2SUpsample Network (PSDNet)

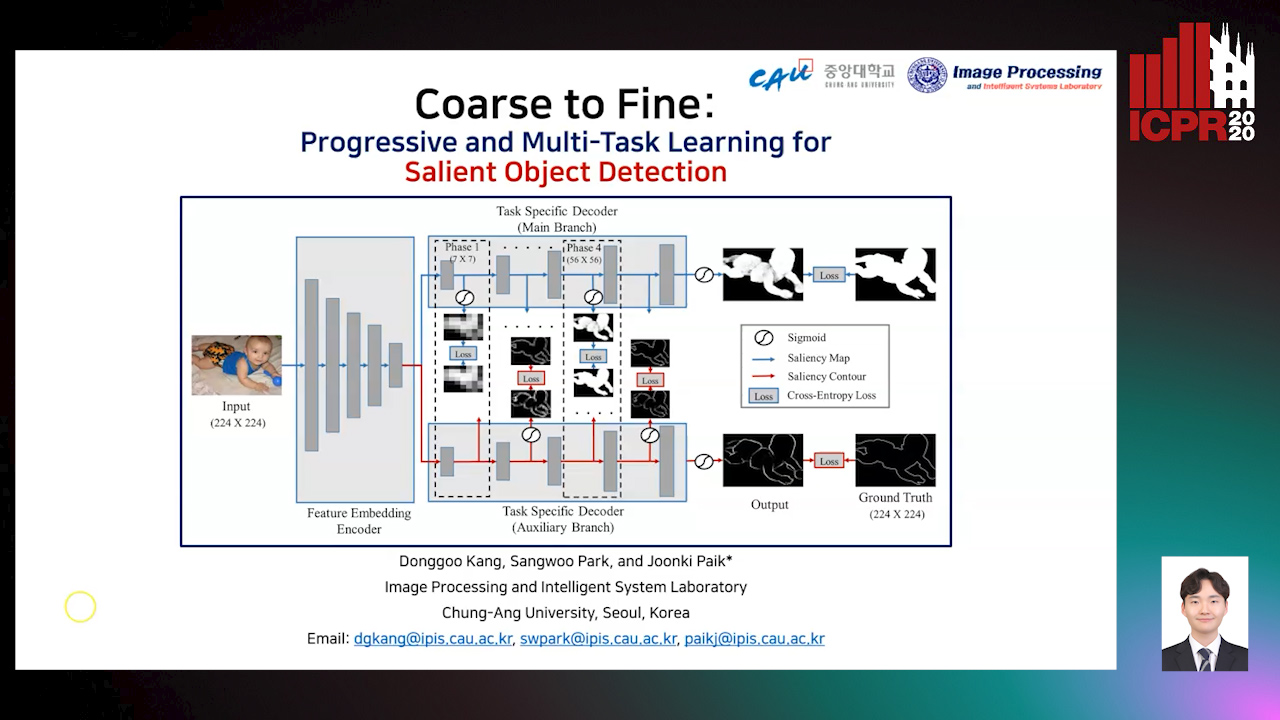

Coarse to Fine: Progressive and Multi-Task Learning for Salient Object Detection

Dong-Goo Kang, Sangwoo Park, Joonki Paik

Auto-TLDR; Progressive and multi-task learning scheme for salient object detection

Object Segmentation Tracking from Generic Video Cues

Amirhossein Kardoost, Sabine Müller, Joachim Weickert, Margret Keuper

Auto-TLDR; A Light-Weight Variational Framework for Video Object Segmentation

STaRFlow: A SpatioTemporal Recurrent Cell for Lightweight Multi-Frame Optical Flow Estimation

Pierre Godet, Alexandre Boulch, Aurélien Plyer, Guy Le Besnerais

Auto-TLDR; STaRFlow: A lightweight CNN-based algorithm for optical flow estimation

Attention Based Coupled Framework for Road and Pothole Segmentation

Shaik Masihullah, Ritu Garg, Prerana Mukherjee, Anupama Ray

Auto-TLDR; Few Shot Learning for Road and Pothole Segmentation on KITTI and IDD

Boundary-Aware Graph Convolution for Semantic Segmentation

Hanzhe Hu, Jinshi Cui, Hongbin Zha

Auto-TLDR; Boundary-Aware Graph Convolution for Semantic Segmentation

EdgeNet: Semantic Scene Completion from a Single RGB-D Image

Aloisio Dourado, Teofilo De Campos, Adrian Hilton, Hansung Kim

Auto-TLDR; Semantic Scene Completion using 3D Depth and RGB Information

Joint Face Alignment and 3D Face Reconstruction with Efficient Convolution Neural Networks

Keqiang Li, Huaiyu Wu, Xiuqin Shang, Zhen Shen, Gang Xiong, Xisong Dong, Bin Hu, Fei-Yue Wang

Auto-TLDR; Mobile-FRNet: Efficient 3D Morphable Model Alignment and 3D Face Reconstruction from a Single 2D Facial Image

The Aleatoric Uncertainty Estimation Using a Separate Formulation with Virtual Residuals

Takumi Kawashima, Qing Yu, Akari Asai, Daiki Ikami, Kiyoharu Aizawa

Auto-TLDR; Aleatoric Uncertainty Estimation in Regression Problems