Stratified Multi-Task Learning for Robust Spotting of Scene Texts

Kinjal Dasgupta,

Sudip Das,

Ujjwal Bhattacharya

Auto-TLDR; Feature Representation Block for Multi-task Learning of Scene Text

Similar papers

DUET: Detection Utilizing Enhancement for Text in Scanned or Captured Documents

Eun-Soo Jung, Hyeonggwan Son, Kyusam Oh, Yongkeun Yun, Soonhwan Kwon, Min Soo Kim

Auto-TLDR; Text Detection for Document Images Using Synthetic and Real Data

Gaussian Constrained Attention Network for Scene Text Recognition

Zhi Qiao, Xugong Qin, Yu Zhou, Fei Yang, Weiping Wang

Auto-TLDR; Gaussian Constrained Attention Network for Scene Text Recognition

Text Recognition - Real World Data and Where to Find Them

Klára Janoušková, Lluis Gomez, Dimosthenis Karatzas, Jiri Matas

Auto-TLDR; Exploiting Weakly Annotated Images for Text Extraction

Weakly Supervised Attention Rectification for Scene Text Recognition

Chengyu Gu, Shilin Wang, Yiwei Zhu, Zheng Huang, Kai Chen

Auto-TLDR; An auxiliary supervision branch for attention-based scene text recognition

Scene Text Detection with Selected Anchors

Anna Zhu, Hang Du, Shengwu Xiong

Auto-TLDR; AS-RPN: Anchor Selection-based Region Proposal Network for Scene Text Detection

Recognizing Multiple Text Sequences from an Image by Pure End-To-End Learning

Zhenlong Xu, Shuigeng Zhou, Fan Bai, Cheng Zhanzhan, Yi Niu, Shiliang Pu

Auto-TLDR; Pure End-to-End Learning for Multiple Text Sequences Recognition from Images

ReADS: A Rectified Attentional Double Supervised Network for Scene Text Recognition

Qi Song, Qianyi Jiang, Xiaolin Wei, Nan Li, Rui Zhang

Auto-TLDR; ReADS: Rectified Attentional Double Supervised Network for General Scene Text Recognition

MEAN: A Multi-Element Attention Based Network for Scene Text Recognition

Ruijie Yan, Liangrui Peng, Shanyu Xiao, Gang Yao, Jaesik Min

Auto-TLDR; Multi-element Attention Network for Scene Text Recognition

Text Recognition in Real Scenarios with a Few Labeled Samples

Jinghuang Lin, Cheng Zhanzhan, Fan Bai, Yi Niu, Shiliang Pu, Shuigeng Zhou

Auto-TLDR; Few-shot Adversarial Sequence Domain Adaptation for Scene Text Recognition

TCATD: Text Contour Attention for Scene Text Detection

Ziling Hu, Wu Xingjiao, Jing Yang

Auto-TLDR; Text Contour Attention Text Detector

Feature Embedding Based Text Instance Grouping for Largely Spaced and Occluded Text Detection

Pan Gao, Qi Wan, Renwu Gao, Linlin Shen

Auto-TLDR; Text Instance Embedding Based Feature Embeddings for Multiple Text Instance Grouping

A Multi-Head Self-Relation Network for Scene Text Recognition

Zhou Junwei, Hongchao Gao, Jiao Dai, Dongqin Liu, Jizhong Han

Auto-TLDR; Multi-head Self-relation Network for Scene Text Recognition

Self-Training for Domain Adaptive Scene Text Detection

Yudi Chen, Wei Wang, Yu Zhou, Fei Yang, Dongbao Yang, Weiping Wang

Auto-TLDR; A self-training framework for image-based scene text detection

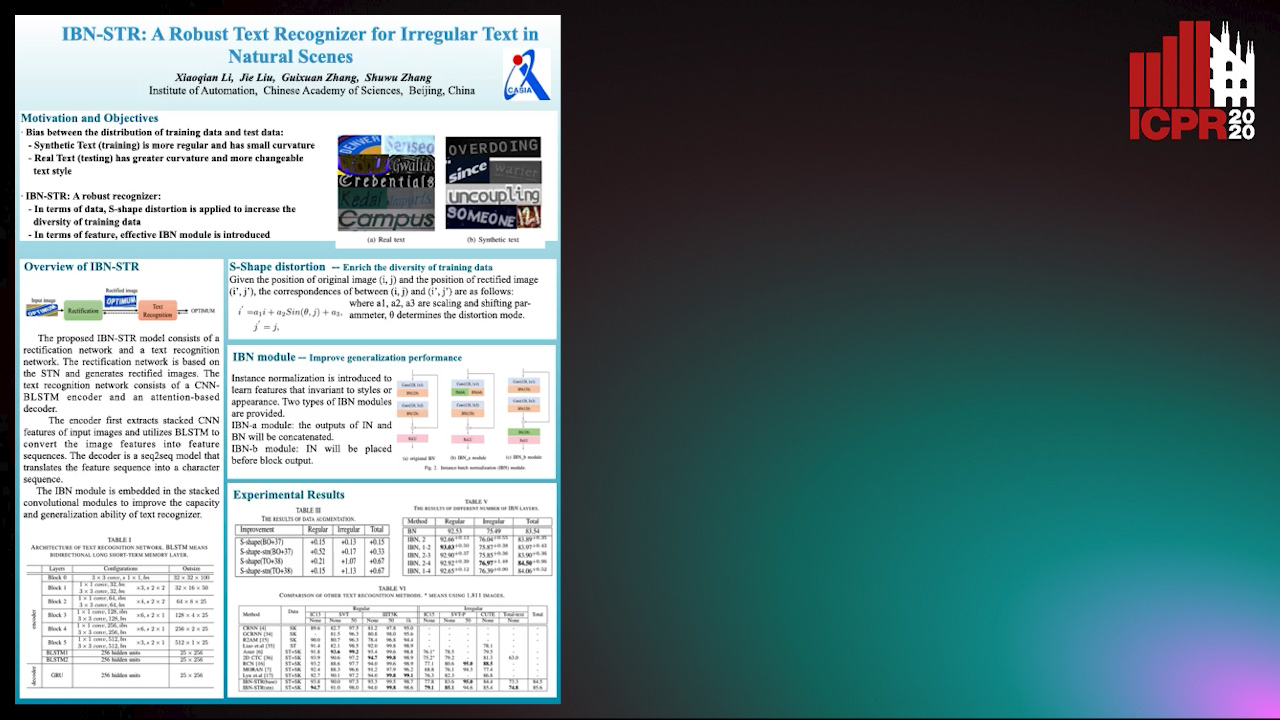

IBN-STR: A Robust Text Recognizer for Irregular Text in Natural Scenes

Xiaoqian Li, Jie Liu, Shuwu Zhang

Auto-TLDR; IBN-STR: A Robust Text Recognition System Based on Data and Feature Representation

Cost-Effective Adversarial Attacks against Scene Text Recognition

Mingkun Yang, Haitian Zheng, Xiang Bai, Jiebo Luo

Auto-TLDR; Adversarial Attacks on Scene Text Recognition

Robust Lexicon-Free Confidence Prediction for Text Recognition

Qi Song, Qianyi Jiang, Rui Zhang, Xiaolin Wei

Auto-TLDR; Confidence Measurement for Optical Character Recognition using Single-Input Multi-Output Network

Cross-Lingual Text Image Recognition Via Multi-Task Sequence to Sequence Learning

Zhuo Chen, Fei Yin, Xu-Yao Zhang, Qing Yang, Cheng-Lin Liu

Auto-TLDR; Cross-Lingual Text Image Recognition with Multi-task Learning

An Accurate Threshold Insensitive Kernel Detector for Arbitrary Shaped Text

Xijun Qian, Yifan Liu, Yu-Bin Yang

Auto-TLDR; TIKD: threshold insensitive kernel detector for arbitrary shaped text

2D License Plate Recognition based on Automatic Perspective Rectification

Hui Xu, Zhao-Hong Guo, Da-Han Wang, Xiang-Dong Zhou, Yu Shi

Auto-TLDR; Perspective Rectification Network for License Plate Recognition

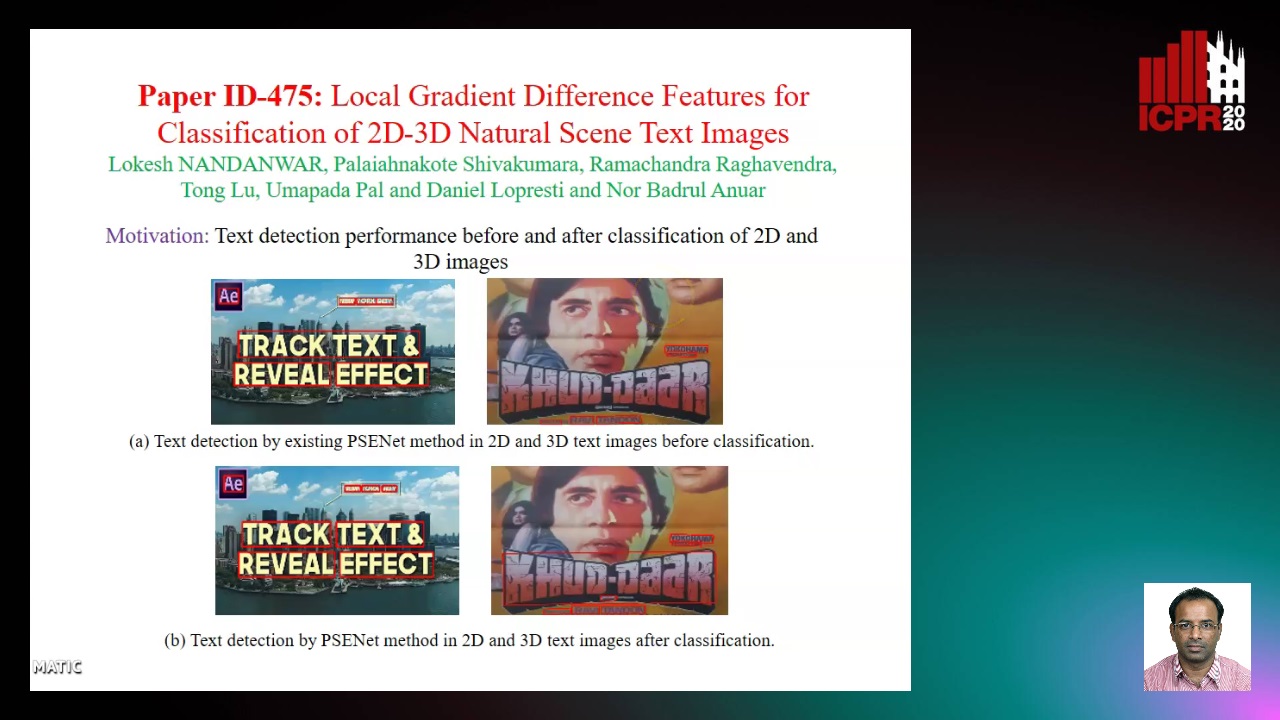

Local Gradient Difference Based Mass Features for Classification of 2D-3D Natural Scene Text Images

Lokesh Nandanwar, Shivakumara Palaiahnakote, Raghavendra Ramachandra, Tong Lu, Umapada Pal, Daniel Lopresti, Nor Badrul Anuar

Auto-TLDR; Classification of 2D and 3D Natural Scene Images Using COLD

Mutually Guided Dual-Task Network for Scene Text Detection

Mengbiao Zhao, Wei Feng, Fei Yin, Xu-Yao Zhang, Cheng-Lin Liu

Auto-TLDR; A dual-task network for word-level and line-level text detection

Dual Path Multi-Modal High-Order Features for Textual Content Based Visual Question Answering

Yanan Li, Yuetan Lin, Hongrui Zhao, Donghui Wang

Auto-TLDR; TextVQA: An End-to-End Visual Question Answering Model for Text-Based VQA

Sample-Aware Data Augmentor for Scene Text Recognition

Guanghao Meng, Tao Dai, Shudeng Wu, Bin Chen, Jian Lu, Yong Jiang, Shutao Xia

Auto-TLDR; Sample-Aware Data Augmentation for Scene Text Recognition

Transferable Adversarial Attacks for Deep Scene Text Detection

Shudeng Wu, Tao Dai, Guanghao Meng, Bin Chen, Jian Lu, Shutao Xia

Auto-TLDR; Robustness of DNN-based STD methods against Adversarial Attacks

Multi-Task Learning Based Traditional Mongolian Words Recognition

Hongxi Wei, Hui Zhang, Jing Zhang, Kexin Liu

Auto-TLDR; Multi-task Learning for Mongolian Words Recognition

RLST: A Reinforcement Learning Approach to Scene Text Detection Refinement

Xuan Peng, Zheng Huang, Kai Chen, Jie Guo, Weidong Qiu

Auto-TLDR; Saccadic Eye Movements and Peripheral Vision for Scene Text Detection using Reinforcement Learning

Watch Your Strokes: Improving Handwritten Text Recognition with Deformable Convolutions

Iulian Cojocaru, Silvia Cascianelli, Lorenzo Baraldi, Massimiliano Corsini, Rita Cucchiara

Auto-TLDR; Deformable Convolutional Neural Networks for Handwritten Text Recognition

Boosting High-Level Vision with Joint Compression Artifacts Reduction and Super-Resolution

Xiaoyu Xiang, Qian Lin, Jan Allebach

Auto-TLDR; A Context-Aware Joint CAR and SR Neural Network for High-Resolution Text Recognition and Face Detection

Fusion of Global-Local Features for Image Quality Inspection of Shipping Label

Sungho Suh, Paul Lukowicz, Yong Oh Lee

Auto-TLDR; Input Image Quality Verification for Automated Shipping Address Recognition and Verification

Attention Pyramid Module for Scene Recognition

Zhinan Qiao, Xiaohui Yuan, Chengyuan Zhuang, Abolfazl Meyarian

Auto-TLDR; Attention Pyramid Module for Multi-Scale Scene Recognition

Automated Whiteboard Lecture Video Summarization by Content Region Detection and Representation

Bhargava Urala Kota, Alexander Stone, Kenny Davila, Srirangaraj Setlur, Venu Govindaraju

Auto-TLDR; A Framework for Summarizing Whiteboard Lecture Videos Using Feature Representations of Handwritten Content Regions

TGCRBNW: A Dataset for Runner Bib Number Detection (and Recognition) in the Wild

Pablo Hernández-Carrascosa, Adrian Penate-Sanchez, Javier Lorenzo, David Freire Obregón, Modesto Castrillon

Auto-TLDR; Racing Bib Number Detection and Recognition in the Wild Using Faster R-CNN

Improving Word Recognition Using Multiple Hypotheses and Deep Embeddings

Siddhant Bansal, Praveen Krishnan, C. V. Jawahar

Auto-TLDR; EmbedNet: fuse recognition-based and recognition-free approaches for word recognition using learning-based methods

LODENet: A Holistic Approach to Offline Handwritten Chinese and Japanese Text Line Recognition

Huu Tin Hoang, Chun-Jen Peng, Hung Tran, Hung Le, Huy Hoang Nguyen

Auto-TLDR; Logographic DEComposition Encoding for Chinese and Japanese Text Line Recognition

Small Object Detection by Generative and Discriminative Learning

Yi Gu, Jie Li, Chentao Wu, Weijia Jia, Jianping Chen

Auto-TLDR; Generative and Discriminative Learning for Small Object Detection

Detecting Objects with High Object Region Percentage

Fen Fang, Qianli Xu, Liyuan Li, Ying Gu, Joo-Hwee Lim

Auto-TLDR; Faster R-CNN for High-ORP Object Detection

CDeC-Net: Composite Deformable Cascade Network for Table Detection in Document Images

Madhav Agarwal, Ajoy Mondal, C. V. Jawahar

Auto-TLDR; CDeC-Net: An End-to-End Trainable Deep Network for Detecting Tables in Document Images

A Fast and Accurate Object Detector for Handwritten Digit String Recognition

Jun Guo, Wenjing Wei, Yifeng Ma, Cong Peng

Auto-TLDR; ChipNet: An anchor-free object detector for handwritten digit string recognition

Encoder-Decoder Based Convolutional Neural Networks with Multi-Scale-Aware Modules for Crowd Counting

Pongpisit Thanasutives, Ken-Ichi Fukui, Masayuki Numao, Boonserm Kijsirikul

Auto-TLDR; M-SFANet and M-SegNet for Crowd Counting Using Multi-Scale Fusion Networks

Unsupervised Domain Adaptation with Multiple Domain Discriminators and Adaptive Self-Training

Teo Spadotto, Marco Toldo, Umberto Michieli, Pietro Zanuttigh

Auto-TLDR; Unsupervised Domain Adaptation for Semantic Segmentation of Urban Scenes

Relevance Detection in Cataract Surgery Videos by Spatio-Temporal Action Localization

Negin Ghamsarian, Mario Taschwer, Doris Putzgruber, Stephanie Sarny, Klaus Schoeffmann

Auto-TLDR; relevance-based retrieval in cataract surgery videos

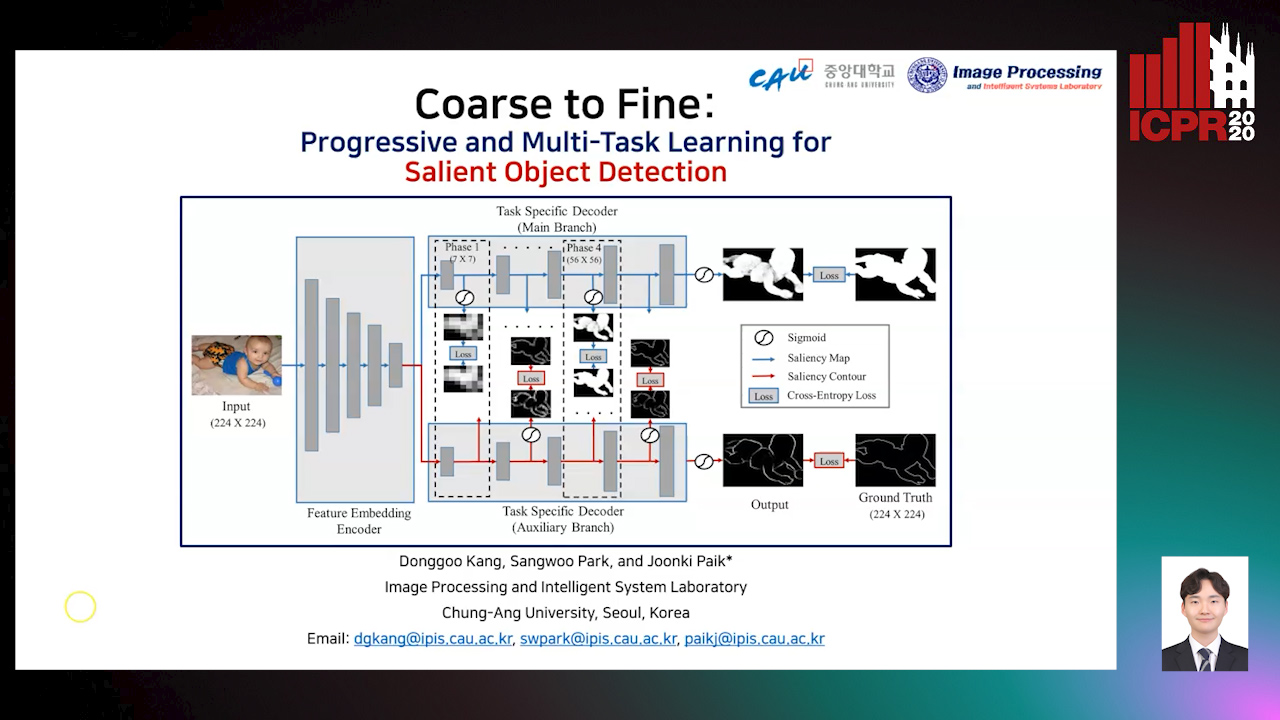

Coarse to Fine: Progressive and Multi-Task Learning for Salient Object Detection

Dong-Goo Kang, Sangwoo Park, Joonki Paik

Auto-TLDR; Progressive and multi-task learning scheme for salient object detection

Enhancing Handwritten Text Recognition with N-Gram Sequence Decomposition and Multitask Learning

Vasiliki Tassopoulou, George Retsinas, Petros Maragos

Auto-TLDR; Multi-task Learning for Handwritten Text Recognition

Construction Worker Hardhat-Wearing Detection Based on an Improved BiFPN

Chenyang Zhang, Zhiqiang Tian, Jingyi Song, Yaoyue Zheng, Bo Xu

Auto-TLDR; A One-Stage Object Detection Method for Hardhat-Wearing in Construction Site

HANet: Hybrid Attention-Aware Network for Crowd Counting

Xinxing Su, Yuchen Yuan, Xiangbo Su, Zhikang Zou, Shilei Wen, Pan Zhou

Auto-TLDR; HANet: Hybrid Attention-Aware Network for Crowd Counting with Adaptive Compensation Loss

UDBNET: Unsupervised Document Binarization Network Via Adversarial Game

Amandeep Kumar, Shuvozit Ghose, Pinaki Nath Chowdhury, Partha Pratim Roy, Umapada Pal

Auto-TLDR; Three-player Min-max Adversarial Game for Unsupervised Document Binarization

RSINet: Rotation-Scale Invariant Network for Online Visual Tracking

Yang Fang, Geunsik Jo, Chang-Hee Lee

Auto-TLDR; RSINet: Rotation-Scale Invariant Network for Adaptive Tracking

Multi-Resolution Fusion and Multi-Scale Input Priors Based Crowd Counting

Usman Sajid, Wenchi Ma, Guanghui Wang

Auto-TLDR; Multi-resolution Fusion Based End-to-End Crowd Counting in Still Images
