Better Prior Knowledge Improves Human-Pose-Based Extrinsic Camera Calibration

Olivier Moliner, Sangxia Huang, Kalle Åström

Auto-TLDR; Improving Human-pose-based Extrinsic Calibration for Multi-Camera Systems

Similar papers

Rotational Adjoint Methods for Learning-Free 3D Human Pose Estimation from IMU Data

Caterina Emilia Agelide Buizza, Yiannis Demiris

Auto-TLDR; Learning-free 3D Human Pose Estimation from Inertial Measurement Unit Data

Camera Calibration Using Parallel Line Segments

Auto-TLDR; Closed-Form Calibration of Surveillance Cameras using Parallel 3D Line Segment Projections

Calibration and Absolute Pose Estimation of Trinocular Linear Camera Array for Smart City Applications

Martin Ahrnbom, Mikael Nilsson, Håkan Ardö, Kalle Åström, Oksana Yastremska-Kravchenko, Aliaksei Laureshyn

Auto-TLDR; Trinocular Linear Camera Array Calibration for Traffic Surveillance Applications

Exploring Severe Occlusion: Multi-Person 3D Pose Estimation with Gated Convolution

Renshu Gu, Gaoang Wang, Jenq-Neng Hwang

Auto-TLDR; 3D Human Pose Estimation for Multi-Human Videos with Occlusion

Light3DPose: Real-Time Multi-Person 3D Pose Estimation from Multiple Views

Alessio Elmi, Davide Mazzini, Pietro Tortella

Auto-TLDR; 3D Pose Estimation of Multiple People from a Few Calibrated Camera Views using Deep Learning

RefiNet: 3D Human Pose Refinement with Depth Maps

Andrea D'Eusanio, Stefano Pini, Guido Borghi, Roberto Vezzani, Rita Cucchiara

Auto-TLDR; RefiNet: A Multi-stage Framework for 3D Human Pose Estimation

Minimal Solvers for Indoor UAV Positioning

Marcus Valtonen Örnhag, Patrik Persson, Mårten Wadenbäck, Kalle Åström, Anders Heyden

Auto-TLDR; Relative Pose Solvers for Visual Indoor UAV Navigation

Occlusion-Tolerant and Personalized 3D Human Pose Estimation in RGB Images

Auto-TLDR; Real-Time 3D Human Pose Estimation in BVH using Inverse Kinematics Solver and Neural Networks

AV-SLAM: Autonomous Vehicle SLAM with Gravity Direction Initialization

Kaan Yilmaz, Baris Suslu, Sohini Roychowdhury, L. Srikar Muppirisetty

Auto-TLDR; VI-SLAM with AGI: A combination of three SLAM algorithms for autonomous vehicles

RISEdb: A Novel Indoor Localization Dataset

Carlos Sanchez Belenguer, Erik Wolfart, Álvaro Casado Coscollá, Vitor Sequeira

Auto-TLDR; Indoor Localization Using LiDAR SLAM and Smartphones: A Benchmarking Dataset

Orthographic Projection Linear Regression for Single Image 3D Human Pose Estimation

Yahui Zhang, Shaodi You, Theo Gevers

Auto-TLDR; A Deep Neural Network for 3D Human Pose Estimation from a Single 2D Image in the Wild

A Two-Step Approach to Lidar-Camera Calibration

Yingna Su, Yaqing Ding, Jian Yang, Hui Kong

Auto-TLDR; Closed-Form Calibration of Lidar-camera System for Ego-motion Estimation and Scene Understanding

A Multi-Task Neural Network for Action Recognition with 3D Key-Points

Rongxiao Tang, Wang Luyang, Zhenhua Guo

Auto-TLDR; Multi-task Neural Network for Action Recognition and 3D Human Pose Estimation

Unsupervised 3D Human Pose Estimation in Multi-view-multi-pose Video

Cheng Sun, Diego Thomas, Hiroshi Kawasaki

Auto-TLDR; Unsupervised 3D Human Pose Estimation from 2D Videos Using Generative Adversarial Network

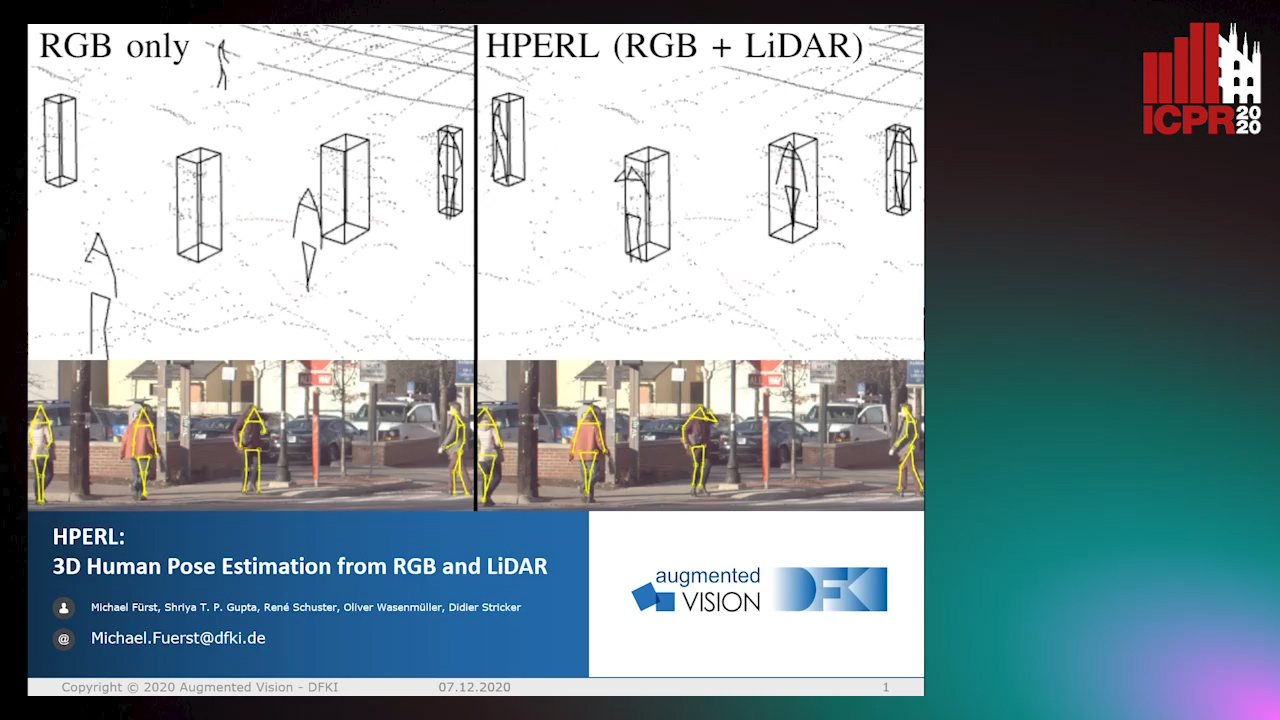

HPERL: 3D Human Pose Estimation from RGB and LiDAR

Michael Fürst, Shriya T.P. Gupta, René Schuster, Oliver Wasenmüler, Didier Stricker

Auto-TLDR; 3D Human Pose Estimation Using RGB and LiDAR with a Weakly-Supervised Approach

What and How? Jointly Forecasting Human Action and Pose

Yanjun Zhu, Yanxia Zhang, Qiong Liu, Andreas Girgensohn

Auto-TLDR; Forecasting Human Actions and Motion Trajectories with Joint Action Classification and Pose Regression

Benchmarking Cameras for OpenVSLAM Indoors

Kevin Chappellet, Guillaume Caron, Fumio Kanehiro, Ken Sakurada, Abderrahmane Kheddar

Auto-TLDR; OpenVSLAM: Benchmarking Camera Types for Visual Simultaneous Localization and Mapping

Total Estimation from RGB Video: On-Line Camera Self-Calibration, Non-Rigid Shape and Motion

Auto-TLDR; Joint Auto-Calibration, Pose and 3D Reconstruction of a Non-rigid Object from an uncalibrated RGB Image Sequence

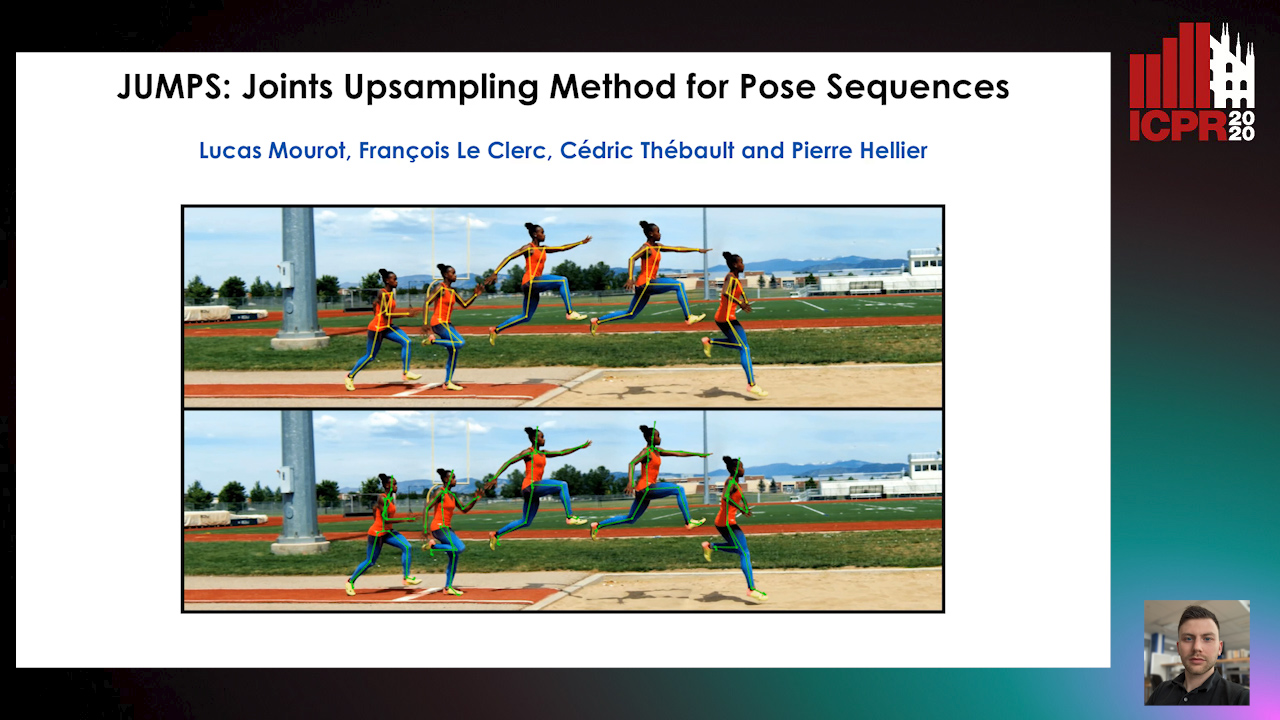

JUMPS: Joints Upsampling Method for Pose Sequences

Lucas Mourot, Francois Le Clerc, Cédric Thébault, Pierre Hellier

Auto-TLDR; JUMPS: Increasing the Number of Joints in 2D Pose Estimation and Recovering Occluded or Missing Joints

Extending Single Beam Lidar to Full Resolution by Fusing with Single Image Depth Estimation

Yawen Lu, Yuxing Wang, Devarth Parikh, Guoyu Lu

Auto-TLDR; Self-supervised LIDAR for Low-Cost Depth Estimation

A Grid-Based Representation for Human Action Recognition

Soufiane Lamghari, Guillaume-Alexandre Bilodeau, Nicolas Saunier

Auto-TLDR; GRAR: Grid-based Representation for Action Recognition in Videos

NetCalib: A Novel Approach for LiDAR-Camera Auto-Calibration Based on Deep Learning

Shan Wu, Amnir Hadachi, Damien Vivet, Yadu Prabhakar

Auto-TLDR; Automatic Calibration of LiDAR and Cameras using Deep Neural Network

Learning to Implicitly Represent 3D Human Body from Multi-Scale Features and Multi-View Images

Zhongguo Li, Magnus Oskarsson, Anders Heyden

Auto-TLDR; Reconstruction of 3D human bodies from multi-view images using multi-stage end-to-end neural networks

Hybrid Approach for 3D Head Reconstruction: Using Neural Networks and Visual Geometry

Oussema Bouafif, Bogdan Khomutenko, Mohammed Daoudi

Auto-TLDR; Recovering 3D Head Geometry from a Single Image using Deep Learning and Geometric Techniques

Generic Merging of Structure from Motion Maps with a Low Memory Footprint

Gabrielle Flood, David Gillsjö, Patrik Persson, Anders Heyden, Kalle Åström

Auto-TLDR; A Low-Memory Footprint Representation for Robust Map Merge

P2D: A Self-Supervised Method for Depth Estimation from Polarimetry

Marc Blanchon, Desire Sidibe, Olivier Morel, Ralph Seulin, Daniel Braun, Fabrice Meriaudeau

Auto-TLDR; Polarimetric Regularization for Monocular Depth Estimation

On the Robustness of 3D Human Pose Estimation

Zerui Chen, Yan Huang, Liang Wang

Auto-TLDR; Robustness of 3D Human Pose Estimation Methods to Adversarial Attacks

StrongPose: Bottom-up and Strong Keypoint Heat Map Based Pose Estimation

Auto-TLDR; StrongPose: A bottom-up box-free approach for human pose estimation and action recognition

LFIR2Pose: Pose Estimation from an Extremely Low-Resolution FIR Image Sequence

Saki Iwata, Yasutomo Kawanishi, Daisuke Deguchi, Ichiro Ide, Hiroshi Murase, Tomoyoshi Aizawa

Auto-TLDR; LFIR2Pose: Human Pose Estimation from a Low-Resolution Far-InfraRed Image Sequence

Learning to Segment Dynamic Objects Using SLAM Outliers

Dupont Romain, Mohamed Tamaazousti, Hervé Le Borgne

Auto-TLDR; Automatic Segmentation of Dynamic Objects from SLAM Outliers Using Consensus Inversion

Can You Trust Your Pose? Confidence Estimation in Visual Localization

Luca Ferranti, Xiaotian Li, Jani Boutellier, Juho Kannala

Auto-TLDR; Pose Confidence Estimation in Large-Scale Environments: A Light-weight Approach to Improving Pose Estimation Pipeline

Photometric Stereo with Twin-Fisheye Cameras

Jordan Caracotte, Fabio Morbidi, El Mustapha Mouaddib

Auto-TLDR; Photometric stereo problem for low-cost 360-degree cameras

Tilting at Windmills: Data Augmentation for Deep Pose Estimation Does Not Help with Occlusions

Rafal Pytel, Osman Semih Kayhan, Jan Van Gemert

Auto-TLDR; Targeted Keypoint and Body Part Occlusion Attacks for Human Pose Estimation

Weight Estimation from an RGB-D Camera in Top-View Configuration

Marco Mameli, Marina Paolanti, Nicola Conci, Filippo Tessaro, Emanuele Frontoni, Primo Zingaretti

Auto-TLDR; Top-View Weight Estimation using Deep Neural Networks

IPT: A Dataset for Identity Preserved Tracking in Closed Domains

Thomas Heitzinger, Martin Kampel

Auto-TLDR; Identity Preserved Tracking Using Depth Data for Privacy

Extraction and Analysis of 3D Kinematic Parameters of Table Tennis Ball from a Single Camera

Jordan Calandre, Renaud Péteri, Laurent Mascarilla, Benoit Tremblais

Auto-TLDR; 3D Ball Trajectories Analysis using a Single Camera for Sport Gesture Analysis

User-Independent Gaze Estimation by Extracting Pupil Parameter and Its Mapping to the Gaze Angle

Auto-TLDR; Gaze Point Estimation using Pupil Shape for Generalization

Multi-View Object Detection Using Epipolar Constraints within Cluttered X-Ray Security Imagery

Brian Kostadinov Shalon Isaac-Medina, Chris G. Willcocks, Toby Breckon

Auto-TLDR; Exploiting Epipolar Constraints for Multi-View Object Detection in X-ray Security Images

Learning to Find Good Correspondences of Multiple Objects

Youye Xie, Yingheng Tang, Gongguo Tang, William Hoff

Auto-TLDR; Multi-Object Inliers and Outliers for Perspective-n-Point and Object Recognition

Self-Supervised Joint Encoding of Motion and Appearance for First Person Action Recognition

Mirco Planamente, Andrea Bottino, Barbara Caputo

Auto-TLDR; A Single Stream Architecture for Egocentric Action Recognition from the First-Person Point of View

Video Analytics Gait Trend Measurement for Fall Prevention and Health Monitoring

Lawrence O'Gorman, Xinyi Liu, Md Imran Sarker, Mariofanna Milanova

Auto-TLDR; Towards Health Monitoring of Gait with Deep Learning

Learning Knowledge-Rich Sequential Model for Planar Homography Estimation in Aerial Video

Auto-TLDR; Sequential Estimation of Planar Homographic Transformations over Aerial Videos

Inner Eye Canthus Localization for Human Body Temperature Screening

Claudio Ferrari, Lorenzo Berlincioni, Marco Bertini, Alberto Del Bimbo

Auto-TLDR; Automatic Localization of the Inner Eye Canthus in Thermal Face Images using 3D Morphable Face Model

Motion Segmentation with Pairwise Matches and Unknown Number of Motions

Federica Arrigoni, Tomas Pajdla, Luca Magri

Auto-TLDR; Motion Segmentation using Multi-Model Fitting and Permutation Synchronization

Adaptive Estimation of Optimal Color Transformations for Deep Convolutional Network Based Homography Estimation

Miguel A. Molina-Cabello, Jorge García-González, Rafael Marcos Luque-Baena, Karl Thurnhofer-Hemsi, Ezequiel López-Rubio

Auto-TLDR; Improving Homography Estimation from a Pair of Natural Images Using Deep Convolutional Neural Networks

Two-Stage Adaptive Object Scene Flow Using Hybrid CNN-CRF Model

Congcong Li, Haoyu Ma, Qingmin Liao

Auto-TLDR; Adaptive object scene flow estimation using a hybrid CNN-CRF model and adaptive iteration

Movement-Induced Priors for Deep Stereo

Yuxin Hou, Muhammad Kamran Janjua, Juho Kannala, Arno Solin

Auto-TLDR; Fusing Stereo Disparity Estimation with Movement-induced Prior Information

Computing Stable Resultant-Based Minimal Solvers by Hiding a Variable

Snehal Bhayani, Zuzana Kukelova, Janne Heikkilä

Auto-TLDR; Sparse Permian-Based Method for Solving Minimal Systems of Polynomial Equations