Occlusion-Tolerant and Personalized 3D Human Pose Estimation in RGB Images

Auto-TLDR; Real-Time 3D Human Pose Estimation in BVH using Inverse Kinematics Solver and Neural Networks

Similar papers

Orthographic Projection Linear Regression for Single Image 3D Human Pose Estimation

Yahui Zhang, Shaodi You, Theo Gevers

Auto-TLDR; A Deep Neural Network for 3D Human Pose Estimation from a Single 2D Image in the Wild

RefiNet: 3D Human Pose Refinement with Depth Maps

Andrea D'Eusanio, Stefano Pini, Guido Borghi, Roberto Vezzani, Rita Cucchiara

Auto-TLDR; RefiNet: A Multi-stage Framework for 3D Human Pose Estimation

Exploring Severe Occlusion: Multi-Person 3D Pose Estimation with Gated Convolution

Renshu Gu, Gaoang Wang, Jenq-Neng Hwang

Auto-TLDR; 3D Human Pose Estimation for Multi-Human Videos with Occlusion

Light3DPose: Real-Time Multi-Person 3D Pose Estimation from Multiple Views

Alessio Elmi, Davide Mazzini, Pietro Tortella

Auto-TLDR; 3D Pose Estimation of Multiple People from a Few Calibrated Camera Views using Deep Learning

Rotational Adjoint Methods for Learning-Free 3D Human Pose Estimation from IMU Data

Caterina Emilia Agelide Buizza, Yiannis Demiris

Auto-TLDR; Learning-free 3D Human Pose Estimation from Inertial Measurement Unit Data

Better Prior Knowledge Improves Human-Pose-Based Extrinsic Camera Calibration

Olivier Moliner, Sangxia Huang, Kalle Åström

Auto-TLDR; Improving Human-pose-based Extrinsic Calibration for Multi-Camera Systems

PEAN: 3D Hand Pose Estimation Adversarial Network

Linhui Sun, Yifan Zhang, Jing Lu, Jian Cheng, Hanqing Lu

Auto-TLDR; PEAN: 3D Hand Pose Estimation with Adversarial Learning Framework

Unsupervised 3D Human Pose Estimation in Multi-view-multi-pose Video

Cheng Sun, Diego Thomas, Hiroshi Kawasaki

Auto-TLDR; Unsupervised 3D Human Pose Estimation from 2D Videos Using Generative Adversarial Network

Abstract Slides Poster Similar

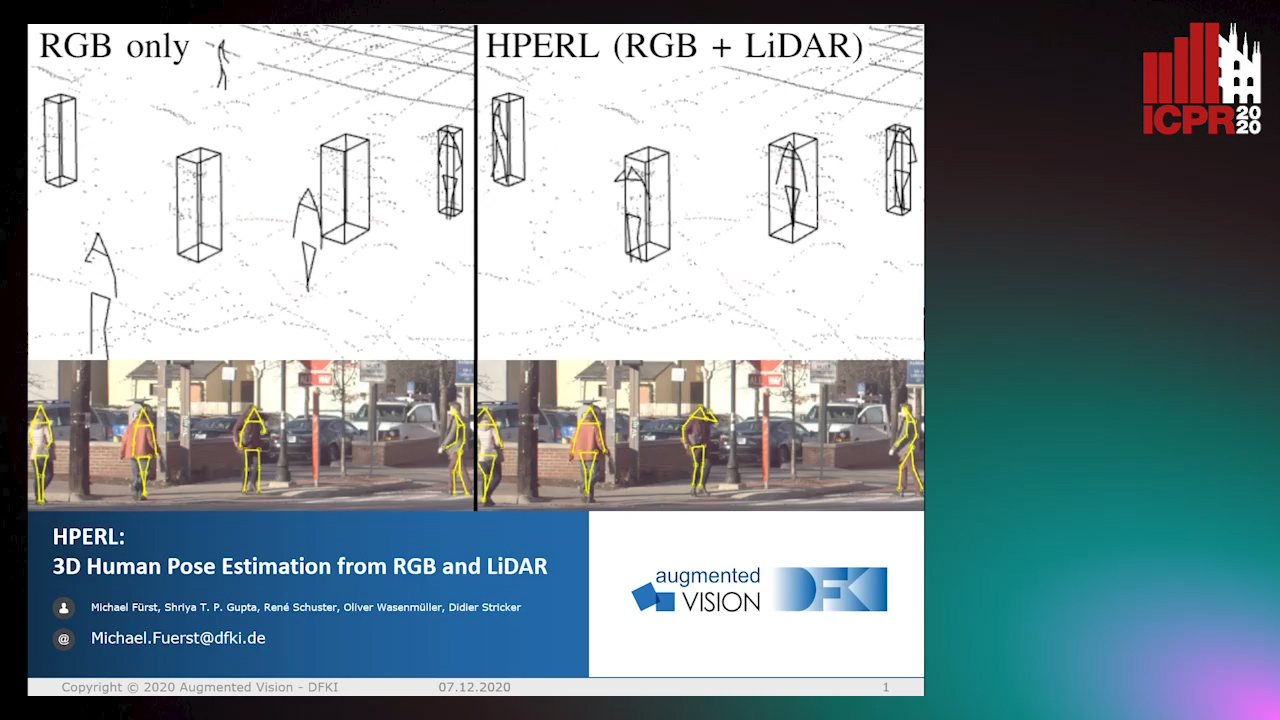

HPERL: 3D Human Pose Estimation from RGB and LiDAR

Michael Fürst, Shriya T.P. Gupta, René Schuster, Oliver Wasenmüller, Didier Stricker

Auto-TLDR; 3D Human Pose Estimation from RGB and LiDAR Using a Weakly-Supervised Approach

Abstract Slides Poster Similar

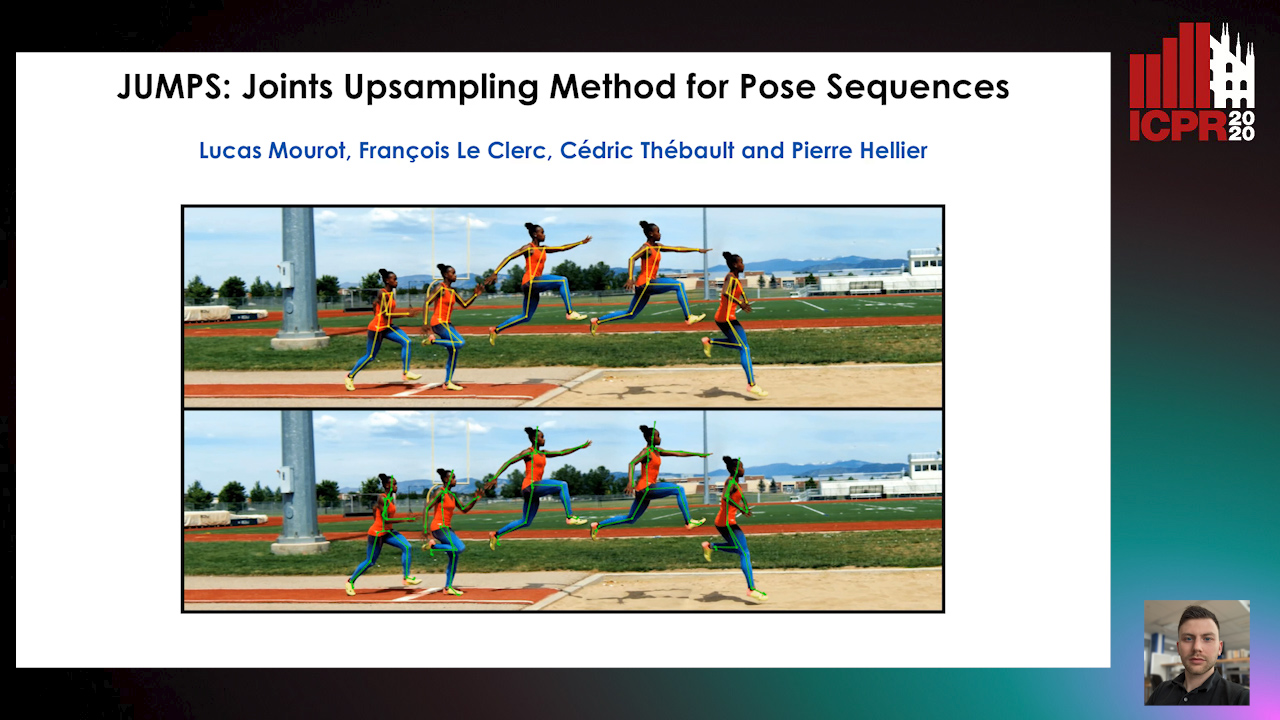

JUMPS: Joints Upsampling Method for Pose Sequences

Lucas Mourot, Francois Le Clerc, Cédric Thébault, Pierre Hellier

Auto-TLDR; JUMPS: Increasing the Number of Joints in 2D Pose Estimation and Recovering Occluded or Missing Joints

A Multi-Task Neural Network for Action Recognition with 3D Key-Points

Rongxiao Tang, Wang Luyang, Zhenhua Guo

Auto-TLDR; Multi-task Neural Network for Action Recognition and 3D Human Pose Estimation

On the Robustness of 3D Human Pose Estimation

Zerui Chen, Yan Huang, Liang Wang

Auto-TLDR; Robustness of 3D Human Pose Estimation Methods to Adversarial Attacks

A Grid-Based Representation for Human Action Recognition

Soufiane Lamghari, Guillaume-Alexandre Bilodeau, Nicolas Saunier

Auto-TLDR; GRAR: Grid-based Representation for Action Recognition in Videos

What and How? Jointly Forecasting Human Action and Pose

Yanjun Zhu, Yanxia Zhang, Qiong Liu, Andreas Girgensohn

Auto-TLDR; Forecasting Human Actions and Motion Trajectories with Joint Action Classification and Pose Regression

Silhouette Body Measurement Benchmarks

Song Yan, Johan Wirta, Joni-Kristian Kamarainen

Auto-TLDR; BODY-fit: A Realistic 3D Body Measurement Dataset for Anthropometric Measurement

Learning to Implicitly Represent 3D Human Body from Multi-Scale Features and Multi-View Images

Zhongguo Li, Magnus Oskarsson, Anders Heyden

Auto-TLDR; Reconstruction of 3D human bodies from multi-view images using multi-stage end-to-end neural networks

Space-Time Domain Tensor Neural Networks: An Application on Human Pose Classification

Konstantinos Makantasis, Athanasios Voulodimos, Anastasios Doulamis, Nikolaos Doulamis, Nikolaos Bakalos

Auto-TLDR; Tensor-Based Neural Network for Spatiotemporal Pose Classification using Three-Dimensional Skeleton Data

StrongPose: Bottom-up and Strong Keypoint Heat Map Based Pose Estimation

Auto-TLDR; StrongPose: A bottom-up box-free approach for human pose estimation and action recognition

Pose-Based Body Language Recognition for Emotion and Psychiatric Symptom Interpretation

Zhengyuan Yang, Amanda Kay, Yuncheng Li, Wendi Cross, Jiebo Luo

Auto-TLDR; Body Language Based Emotion Recognition for Psychiatric Symptoms Prediction

Hybrid Approach for 3D Head Reconstruction: Using Neural Networks and Visual Geometry

Oussema Bouafif, Bogdan Khomutenko, Mohammed Daoudi

Auto-TLDR; Recovering 3D Head Geometry from a Single Image using Deep Learning and Geometric Techniques

Attention-Oriented Action Recognition for Real-Time Human-Robot Interaction

Ziyang Song, Ziyi Yin, Zejian Yuan, Chong Zhang, Wanchao Chi, Yonggen Ling, Shenghao Zhang

Auto-TLDR; Attention-Oriented Multi-Level Network for Action Recognition in Interaction Scenes

Inner Eye Canthus Localization for Human Body Temperature Screening

Claudio Ferrari, Lorenzo Berlincioni, Marco Bertini, Alberto Del Bimbo

Auto-TLDR; Automatic Localization of the Inner Eye Canthus in Thermal Face Images using 3D Morphable Face Model

Learning to Take Directions One Step at a Time

Qiyang Hu, Adrian Wälchli, Tiziano Portenier, Matthias Zwicker, Paolo Favaro

Auto-TLDR; Generating a Sequence of Motion Strokes from a Single Image

PROPEL: Probabilistic Parametric Regression Loss for Convolutional Neural Networks

Muhammad Asad, Rilwan Basaru, S M Masudur Rahman Al Arif, Greg Slabaugh

Auto-TLDR; PRObabilistic Parametric rEgression Loss for Probabilistic Regression Using Convolutional Neural Networks

Single View Learning in Action Recognition

Gaurvi Goyal, Nicoletta Noceti, Francesca Odone

Auto-TLDR; Cross-View Action Recognition Using Domain Adaptation for Knowledge Transfer

Deep Ordinal Regression with Label Diversity

Axel Berg, Magnus Oskarsson, Mark Oconnor

Auto-TLDR; Discrete Regression via Classification for Neural Network Learning

Tilting at Windmills: Data Augmentation for Deep Pose Estimation Does Not Help with Occlusions

Rafal Pytel, Osman Semih Kayhan, Jan Van Gemert

Auto-TLDR; Targeted Keypoint and Body Part Occlusion Attacks for Human Pose Estimation

Temporal Attention-Augmented Graph Convolutional Network for Efficient Skeleton-Based Human Action Recognition

Negar Heidari, Alexandros Iosifidis

Auto-TLDR; Temporal Attention Module for Efficient Graph Convolutional Network-based Action Recognition

Weakly Supervised Body Part Segmentation with Pose Based Part Priors

Zhengyuan Yang, Yuncheng Li, Linjie Yang, Ning Zhang, Jiebo Luo

Auto-TLDR; Weakly Supervised Body Part Segmentation Using Weak Labels

Exploiting the Logits: Joint Sign Language Recognition and Spell-Correction

Christina Runkel, Stefan Dorenkamp, Hartmut Bauermeister, Michael Möller

Auto-TLDR; A Convolutional Neural Network for Spell-correction in Sign Language Videos

Subspace Clustering for Action Recognition with Covariance Representations and Temporal Pruning

Giancarlo Paoletti, Jacopo Cavazza, Cigdem Beyan, Alessio Del Bue

Auto-TLDR; Unsupervised Learning for Human Action Recognition from Skeletal Data

DeepPear: Deep Pose Estimation and Action Recognition

Wen-Jiin Tsai, You-Ying Jhuang

Auto-TLDR; Human Action Recognition from RGB Video Using 3D Human Pose and Appearance Features

Movement-Induced Priors for Deep Stereo

Yuxin Hou, Muhammad Kamran Janjua, Juho Kannala, Arno Solin

Auto-TLDR; Fusing Stereo Disparity Estimation with Movement-induced Prior Information

Abstract Slides Poster Similar

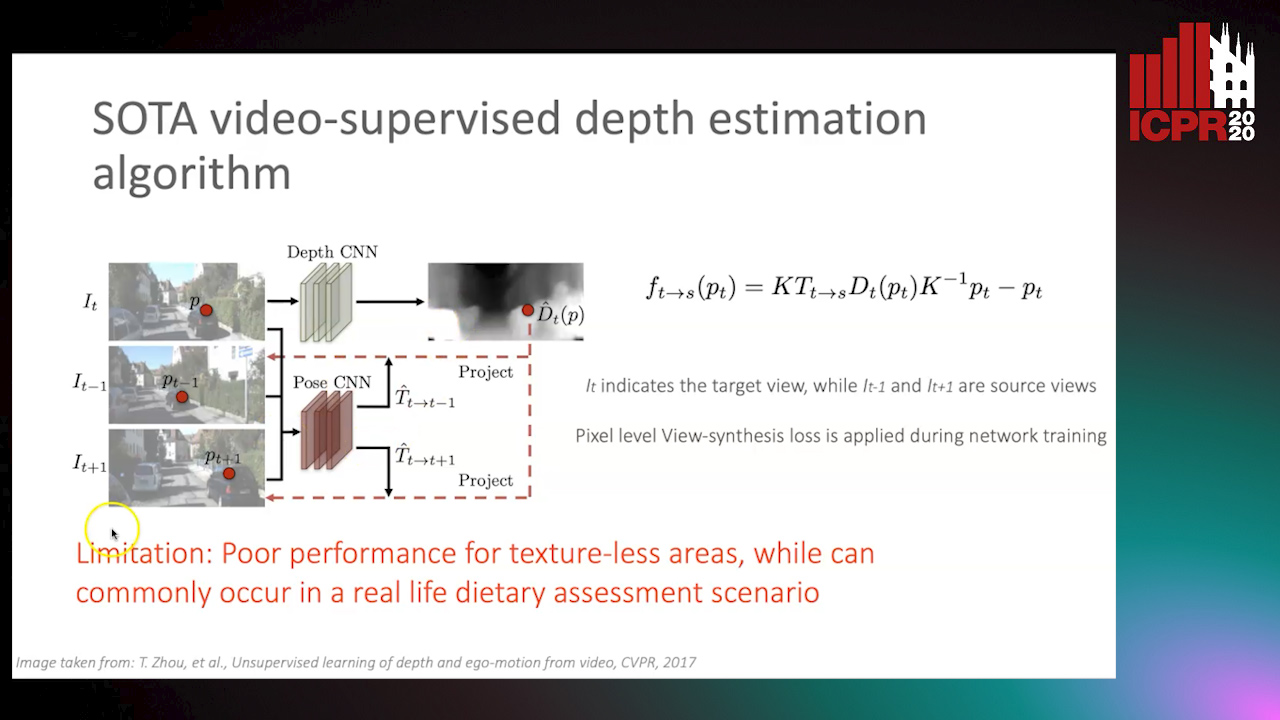

Partially Supervised Multi-Task Network for Single-View Dietary Assessment

Ya Lu, Thomai Stathopoulou, Stavroula Mougiakakou

Auto-TLDR; Food Volume Estimation from a Single Food Image via Geometric Understanding and Semantic Prediction

Shape Consistent 2D Keypoint Estimation under Domain Shift

Levi Vasconcelos, Massimiliano Mancini, Davide Boscaini, Barbara Caputo, Elisa Ricci

Auto-TLDR; Deep Adaptation for Keypoint Prediction under Domain Shift

Minimal Solvers for Indoor UAV Positioning

Marcus Valtonen Örnhag, Patrik Persson, Mårten Wadenbäck, Kalle Åström, Anders Heyden

Auto-TLDR; Relative Pose Solvers for Visual Indoor UAV Navigation

LFIR2Pose: Pose Estimation from an Extremely Low-Resolution FIR Image Sequence

Saki Iwata, Yasutomo Kawanishi, Daisuke Deguchi, Ichiro Ide, Hiroshi Murase, Tomoyoshi Aizawa

Auto-TLDR; LFIR2Pose: Human Pose Estimation from a Low-Resolution Far-InfraRed Image Sequence

P2 Net: Augmented Parallel-Pyramid Net for Attention Guided Pose Estimation

Luanxuan Hou, Jie Cao, Yuan Zhao, Haifeng Shen, Jian Tang, Ran He

Auto-TLDR; Parallel-Pyramid Net with Partial Attention for Human Pose Estimation

Simple Multi-Resolution Representation Learning for Human Pose Estimation

Trung Tran Quang, Van Giang Nguyen, Daeyoung Kim

Auto-TLDR; Multi-resolution Heatmap Learning for Human Pose Estimation

Abstract Slides Poster Similar

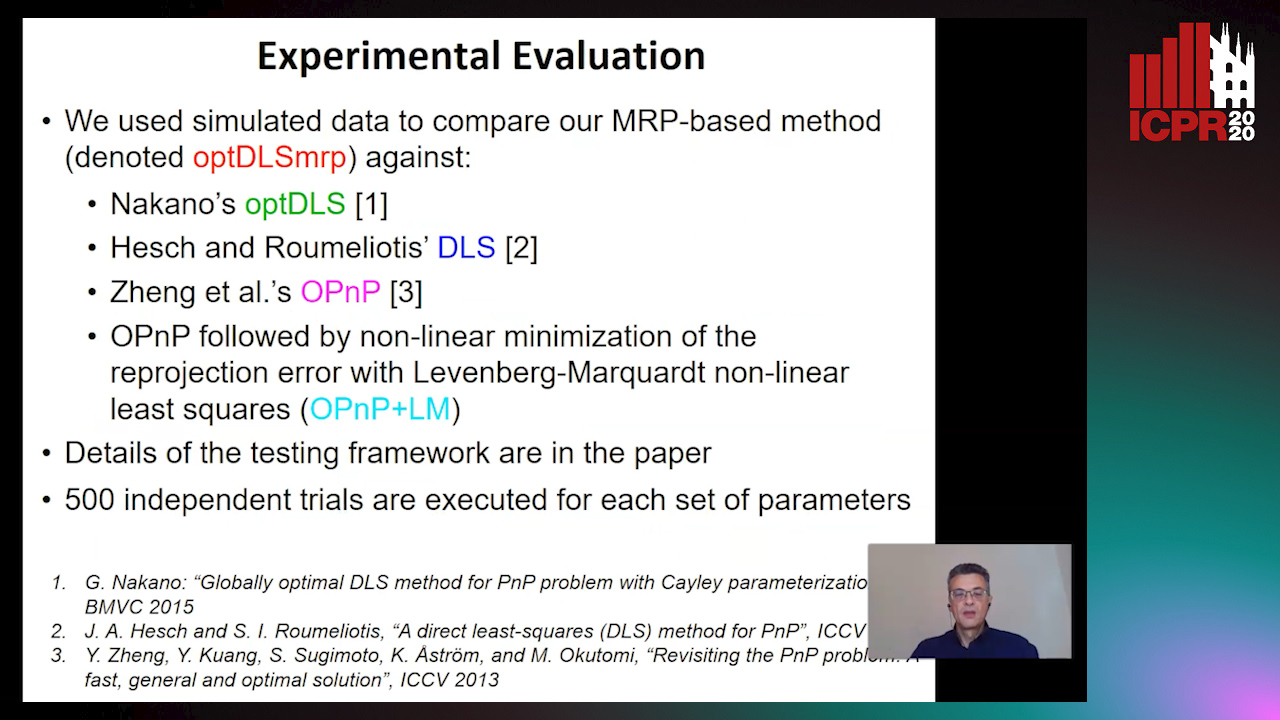

A Globally Optimal Method for the PnP Problem with MRP Rotation Parameterization

Manolis Lourakis, George Terzakis

Auto-TLDR; A Direct Least-Squares Algebraic PnP Solver with Modified Rodrigues Parameters

Can You Trust Your Pose? Confidence Estimation in Visual Localization

Luca Ferranti, Xiaotian Li, Jani Boutellier, Juho Kannala

Auto-TLDR; Pose Confidence Estimation in Large-Scale Environments: A Light-weight Approach to Improving Pose Estimation Pipeline

Real Time Fencing Move Classification and Detection at Touch Time During a Fencing Match

Cem Ekin Sunal, Chris G. Willcocks, Boguslaw Obara

Auto-TLDR; Fencing Body Move Classification and Detection Using Deep Learning

Derivation of Geometrically and Semantically Annotated UAV Datasets at Large Scales from 3D City Models

Sidi Wu, Lukas Liebel, Marco Körner

Auto-TLDR; Large-Scale Dataset of Synthetic UAV Imagery for Geometric and Semantic Annotation

Abstract Slides Poster Similar

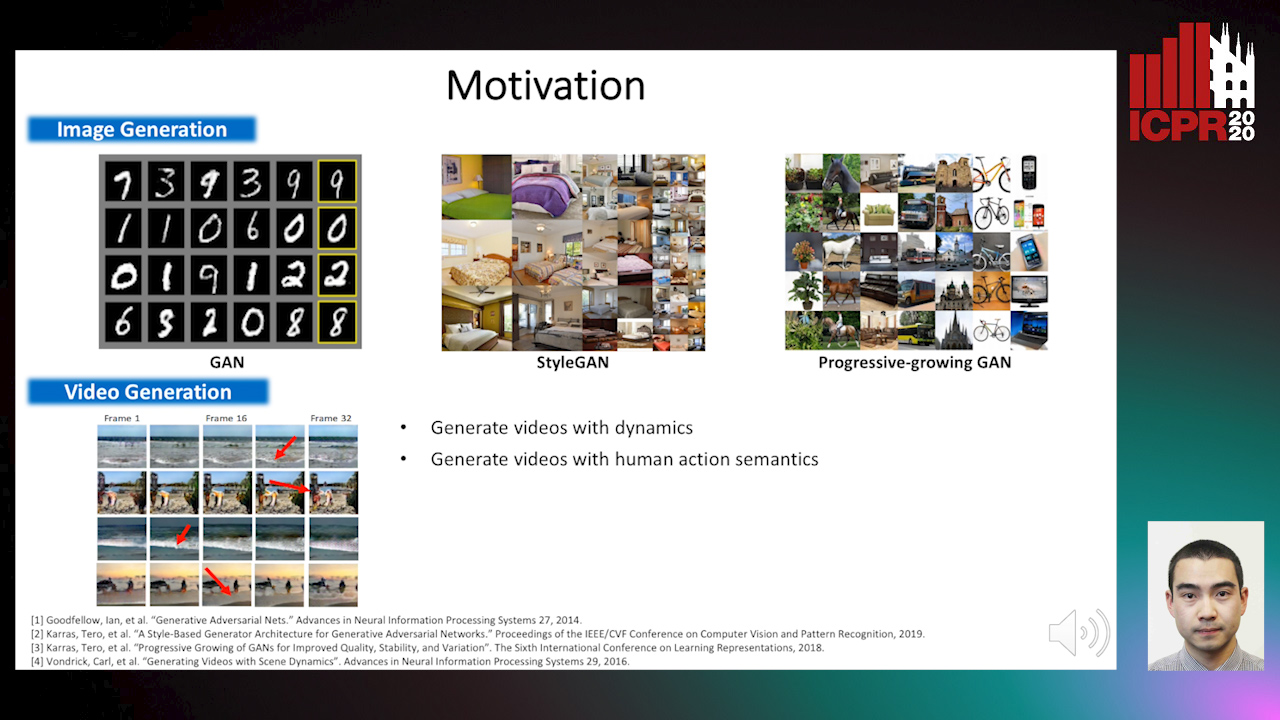

The Role of Cycle Consistency for Generating Better Human Action Videos from a Single Frame

Auto-TLDR; Generating Videos with Human Action Semantics using Cycle Constraints

Rethinking ReID: Multi-Feature Fusion Person Re-Identification Based on Orientation Constraints

Mingjing Ai, Guozhi Shan, Bo Liu, Tianyang Liu

Auto-TLDR; Person Re-identification with Orientation Constrained Network

Vertex Feature Encoding and Hierarchical Temporal Modeling in a Spatio-Temporal Graph Convolutional Network for Action Recognition

Konstantinos Papadopoulos, Enjie Ghorbel, Djamila Aouada, Bjorn Ottersten

Auto-TLDR; Spatio-Temporal Graph Convolutional Network for Skeleton-Based Action Recognition

P2D: A Self-Supervised Method for Depth Estimation from Polarimetry

Marc Blanchon, Desire Sidibe, Olivier Morel, Ralph Seulin, Daniel Braun, Fabrice Meriaudeau

Auto-TLDR; Polarimetric Regularization for Monocular Depth Estimation

Self-Supervised Detection and Pose Estimation of Logistical Objects in 3D Sensor Data

Nikolas Müller, Jonas Stenzel, Jian-Jia Chen

Auto-TLDR; A self-supervised and fully automated deep learning approach for object pose estimation using simulated 3D data
