Estimation of Clinical Tremor Using Spatio-Temporal Adversarial AutoEncoder

Li Zhang, Vidya Koesmahargyo, Isaac Galatzer-Levy

Auto-TLDR; ST-AAE: Spatio-temporal Adversarial Autoencoder for Clinical Assessment of Hand Tremor Frequency and Severity

Similar papers

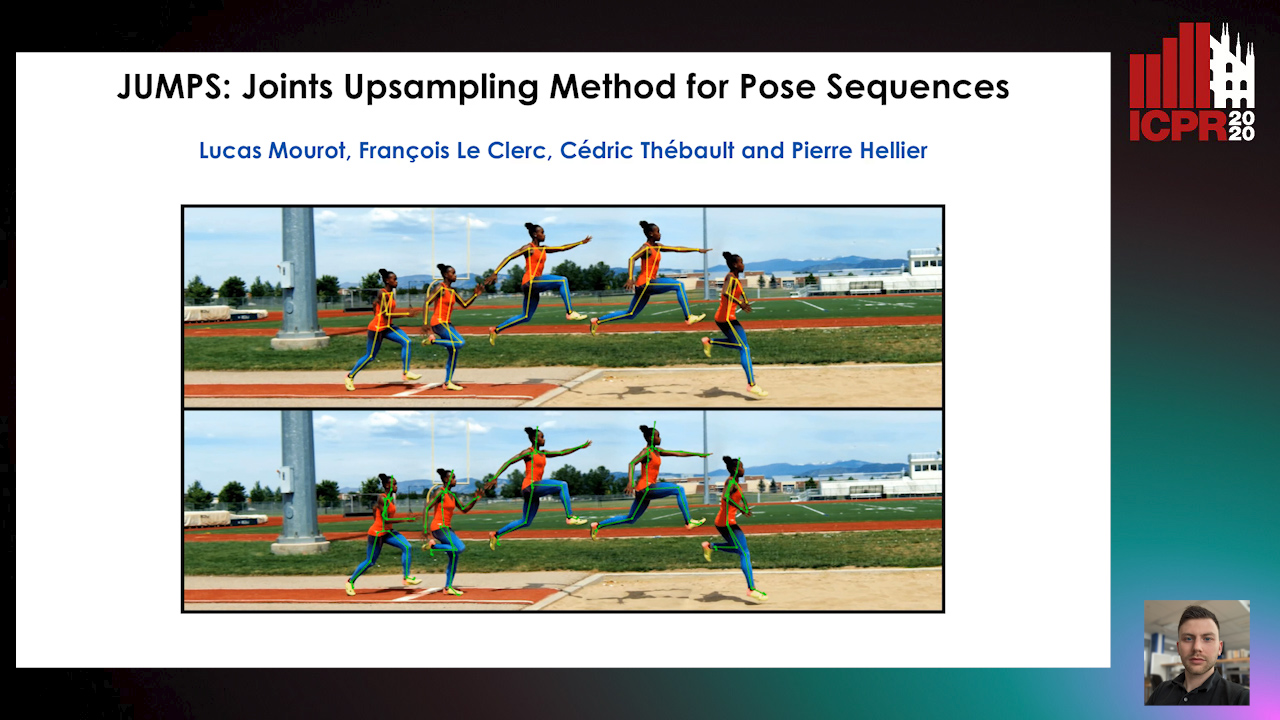

JUMPS: Joints Upsampling Method for Pose Sequences

Lucas Mourot, Francois Le Clerc, Cédric Thébault, Pierre Hellier

Auto-TLDR; JUMPS: Increasing the Number of Joints in 2D Pose Estimation and Recovering Occluded or Missing Joints

What and How? Jointly Forecasting Human Action and Pose

Yanjun Zhu, Yanxia Zhang, Qiong Liu, Andreas Girgensohn

Auto-TLDR; Forecasting Human Actions and Motion Trajectories with Joint Action Classification and Pose Regression

Self-Supervised Joint Encoding of Motion and Appearance for First Person Action Recognition

Mirco Planamente, Andrea Bottino, Barbara Caputo

Auto-TLDR; A Single Stream Architecture for Egocentric Action Recognition from the First-Person Point of View

GazeMAE: General Representations of Eye Movements Using a Micro-Macro Autoencoder

Louise Gillian C. Bautista, Prospero Naval

Auto-TLDR; Fast and Slow Eye Movement Representations for Sentiment-agnostic Eye Tracking

Pose-Based Body Language Recognition for Emotion and Psychiatric Symptom Interpretation

Zhengyuan Yang, Amanda Kay, Yuncheng Li, Wendi Cross, Jiebo Luo

Auto-TLDR; Body Language Based Emotion Recognition for Psychiatric Symptoms Prediction

Motion and Region Aware Adversarial Learning for Fall Detection with Thermal Imaging

Vineet Mehta, Abhinav Dhall, Sujata Pal, Shehroz Khan

Auto-TLDR; Automatic Fall Detection with Adversarial Network using Thermal Imaging Camera

TinyVIRAT: Low-Resolution Video Action Recognition

Ugur Demir, Yogesh Rawat, Mubarak Shah

Auto-TLDR; TinyVIRAT: A Progressive Generative Approach for Action Recognition in Videos

Leveraging Synthetic Subject Invariant EEG Signals for Zero Calibration BCI

Nik Khadijah Nik Aznan, Amir Atapour-Abarghouei, Stephen Bonner, Jason Connolly, Toby Breckon

Auto-TLDR; SIS-GAN: Subject Invariant SSVEP Generative Adversarial Network for Brain-Computer Interface

Video Anomaly Detection by Estimating Likelihood of Representations

Auto-TLDR; Video Anomaly Detection in the latent feature space using a deep probabilistic model

Towards Practical Compressed Video Action Recognition: A Temporal Enhanced Multi-Stream Network

Bing Li, Longteng Kong, Dongming Zhang, Xiuguo Bao, Di Huang, Yunhong Wang

Auto-TLDR; TEMSN: Temporal Enhanced Multi-Stream Network for Compressed Video Action Recognition

Activity Recognition Using First-Person-View Cameras Based on Sparse Optical Flows

Peng-Yuan Kao, Yan-Jing Lei, Chia-Hao Chang, Chu-Song Chen, Ming-Sui Lee, Yi-Ping Hung

Auto-TLDR; 3D Convolutional Neural Network for Activity Recognition with FPV Videos

Fully Convolutional Neural Networks for Raw Eye Tracking Data Segmentation, Generation, and Reconstruction

Wolfgang Fuhl, Yao Rong, Enkelejda Kasneci

Auto-TLDR; Semantic Segmentation of Eye Tracking Data with Fully Convolutional Neural Networks

Abstract Slides Poster Similar

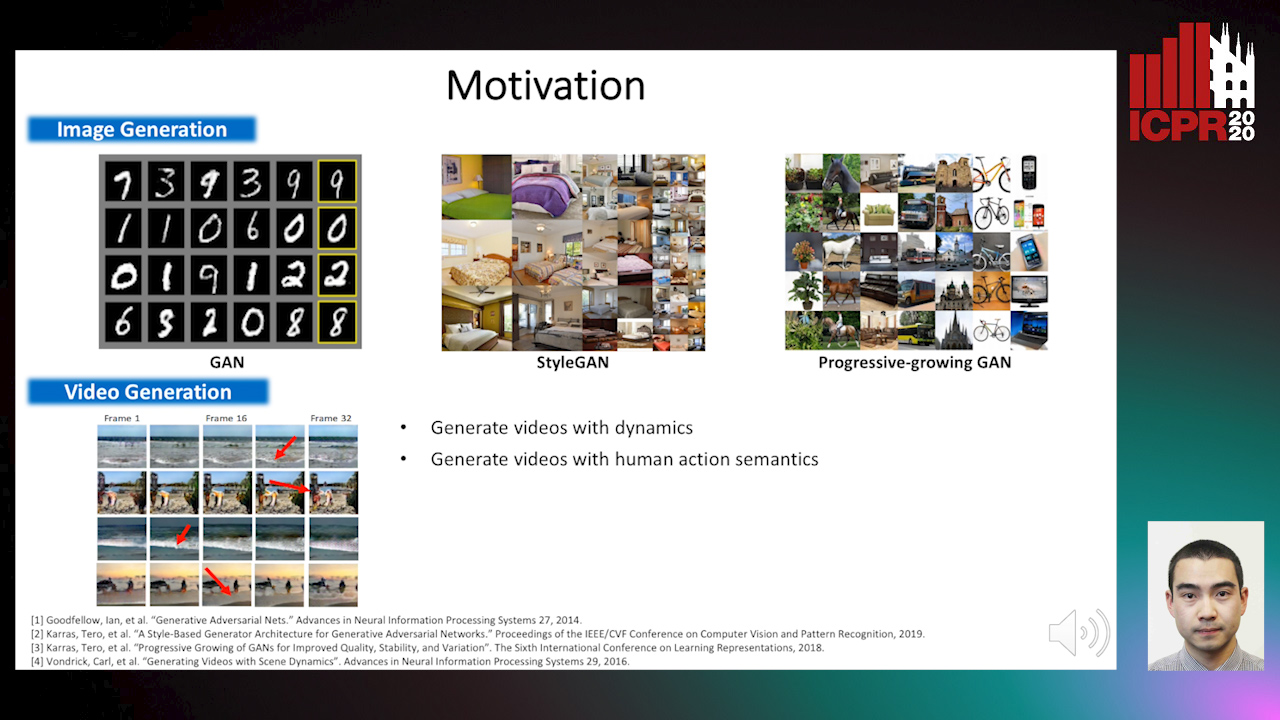

The Role of Cycle Consistency for Generating Better Human Action Videos from a Single Frame

Auto-TLDR; Generating Videos with Human Action Semantics using Cycle Constraints

IPN Hand: A Video Dataset and Benchmark for Real-Time Continuous Hand Gesture Recognition

Gibran Benitez-Garcia, Jesus Olivares-Mercado, Gabriel Sanchez-Perez, Keiji Yanai

Auto-TLDR; IPN Hand: A Benchmark Dataset for Continuous Hand Gesture Recognition

Image Sequence Based Cyclist Action Recognition Using Multi-Stream 3D Convolution

Stefan Zernetsch, Steven Schreck, Viktor Kress, Konrad Doll, Bernhard Sick

Auto-TLDR; 3D-ConvNet: A Multi-stream 3D Convolutional Neural Network for Detecting Cyclists in Real World Traffic Situations

Modeling Long-Term Interactions to Enhance Action Recognition

Alejandro Cartas, Petia Radeva, Mariella Dimiccoli

Auto-TLDR; A Hierarchical Long Short-Term Memory Network for Action Recognition in Egocentric Videos

A Joint Representation Learning and Feature Modeling Approach for One-Class Recognition

Pramuditha Perera, Vishal Patel

Auto-TLDR; Combining Generative Features and One-Class Classification for Effective One-class Recognition

RWF-2000: An Open Large Scale Video Database for Violence Detection

Ming Cheng, Kunjing Cai, Ming Li

Auto-TLDR; Flow Gated Network for Violence Detection in Surveillance Cameras

Video Semantic Segmentation Using Deep Multi-View Representation Learning

Akrem Sellami, Salvatore Tabbone

Auto-TLDR; Deep Multi-view Representation Learning for Video Object Segmentation

Adversarial Encoder-Multi-Task-Decoder for Multi-Stage Processes

Andre Mendes, Julian Togelius, Leandro Dos Santos Coelho

Auto-TLDR; Multi-Task Learning and Semi-Supervised Learning for Multi-Stage Processes

Weight Estimation from an RGB-D Camera in Top-View Configuration

Marco Mameli, Marina Paolanti, Nicola Conci, Filippo Tessaro, Emanuele Frontoni, Primo Zingaretti

Auto-TLDR; Top-View Weight Estimation using Deep Neural Networks

Single View Learning in Action Recognition

Gaurvi Goyal, Nicoletta Noceti, Francesca Odone

Auto-TLDR; Cross-View Action Recognition Using Domain Adaptation for Knowledge Transfer

Learning Dictionaries of Kinematic Primitives for Action Classification

Alessia Vignolo, Nicoletta Noceti, Alessandra Sciutti, Francesca Odone, Giulio Sandini

Auto-TLDR; Action Understanding using Visual Motion Primitives

3D Attention Mechanism for Fine-Grained Classification of Table Tennis Strokes Using a Twin Spatio-Temporal Convolutional Neural Networks

Pierre-Etienne Martin, Jenny Benois-Pineau, Renaud Péteri, Julien Morlier

Auto-TLDR; Attentional Blocks for Action Recognition in Table Tennis Strokes

VR Sickness Assessment with Perception Prior and Hybrid Temporal Features

Po-Chen Kuo, Li-Chung Chuang, Dong-Yi Lin, Ming-Sui Lee

Auto-TLDR; A novel content-based VR sickness assessment method which considers both the perception prior and hybrid temporal features

Learning Interpretable Representation for 3D Point Clouds

Feng-Guang Su, Ci-Siang Lin, Yu-Chiang Frank Wang

Auto-TLDR; Disentangling Body-type and Pose Information from 3D Point Clouds Using Adversarial Learning

CardioGAN: An Attention-Based Generative Adversarial Network for Generation of Electrocardiograms

Subhrajyoti Dasgupta, Sudip Das, Ujjwal Bhattacharya

Auto-TLDR; CardioGAN: Generative Adversarial Network for Synthetic Electrocardiogram Signals

Learning to Take Directions One Step at a Time

Qiyang Hu, Adrian Wälchli, Tiziano Portenier, Matthias Zwicker, Paolo Favaro

Auto-TLDR; Generating a Sequence of Motion Strokes from a Single Image

Mutual Information Based Method for Unsupervised Disentanglement of Video Representation

Aditya Sreekar P, Ujjwal Tiwari, Anoop Namboodiri

Auto-TLDR; MIPAE: Mutual Information Predictive Auto-Encoder for Video Prediction

Reducing the Variance of Variational Estimates of Mutual Information by Limiting the Critic's Hypothesis Space to RKHS

Aditya Sreekar P, Ujjwal Tiwari, Anoop Namboodiri

Auto-TLDR; Mutual Information Estimation from Variational Lower Bounds Using a Critic's Hypothesis Space

Image Representation Learning by Transformation Regression

Xifeng Guo, Jiyuan Liu, Sihang Zhou, En Zhu, Shihao Dong

Auto-TLDR; Self-supervised Image Representation Learning using Continuous Parameter Prediction

Combining GANs and AutoEncoders for Efficient Anomaly Detection

Fabio Carrara, Giuseppe Amato, Luca Brombin, Fabrizio Falchi, Claudio Gennaro

Auto-TLDR; CBIGAN: Anomaly Detection in Images with Consistency Constrained BiGAN

Early Wildfire Smoke Detection in Videos

Taanya Gupta, Hengyue Liu, Bir Bhanu

Auto-TLDR; Semi-supervised Spatio-Temporal Video Object Segmentation for Automatic Detection of Smoke in Videos during Forest Fire

Movement-Induced Priors for Deep Stereo

Yuxin Hou, Muhammad Kamran Janjua, Juho Kannala, Arno Solin

Auto-TLDR; Fusing Stereo Disparity Estimation with Movement-induced Prior Information

Variational Capsule Encoder

Harish Raviprakash, Syed Anwar, Ulas Bagci

Auto-TLDR; Bayesian Capsule Networks for Representation Learning in latent space

Semi-Supervised Generative Adversarial Networks with a Pair of Complementary Generators for Retinopathy Screening

Yingpeng Xie, Qiwei Wan, Hai Xie, En-Leng Tan, Yanwu Xu, Baiying Lei

Auto-TLDR; Generative Adversarial Networks for Retinopathy Diagnosis via Fundus Images

Developing Motion Code Embedding for Action Recognition in Videos

Maxat Alibayev, David Andrea Paulius, Yu Sun

Auto-TLDR; Motion Embedding via Motion Codes for Action Recognition

Robust Visual Object Tracking with Two-Stream Residual Convolutional Networks

Ning Zhang, Jingen Liu, Ke Wang, Dan Zeng, Tao Mei

Auto-TLDR; Two-Stream Residual Convolutional Network for Visual Tracking

Dual-MTGAN: Stochastic and Deterministic Motion Transfer for Image-To-Video Synthesis

Fu-En Yang, Jing-Cheng Chang, Yuan-Hao Lee, Yu-Chiang Frank Wang

Auto-TLDR; Dual Motion Transfer GAN for Convolutional Neural Networks

OmniFlowNet: A Perspective Neural Network Adaptation for Optical Flow Estimation in Omnidirectional Images

Charles-Olivier Artizzu, Haozhou Zhang, Guillaume Allibert, Cédric Demonceaux

Auto-TLDR; OmniFlowNet: A Convolutional Neural Network for Omnidirectional Optical Flow Estimation

A Grid-Based Representation for Human Action Recognition

Soufiane Lamghari, Guillaume-Alexandre Bilodeau, Nicolas Saunier

Auto-TLDR; GRAR: Grid-based Representation for Action Recognition in Videos

Identity-Aware Facial Expression Recognition in Compressed Video

Xiaofeng Liu, Linghao Jin, Xu Han, Jun Lu, Jonghye Woo, Jane You

Auto-TLDR; Exploring Facial Expression Representation in Compressed Video with Mutual Information Minimization

Shape Consistent 2D Keypoint Estimation under Domain Shift

Levi Vasconcelos, Massimiliano Mancini, Davide Boscaini, Barbara Caputo, Elisa Ricci

Auto-TLDR; Deep Adaptation for Keypoint Prediction under Domain Shift

AVAE: Adversarial Variational Auto Encoder

Antoine Plumerault, Hervé Le Borgne, Celine Hudelot

Auto-TLDR; Combining VAE and GAN for Realistic Image Generation

Phase Retrieval Using Conditional Generative Adversarial Networks

Tobias Uelwer, Alexander Oberstraß, Stefan Harmeling

Auto-TLDR; Conditional Generative Adversarial Networks for Phase Retrieval

Future Urban Scenes Generation through Vehicles Synthesis

Alessandro Simoni, Luca Bergamini, Andrea Palazzi, Simone Calderara, Rita Cucchiara

Auto-TLDR; Predicting the Future of an Urban Scene with a Novel View Synthesis Paradigm

Learnable Higher-Order Representation for Action Recognition

Auto-TLDR; Learnable Higher-Order Operations for Spatiotemporal Dynamics in Video Recognition

Gabriella: An Online System for Real-Time Activity Detection in Untrimmed Security Videos

Mamshad Nayeem Rizve, Ugur Demir, Praveen Tirupattur, Aayush Jung Rana, Kevin Duarte, Ishan Rajendrakumar Dave, Yogesh Rawat, Mubarak Shah

Auto-TLDR; Gabriella: A Real-Time Online System for Activity Detection in Surveillance Videos