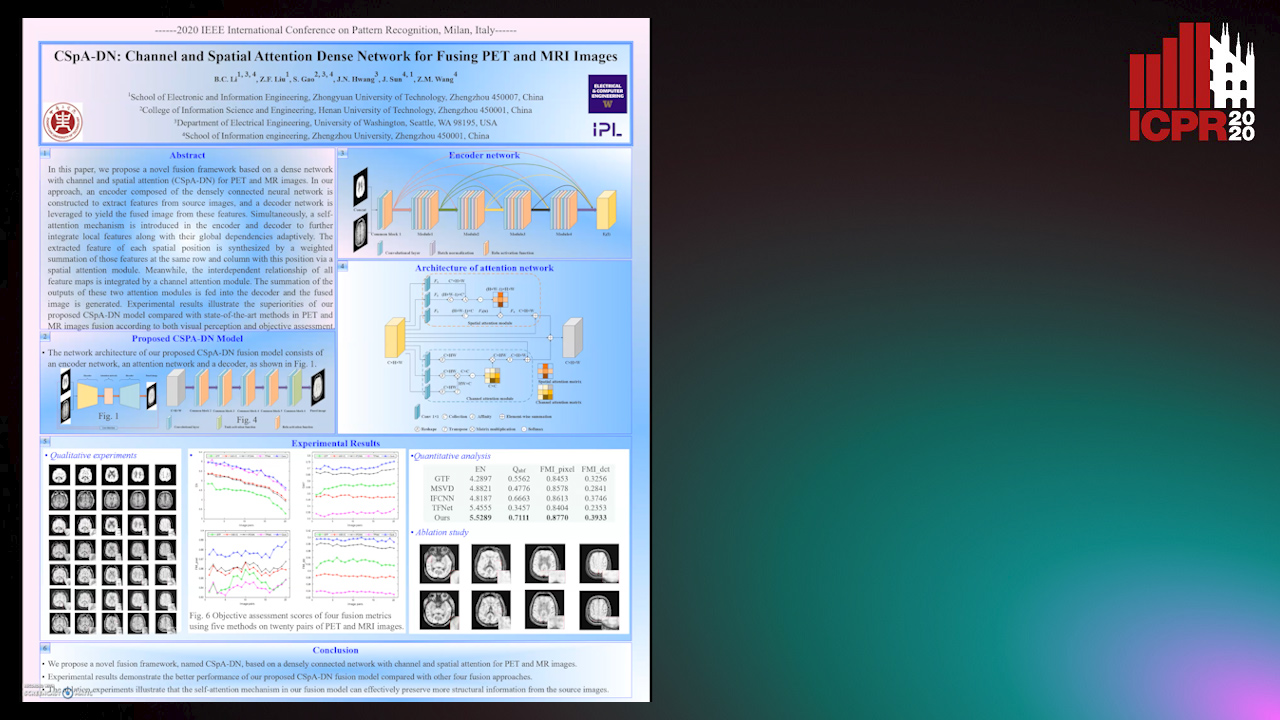

CSpA-DN: Channel and Spatial Attention Dense Network for Fusing PET and MRI Images

Bicao Li, Zhoufeng Liu, Shan Gao, Jenq-Neng Hwang, Jun Sun, Zongmin Wang

Auto-TLDR; CSpA-DN: Unsupervised Fusion of PET and MR Images with Channel and Spatial Attention

Similar papers

A Dual-Branch Network for Infrared and Visible Image Fusion

Auto-TLDR; Image Fusion Using Autoencoder for Deep Learning

3D Medical Multi-Modal Segmentation Network Guided by Multi-Source Correlation Constraint

Tongxue Zhou, Stéphane Canu, Pierre Vera, Su Ruan

Auto-TLDR; Multi-modality Segmentation with Correlation Constrained Network

Global-Local Attention Network for Semantic Segmentation in Aerial Images

Minglong Li, Lianlei Shan, Weiqiang Wang

Auto-TLDR; GLANet: Global-Local Attention Network for Semantic Segmentation

Progressive Scene Segmentation Based on Self-Attention Mechanism

Yunyi Pan, Yuan Gan, Kun Liu, Yan Zhang

Auto-TLDR; Two-Stage Semantic Scene Segmentation with Self-Attention

Accurate Cell Segmentation in Digital Pathology Images Via Attention Enforced Networks

Zeyi Yao, Kaiqi Li, Guanhong Zhang, Yiwen Luo, Xiaoguang Zhou, Muyi Sun

Auto-TLDR; AENet: Attention Enforced Network for Automatic Cell Segmentation

Do Not Treat Boundaries and Regions Differently: An Example on Heart Left Atrial Segmentation

Zhou Zhao, Elodie Puybareau, Nicolas Boutry, Thierry Geraud

Auto-TLDR; Attention Full Convolutional Network for Atrial Segmentation using ResNet-101 Architecture

BCAU-Net: A Novel Architecture with Binary Channel Attention Module for MRI Brain Segmentation

Yongpei Zhu, Zicong Zhou, Guojun Liao, Kehong Yuan

Auto-TLDR; BCAU-Net: Binary Channel Attention U-Net for MRI brain segmentation

RSAN: Residual Subtraction and Attention Network for Single Image Super-Resolution

Shuo Wei, Xin Sun, Haoran Zhao, Junyu Dong

Auto-TLDR; RSAN: Residual subtraction and attention network for super-resolution

Arbitrary Style Transfer with Parallel Self-Attention

Tiange Zhang, Ying Gao, Feng Gao, Lin Qi, Junyu Dong

Auto-TLDR; Self-Attention-Based Arbitrary Style Transfer Using Adaptive Instance Normalization

Multi-Scale Residual Pyramid Attention Network for Monocular Depth Estimation

Jing Liu, Xiaona Zhang, Zhaoxin Li, Tianlu Mao

Auto-TLDR; Multi-scale Residual Pyramid Attention Network for Monocular Depth Estimation

Segmentation of Intracranial Aneurysm Remnant in MRA Using Dual-Attention Atrous Net

Subhashis Banerjee, Ashis Kumar Dhara, Johan Wikström, Robin Strand

Auto-TLDR; Dual-Attention Atrous Net for Segmentation of Intracranial Aneurysm Remnant from MRA Images

CAggNet: Crossing Aggregation Network for Medical Image Segmentation

Auto-TLDR; Crossing Aggregation Network for Medical Image Segmentation

Efficient-Receptive Field Block with Group Spatial Attention Mechanism for Object Detection

Jiacheng Zhang, Zhicheng Zhao, Fei Su

Auto-TLDR; E-RFB: Efficient-Receptive Field Block for Deep Neural Network for Object Detection

Dynamic Guided Network for Monocular Depth Estimation

Xiaoxia Xing, Yinghao Cai, Yiping Yang, Dayong Wen

Auto-TLDR; DGNet: Dynamic Guidance Upsampling for Self-attention-Decoding for Monocular Depth Estimation

Multi-focus Image Fusion for Confocal Microscopy Using U-Net Regression Map

Md Maruf Hossain Shuvo, Yasmin M. Kassim, Filiz Bunyak, Olga V. Glinskii, Leike Xie, Vladislav V Glinsky, Virginia H. Huxley, Kannappan Palaniappan

Auto-TLDR; Independent Single Channel U-Net Fusion for Multi-focus Microscopy Images

A Multi-Focus Image Fusion Method Based on Fractal Dimension and Guided Filtering

Nikoo Dehghani, Ehsanollah Kabir

Auto-TLDR; Fractal Dimension-based Multi-focus Image Fusion with Guided Filtering

DA-RefineNet: Dual-Inputs Attention RefineNet for Whole Slide Image Segmentation

Ziqiang Li, Rentuo Tao, Qianrun Wu, Bin Li

Auto-TLDR; DA-RefineNet: A dual-inputs attention network for whole slide image segmentation

Adaptive Image Compression Using GAN Based Semantic-Perceptual Residual Compensation

Ruojing Wang, Zitang Sun, Sei-Ichiro Kamata, Weili Chen

Auto-TLDR; Adaptive Image Compression using GAN based Semantic-Perceptual Residual Compensation

Deep Residual Attention Network for Hyperspectral Image Reconstruction

Auto-TLDR; Deep Convolutional Neural Network for Hyperspectral Image Reconstruction from a Snapshot

Attention Stereo Matching Network

Doudou Zhang, Jing Cai, Yanbing Xue, Zan Gao, Hua Zhang

Auto-TLDR; ASM-Net: Attention Stereo Matching with Disparity Refinement

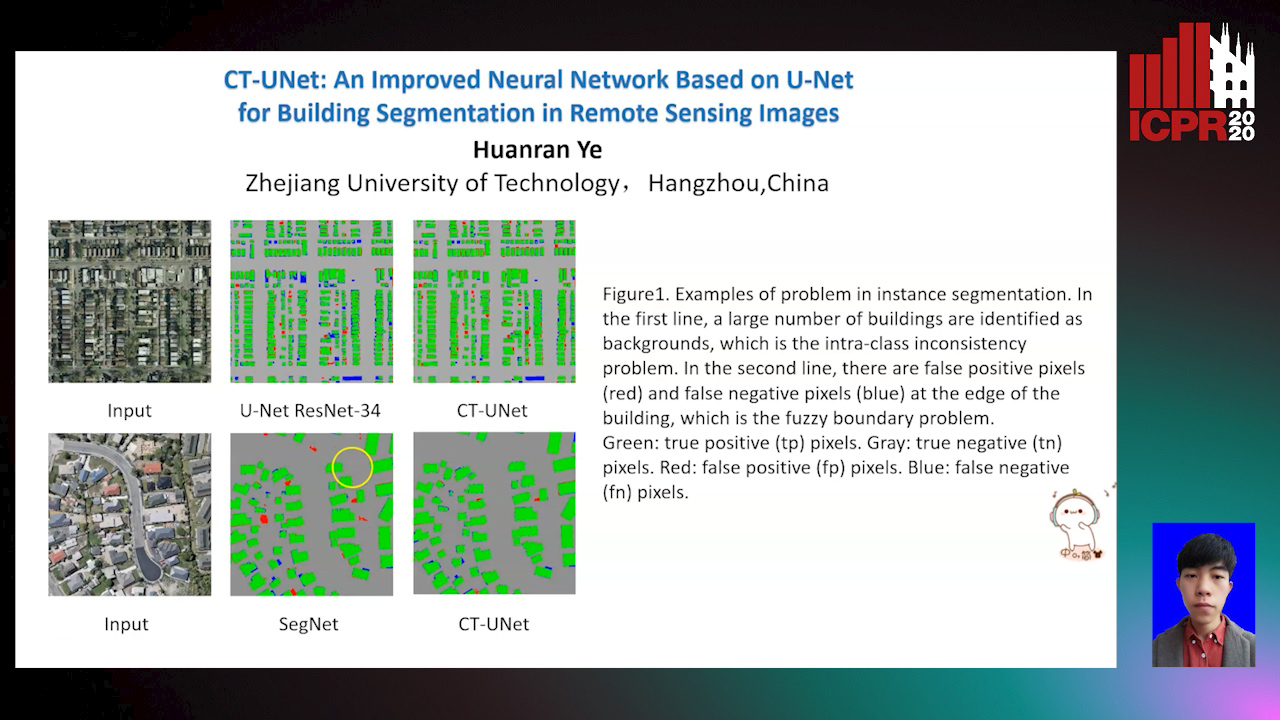

CT-UNet: An Improved Neural Network Based on U-Net for Building Segmentation in Remote Sensing Images

Huanran Ye, Sheng Liu, Kun Jin, Haohao Cheng

Auto-TLDR; Context-Transfer-UNet: A UNet-based Network for Building Segmentation in Remote Sensing Images

Selective Kernel and Motion-Emphasized Loss Based Attention-Guided Network for HDR Imaging of Dynamic Scenes

Yipeng Deng, Qin Liu, Takeshi Ikenaga

Auto-TLDR; SK-AHDRNet: A Deep Network with attention module and motion-emphasized loss function to produce ghost-free HDR images

Deep Fusion of RGB and NIR Paired Images Using Convolutional Neural Networks

Auto-TLDR; Deep Fusion of RGB and NIR paired images in low light condition using convolutional neural networks

Transitional Asymmetric Non-Local Neural Networks for Real-World Dirt Road Segmentation

Auto-TLDR; Transitional Asymmetric Non-Local Neural Networks for Semantic Segmentation on Dirt Roads

PSDNet: A Balanced Architecture of Accuracy and Parameters for Semantic Segmentation

Auto-TLDR; Pyramid Pooling Module with SE1Cblock and D2SUpsample Network (PSDNet)

MANet: Multimodal Attention Network Based Point-View Fusion for 3D Shape Recognition

Yaxin Zhao, Jichao Jiao, Ning Li

Auto-TLDR; Fusion Network for 3D Shape Recognition based on Multimodal Attention Mechanism

Aggregating Object Features Based on Attention Weights for Fine-Grained Image Retrieval

Hongli Lin, Yongqi Song, Zixuan Zeng, Weisheng Wang

Auto-TLDR; DSAW: Unsupervised Dual-selection for Fine-Grained Image Retrieval

Two-Level Attention-Based Fusion Learning for RGB-D Face Recognition

Hardik Uppal, Alireza Sepas-Moghaddam, Michael Greenspan, Ali Etemad

Auto-TLDR; Fused RGB-D Facial Recognition using Attention-Aware Feature Fusion

Hierarchically Aggregated Residual Transformation for Single Image Super Resolution

Auto-TLDR; HARTnet: Hierarchically Aggregated Residual Transformation for Multi-Scale Super-resolution

A Benchmark Dataset for Segmenting Liver, Vasculature and Lesions from Large-Scale Computed Tomography Data

Bo Wang, Zhengqing Xu, Wei Xu, Qingsen Yan, Liang Zhang, Zheng You

Auto-TLDR; The Biggest Treatment-Oriented Liver Cancer Dataset for Segmentation

Single Image Deblurring Using Bi-Attention Network

Auto-TLDR; Bi-Attention Neural Network for Single Image Deblurring

Automatic Semantic Segmentation of Structural Elements related to the Spinal Cord in the Lumbar Region by Using Convolutional Neural Networks

Jhon Jairo Sáenz Gamboa, Maria De La Iglesia-Vaya, Jon Ander Gómez

Auto-TLDR; Semantic Segmentation of Lumbar Spine Using Convolutional Neural Networks

DARN: Deep Attentive Refinement Network for Liver Tumor Segmentation from 3D CT Volume

Yao Zhang, Jiang Tian, Cheng Zhong, Yang Zhang, Zhongchao Shi, Zhiqiang He

Auto-TLDR; Deep Attentive Refinement Network for Liver Tumor Segmentation from 3D Computed Tomography Using Multi-Level Features

Free-Form Image Inpainting Via Contrastive Attention Network

Xin Ma, Xiaoqiang Zhou, Huaibo Huang, Zhenhua Chai, Xiaolin Wei, Ran He

Auto-TLDR; Self-supervised Siamese inference for image inpainting

Dual-Attention Guided Dropblock Module for Weakly Supervised Object Localization

Junhui Yin, Siqing Zhang, Dongliang Chang, Zhanyu Ma, Jun Guo

Auto-TLDR; Dual-Attention Guided Dropblock for Weakly Supervised Object Localization

Cross-Layer Information Refining Network for Single Image Super-Resolution

Hongyi Zhang, Wen Lu, Xiaopeng Sun

Auto-TLDR; Interlaced Spatial Attention Block for Single Image Super-Resolution

An Improved Bilinear Pooling Method for Image-Based Action Recognition

Auto-TLDR; An improved bilinear pooling method for image-based action recognition

Encoder-Decoder Based Convolutional Neural Networks with Multi-Scale-Aware Modules for Crowd Counting

Pongpisit Thanasutives, Ken-Ichi Fukui, Masayuki Numao, Boonserm Kijsirikul

Auto-TLDR; M-SFANet and M-SegNet for Crowd Counting Using Multi-Scale Fusion Networks

Deeply-Fused Attentive Network for Stereo Matching

Zuliu Yang, Xindong Ai, Weida Yang, Yong Zhao, Qifei Dai, Fuchi Li

Auto-TLDR; DF-Net: Deep Learning-based Network for Stereo Matching

BiLuNet: A Multi-Path Network for Semantic Segmentation on X-Ray Images

Van Luan Tran, Huei-Yung Lin, Rachel Liu, Chun-Han Tseng

Auto-TLDR; BiLuNet: Multi-path Convolutional Neural Network for Semantic Segmentation of Lumbar vertebrae, sacrum,

PCANet: Pyramid Context-Aware Network for Retinal Vessel Segmentation

Yi Zhang, Yixuan Chen, Kai Zhang

Auto-TLDR; PCANet: Adaptive Context-Aware Network for Automated Retinal Vessel Segmentation

Face Super-Resolution Network with Incremental Enhancement of Facial Parsing Information

Shuang Liu, Chengyi Xiong, Zhirong Gao

Auto-TLDR; Learning-based Face Super-Resolution with Incremental Boosting Facial Parsing Information

SIDGAN: Single Image Dehazing without Paired Supervision

Pan Wei, Xin Wang, Lei Wang, Ji Xiang, Zihan Wang

Auto-TLDR; DehazeGAN: An End-to-End Generative Adversarial Network for Image Dehazing

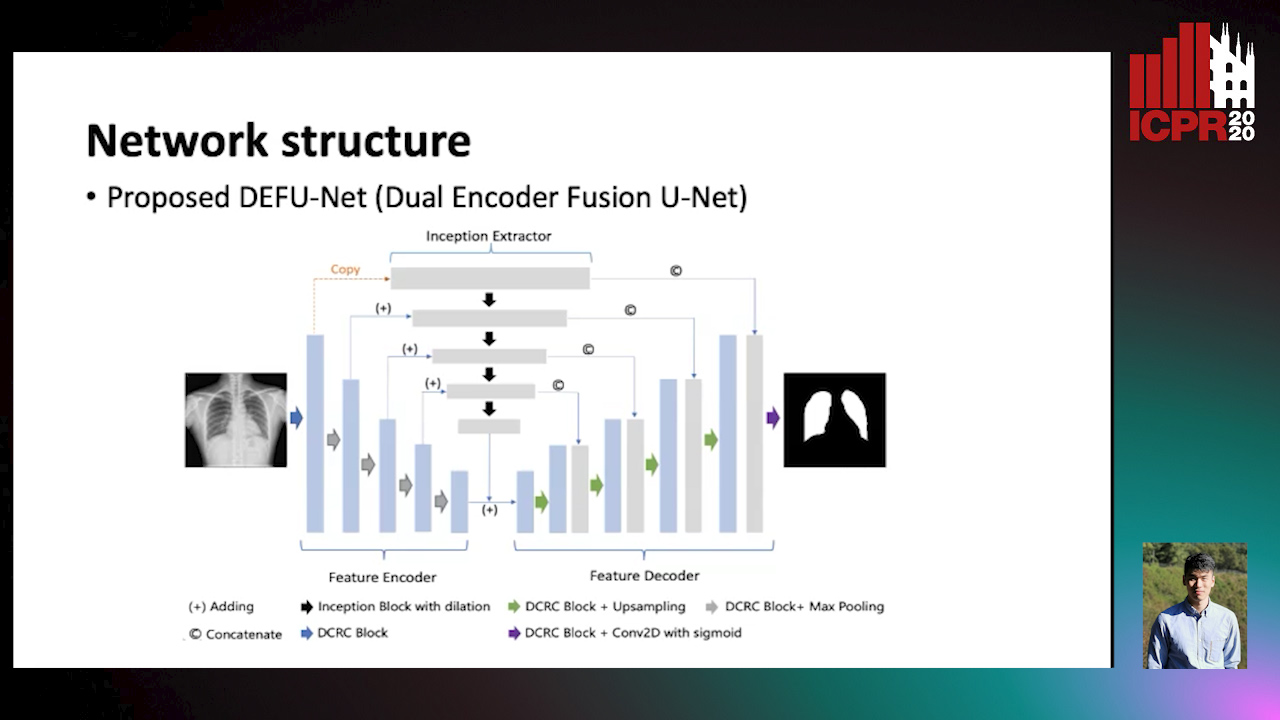

Dual Encoder Fusion U-Net (DEFU-Net) for Cross-manufacturer Chest X-Ray Segmentation

Zhang Lipei, Aozhi Liu, Jing Xiao

Auto-TLDR; Inception Convolutional Neural Network with Dilation for Chest X-Ray Segmentation

Multi-Scale and Attention Based ResNet for Heartbeat Classification

Haojie Zhang, Gongping Yang, Yuwen Huang, Feng Yuan, Yilong Yin

Auto-TLDR; A Multi-Scale and Attention based ResNet for ECG heartbeat classification in intra-patient and inter-patient paradigms

FOANet: A Focus of Attention Network with Application to Myocardium Segmentation

Zhou Zhao, Elodie Puybareau, Nicolas Boutry, Thierry Geraud

Auto-TLDR; FOANet: A Hybrid Loss Function for Myocardium Segmentation of Cardiac Magnetic Resonance Images

Boosting High-Level Vision with Joint Compression Artifacts Reduction and Super-Resolution

Xiaoyu Xiang, Qian Lin, Jan Allebach

Auto-TLDR; A Context-Aware Joint CAR and SR Neural Network for High-Resolution Text Recognition and Face Detection

Multi-Scale Cascading Network with Compact Feature Learning for RGB-Infrared Person Re-Identification

Can Zhang, Hong Liu, Wei Guo, Mang Ye

Auto-TLDR; Multi-Scale Part-Aware Cascading for RGB-Infrared Person Re-identification
