Arbitrary Style Transfer with Parallel Self-Attention

Tiange Zhang,

Ying Gao,

Feng Gao,

Lin Qi,

Junyu Dong

Auto-TLDR; Self-Attention-Based Arbitrary Style Transfer Using Adaptive Instance Normalization

Similar papers

ACRM: Attention Cascade R-CNN with Mix-NMS for Metallic Surface Defect Detection

Junting Fang, Xiaoyang Tan, Yuhui Wang

Auto-TLDR; Attention Cascade R-CNN with Mix Non-Maximum Suppression for Robust Metal Defect Detection

Attention Stereo Matching Network

Doudou Zhang, Jing Cai, Yanbing Xue, Zan Gao, Hua Zhang

Auto-TLDR; ASM-Net: Attention Stereo Matching with Disparity Refinement

Attentional Wavelet Network for Traditional Chinese Painting Transfer

Rui Wang, Huaibo Huang, Aihua Zheng, Ran He

Auto-TLDR; Attentional Wavelet Network for Photo to Chinese Painting Transfer

Efficient-Receptive Field Block with Group Spatial Attention Mechanism for Object Detection

Jiacheng Zhang, Zhicheng Zhao, Fei Su

Auto-TLDR; E-RFB: Efficient-Receptive Field Block for Deep Neural Network for Object Detection

Free-Form Image Inpainting Via Contrastive Attention Network

Xin Ma, Xiaoqiang Zhou, Huaibo Huang, Zhenhua Chai, Xiaolin Wei, Ran He

Auto-TLDR; Self-supervised Siamese inference for image inpainting

Attention Pyramid Module for Scene Recognition

Zhinan Qiao, Xiaohui Yuan, Chengyuan Zhuang, Abolfazl Meyarian

Auto-TLDR; Attention Pyramid Module for Multi-Scale Scene Recognition

An Improved Bilinear Pooling Method for Image-Based Action Recognition

Auto-TLDR; An improved bilinear pooling method for image-based action recognition

BCAU-Net: A Novel Architecture with Binary Channel Attention Module for MRI Brain Segmentation

Yongpei Zhu, Zicong Zhou, Guojun Liao, Kehong Yuan

Auto-TLDR; BCAU-Net: Binary Channel Attention U-Net for MRI brain segmentation

Single Image Deblurring Using Bi-Attention Network

Auto-TLDR; Bi-Attention Neural Network for Single Image Deblurring

Attention As Activation

Yimian Dai, Stefan Oehmcke, Fabian Gieseke, Yiquan Wu, Kobus Barnard

Auto-TLDR; Attentional Activation Units for Convolutional Networks

RSAN: Residual Subtraction and Attention Network for Single Image Super-Resolution

Shuo Wei, Xin Sun, Haoran Zhao, Junyu Dong

Auto-TLDR; RSAN: Residual subtraction and attention network for super-resolution

PSDNet: A Balanced Architecture of Accuracy and Parameters for Semantic Segmentation

Auto-TLDR; Pyramid Pooling Module with SE1Cblock and D2SUpsample Network (PSDNet)

Abstract Slides Poster Similar

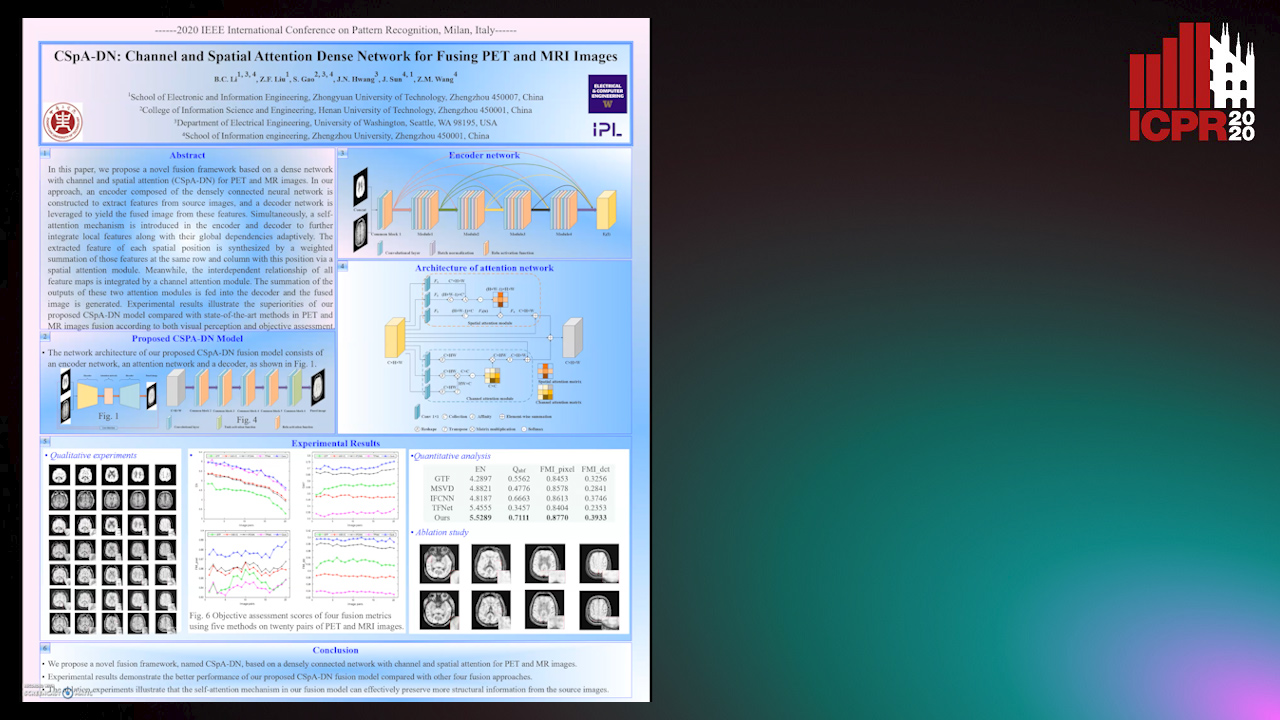

CSpA-DN: Channel and Spatial Attention Dense Network for Fusing PET and MRI Images

Bicao Li, Zhoufeng Liu, Shan Gao, Jenq-Neng Hwang, Jun Sun, Zongmin Wang

Auto-TLDR; CSpA-DN: Unsupervised Fusion of PET and MR Images with Channel and Spatial Attention

Dual-Attention Guided Dropblock Module for Weakly Supervised Object Localization

Junhui Yin, Siqing Zhang, Dongliang Chang, Zhanyu Ma, Jun Guo

Auto-TLDR; Dual-Attention Guided Dropblock for Weakly Supervised Object Localization

Cross-Layer Information Refining Network for Single Image Super-Resolution

Hongyi Zhang, Wen Lu, Xiaopeng Sun

Auto-TLDR; Interlaced Spatial Attention Block for Single Image Super-Resolution

Progressive Scene Segmentation Based on Self-Attention Mechanism

Yunyi Pan, Yuan Gan, Kun Liu, Yan Zhang

Auto-TLDR; Two-Stage Semantic Scene Segmentation with Self-Attention

Second-Order Attention Guided Convolutional Activations for Visual Recognition

Shannan Chen, Qian Wang, Qiule Sun, Bin Liu, Jianxin Zhang, Qiang Zhang

Auto-TLDR; Second-order Attention Guided Network for Convolutional Neural Networks for Visual Recognition

Self and Channel Attention Network for Person Re-Identification

Asad Munir, Niki Martinel, Christian Micheloni

Auto-TLDR; SCAN: Self and Channel Attention Network for Person Re-identification

Multi-Scale Residual Pyramid Attention Network for Monocular Depth Estimation

Jing Liu, Xiaona Zhang, Zhaoxin Li, Tianlu Mao

Auto-TLDR; Multi-scale Residual Pyramid Attention Network for Monocular Depth Estimation

SCA Net: Sparse Channel Attention Module for Action Recognition

Hang Song, Yonghong Song, Yuanlin Zhang

Auto-TLDR; SCA Net: Efficient Group Convolution for Sparse Channel Attention

Makeup Style Transfer on Low-Quality Images with Weighted Multi-Scale Attention

Daniel Organisciak, Edmond S. L. Ho, Hubert P. H. Shum

Auto-TLDR; Facial Makeup Style Transfer for Low-Resolution Images Using Multi-Scale Spatial Attention

Collaborative Human Machine Attention Module for Character Recognition

Chetan Ralekar, Tapan Gandhi, Santanu Chaudhury

Auto-TLDR; A Collaborative Human-Machine Attention Module for Deep Neural Networks

Selective Kernel and Motion-Emphasized Loss Based Attention-Guided Network for HDR Imaging of Dynamic Scenes

Yipeng Deng, Qin Liu, Takeshi Ikenaga

Auto-TLDR; SK-AHDRNet: A Deep Network with attention module and motion-emphasized loss function to produce ghost-free HDR images

Interactive Style Space of Deep Features and Style Innovation

Auto-TLDR; Interactive Style Space of Convolutional Neural Network Features

Global-Local Attention Network for Semantic Segmentation in Aerial Images

Minglong Li, Lianlei Shan, Weiqiang Wang

Auto-TLDR; GLANet: Global-Local Attention Network for Semantic Segmentation

Two-Level Attention-Based Fusion Learning for RGB-D Face Recognition

Hardik Uppal, Alireza Sepas-Moghaddam, Michael Greenspan, Ali Etemad

Auto-TLDR; Fused RGB-D Facial Recognition using Attention-Aware Feature Fusion

Accurate Cell Segmentation in Digital Pathology Images Via Attention Enforced Networks

Zeyi Yao, Kaiqi Li, Guanhong Zhang, Yiwen Luo, Xiaoguang Zhou, Muyi Sun

Auto-TLDR; AENet: Attention Enforced Network for Automatic Cell Segmentation

Controllable Face Aging

Auto-TLDR; A controllable face aging method via attribute disentanglement generative adversarial network

Dynamic Guided Network for Monocular Depth Estimation

Xiaoxia Xing, Yinghao Cai, Yiping Yang, Dayong Wen

Auto-TLDR; DGNet: Dynamic Guidance Upsampling for Self-attention-Decoding for Monocular Depth Estimation

Region-Based Non-Local Operation for Video Classification

Auto-TLDR; Regional-based Non-Local Operation for Deep Self-Attention in Convolutional Neural Networks

Cycle-Consistent Adversarial Networks and Fast Adaptive Bi-Dimensional Empirical Mode Decomposition for Style Transfer

Elissavet Batziou, Petros Alvanitopoulos, Konstantinos Ioannidis, Ioannis Patras, Stefanos Vrochidis, Ioannis Kompatsiaris

Auto-TLDR; FABEMD: Fast and Adaptive Bidimensional Empirical Mode Decomposition for Style Transfer on Images

TAAN: Task-Aware Attention Network for Few-Shot Classification

Auto-TLDR; TAAN: Task-Aware Attention Network for Few-Shot Classification

Deeply-Fused Attentive Network for Stereo Matching

Zuliu Yang, Xindong Ai, Weida Yang, Yong Zhao, Qifei Dai, Fuchi Li

Auto-TLDR; DF-Net: Deep Learning-based Network for Stereo Matching

VGG-Embedded Adaptive Layer-Normalized Crowd Counting Net with Scale-Shuffling Modules

Dewen Guo, Jie Feng, Bingfeng Zhou

Auto-TLDR; VadaLN: VGG-embedded Adaptive Layer Normalization for Crowd Counting

Global Image Sentiment Transfer

Jie An, Tianlang Chen, Songyang Zhang, Jiebo Luo

Auto-TLDR; Image Sentiment Transfer Using DenseNet121 Architecture

Context-Aware Residual Module for Image Classification

Auto-TLDR; Context-Aware Residual Module for Image Classification

HANet: Hybrid Attention-Aware Network for Crowd Counting

Xinxing Su, Yuchen Yuan, Xiangbo Su, Zhikang Zou, Shilei Wen, Pan Zhou

Auto-TLDR; HANet: Hybrid Attention-Aware Network for Crowd Counting with Adaptive Compensation Loss

Rethinking Domain Generalization Baselines

Francesco Cappio Borlino, Antonio D'Innocente, Tatiana Tommasi

Auto-TLDR; Style Transfer Data Augmentation for Domain Generalization

Cascade Attention Guided Residue Learning GAN for Cross-Modal Translation

Bin Duan, Wei Wang, Hao Tang, Hugo Latapie, Yan Yan

Auto-TLDR; Cascade Attention-Guided Residue GAN for Cross-modal Audio-Visual Learning

Ordinal Depth Classification Using Region-Based Self-Attention

Minh Hieu Phan, Son Lam Phung, Abdesselam Bouzerdoum

Auto-TLDR; Region-based Self-Attention for Multi-scale Depth Estimation from a Single 2D Image

Multi-Domain Image-To-Image Translation with Adaptive Inference Graph

The Phuc Nguyen, Stéphane Lathuiliere, Elisa Ricci

Auto-TLDR; Adaptive Graph Structure for Multi-Domain Image-to-Image Translation

Identity-Preserved Face Beauty Transformation with Conditional Generative Adversarial Networks

Auto-TLDR; Identity-preserved face beauty transformation using conditional GANs

Transitional Asymmetric Non-Local Neural Networks for Real-World Dirt Road Segmentation

Auto-TLDR; Transitional Asymmetric Non-Local Neural Networks for Semantic Segmentation on Dirt Roads

VITON-GT: An Image-Based Virtual Try-On Model with Geometric Transformations

Matteo Fincato, Federico Landi, Marcella Cornia, Fabio Cesari, Rita Cucchiara

Auto-TLDR; VITON-GT: An Image-based Virtual Try-on Architecture for Fashion Catalogs

Multi-Scale and Attention Based ResNet for Heartbeat Classification

Haojie Zhang, Gongping Yang, Yuwen Huang, Feng Yuan, Yilong Yin

Auto-TLDR; A Multi-Scale and Attention based ResNet for ECG heartbeat classification in intra-patient and inter-patient paradigms

Deep Residual Attention Network for Hyperspectral Image Reconstruction

Auto-TLDR; Deep Convolutional Neural Network for Hyperspectral Image Reconstruction from a Snapshot

Saliency Prediction on Omnidirectional Images with Brain-Like Shallow Neural Network

Zhu Dandan, Chen Yongqing, Min Xiongkuo, Zhao Defang, Zhu Yucheng, Zhou Qiangqiang, Yang Xiaokang, Tian Han

Auto-TLDR; A Brain-like Neural Network for Saliency Prediction of Head Fixations on Omnidirectional Images

ReADS: A Rectified Attentional Double Supervised Network for Scene Text Recognition

Qi Song, Qianyi Jiang, Xiaolin Wei, Nan Li, Rui Zhang

Auto-TLDR; ReADS: Rectified Attentional Double Supervised Network for General Scene Text Recognition
